hypertables.md 339 KB

Timescaledb - Hypertables

Pages: 103

chunks_detailed_size()

URL: llms-txt#chunks_detailed_size()

Contents:

- Samples

- Required arguments

- Returns

Get information about the disk space used by the chunks belonging to a hypertable, returning size information for each chunk table, any indexes on the chunk, any toast tables, and the total size associated with the chunk. All sizes are reported in bytes.

If the function is executed on a distributed hypertable, it returns disk space usage information as a separate row per node. The access node is not included since it doesn't have any local chunk data.

Additional metadata associated with a chunk can be accessed

via the timescaledb_information.chunks view.

Required arguments

| Name | Type | Description |

|---|---|---|

hypertable |

REGCLASS | Name of the hypertable |

| Column | Type | Description |

|---|---|---|

| chunk_schema | TEXT | Schema name of the chunk |

| chunk_name | TEXT | Name of the chunk |

| table_bytes | BIGINT | Disk space used by the chunk table |

| index_bytes | BIGINT | Disk space used by indexes |

| toast_bytes | BIGINT | Disk space of toast tables |

| total_bytes | BIGINT | Total disk space used by the chunk, including all indexes and TOAST data |

| node_name | TEXT | Node for which size is reported, applicable only to distributed hypertables |

If executed on a relation that is not a hypertable, the function

returns NULL.

===== PAGE: https://docs.tigerdata.com/api/hypertable/create_hypertable_old/ =====

Examples:

Example 1 (sql):

SELECT * FROM chunks_detailed_size('dist_table')

ORDER BY chunk_name, node_name;

chunk_schema | chunk_name | table_bytes | index_bytes | toast_bytes | total_bytes | node_name

-----------------------+-----------------------+-------------+-------------+-------------+-------------+-----------------------

_timescaledb_internal | _dist_hyper_1_1_chunk | 8192 | 32768 | 0 | 40960 | data_node_1

_timescaledb_internal | _dist_hyper_1_2_chunk | 8192 | 32768 | 0 | 40960 | data_node_2

_timescaledb_internal | _dist_hyper_1_3_chunk | 8192 | 32768 | 0 | 40960 | data_node_3

add_columnstore_policy()

URL: llms-txt#add_columnstore_policy()

Contents:

- Samples

- Arguments

Create a [job][job] that automatically moves chunks in a hypertable to the columnstore after a specific time interval.

You enable the columnstore a hypertable or continuous aggregate before you create a columnstore policy.

You do this by calling CREATE TABLE for hypertables and ALTER MATERIALIZED VIEW for continuous aggregates. When

columnstore is enabled, [bloom filters][bloom-filters] are enabled by default, and every new chunk has a bloom index.

If you converted chunks to columnstore using TimescaleDB v2.19.3 or below, to enable bloom filters on that data you have

to convert those chunks to the rowstore, then convert them back to the columnstore.

Bloom indexes are not retrofitted, meaning that the existing chunks need to be fully recompressed to have the bloom indexes present. Please check out the PR description for more in-depth explanations of how bloom filters in TimescaleDB work.

To view the policies that you set or the policies that already exist, see [informational views][informational-views], to remove a policy, see [remove_columnstore_policy][remove_columnstore_policy].

A columnstore policy is applied on a per-chunk basis. If you remove an existing policy and then add a new one, the new policy applies only to the chunks that have not yet been converted to columnstore. The existing chunks in the columnstore remain unchanged. This means that chunks with different columnstore settings can co-exist in the same hypertable.

Since TimescaleDB v2.18.0

To create a columnstore job:

- Enable columnstore

Create a [hypertable][hypertables-section] for your time-series data using [CREATE TABLE][hypertable-create-table].

For [efficient queries][secondary-indexes] on data in the columnstore, remember to segmentby the column you will

use most often to filter your data. For example:

- [Use

CREATE TABLEfor a hypertable][hypertable-create-table]

If you are self-hosting TimescaleDB v2.19.3 and below, create a [Postgres relational table][pg-create-table], then convert it using [create_hypertable][create_hypertable]. You then enable hypercore with a call to [ALTER TABLE][alter_table_hypercore].

- [Use

ALTER MATERIALIZED VIEWfor a continuous aggregate][compression_continuous-aggregate]

- Add a policy to move chunks to the columnstore at a specific time interval

60 days after the data was added to the table:

- 3 months prior to the moment you run the query:

With an integer-based time column:

Older than eight weeks:

Control the time your policy runs:

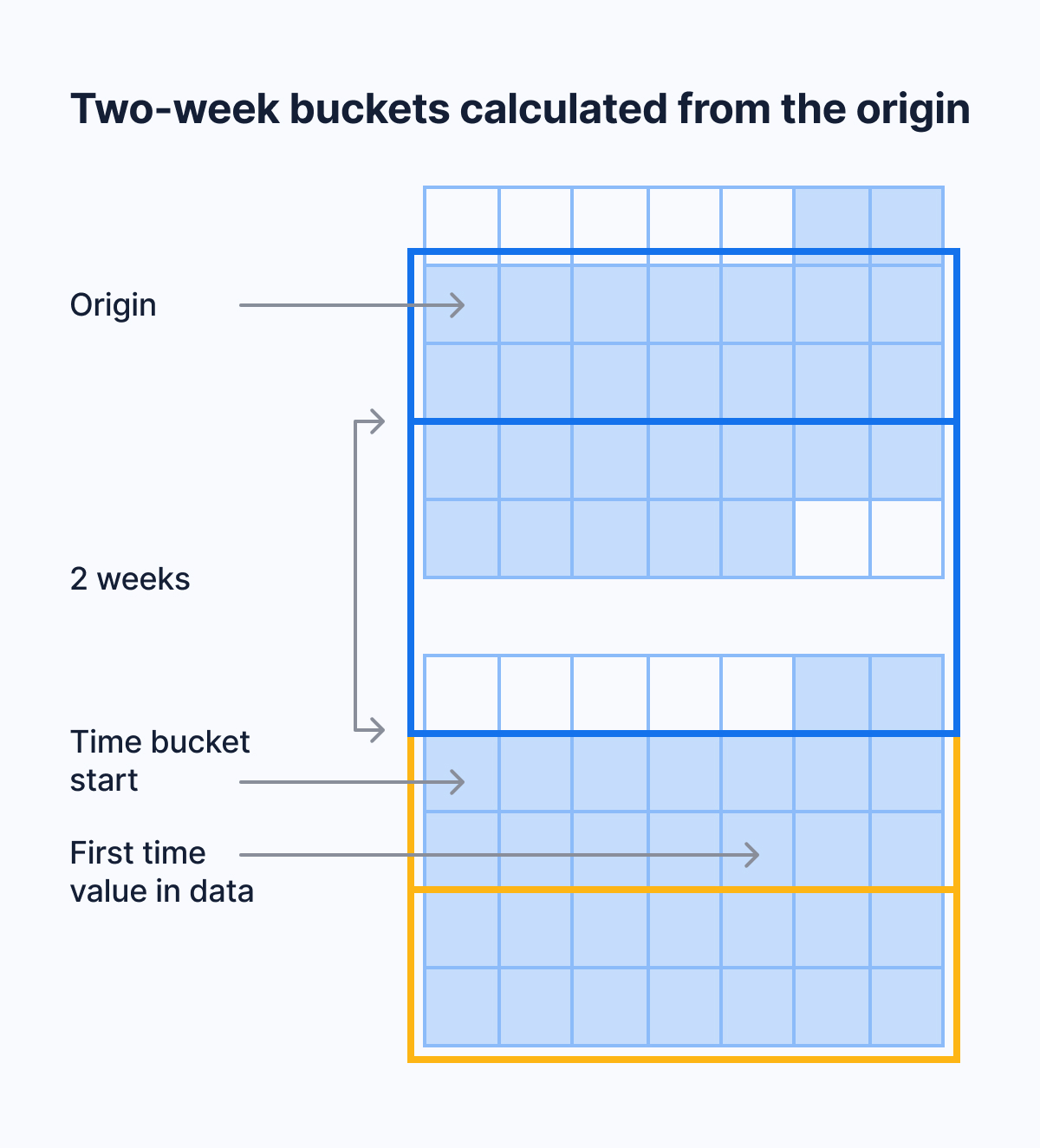

When you use a policy with a fixed schedule, TimescaleDB uses the initial_start time to compute the

next start time. When TimescaleDB finishes executing a policy, it picks the next available time on the

schedule,

skipping any candidate start times that have already passed.

When you set the next_start time, it only changes the start time of the next immediate execution. It does not

change the computation of the next scheduled execution after that next execution. To change the schedule so a

policy starts at a specific time, you need to set `initial_start`. To change the next immediate

execution, you need to set `next_start`. For example, to modify a policy to execute on a fixed schedule 15 minutes past the hour, and every

hour, you need to set both `initial_start` and `next_start` using `alter_job`:

- View the policies that you set or the policies that already exist

See [timescaledb_information.jobs][informational-views].

Calls to add_columnstore_policy require either after or created_before, but cannot have both.

| Name | Type | Default | Required | Description |

|-------------------------------|--|------------------------------------------------------------------------------------------------------------------------------|----------|----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| hypertable |REGCLASS| - | ✔ | Name of the hypertable or continuous aggregate to run this [job][job] on. |

| after |INTERVAL or INTEGER| - | ✖ | Add chunks containing data older than now - {after}::interval to the columnstore.

Use an object type that matchs the time column type in hypertable:

TIMESTAMP,TIMESTAMPTZ, orDATE: use anINTERVALtype.- Integer-based timestamps : set an integer type using the [integer_now_func][set_integer_now_func].

after is mutually exclusive with created_before. |

| created_before |INTERVAL| NULL | ✖ | Add chunks with a creation time of now() - created_before to the columnstore. created_before is - Not supported for continuous aggregates.

- Mutually exclusive with

after.

schedule_interval |INTERVAL| 12 hours when [chunk_time_interval][chunk_time_interval] >= 1 day for hypertable. Otherwise chunk_time_interval / 2. | ✖ | Set the interval between the finish time of the last execution of this policy and the next start. |

| initial_start |TIMESTAMPTZ| The interval from the finish time of the last execution to the [next_start][next-start]. | ✖ | Set the time this job is first run. This is also the time that next_start is calculated from. |

| next_start |TIMESTAMPTZ| -| ✖ | Set the start time of the next immediate execution. It does not change the computation of the next scheduled time after the next execution. |

| timezone |TEXT| UTC. However, daylight savings time(DST) changes may shift this alignment. | ✖ | Set to a valid time zone to mitigate DST shifting. If initial_start is set, subsequent executions of this policy are aligned on initial_start. |

| if_not_exists |BOOLEAN| false | ✖ | Set to true so this job fails with a warning rather than an error if a columnstore policy already exists on hypertable |

===== PAGE: https://docs.tigerdata.com/api/hypercore/hypertable_columnstore_settings/ =====

Examples:

Example 1 (sql):

CREATE TABLE crypto_ticks (

"time" TIMESTAMPTZ,

symbol TEXT,

price DOUBLE PRECISION,

day_volume NUMERIC

) WITH (

tsdb.hypertable,

tsdb.partition_column='time',

tsdb.segmentby='symbol',

tsdb.orderby='time DESC'

);

Example 2 (sql):

ALTER MATERIALIZED VIEW assets_candlestick_daily set (

timescaledb.enable_columnstore = true,

timescaledb.segmentby = 'symbol' );

Example 3 (unknown):

* 3 months prior to the moment you run the query:

Example 4 (unknown):

* With an integer-based time column:

Create distributed hypertables

URL: llms-txt#create-distributed-hypertables

Contents:

- Creating a distributed hypertable

[Multi-node support is sunsetted][multi-node-deprecation].

TimescaleDB v2.13 is the last release that includes multi-node support for Postgres versions 13, 14, and 15.

If you have a [multi-node environment][multi-node], you can create a distributed hypertable across your data nodes. First create a standard Postgres table, and then convert it into a distributed hypertable.

You need to set up your multi-node cluster before creating a distributed hypertable. To set up multi-node, see the multi-node section.

Creating a distributed hypertable

On the access node of your multi-node cluster, create a standard [Postgres table][postgres-createtable]:

Convert the table to a distributed hypertable. Specify the name of the table you want to convert, the column that holds its time values, and a space-partitioning parameter.

===== PAGE: https://docs.tigerdata.com/self-hosted/distributed-hypertables/foreign-keys/ =====

Examples:

Example 1 (sql):

CREATE TABLE conditions (

time TIMESTAMPTZ NOT NULL,

location TEXT NOT NULL,

temperature DOUBLE PRECISION NULL,

humidity DOUBLE PRECISION NULL

);

Example 2 (sql):

SELECT create_distributed_hypertable('conditions', 'time', 'location');

show_chunks()

URL: llms-txt#show_chunks()

Contents:

- Samples

- Required arguments

- Optional arguments

Get list of chunks associated with a hypertable.

Function accepts the following required and optional arguments. These arguments

have the same semantics as the drop_chunks [function][drop_chunks].

Get list of all chunks associated with a table:

Get all chunks from hypertable conditions older than 3 months:

Get all chunks from hypertable conditions created before 3 months:

Get all chunks from hypertable conditions created in the last 1 month:

Get all chunks from hypertable conditions before 2017:

Required arguments

|Name|Type|Description|

|-|-|-|

|relation|REGCLASS|Hypertable or continuous aggregate from which to select chunks.|

Optional arguments

|Name|Type|Description|

|-|-|-|

|older_than|ANY|Specification of cut-off point where any chunks older than this timestamp should be shown.|

|newer_than|ANY|Specification of cut-off point where any chunks newer than this timestamp should be shown.|

|created_before|ANY|Specification of cut-off point where any chunks created before this timestamp should be shown.|

|created_after|ANY|Specification of cut-off point where any chunks created after this timestamp should be shown.|

The older_than and newer_than parameters can be specified in two ways:

interval type: The cut-off point is computed as

now() - older_thanand similarlynow() - newer_than. An error is returned if an INTERVAL is supplied and the time column is not one of a TIMESTAMP, TIMESTAMPTZ, or DATE.timestamp, date, or integer type: The cut-off point is explicitly given as a TIMESTAMP / TIMESTAMPTZ / DATE or as a SMALLINT / INT / BIGINT. The choice of timestamp or integer must follow the type of the hypertable's time column.

The created_before and created_after parameters can be specified in two ways:

interval type: The cut-off point is computed as

now() - created_beforeand similarlynow() - created_after. This uses the chunk creation time for the filtering.timestamp, date, or integer type: The cut-off point is explicitly given as a

TIMESTAMP/TIMESTAMPTZ/DATEor as aSMALLINT/INT/BIGINT. The choice of integer value must follow the type of the hypertable's partitioning column. Otherwise the chunk creation time is used for the filtering.

When both older_than and newer_than arguments are used, the

function returns the intersection of the resulting two ranges. For

example, specifying newer_than => 4 months and older_than => 3

months shows all chunks between 3 and 4 months old.

Similarly, specifying newer_than => '2017-01-01' and older_than

=> '2017-02-01' shows all chunks between '2017-01-01' and

'2017-02-01'. Specifying parameters that do not result in an

overlapping intersection between two ranges results in an error.

When both created_before and created_after arguments are used, the

function returns the intersection of the resulting two ranges. For

example, specifying created_after=> 4 monthsandcreated_before=> 3

months shows all chunks created between 3 and 4 months from now.

Similarly, specifying created_after=> '2017-01-01'andcreated_before

=> '2017-02-01' shows all chunks created between '2017-01-01' and

'2017-02-01'. Specifying parameters that do not result in an

overlapping intersection between two ranges results in an error.

The created_before/created_after parameters cannot be used together with

older_than/newer_than.

===== PAGE: https://docs.tigerdata.com/api/hypertable/merge_chunks/ =====

Examples:

Example 1 (sql):

SELECT show_chunks('conditions');

Example 2 (sql):

SELECT show_chunks('conditions', older_than => INTERVAL '3 months');

Example 3 (sql):

SELECT show_chunks('conditions', created_before => INTERVAL '3 months');

Example 4 (sql):

SELECT show_chunks('conditions', created_after => INTERVAL '1 month');

Optimize time-series data in hypertables

URL: llms-txt#optimize-time-series-data-in-hypertables

Contents:

- Prerequisites

- Create a hypertable

- Speed up data ingestion

- Optimize cooling data in the columnstore

- Alter a hypertable

- Add a column to a hypertable

- Rename a hypertable

- Drop a hypertable

Hypertables are designed for real-time analytics, they are Postgres tables that automatically partition your data by

time. Typically, you partition hypertables on columns that hold time values.

[Best practice is to use timestamptz][timestamps-best-practice] column type. However, you can also partition on

date, integer, timestamp and [UUIDv7][uuidv7_functions] types.

To follow the steps on this page:

- Create a target [Tiger Cloud service][create-service] with the Real-time analytics capability.

You need [your connection details][connection-info]. This procedure also works for [self-hosted TimescaleDB][enable-timescaledb].

Create a hypertable

Create a [hypertable][hypertables-section] for your time-series data using [CREATE TABLE][hypertable-create-table].

For [efficient queries][secondary-indexes] on data in the columnstore, remember to segmentby the column you will use

most often to filter your data:

If you are self-hosting TimescaleDB v2.19.3 and below, create a [Postgres relational table][pg-create-table], then convert it using [create_hypertable][create_hypertable]. You then enable hypercore with a call to [ALTER TABLE][alter_table_hypercore].

To convert an existing table with data in it, call create_hypertable on that table with

[migrate_data to true][api-create-hypertable-arguments]. However, if you have a lot of data, this may take a long time.

Speed up data ingestion

When you set timescaledb.enable_direct_compress_copy your data gets compressed in memory during ingestion with COPY statements.

By writing the compressed batches immediately in the columnstore, the IO footprint is significantly lower.

Also, the [columnstore policy][add_columnstore_policy] you set is less important, INSERT already produces compressed chunks.

Please note that this feature is a tech preview and not production-ready. Using this feature could lead to regressed query performance and/or storage ratio, if the ingested batches are not correctly ordered or are of too high cardinality.

To enable in-memory data compression during ingestion:

Important facts

- High cardinality use cases do not produce good batches and lead to degreaded query performance.

- The columnstore is optimized to store 1000 records per batch, which is the optimal format for ingestion per segment by.

- WAL records are written for the compressed batches rather than the individual tuples.

- Currently only

COPYis support,INSERTwill eventually follow. - Best results are achieved for batch ingestion with 1000 records or more, upper boundary is 10.000 records.

- Continous Aggregates are not supported at the moment.

Optimize cooling data in the columnstore

As the data cools and becomes more suited for analytics, [add a columnstore policy][add_columnstore_policy] so your data is automatically converted to the columnstore after a specific time interval. This columnar format enables fast scanning and aggregation, optimizing performance for analytical workloads while also saving significant storage space. In the columnstore conversion, hypertable chunks are compressed by up to 98%, and organized for efficient, large-scale queries. This columnar format enables fast scanning and aggregation, optimizing performance for analytical workloads.

To optimize your data, add a columnstore policy:

You can also manually [convert chunks][convert_to_columnstore] in a hypertable to the columnstore.

Alter a hypertable

You can alter a hypertable, for example to add a column, by using the Postgres

[ALTER TABLE][postgres-altertable] command. This works for both regular and

distributed hypertables.

Add a column to a hypertable

You add a column to a hypertable using the ALTER TABLE command. In this

example, the hypertable is named conditions and the new column is named

humidity:

If the column you are adding has the default value set to NULL, or has no

default value, then adding a column is relatively fast. If you set the default

to a non-null value, it takes longer, because it needs to fill in this value for

all existing rows of all existing chunks.

Rename a hypertable

You can change the name of a hypertable using the ALTER TABLE command. In this

example, the hypertable is called conditions, and is being changed to the new

name, weather:

Drop a hypertable using a standard Postgres [DROP TABLE][postgres-droptable]

command:

All data chunks belonging to the hypertable are deleted.

===== PAGE: https://docs.tigerdata.com/use-timescale/hypertables/improve-query-performance/ =====

Examples:

Example 1 (sql):

CREATE TABLE conditions (

time TIMESTAMPTZ NOT NULL,

location TEXT NOT NULL,

device TEXT NOT NULL,

temperature DOUBLE PRECISION NULL,

humidity DOUBLE PRECISION NULL

) WITH (

tsdb.hypertable,

tsdb.partition_column='time',

tsdb.segmentby = 'device',

tsdb.orderby = 'time DESC'

);

Example 2 (sql):

SET timescaledb.enable_direct_compress_copy=on;

Example 3 (sql):

CALL add_columnstore_policy('conditions', after => INTERVAL '1d');

Example 4 (sql):

ALTER TABLE conditions

ADD COLUMN humidity DOUBLE PRECISION NULL;

add_reorder_policy()

URL: llms-txt#add_reorder_policy()

Contents:

- Samples

- Required arguments

- Optional arguments

- Returns

Create a policy to reorder the rows of a hypertable's chunks on a specific index. The policy reorders the rows for all chunks except the two most recent ones, because these are still getting writes. By default, the policy runs every 24 hours. To change the schedule, call [alter_job][alter_job] and adjust schedule_interval.

You can have only one reorder policy on each hypertable.

For manual reordering of individual chunks, see [reorder_chunk][reorder_chunk].

When a chunk's rows have been reordered by a policy, they are not reordered by subsequent runs of the same policy. If you write significant amounts of data into older chunks that have already been reordered, re-run [reorder_chunk][reorder_chunk] on them. If you have changed a lot of older chunks, it is better to drop and recreate the policy.

Creates a policy to reorder chunks by the existing (device_id, time) index every 24 hours.

This applies to all chunks except the two most recent ones.

Required arguments

|Name|Type| Description |

|-|-|--------------------------------------------------------------|

|hypertable|REGCLASS| Hypertable to create the policy for |

|index_name|TEXT| Existing hypertable index by which to order the rows on disk |

Optional arguments

|Name|Type| Description |

|-|-|-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

|if_not_exists|BOOLEAN| Set to true to avoid an error if the reorder_policy already exists. A notice is issued instead. Defaults to false. |

|initial_start|TIMESTAMPTZ| Controls when the policy first runs and how its future run schedule is calculated.

- If omitted or set to

NULL(default):- The first run is scheduled at

now()+schedule_interval(defaults to 24 hours). - The next run is scheduled at one full

schedule_intervalafter the end of the previous run.

- The first run is scheduled at

- If set:

- The first run is at the specified time.

- The next run is scheduled as

initial_start+schedule_intervalregardless of when the previous run ends.

timezone|TEXT| A valid time zone. If initial_start is also specified, subsequent runs of the reorder policy are aligned on its initial start. However, daylight savings time (DST) changes might shift this alignment. Set to a valid time zone if this is an issue you want to mitigate. If omitted, UTC bucketing is performed. Defaults to NULL. |

|Column|Type|Description|

|-|-|-|

|job_id|INTEGER|TimescaleDB background job ID created to implement this policy|

===== PAGE: https://docs.tigerdata.com/api/hypertable/hypertable_detailed_size/ =====

Examples:

Example 1 (sql):

SELECT add_reorder_policy('conditions', 'conditions_device_id_time_idx');

split_chunk()

URL: llms-txt#split_chunk()

Contents:

- Samples

- Required arguments

- Returns

Split a large chunk at a specific point in time. If you do not specify the timestamp to split at, chunk

is split equally.

Split a chunk at a specific time:

Split a chunk in two:

For example, If the chunk duration is, 24 hours, the following command splits chunk_1 into

two chunks of 12 hours each.

Required arguments

| Name | Type | Required | Description |

|---|---|---|---|

chunk |

REGCLASS | ✔ | Name of the chunk to split. |

split_at |

TIMESTAMPTZ |

✖ | Timestamp to split the chunk at. |

This function returns void.

===== PAGE: https://docs.tigerdata.com/api/hypertable/attach_chunk/ =====

Examples:

Example 1 (sql):

CALL split_chunk('chunk_1', split_at => '2025-03-01 00:00');

Example 2 (sql):

CALL split_chunk('chunk_1');

timescaledb_information.chunk_columnstore_settings

URL: llms-txt#timescaledb_information.chunk_columnstore_settings

Contents:

- Samples

- Returns

Retrieve the compression settings for each chunk in the columnstore.

Since TimescaleDB v2.18.0

To retrieve information about settings:

Show settings for all chunks in the columnstore:

Find all chunk columnstore settings for a specific hypertable:

| Name | Type | Description |

|--|--|--|--|--|

|hypertable|REGCLASS| The name of the hypertable in the columnstore. |

|chunk|REGCLASS| The name of the chunk in the hypertable. |

|segmentby|TEXT| The list of columns used to segment the hypertable. |

|orderby|TEXT| The list of columns used to order the data in the hypertable, along with the ordering and NULL ordering information. |

|index| TEXT | The sparse index details. |

===== PAGE: https://docs.tigerdata.com/api/hypercore/add_columnstore_policy/ =====

Examples:

Example 1 (sql):

SELECT * FROM timescaledb_information.chunk_columnstore_settings

Example 2 (sql):

hypertable | chunk | segmentby | orderby

------------+-------+-----------+---------

measurements | _timescaledb_internal._hyper_1_1_chunk| | "time" DESC

Example 3 (sql):

SELECT *

FROM timescaledb_information.chunk_columnstore_settings

WHERE hypertable::TEXT LIKE 'metrics';

Example 4 (sql):

hypertable | chunk | segmentby | orderby

------------+-------+-----------+---------

metrics | _timescaledb_internal._hyper_2_3_chunk | metric_id | "time"

Alter and drop distributed hypertables

URL: llms-txt#alter-and-drop-distributed-hypertables

[Multi-node support is sunsetted][multi-node-deprecation].

TimescaleDB v2.13 is the last release that includes multi-node support for Postgres versions 13, 14, and 15.

You can alter and drop distributed hypertables in the same way as standard hypertables. To learn more, see:

- [Altering hypertables][alter]

- [Dropping hypertables][drop]

When you alter a distributed hypertable, or set privileges on it, the commands are automatically applied across all data nodes. For more information, see the section on [multi-node administration][multinode-admin].

===== PAGE: https://docs.tigerdata.com/self-hosted/distributed-hypertables/create-distributed-hypertables/ =====

Can't create unique index on hypertable, or can't create hypertable with unique index

URL: llms-txt#can't-create-unique-index-on-hypertable,-or-can't-create-hypertable-with-unique-index

You might get a unique index and partitioning column error in 2 situations:

- When creating a primary key or unique index on a hypertable

- When creating a hypertable from a table that already has a unique index or primary key

For more information on how to fix this problem, see the [section on creating unique indexes on hypertables][unique-indexes].

===== PAGE: https://docs.tigerdata.com/_troubleshooting/explain/ =====

merge_chunks()

URL: llms-txt#merge_chunks()

Contents:

- Since2180

- Samples

- Arguments

Merge two or more chunks into one.

The partition boundaries for the new chunk is the union of all partitions of the merged chunks. The new chunk retains the name, constraints, and triggers of the first chunk in the partition order.

You can only merge chunks that have directly adjacent partitions. It is not possible to merge chunks that have another chunk, or an empty range between them in any of the partitioning dimensions.

Chunk merging has the following limitations. You cannot:

- Merge chunks with tiered data

- Read or write from the chunks while they are being merged

Refer to the installation documentation for detailed setup instructions.

- Merge more than two chunks:

You can merge either two chunks, or an arbitrary number of chunks specified as an array of chunk identifiers.

When you call merge_chunks, you must specify either chunk1 and chunk2, or chunks. You cannot use both

arguments.

| Name | Type | Default | Required | Description |

|--------------------|-------------|--|--|------------------------------------------------|

| chunk1, chunk2 | REGCLASS | - | ✖ | The two chunk to merge in partition order |

| chunks | REGCLASS[] |- | ✖ | The array of chunks to merge in partition order |

===== PAGE: https://docs.tigerdata.com/api/hypertable/add_dimension/ =====

Examples:

Example 1 (sql):

CALL merge_chunks('_timescaledb_internal._hyper_1_1_chunk', '_timescaledb_internal._hyper_1_2_chunk');

Example 2 (sql):

CALL merge_chunks('{_timescaledb_internal._hyper_1_1_chunk, _timescaledb_internal._hyper_1_2_chunk, _timescaledb_internal._hyper_1_3_chunk}');

disable_chunk_skipping()

URL: llms-txt#disable_chunk_skipping()

Contents:

- Samples

- Required arguments

- Optional arguments

- Returns

Disable range tracking for a specific column in a hypertable in the columnstore.

In this sample, you convert the conditions table to a hypertable with

partitioning on the time column. You then specify and enable additional

columns to track ranges for. You then disable range tracking:

Best practice is to enable range tracking on columns which are correlated to the

partitioning column. In other words, enable tracking on secondary columns that are

referenced in the WHERE clauses in your queries.

Use this API to disable range tracking on columns when the query patterns don't

use this secondary column anymore.

Required arguments

|Name|Type|Description|

|-|-|-|

|hypertable|REGCLASS|Hypertable that the column belongs to|

|column_name|TEXT|Column to disable tracking range statistics for|

Optional arguments

|Name|Type|Description|

|-|-|-|

|if_not_exists|BOOLEAN|Set to true so that a notice is sent when ranges are not being tracked for a column. By default, an error is thrown|

|Column|Type|Description|

|-|-|-|

|hypertable_id|INTEGER|ID of the hypertable in TimescaleDB.|

|column_name|TEXT|Name of the column range tracking is disabled for|

|disabled|BOOLEAN|Returns true when tracking is disabled. false when if_not_exists is true and the entry was

not removed|

To disable_chunk_skipping(), you must have first called [enable_chunk_skipping][enable_chunk_skipping]

and enabled range tracking on a column in the hypertable.

===== PAGE: https://docs.tigerdata.com/api/hypertable/remove_reorder_policy/ =====

Examples:

Example 1 (sql):

SELECT create_hypertable('conditions', 'time');

SELECT enable_chunk_skipping('conditions', 'device_id');

SELECT disable_chunk_skipping('conditions', 'device_id');

Optimize your data for real-time analytics

URL: llms-txt#optimize-your-data-for-real-time-analytics

Contents:

- Prerequisites

- Optimize your data with columnstore policies

- Reference

[Hypercore][hypercore] is the hybrid row-columnar storage engine in TimescaleDB used by hypertables. Traditional databases force a trade-off between fast inserts (row-based storage) and efficient analytics (columnar storage). Hypercore eliminates this trade-off, allowing real-time analytics without sacrificing transactional capabilities.

Hypercore dynamically stores data in the most efficient format for its lifecycle:

- Row-based storage for recent data: the most recent chunk (and possibly more) is always stored in the rowstore, ensuring fast inserts, updates, and low-latency single record queries. Additionally, row-based storage is used as a writethrough for inserts and updates to columnar storage.

- Columnar storage for analytical performance: chunks are automatically compressed into the columnstore, optimizing storage efficiency and accelerating analytical queries.

Unlike traditional columnar databases, hypercore allows data to be inserted or modified at any stage, making it a flexible solution for both high-ingest transactional workloads and real-time analytics—within a single database.

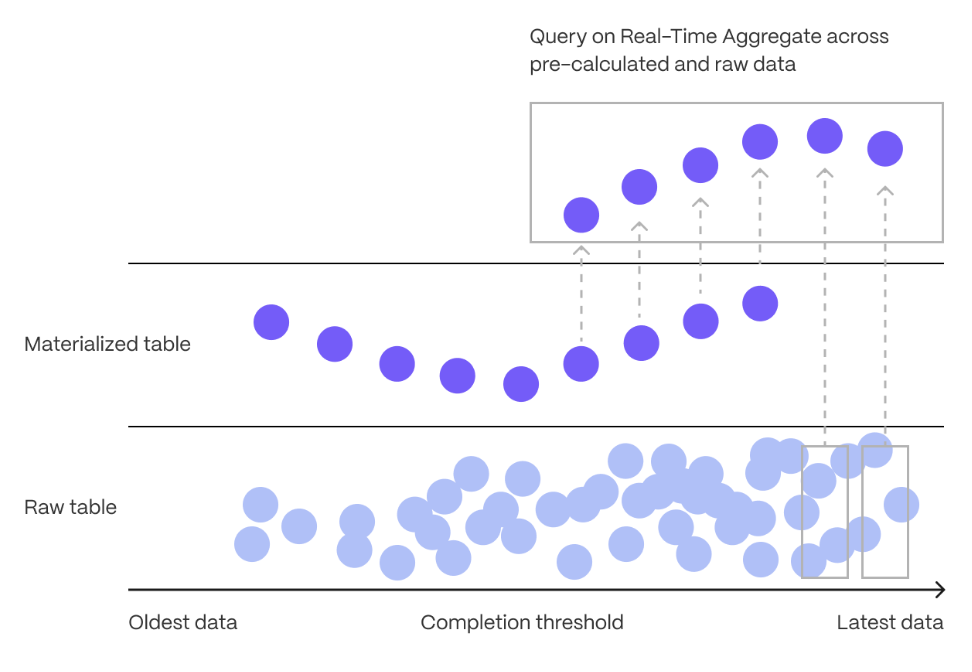

When you convert chunks from the rowstore to the columnstore, multiple records are grouped into a single row. The columns of this row hold an array-like structure that stores all the data. For example, data in the following rowstore chunk:

| Timestamp | Device ID | Device Type | CPU | Disk IO |

|---|---|---|---|---|

| 12:00:01 | A | SSD | 70.11 | 13.4 |

| 12:00:01 | B | HDD | 69.70 | 20.5 |

| 12:00:02 | A | SSD | 70.12 | 13.2 |

| 12:00:02 | B | HDD | 69.69 | 23.4 |

| 12:00:03 | A | SSD | 70.14 | 13.0 |

| 12:00:03 | B | HDD | 69.70 | 25.2 |

Is converted and compressed into arrays in a row in the columnstore:

|Timestamp|Device ID|Device Type|CPU|Disk IO| |-|-|-|-|-| |[12:00:01, 12:00:01, 12:00:02, 12:00:02, 12:00:03, 12:00:03]|[A, B, A, B, A, B]|[SSD, HDD, SSD, HDD, SSD, HDD]|[70.11, 69.70, 70.12, 69.69, 70.14, 69.70]|[13.4, 20.5, 13.2, 23.4, 13.0, 25.2]|

Because a single row takes up less disk space, you can reduce your chunk size by up to 98%, and can also speed up your queries. This saves on storage costs, and keeps your queries operating at lightning speed.

For an in-depth explanation of how hypertables and hypercore work, see the [Data model][data-model].

This page shows you how to get the best results when you set a policy to automatically convert chunks in a hypertable from the rowstore to the columnstore.

To follow the steps on this page:

- Create a target [Tiger Cloud service][create-service] with real-time analytics enabled.

You need your [connection details][connection-info].

The code samples in this page use the crypto_sample.zip data from [this key features tutorial][ingest-data].

Optimize your data with columnstore policies

The compression ratio and query performance of data in the columnstore is dependent on the order and structure of your

data. Rows that change over a dimension should be close to each other. With time-series data, you orderby the time

dimension. For example, Timestamp:

| Timestamp | Device ID | Device Type | CPU | Disk IO |

|---|---|---|---|---|

| 12:00:01 | A | SSD | 70.11 | 13.4 |

This ensures that records are compressed and accessed in the same order. However, you would always have to access the data using the time dimension, then filter all the rows using other criteria. To make your queries more efficient, you segment your data based on the following:

- The way you want to access it. For example, to rapidly access data about a

single device, you

segmentbytheDevice IDcolumn. This enables you to run much faster analytical queries on data in the columnstore. - The compression rate you want to achieve. The [lower the cardinality][cardinality-blog] of the

segmentbycolumn, the better compression results you get.

When TimescaleDB converts a chunk to the columnstore, it automatically creates a different schema for your

data. It also creates and uses custom indexes to incorporate the segmentby and orderby parameters when

you write to and read from the columnstore.

To set up your hypercore automation:

- Connect to your Tiger Cloud service

In [Tiger Cloud Console][services-portal] open an [SQL editor][in-console-editors]. You can also connect to your service using [psql][connect-using-psql].

- Enable columnstore on a hypertable

Create a [hypertable][hypertables-section] for your time-series data using [CREATE TABLE][hypertable-create-table].

For [efficient queries][secondary-indexes] on data in the columnstore, remember to segmentby the column you will

use most often to filter your data. For example:

- [Use

CREATE TABLEfor a hypertable][hypertable-create-table]

If you are self-hosting TimescaleDB v2.19.3 and below, create a [Postgres relational table][pg-create-table], then convert it using [create_hypertable][create_hypertable]. You then enable hypercore with a call to [ALTER TABLE][alter_table_hypercore].

[Use

ALTER MATERIALIZED VIEWfor a continuous aggregate][compression_continuous-aggregate]Before you say

huh, a continuous aggregate is a specialized hypertable.

- Add a policy to convert chunks to the columnstore at a specific time interval

Create a [columnstore_policy][add_columnstore_policy] that automatically converts chunks in a hypertable to the columnstore at a specific time interval. For example, convert yesterday's crypto trading data to the columnstore:

TimescaleDB is optimized for fast updates on compressed data in the columnstore. To modify data in the columnstore, use standard SQL.

Check the columnstore policy

View your data space saving:

When you convert data to the columnstore, as well as being optimized for analytics, it is compressed by more than

90%. This helps you save on storage costs and keeps your queries operating at lightning speed. To see the amount of space

saved:

You see something like:

| before | after |

|---------|--------|

| 194 MB | 24 MB |

- View the policies that you set or the policies that already exist:

See [timescaledb_information.jobs][informational-views].

- Pause a columnstore policy

See [alter_job][alter_job].

- Restart a columnstore policy

See [alter_job][alter_job].

- Remove a columnstore policy

See [remove_columnstore_policy][remove_columnstore_policy].

- Disable columnstore

If your table has chunks in the columnstore, you have to [convert the chunks back to the rowstore][convert_to_rowstore] before you disable the columnstore.

See [alter_table_hypercore][alter_table_hypercore].

For integers, timestamps, and other integer-like types, data is compressed using [delta encoding][delta], [delta-of-delta][delta-delta], [simple-8b][simple-8b], and [run-length encoding][run-length]. For columns with few repeated values, [XOR-based][xor] and [dictionary compression][dictionary] is used. For all other types, [dictionary compression][dictionary] is used.

===== PAGE: https://docs.tigerdata.com/use-timescale/hypercore/compression-methods/ =====

Examples:

Example 1 (sql):

CREATE TABLE crypto_ticks (

"time" TIMESTAMPTZ,

symbol TEXT,

price DOUBLE PRECISION,

day_volume NUMERIC

) WITH (

tsdb.hypertable,

tsdb.partition_column='time',

tsdb.segmentby='symbol',

tsdb.orderby='time DESC'

);

Example 2 (sql):

ALTER MATERIALIZED VIEW assets_candlestick_daily set (

timescaledb.enable_columnstore = true,

timescaledb.segmentby = 'symbol' );

Example 3 (unknown):

TimescaleDB is optimized for fast updates on compressed data in the columnstore. To modify data in the

columnstore, use standard SQL.

1. **Check the columnstore policy**

1. View your data space saving:

When you convert data to the columnstore, as well as being optimized for analytics, it is compressed by more than

90%. This helps you save on storage costs and keeps your queries operating at lightning speed. To see the amount of space

saved:

Example 4 (unknown):

You see something like:

| before | after |

|---------|--------|

| 194 MB | 24 MB |

1. View the policies that you set or the policies that already exist:

Triggers

URL: llms-txt#triggers

Contents:

- Create a trigger

- Creating a trigger

TimescaleDB supports the full range of Postgres triggers. Creating, altering, or dropping triggers on a hypertable propagates the changes to all of the underlying chunks.

This example creates a new table called error_conditions with the same schema

as conditions, but that only stores records which are considered errors. An

error, in this case, is when an application sends a temperature or humidity

reading with a value that is greater than or equal to 1000.

Creating a trigger

Create a function that inserts erroneous data into the

error_conditionstable:Create a trigger that calls this function whenever a new row is inserted into the hypertable:

All data is inserted into the

conditionstable, but rows that contain errors are also added to theerror_conditionstable.

TimescaleDB supports the full range of triggers, including BEFORE INSERT,

AFTER INSERT, BEFORE UPDATE, AFTER UPDATE, BEFORE DELETE, and

AFTER DELETE. For more information, see the

[Postgres docs][postgres-createtrigger].

===== PAGE: https://docs.tigerdata.com/use-timescale/schema-management/foreign-data-wrappers/ =====

Examples:

Example 1 (sql):

CREATE OR REPLACE FUNCTION record_error()

RETURNS trigger AS $record_error$

BEGIN

IF NEW.temperature >= 1000 OR NEW.humidity >= 1000 THEN

INSERT INTO error_conditions

VALUES(NEW.time, NEW.location, NEW.temperature, NEW.humidity);

END IF;

RETURN NEW;

END;

$record_error$ LANGUAGE plpgsql;

Example 2 (sql):

CREATE TRIGGER record_error

BEFORE INSERT ON conditions

FOR EACH ROW

EXECUTE PROCEDURE record_error();

copy_chunk()

URL: llms-txt#copy_chunk()

Contents:

- Required arguments

- Required settings

- Failures

- Sample usage

[Multi-node support is sunsetted][multi-node-deprecation].

TimescaleDB v2.13 is the last release that includes multi-node support for Postgres versions 13, 14, and 15.

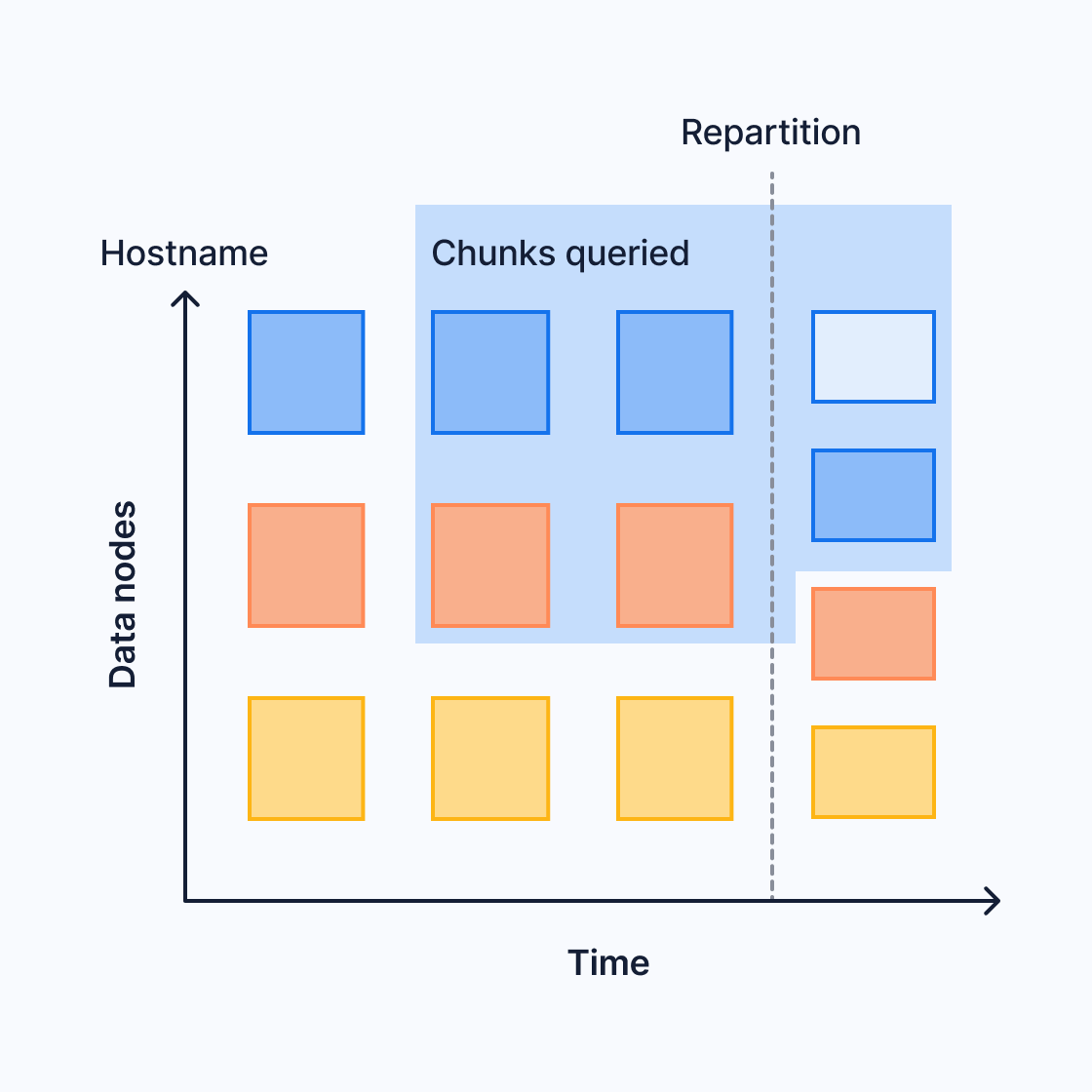

TimescaleDB allows you to copy existing chunks to a new location within a multi-node environment. This allows each data node to work both as a primary for some chunks and backup for others. If a data node fails, its chunks already exist on other nodes that can take over the responsibility of serving them.

Experimental features could have bugs. They might not be backwards compatible, and could be removed in future releases. Use these features at your own risk, and do not use any experimental features in production.

Required arguments

|Name|Type|Description|

|-|-|-|

|chunk|REGCLASS|Name of chunk to be copied|

|source_node|NAME|Data node where the chunk currently resides|

|destination_node|NAME|Data node where the chunk is to be copied|

When copying a chunk, the destination data node needs a way to authenticate with the data node that holds the source chunk. It is currently recommended to use a [password file][password-config] on the data node.

The wal_level setting must also be set to logical or higher on

data nodes from which chunks are copied. If you are copying or moving

many chunks in parallel, you can increase max_wal_senders and

max_replication_slots.

When a copy operation fails, it sometimes creates objects and metadata on

the destination data node. It can also hold a replication slot open on the

source data node. To clean up these objects and metadata, use

[cleanup_copy_chunk_operation][cleanup_copy_chunk].

===== PAGE: https://docs.tigerdata.com/api/distributed-hypertables/alter_data_node/ =====

hypertable_detailed_size()

URL: llms-txt#hypertable_detailed_size()

Contents:

- Samples

- Required arguments

- Returns

Get detailed information about disk space used by a hypertable or continuous aggregate, returning size information for the table itself, any indexes on the table, any toast tables, and the total size of all. All sizes are reported in bytes. If the function is executed on a distributed hypertable, it returns size information as a separate row per node, including the access node.

When a continuous aggregate name is provided, the function transparently looks up the backing hypertable and returns its statistics instead.

For more information about using hypertables, including chunk size partitioning, see the [hypertable section][hypertable-docs].

Get the size information for a hypertable.

The access node is listed without a user-given node name. Normally, the access node holds no data, but still maintains, for example, index information that occupies a small amount of disk space.

Required arguments

| Name | Type | Description |

|---|---|---|

hypertable |

REGCLASS | Hypertable or continuous aggregate to show detailed size of. |

|Column|Type|Description|

|-|-|-|

|table_bytes|BIGINT|Disk space used by main_table (like pg_relation_size(main_table))|

|index_bytes|BIGINT|Disk space used by indexes|

|toast_bytes|BIGINT|Disk space of toast tables|

|total_bytes|BIGINT|Total disk space used by the specified table, including all indexes and TOAST data|

|node_name|TEXT|For distributed hypertables, this is the user-given name of the node for which the size is reported. NULL is returned for the access node and non-distributed hypertables.|

If executed on a relation that is not a hypertable, the function

returns NULL.

===== PAGE: https://docs.tigerdata.com/api/continuous-aggregates/show_policies/ =====

Examples:

Example 1 (sql):

-- disttable is a distributed hypertable --

SELECT * FROM hypertable_detailed_size('disttable') ORDER BY node_name;

table_bytes | index_bytes | toast_bytes | total_bytes | node_name

-------------+-------------+-------------+-------------+-------------

16384 | 40960 | 0 | 57344 | data_node_1

8192 | 24576 | 0 | 32768 | data_node_2

0 | 8192 | 0 | 8192 |

Limitations

URL: llms-txt#limitations

Contents:

- Hypertable limitations

While TimescaleDB generally offers capabilities that go beyond what Postgres offers, there are some limitations to using hypertables.

Hypertable limitations

- Time dimensions (columns) used for partitioning cannot have NULL values.

- Unique indexes must include all columns that are partitioning dimensions.

UPDATEstatements that move values between partitions (chunks) are not supported. This includes upserts (INSERT ... ON CONFLICT UPDATE).- Foreign key constraints from a hypertable referencing another hypertable are not supported.

===== PAGE: https://docs.tigerdata.com/use-timescale/tigerlake/ =====

remove_retention_policy()

URL: llms-txt#remove_retention_policy()

Contents:

- Samples

- Required arguments

- Optional arguments

Remove a policy to drop chunks of a particular hypertable.

Removes the existing data retention policy for the conditions table.

Required arguments

| Name | Type | Description |

|---|---|---|

relation |

REGCLASS | Name of the hypertable or continuous aggregate from which to remove the policy |

Optional arguments

| Name | Type | Description |

|---|---|---|

if_exists |

BOOLEAN | Set to true to avoid throwing an error if the policy does not exist. Defaults to false. |

===== PAGE: https://docs.tigerdata.com/api/hypertable/create_table/ =====

Examples:

Example 1 (sql):

SELECT remove_retention_policy('conditions');

show_tablespaces()

URL: llms-txt#show_tablespaces()

Contents:

- Samples

- Required arguments

Show the tablespaces attached to a hypertable.

Required arguments

| Name | Type | Description |

|---|---|---|

hypertable |

REGCLASS | Hypertable to show attached tablespaces for. |

===== PAGE: https://docs.tigerdata.com/api/hypertable/disable_chunk_skipping/ =====

Examples:

Example 1 (sql):

SELECT * FROM show_tablespaces('conditions');

show_tablespaces

------------------

disk1

disk2

Hypertables and chunks

URL: llms-txt#hypertables-and-chunks

Contents:

- The hypertable workflow

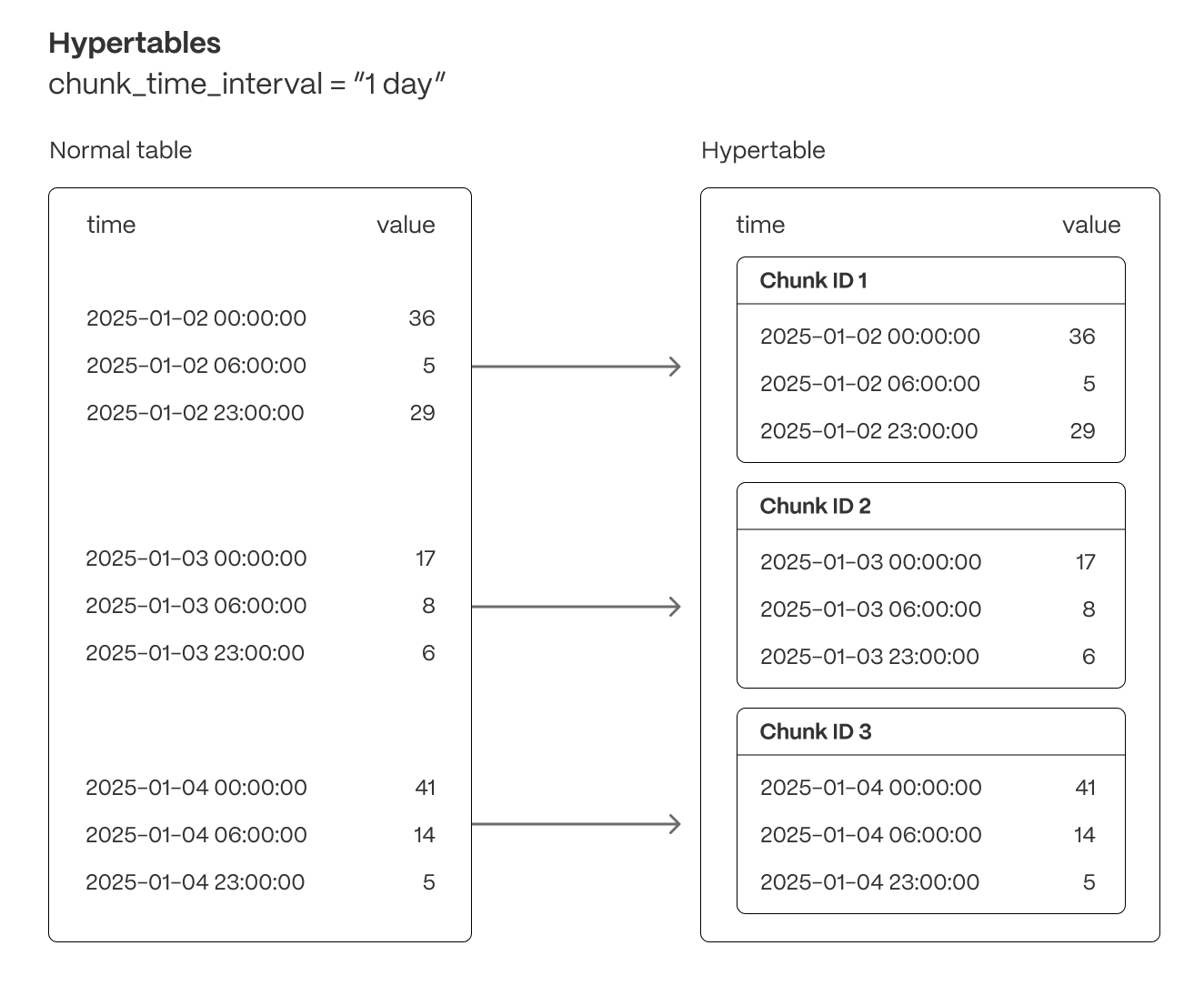

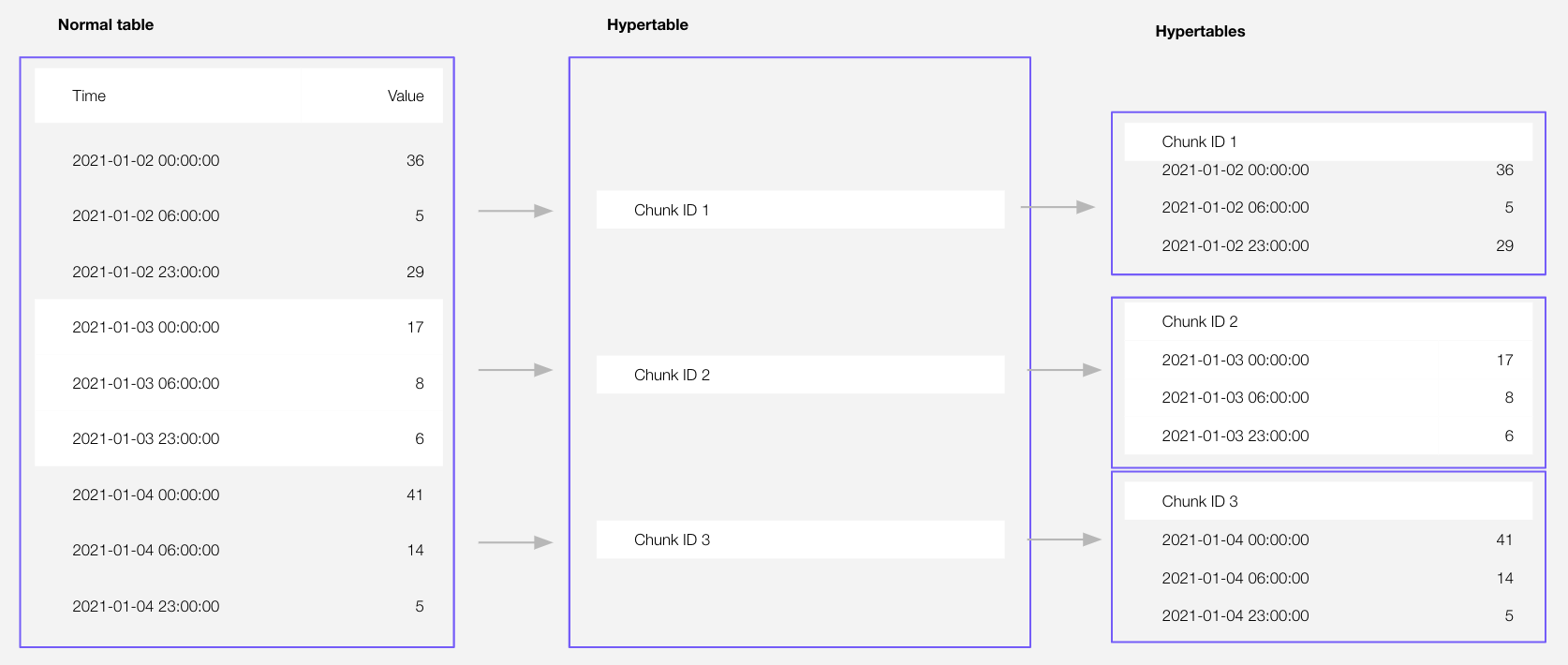

Tiger Cloud supercharges your real-time analytics by letting you run complex queries continuously, with near-zero latency. Under the hood, this is achieved by using hypertables—Postgres tables that automatically partition your time-series data by time and optionally by other dimensions. When you run a query, Tiger Cloud identifies the correct partition, called chunk, and runs the query on it, instead of going through the entire table.

Hypertables offer the following benefits:

Efficient data management with [automated partitioning by time][chunk-size]: Tiger Cloud splits your data into chunks that hold data from a specific time range. For example, one day or one week. You can configure this range to better suit your needs.

Better performance with [strategic indexing][hypertable-indexes]: an index on time in the descending order is automatically created when you create a hypertable. More indexes are created on the chunk level, to optimize performance. You can create additional indexes, including unique indexes, on the columns you need.

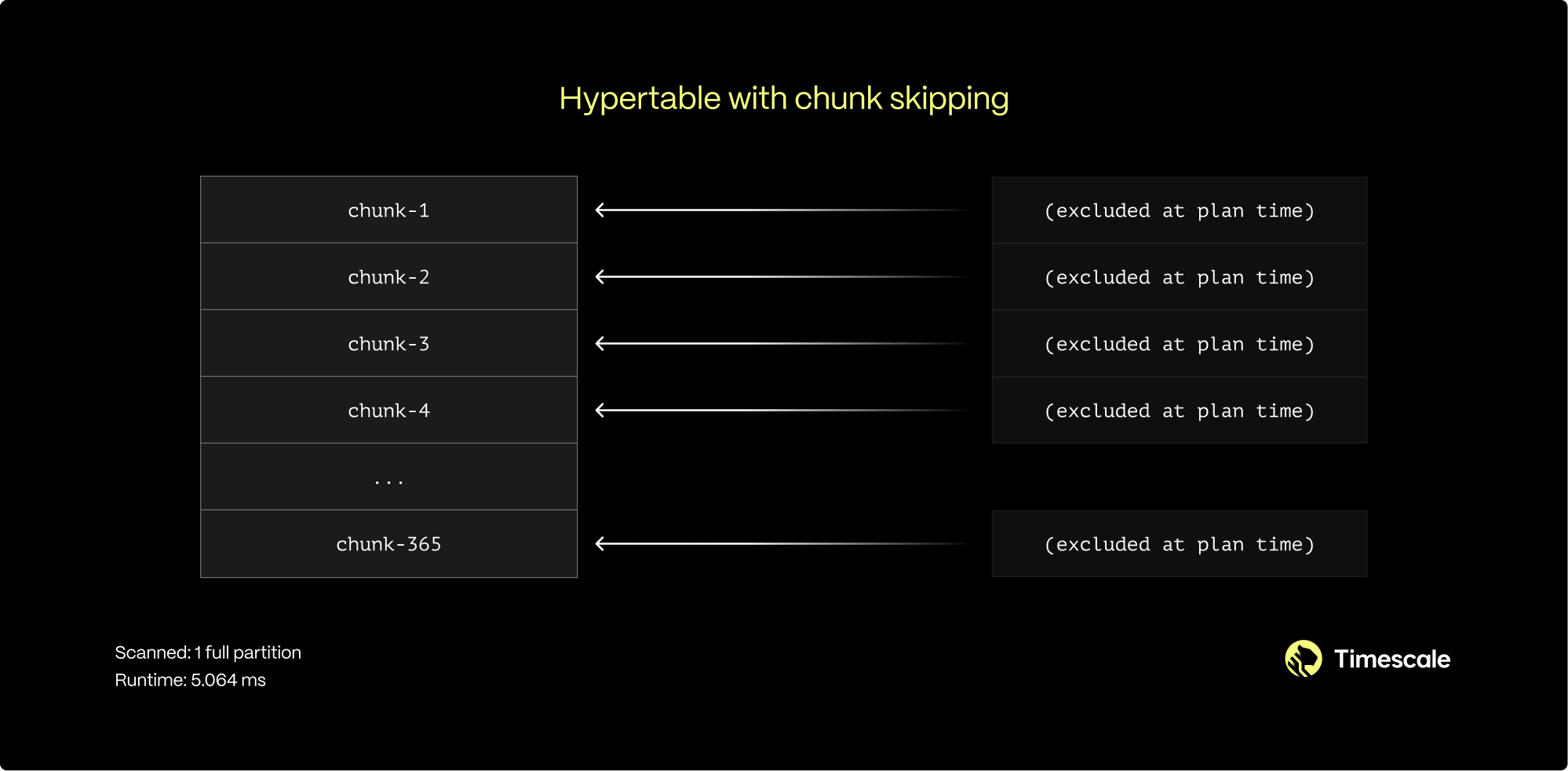

Faster queries with [chunk skipping][chunk-skipping]: Tiger Cloud skips the chunks that are irrelevant in the context of your query, dramatically reducing the time and resources needed to fetch results. Even more—you can enable chunk skipping on non-partitioning columns.

Advanced data analysis with [hyperfunctions][hyperfunctions]: Tiger Cloud enables you to efficiently process, aggregate, and analyze significant volumes of data while maintaining high performance.

To top it all, there is no added complexity—you interact with hypertables in the same way as you would with regular Postgres tables. All the optimization magic happens behind the scenes.

Inheritance is not supported for hypertables and may lead to unexpected behavior.

For more information about using hypertables, including chunk size partitioning, see the [hypertable section][hypertable-docs].

The hypertable workflow

Best practice for using a hypertable is to:

- Create a hypertable

Create a [hypertable][hypertables-section] for your time-series data using [CREATE TABLE][hypertable-create-table].

For [efficient queries][secondary-indexes] on data in the columnstore, remember to segmentby the column you will

use most often to filter your data. For example:

If you are self-hosting TimescaleDB v2.19.3 and below, create a [Postgres relational table][pg-create-table], then convert it using [create_hypertable][create_hypertable]. You then enable hypercore with a call to [ALTER TABLE][alter_table_hypercore].

- Set the columnstore policy

===== PAGE: https://docs.tigerdata.com/api/hypercore/ =====

Examples:

Example 1 (sql):

CREATE TABLE conditions (

time TIMESTAMPTZ NOT NULL,

location TEXT NOT NULL,

device TEXT NOT NULL,

temperature DOUBLE PRECISION NULL,

humidity DOUBLE PRECISION NULL

) WITH (

tsdb.hypertable,

tsdb.partition_column='time',

tsdb.segmentby = 'device',

tsdb.orderby = 'time DESC'

);

Example 2 (sql):

CALL add_columnstore_policy('conditions', after => INTERVAL '1d');

Create foreign keys in a distributed hypertable

URL: llms-txt#create-foreign-keys-in-a-distributed-hypertable

Contents:

- Creating foreign keys in a distributed hypertable

[Multi-node support is sunsetted][multi-node-deprecation].

TimescaleDB v2.13 is the last release that includes multi-node support for Postgres versions 13, 14, and 15.

Tables and values referenced by a distributed hypertable must be present on the

access node and all data nodes. To create a foreign key from a distributed

hypertable, use [distributed_exec][distributed_exec] to first create the

referenced table on all nodes.

Creating foreign keys in a distributed hypertable

- Create the referenced table on the access node.

- Use [

distributed_exec][distributed_exec] to create the same table on all data nodes and update it with the correct data. - Create a foreign key from your distributed hypertable to your referenced table.

===== PAGE: https://docs.tigerdata.com/self-hosted/distributed-hypertables/triggers/ =====

CREATE TABLE

URL: llms-txt#create-table

Contents:

- Samples

- Arguments

- Returns

Create a [hypertable][hypertable-docs] partitioned on a single dimension with [columnstore][hypercore] enabled, or create a standard Postgres relational table.

A hypertable is a specialized Postgres table that automatically partitions your data by time. All actions that work on a Postgres table, work on hypertables. For example, [ALTER TABLE][alter_table_hypercore] and [SELECT][sql-select]. By default, a hypertable is partitioned on the time dimension. To add secondary dimensions to a hypertable, call [add_dimension][add-dimension]. To convert an existing relational table into a hypertable, call [create_hypertable][create_hypertable].

As the data cools and becomes more suited for analytics, [add a columnstore policy][add_columnstore_policy] so your data is automatically converted to the columnstore after a specific time interval. This columnar format enables fast scanning and aggregation, optimizing performance for analytical workloads while also saving significant storage space. In the columnstore conversion, hypertable chunks are compressed by up to 98%, and organized for efficient, large-scale queries. This columnar format enables fast scanning and aggregation, optimizing performance for analytical workloads. You can also manually [convert chunks][convert_to_columnstore] in a hypertable to the columnstore.

Hypertable to hypertable foreign keys are not allowed, all other combinations are permitted.

The [columnstore][hypercore] settings are applied on a per-chunk basis. You can change the settings by calling [ALTER TABLE][alter_table_hypercore] without first converting the entire hypertable back to the [rowstore][hypercore]. The new settings apply only to the chunks that have not yet been converted to columnstore, the existing chunks in the columnstore do not change. Similarly, if you [remove an existing columnstore policy][remove_columnstore_policy] and then [add a new one][add_columnstore_policy], the new policy applies only to the unconverted chunks. This means that chunks with different columnstore settings can co-exist in the same hypertable.

TimescaleDB calculates default columnstore settings for each chunk when it is created. These settings apply to each chunk, and not the entire hypertable. To explicitly disable the defaults, set a setting to an empty string.

CREATE TABLE extends the standard Postgres [CREATE TABLE][pg-create-table]. This page explains the features and

arguments specific to TimescaleDB.

Since TimescaleDB v2.20.0

- Create a hypertable partitioned on the time dimension and enable columnstore:

Create the hypertable:

Enable hypercore by adding a columnstore policy:

Create a hypertable partitioned on the time with fewer chunks based on time interval:

Create a hypertable partitioned using [UUIDv7][uuidv7_functions]:

Enable data compression during ingestion:

When you set timescaledb.enable_direct_compress_copy your data gets compressed in memory during ingestion with COPY statements.

By writing the compressed batches immediately in the columnstore, the IO footprint is significantly lower.

Also, the [columnstore policy][add_columnstore_policy] you set is less important, INSERT already produces compressed chunks.

Please note that this feature is a tech preview and not production-ready. Using this feature could lead to regressed query performance and/or storage ratio, if the ingested batches are not correctly ordered or are of too high cardinality.

To enable in-memory data compression during ingestion:

Important facts

- High cardinality use cases do not produce good batches and lead to degreaded query performance.

- The columnstore is optimized to store 1000 records per batch, which is the optimal format for ingestion per segment by.

- WAL records are written for the compressed batches rather than the individual tuples.

- Currently only

COPYis support,INSERTwill eventually follow. - Best results are achieved for batch ingestion with 1000 records or more, upper boundary is 10.000 records.

- Continous Aggregates are not supported at the moment.

Create a hypertable:

- Copy data into the hypertable: You achieve the highest insert rate using binary format. CSV and text format are also supported.

- Create a Postgres relational table:

| Name | Type | Default | Required | Description |

|---|---|---|---|---|

tsdb.hypertable |

BOOLEAN | true |

✖ | Create a new [hypertable][hypertable-docs] for time-series data rather than a standard Postgres relational table. |

tsdb.partition_column |

TEXT | true |

✖ | Set the time column to automatically partition your time-series data by. |

tsdb.chunk_interval |

TEXT | 7 days |

✖ | Change this to better suit your needs. For example, if you set chunk_interval to 1 day, each chunk stores data from the same day. Data from different days is stored in different chunks. |

tsdb.create_default_indexes |

BOOLEAN | true |

✖ | Set to false to not automatically create indexes. The default indexes are:

|

tsdb.associated_schema |

REGCLASS | _timescaledb_internal |

✖ | Set the schema name for internal hypertable tables. |

tsdb.associated_table_prefix |

TEXT | _hyper |

✖ | Set the prefix for the names of internal hypertable chunks. |

tsdb.orderby |

TEXT | Descending order on the time column in table_name. |

✖ | The order in which items are used in the columnstore. Specified in the same way as an ORDER BY clause in a SELECT query. Setting tsdb.orderby automatically creates an implicit min/max sparse index on the orderby column. |

tsdb.segmentby |

TEXT | TimescaleDB looks at pg_stats and determines an appropriate column based on the data cardinality and distribution. If pg_stats is not available, TimescaleDB looks for an appropriate column from the existing indexes. |

✖ | Set the list of columns used to segment data in the columnstore for table. An identifier representing the source of the data such as device_id or tags_id is usually a good candidate. |

tsdb.sparse_index |

TEXT | TimescaleDB evaluates the columns you already have indexed, checks which data types are a good fit for sparse indexing, then creates a sparse index as an optimization. | ✖ | Configure the sparse indexes for compressed chunks. Requires setting tsdb.orderby. Supported index types include: bloom(<column_name>): a probabilistic index, effective for = filters. Cannot be applied to tsdb.orderby columns.minmax(<column_name>): stores min/max values for each compressed chunk. Setting tsdb.orderby automatically creates an implicit min/max sparse index on the orderby column. |

TimescaleDB returns a simple message indicating success or failure.

===== PAGE: https://docs.tigerdata.com/api/hypertable/drop_chunks/ =====

Examples:

Example 1 (sql):

CREATE TABLE crypto_ticks (

"time" TIMESTAMPTZ,

symbol TEXT,

price DOUBLE PRECISION,

day_volume NUMERIC

) WITH (

tsdb.hypertable,

tsdb.partition_column='time',

tsdb.segmentby='symbol',

tsdb.orderby='time DESC'

);

Example 2 (sql):

CALL add_columnstore_policy('crypto_ticks', after => INTERVAL '1d');

Example 3 (sql):

CREATE TABLE IF NOT EXISTS hypertable_control_chunk_interval(

time int4 NOT NULL,

device text,

value float

) WITH (

tsdb.hypertable,

tsdb.partition_column='time',

tsdb.chunk_interval=3453

);

Example 4 (sql):

-- For optimal compression on the ID column, first enable UUIDv7 compression

SET enable_uuid_compression=true;

-- Then create your table

CREATE TABLE events (

id uuid PRIMARY KEY DEFAULT generate_uuidv7(),

payload jsonb

) WITH (tsdb.hypertable, tsdb.partition_column = 'id');

Dropping chunks times out

URL: llms-txt#dropping-chunks-times-out

When you drop a chunk, it requires an exclusive lock. If a chunk is being accessed by another session, you cannot drop the chunk at the same time. If a drop chunk operation can't get the lock on the chunk, then it times out and the process fails. To resolve this problem, check what is locking the chunk. In some cases, this could be caused by a continuous aggregate or other process accessing the chunk. When the drop chunk operation can get an exclusive lock on the chunk, it completes as expected.

For more information about locks, see the [Postgres lock monitoring documentation][pg-lock-monitoring].

===== PAGE: https://docs.tigerdata.com/_troubleshooting/hypertables-unique-index-partitioning/ =====

Create a data retention policy

URL: llms-txt#create-a-data-retention-policy

Contents:

- Add a data retention policy

- Adding a data retention policy

- Remove a data retention policy

- See scheduled data retention jobs

Automatically drop data once its time value ages past a certain interval. When you create a data retention policy, TimescaleDB automatically schedules a background job to drop old chunks.

Add a data retention policy

Add a data retention policy by using the

[add_retention_policy][add_retention_policy] function.

Adding a data retention policy

- Choose which hypertable you want to add the policy to. Decide how long

you want to keep data before dropping it. In this example, the hypertable

named

conditionsretains the data for 24 hours. - Call

add_retention_policy:

A data retention policy only allows you to drop chunks based on how far they are in the past. To drop chunks based on how far they are in the future, manually drop chunks.

Remove a data retention policy

Remove an existing data retention policy by using the

[remove_retention_policy][remove_retention_policy] function. Pass it the name

of the hypertable to remove the policy from.

See scheduled data retention jobs

To see your scheduled data retention jobs and their job statistics, query the

[timescaledb_information.jobs][timescaledb_information.jobs] and

[timescaledb_information.job_stats][timescaledb_information.job_stats] tables.

For example:

The results look like this:

===== PAGE: https://docs.tigerdata.com/use-timescale/data-retention/manually-drop-chunks/ =====

Examples:

Example 1 (sql):

SELECT add_retention_policy('conditions', INTERVAL '24 hours');

Example 2 (sql):

SELECT remove_retention_policy('conditions');

Example 3 (sql):

SELECT j.hypertable_name,

j.job_id,

config,

schedule_interval,

job_status,

last_run_status,

last_run_started_at,

js.next_start,

total_runs,

total_successes,

total_failures

FROM timescaledb_information.jobs j

JOIN timescaledb_information.job_stats js

ON j.job_id = js.job_id

WHERE j.proc_name = 'policy_retention';

Example 4 (sql):

-[ RECORD 1 ]-------+-----------------------------------------------

hypertable_name | conditions

job_id | 1000

config | {"drop_after": "5 years", "hypertable_id": 14}

schedule_interval | 1 day

job_status | Scheduled

last_run_status | Success

last_run_started_at | 2022-05-19 16:15:11.200109+00

next_start | 2022-05-20 16:15:11.243531+00

total_runs | 1

total_successes | 1

total_failures | 0

chunk_columnstore_stats()

URL: llms-txt#chunk_columnstore_stats()

Contents:

- Samples

- Arguments

- Returns

Retrieve statistics about the chunks in the columnstore

chunk_columnstore_stats returns the size of chunks in the columnstore, these values are computed when you call either:

- [add_columnstore_policy][add_columnstore_policy]: create a [job][job] that automatically moves chunks in a hypertable to the columnstore at a specific time interval.

- [convert_to_columnstore][convert_to_columnstore]: manually add a specific chunk in a hypertable to the columnstore.

Inserting into a chunk in the columnstore does not change the chunk size. For more information about how to compute chunk sizes, see [chunks_detailed_size][chunks_detailed_size].

Since TimescaleDB v2.18.0

To retrieve statistics about chunks:

- Show the status of the first two chunks in the

conditionshypertable:

Returns:

- Use

pg_size_prettyto return a more human friendly format:

| Name | Type | Default | Required | Description |

|--|--|--|--|--|

|hypertable|REGCLASS|-|✖| The name of a hypertable |

|Column|Type| Description |

|-|-|------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

|chunk_schema|TEXT| Schema name of the chunk. |

|chunk_name|TEXT| Name of the chunk. |

|compression_status|TEXT| Current compression status of the chunk. |

|before_compression_table_bytes|BIGINT| Size of the heap before compression. Returns NULL if compression_status == Uncompressed. |

|before_compression_index_bytes|BIGINT| Size of all the indexes before compression. Returns NULL if compression_status == Uncompressed. |

|before_compression_toast_bytes|BIGINT| Size the TOAST table before compression. Returns NULL if compression_status == Uncompressed. |

|before_compression_total_bytes|BIGINT| Size of the entire chunk table (before_compression_table_bytes + before_compression_index_bytes + before_compression_toast_bytes) before compression. Returns NULL if compression_status == Uncompressed.|

|after_compression_table_bytes|BIGINT| Size of the heap after compression. Returns NULL if compression_status == Uncompressed. |

|after_compression_index_bytes|BIGINT| Size of all the indexes after compression. Returns NULL if compression_status == Uncompressed. |

|after_compression_toast_bytes|BIGINT| Size the TOAST table after compression. Returns NULL if compression_status == Uncompressed. |

|after_compression_total_bytes|BIGINT| Size of the entire chunk table (after_compression_table_bytes + after_compression_index_bytes+ after_compression_toast_bytes) after compression. Returns NULL if compression_status == Uncompressed. |

|node_name|TEXT| DEPRECATED: nodes the chunk is located on, applicable only to distributed hypertables. |

===== PAGE: https://docs.tigerdata.com/api/hypercore/convert_to_rowstore/ =====

Examples:

Example 1 (sql):

SELECT * FROM chunk_columnstore_stats('conditions')

ORDER BY chunk_name LIMIT 2;

Example 2 (sql):

-[ RECORD 1 ]------------------+----------------------

chunk_schema | _timescaledb_internal

chunk_name | _hyper_1_1_chunk

compression_status | Uncompressed

before_compression_table_bytes |

before_compression_index_bytes |

before_compression_toast_bytes |

before_compression_total_bytes |

after_compression_table_bytes |

after_compression_index_bytes |

after_compression_toast_bytes |

after_compression_total_bytes |

node_name |

-[ RECORD 2 ]------------------+----------------------

chunk_schema | _timescaledb_internal

chunk_name | _hyper_1_2_chunk

compression_status | Compressed

before_compression_table_bytes | 8192

before_compression_index_bytes | 32768

before_compression_toast_bytes | 0

before_compression_total_bytes | 40960

after_compression_table_bytes | 8192

after_compression_index_bytes | 32768

after_compression_toast_bytes | 8192

after_compression_total_bytes | 49152

node_name |

Example 3 (sql):

SELECT pg_size_pretty(after_compression_total_bytes) AS total

FROM chunk_columnstore_stats('conditions')

WHERE compression_status = 'Compressed';

Example 4 (sql):

-[ RECORD 1 ]--+------

total | 48 kB

timescaledb_information.dimensions

URL: llms-txt#timescaledb_information.dimensions

Contents:

- Samples

- Available columns

Returns information about the dimensions of a hypertable. Hypertables can be partitioned on a range of different dimensions. By default, all hypertables are partitioned on time, but it is also possible to partition on other dimensions in addition to time.

For hypertables that are partitioned solely on time,

timescaledb_information.dimensions returns a single row of metadata. For

hypertables that are partitioned on more than one dimension, the call returns a

row for each dimension.

For time-based dimensions, the metadata returned indicates the integer datatype,

such as BIGINT, INTEGER, or SMALLINT, and the time-related datatype, such as

TIMESTAMPTZ, TIMESTAMP, or DATE. For space-based dimension, the metadata

returned specifies the number of num_partitions.

If the hypertable uses time data types, the time_interval column is defined.

Alternatively, if the hypertable uses integer data types, the integer_interval

and integer_now_func columns are defined.

Get information about the dimensions of hypertables.

The by_range and by_hash dimension builders are an addition to TimescaleDB 2.13.

Get information about dimensions of a hypertable that has two time-based dimensions.

|Name|Type|Description|

|-|-|-|

|hypertable_schema|TEXT|Schema name of the hypertable|

|hypertable_name|TEXT|Table name of the hypertable|

|dimension_number|BIGINT|Dimension number of the hypertable, starting from 1|

|column_name|TEXT|Name of the column used to create this dimension|

|column_type|REGTYPE|Type of the column used to create this dimension|

|dimension_type|TEXT|Is this a time based or space based dimension|

|time_interval|INTERVAL|Time interval for primary dimension if the column type is a time datatype|

|integer_interval|BIGINT|Integer interval for primary dimension if the column type is an integer datatype|

|integer_now_func|TEXT|integer_nowfunction for primary dimension if the column type is an integer datatype|

|num_partitions`|SMALLINT|Number of partitions for the dimension|

The time_interval and integer_interval columns are not applicable for space

based dimensions.

===== PAGE: https://docs.tigerdata.com/api/informational-views/job_errors/ =====

Examples:

Example 1 (sql):

-- Create a range and hash partitioned hypertable

CREATE TABLE dist_table(time timestamptz, device int, temp float);

SELECT create_hypertable('dist_table', by_range('time', INTERVAL '7 days'));

SELECT add_dimension('dist_table', by_hash('device', 3));

SELECT * from timescaledb_information.dimensions

ORDER BY hypertable_name, dimension_number;

-[ RECORD 1 ]-----+-------------------------

hypertable_schema | public

hypertable_name | dist_table

dimension_number | 1

column_name | time

column_type | timestamp with time zone

dimension_type | Time

time_interval | 7 days

integer_interval |

integer_now_func |

num_partitions |

-[ RECORD 2 ]-----+-------------------------

hypertable_schema | public

hypertable_name | dist_table

dimension_number | 2

column_name | device

column_type | integer

dimension_type | Space

time_interval |

integer_interval |

integer_now_func |

num_partitions | 2

About Tiger Cloud storage tiers

URL: llms-txt#about-tiger-cloud-storage-tiers

Contents:

- High-performance storage

- Low-cost storage

The tiered storage architecture in Tiger Cloud includes a high-performance storage tier and a low-cost object storage tier. You use the high-performance tier for data that requires quick access, and the object tier for rarely used historical data. Tiering policies move older data asynchronously and periodically from high-performance to low-cost storage, sparing you the need to do it manually. Chunks from a single hypertable, including compressed chunks, can stretch across these two storage tiers.

High-performance storage

High-performance storage is where your data is stored by default, until you [enable tiered storage][manage-tiering] and [move older data to the low-cost tier][move-data]. In the high-performance storage, your data is stored in the block format and optimized for frequent querying. The [hypercore row-columnar storage engine][hypercore] available in this tier is designed specifically for real-time analytics. It enables you to compress the data in the high-performance storage by up to 90%, while improving performance. Coupled with other optimizations, Tiger Cloud high-performance storage makes sure your data is always accessible and your queries run at lightning speed.

Tiger Cloud high-performance storage comes in the following types:

- Standard (default): based on [AWS EBS gp3][aws-gp3] and designed for general workloads. Provides up to 16 TB of storage and 16,000 IOPS.

- Enhanced: based on [EBS io2][ebs-io2] and designed for high-scale, high-throughput workloads. Provides up to 64 TB of storage and 32,000 IOPS.

[See the differences][aws-storage-types] in the underlying AWS storage. You [enable enhanced storage][enable-enhanced] as needed in Tiger Cloud Console.

Once you [enable tiered storage][manage-tiering], you can start moving rarely used data to the object tier. The object tier is based on AWS S3 and stores your data in the [Apache Parquet][parquet] format. Within a Parquet file, a set of rows is grouped together to form a row group. Within a row group, values for a single column across multiple rows are stored together. The original size of the data in your service, compressed or uncompressed, does not correspond directly to its size in S3. A compressed hypertable may even take more space in S3 than it does in Tiger Cloud.

Apache Parquet allows for more efficient scans across longer time periods, and Tiger Cloud uses other metadata and query optimizations to reduce the amount of data that needs to be fetched to satisfy a query, such as:

- Chunk skipping: exclude the chunks that fall outside the query time window.

- Row group skipping: identify the row groups within the Parquet object that satisfy the query.

- Column skipping: fetch only columns that are requested by the query.

The following query is against a tiered dataset and illustrates the optimizations:

EXPLAIN illustrates which chunks are being pulled in from the object storage tier:

- Fetch data from chunks 42, 43, and 44 from the object storage tier.

- Skip row groups and limit the fetch to a subset of the offsets in the

Parquet object that potentially match the query filter. Only fetch the data

for

device_uuid,sensor_id, andobserved_atas the query needs only these 3 columns.

The object storage tier is more than an archiving solution. It is also:

- Cost-effective: store high volumes of data at a lower cost. You pay only for what you store, with no extra cost for queries.

- Scalable: scale past the restrictions of even the enhanced high-performance storage tier.

- Online: your data is always there and can be [queried when needed][querying-tiered-data].

By default, tiered data is not included when you query from a Tiger Cloud service. To access tiered data, you [enable tiered reads][querying-tiered-data] for a query, a session, or even for all sessions. After you enable tiered reads, when you run regular SQL queries, a behind-the-scenes process transparently pulls data from wherever it's located: the standard high-performance storage tier, the object storage tier, or both. You can JOIN against tiered data, build views, and even define continuous aggregates on it. In fact, because the implementation of continuous aggregates also uses hypertables, they can be tiered to low-cost storage as well.

For low-cost storage, Tiger Data charges only for the size of your data in S3 in the Apache Parquet format, regardless of whether it was compressed in Tiger Cloud before tiering. There are no additional expenses, such as data transfer or compute.

The low-cost storage tier comes with the following limitations:

- Limited schema modifications: some schema modifications are not allowed on hypertables with tiered chunks.

Allowed modifications include: renaming the hypertable, adding columns

with `NULL` defaults, adding indexes, changing or renaming the hypertable

schema, and adding `CHECK` constraints. For `CHECK` constraints, only

untiered data is verified.

Columns can also be deleted, but you cannot subsequently add a new column

to a tiered hypertable with the same name as the now-deleted column.

Disallowed modifications include: adding a column with non-NULL

defaults, renaming a column, changing the data type of a

column, and adding a `NOT NULL` constraint to the column.

Limited data changes: you cannot insert data into, update, or delete a tiered chunk. These limitations take effect as soon as the chunk is scheduled for tiering.