llms-full.md 3.3 MB

===== PAGE: https://docs.tigerdata.com/getting-started/try-key-features-timescale-products/ =====

Try the key features in Tiger Data products

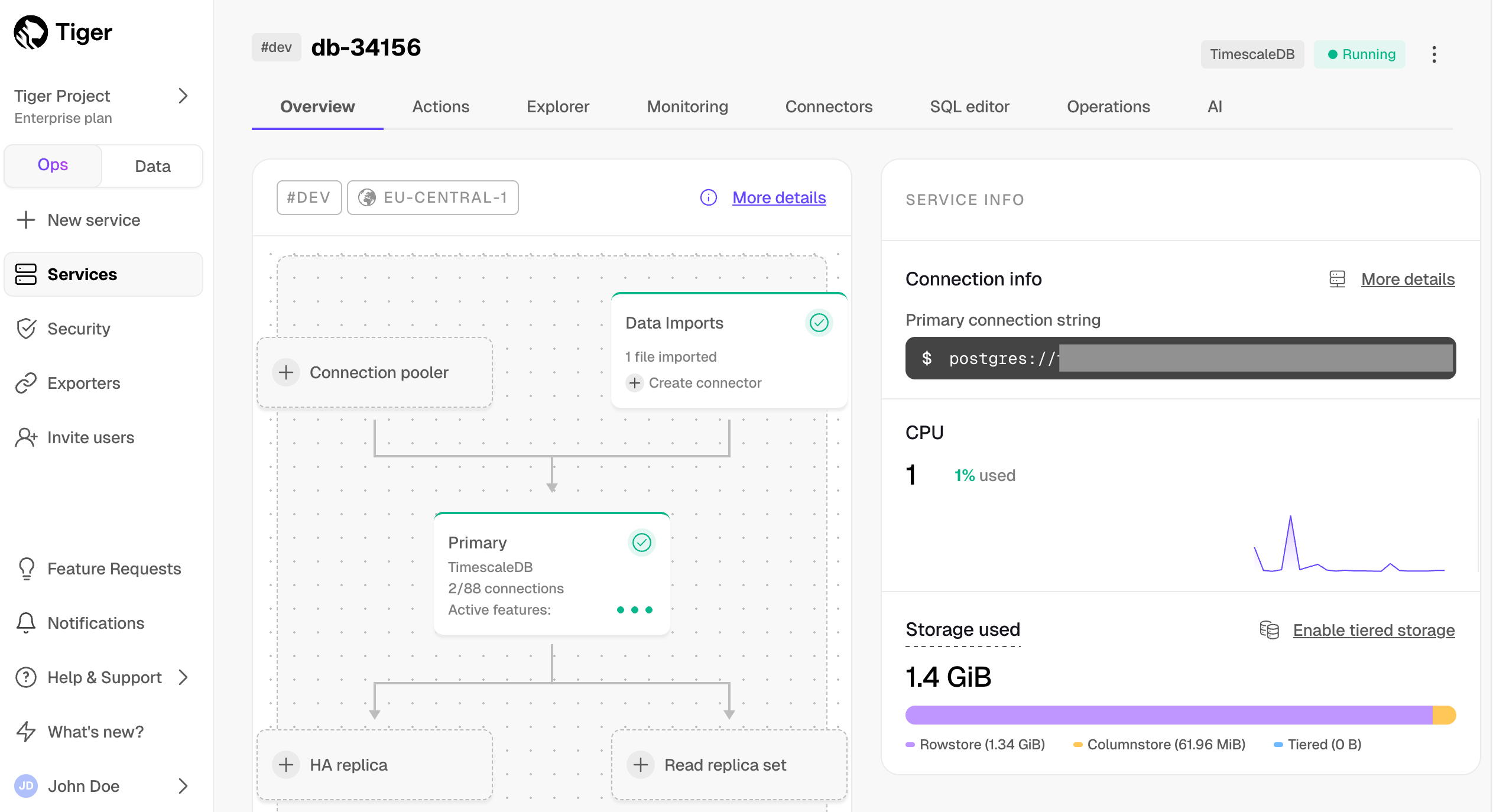

Tiger Cloud offers managed database services that provide a stable and reliable environment for your applications.

Each Tiger Cloud service is a single optimised Postgres instance extended with innovations such as TimescaleDB in the database engine, in a cloud infrastructure that delivers speed without sacrifice. A radically faster Postgres for transactional, analytical, and agentic workloads at scale.

Tiger Cloud scales Postgres to ingest and query vast amounts of live data. Tiger Cloud provides a range of features and optimizations that supercharge your queries while keeping the costs down. For example:

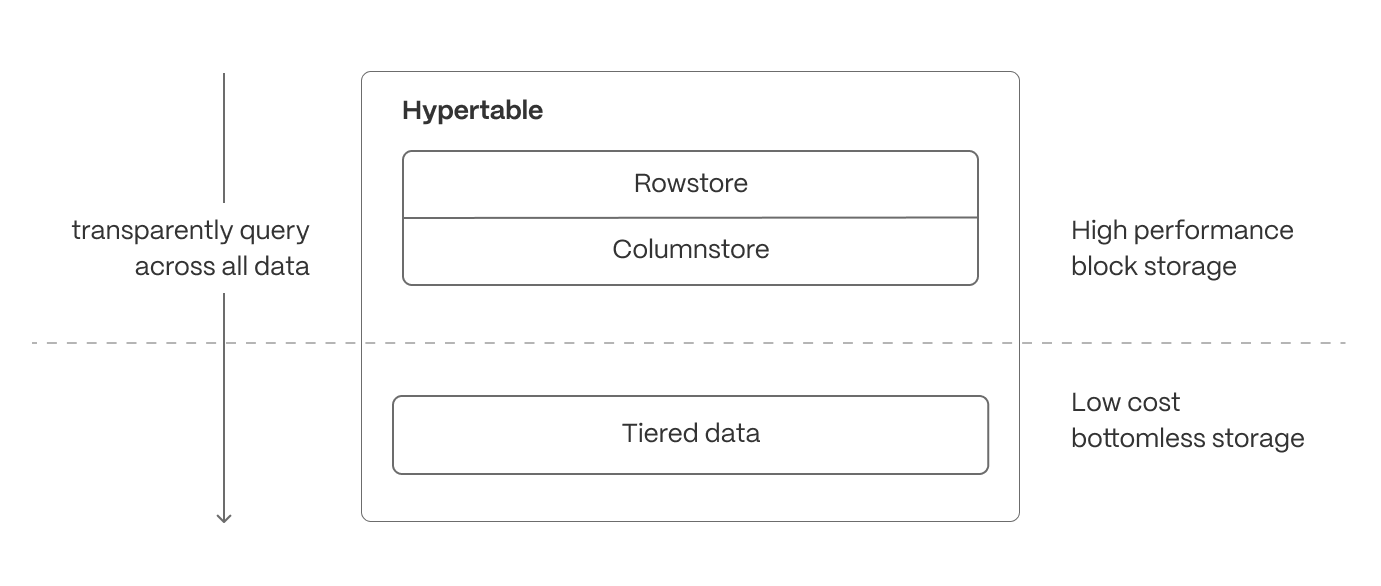

- The hypercore row-columnar engine in TimescaleDB makes queries up to 350x faster, ingests 44% faster, and reduces storage by 90%.

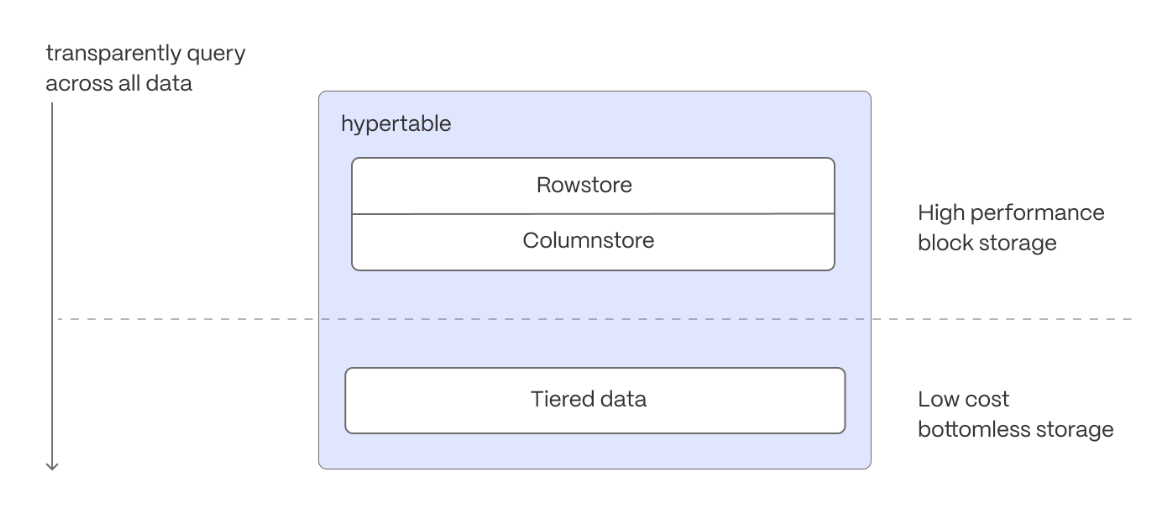

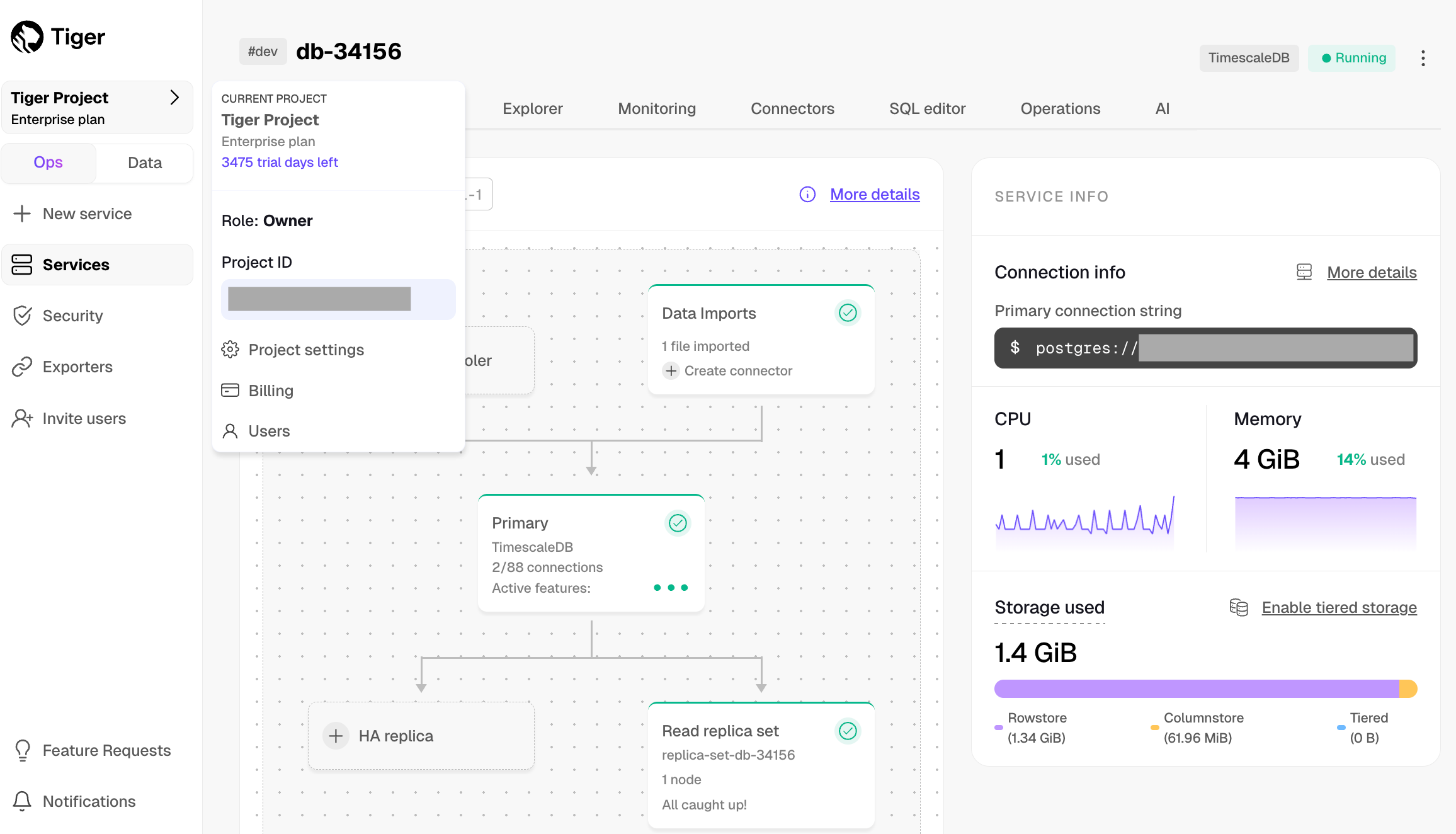

- Tiered storage in Tiger Cloud seamlessly moves your data from high performance storage for frequently accessed data to low cost bottomless storage for rarely accessed data.

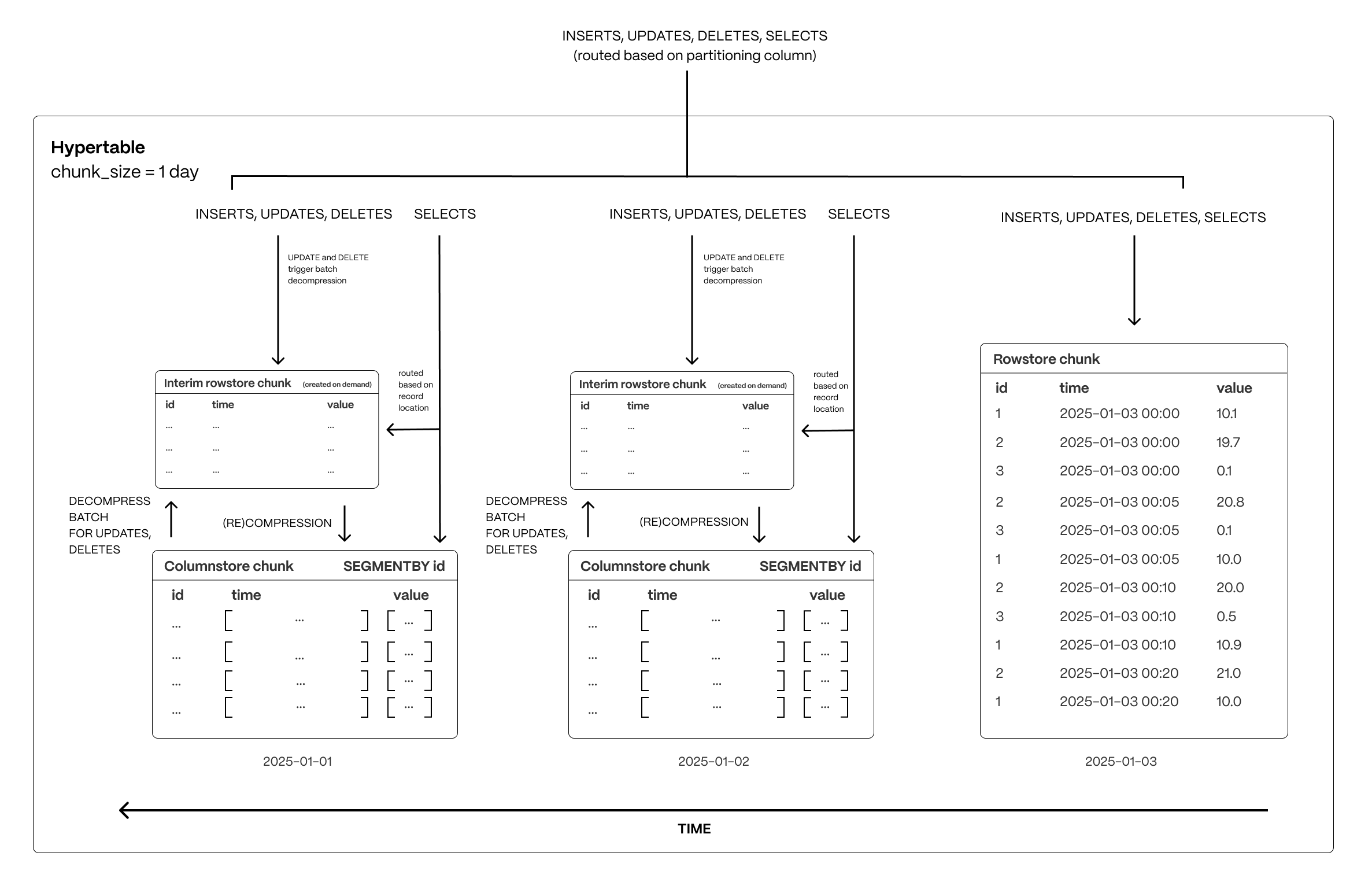

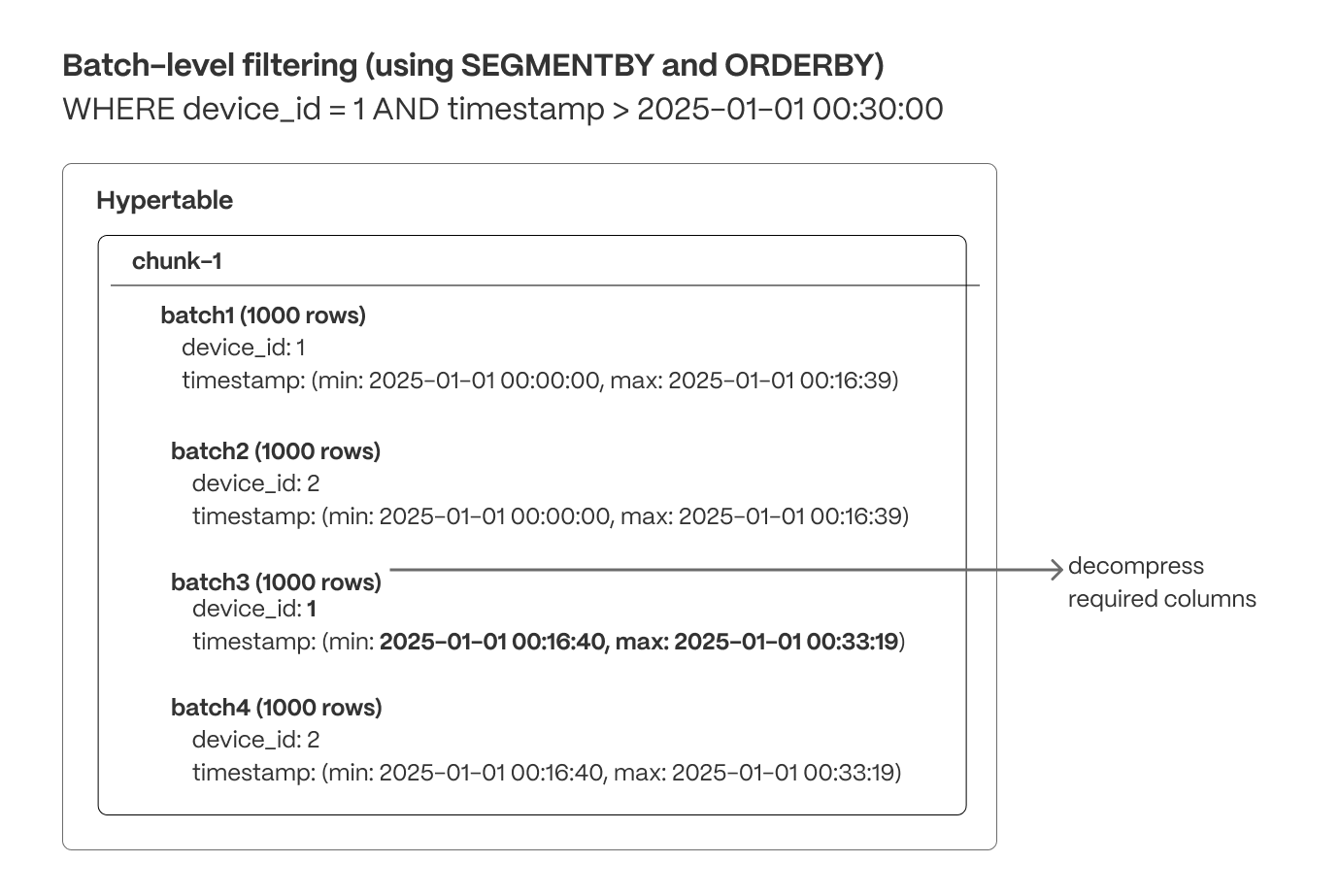

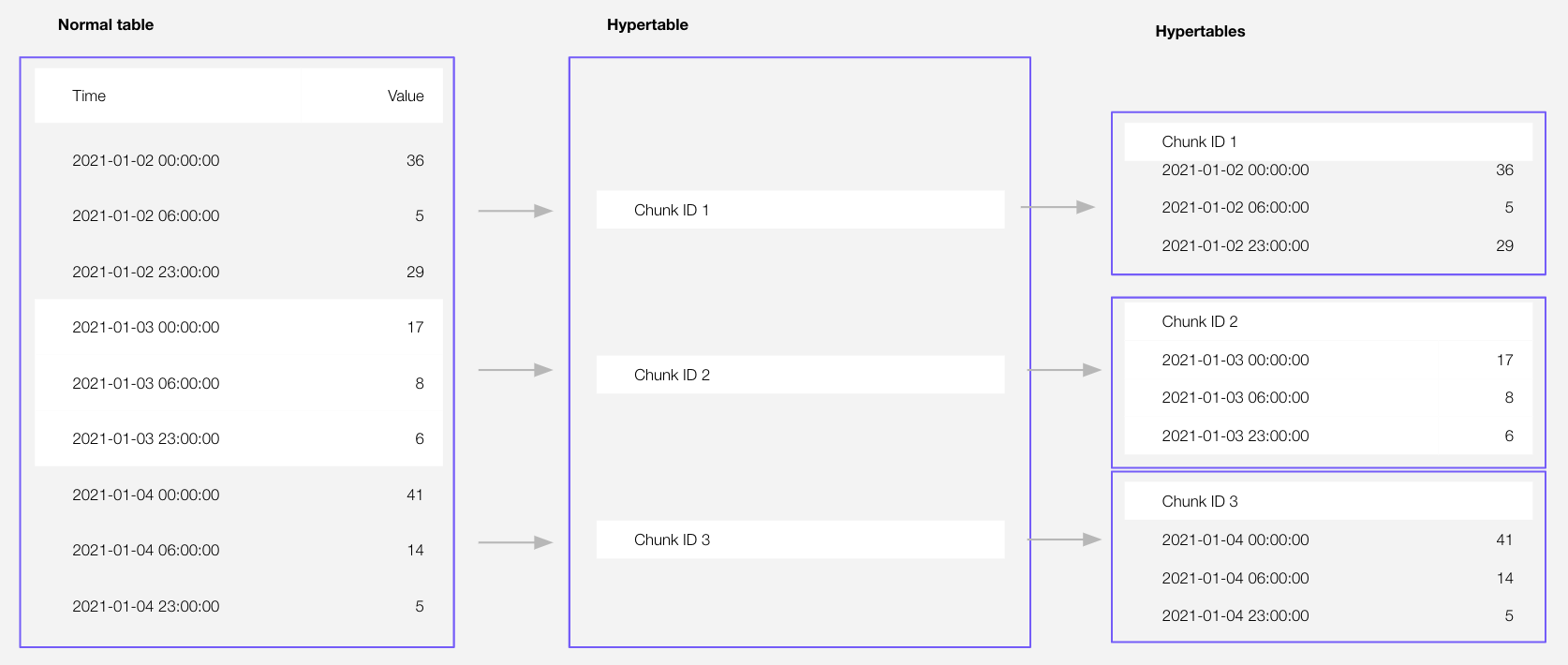

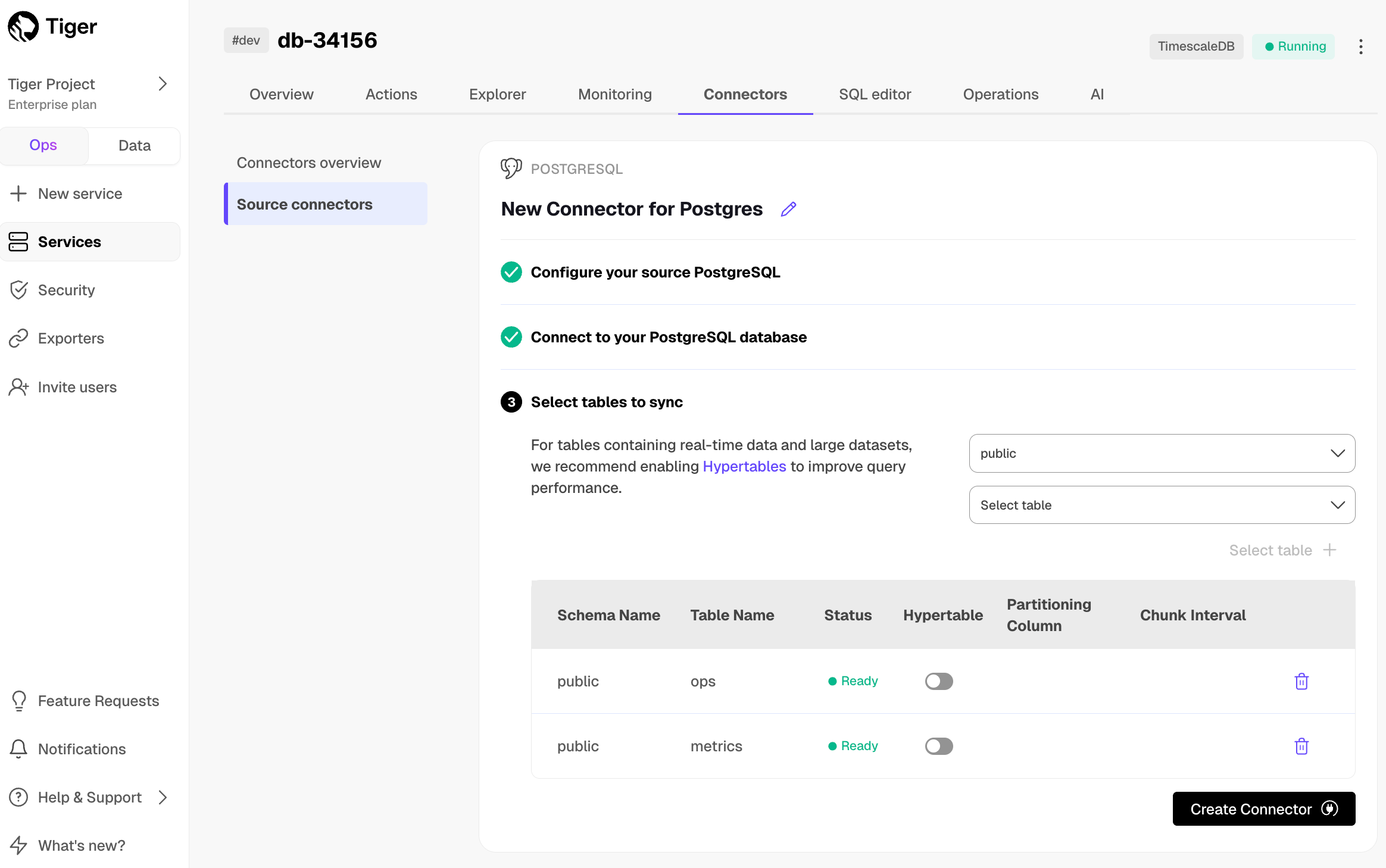

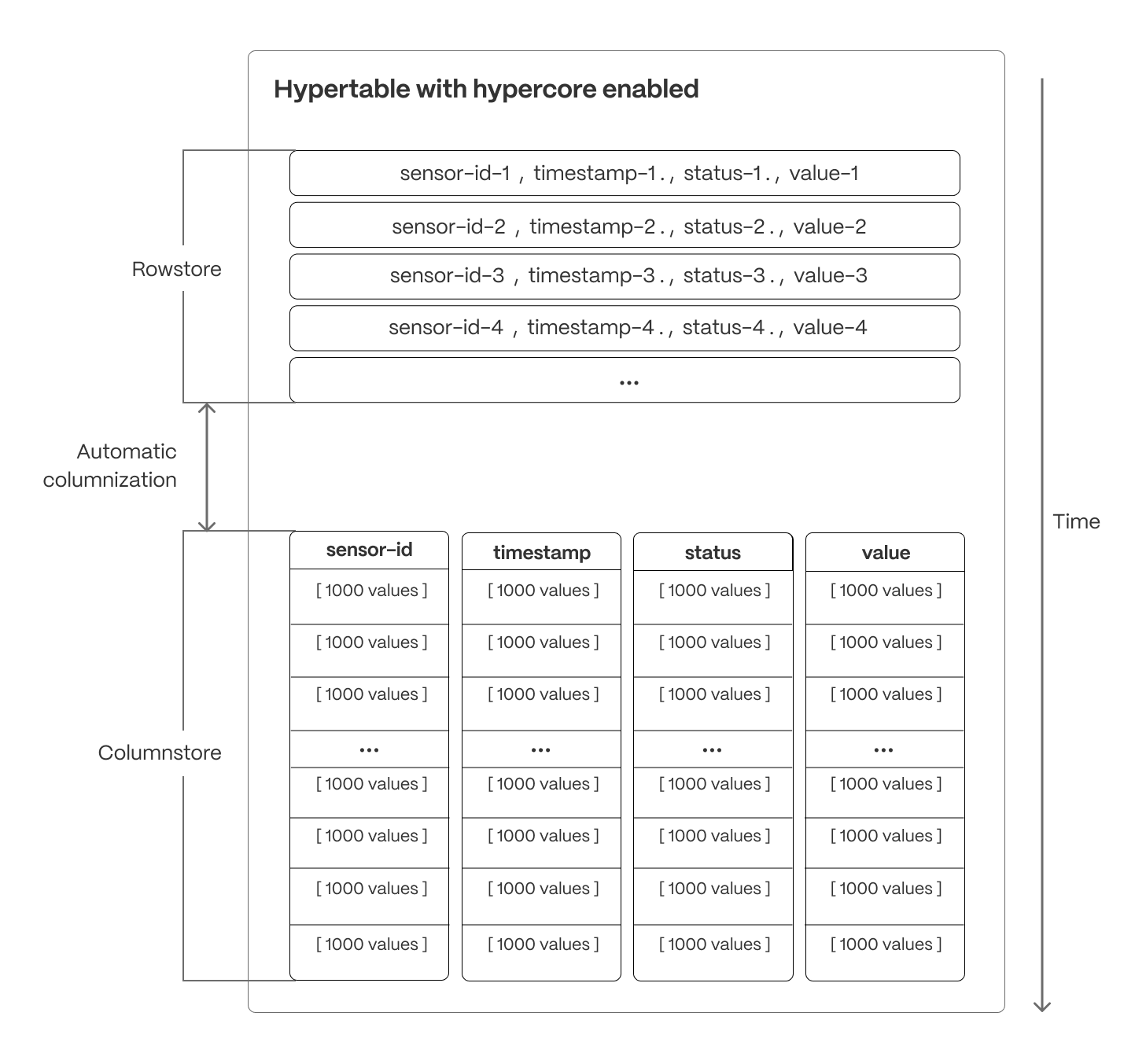

The following figure shows how TimescaleDB optimizes your data for superfast real-time analytics:

This page shows you how to rapidly implement the features in Tiger Cloud that enable you to ingest and query data faster while keeping the costs low.

Prerequisites

To follow the steps on this page:

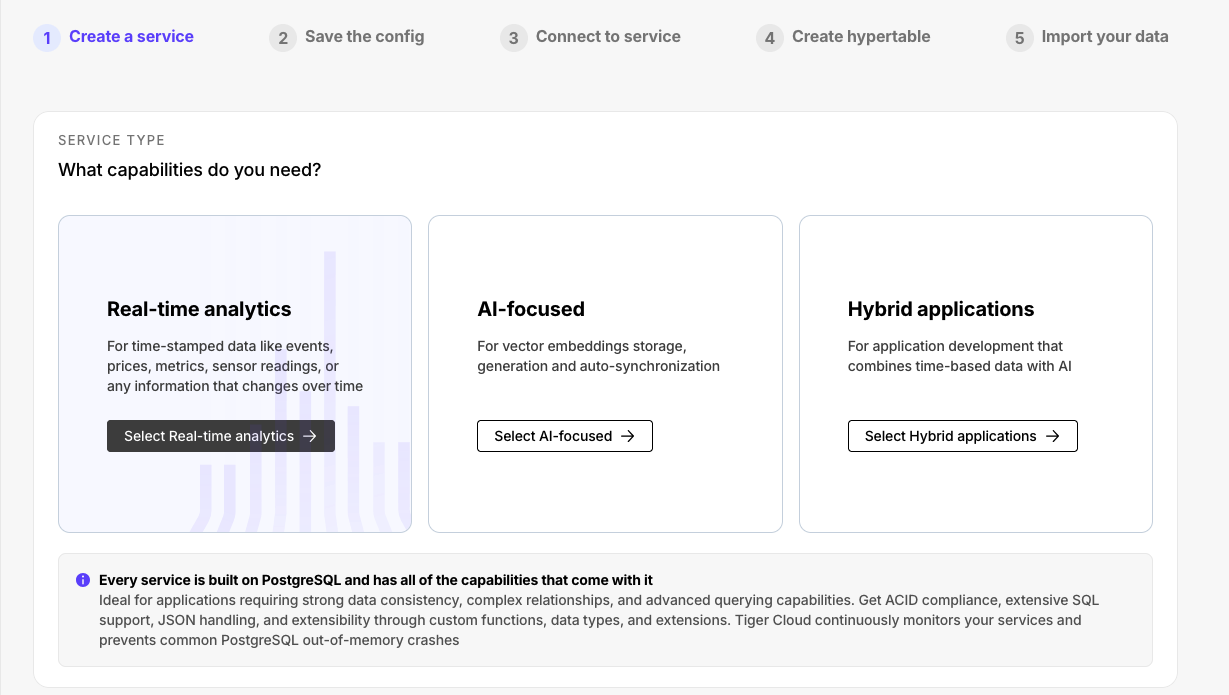

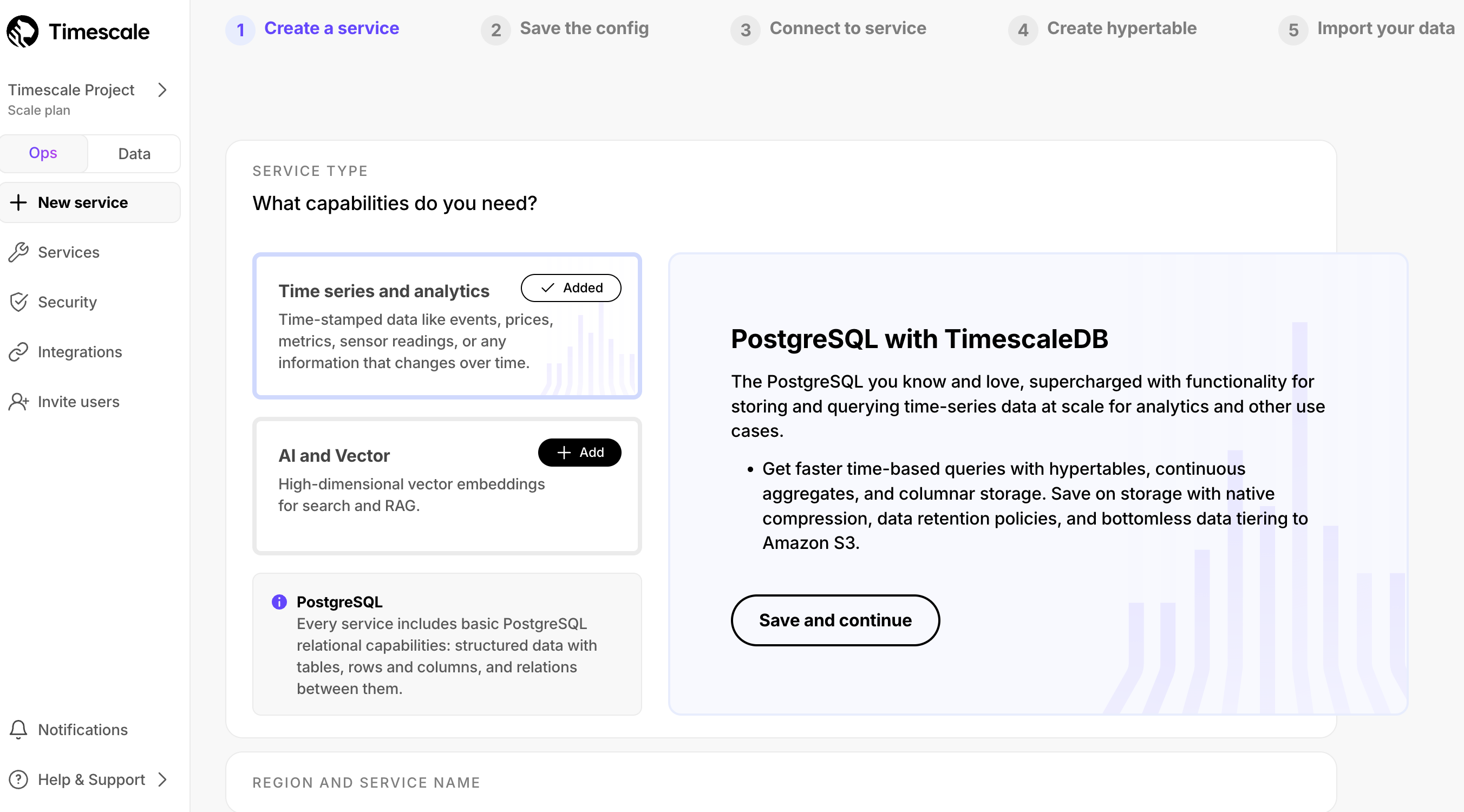

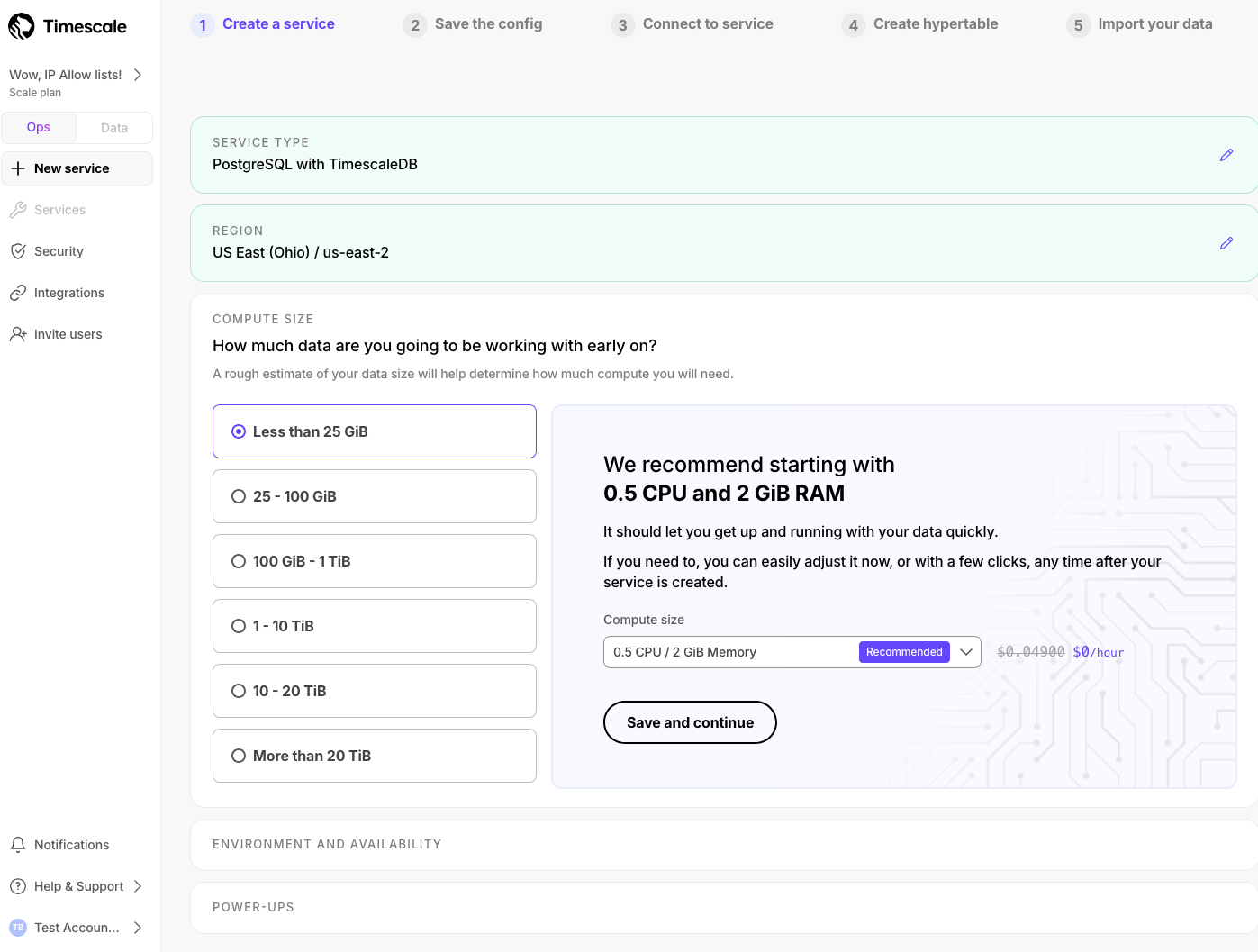

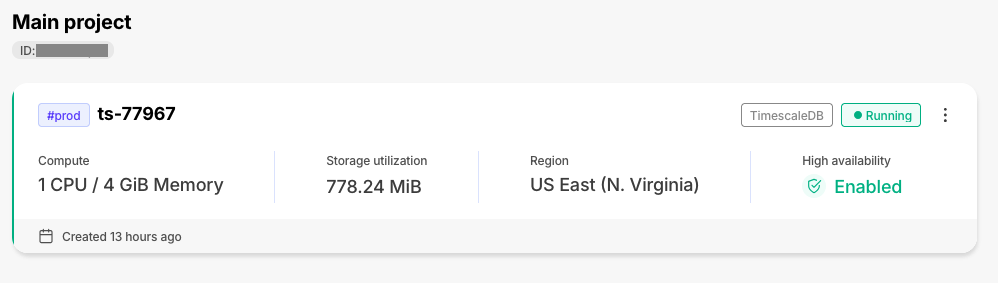

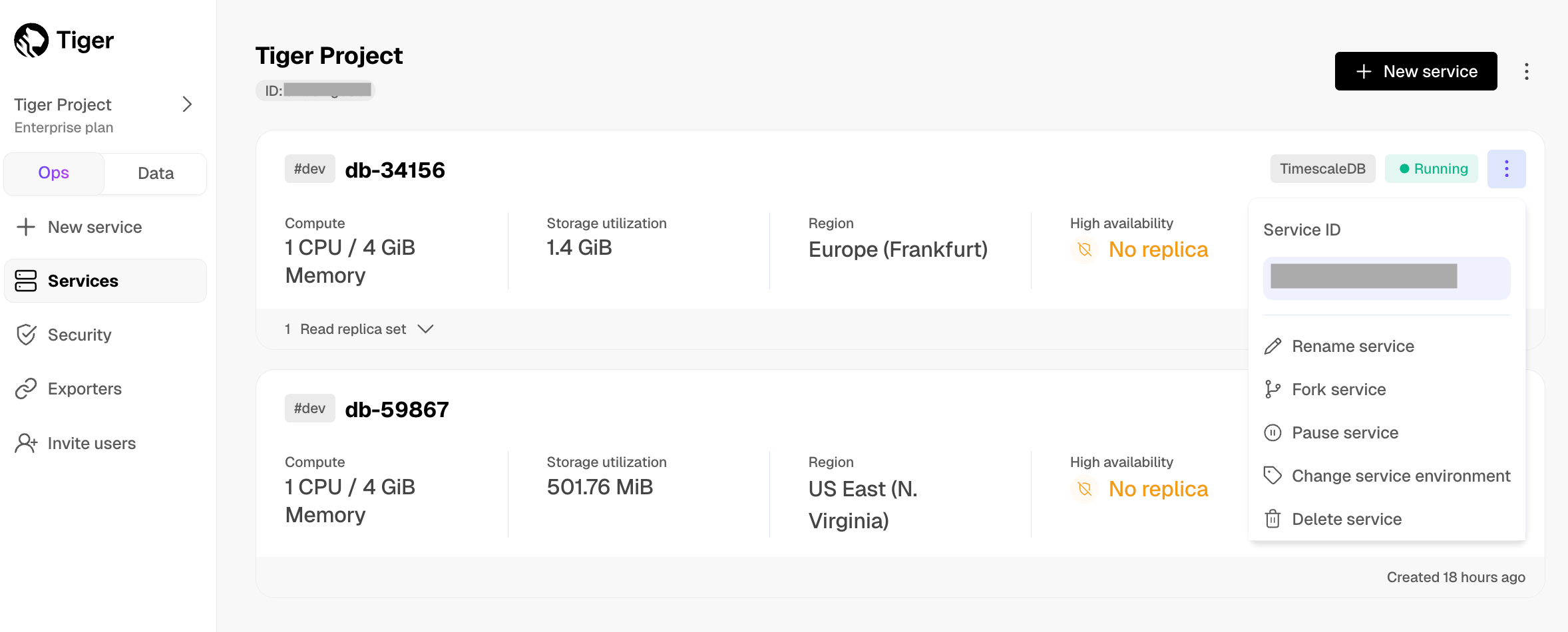

- Create a target Tiger Cloud service with the Real-time analytics capability.

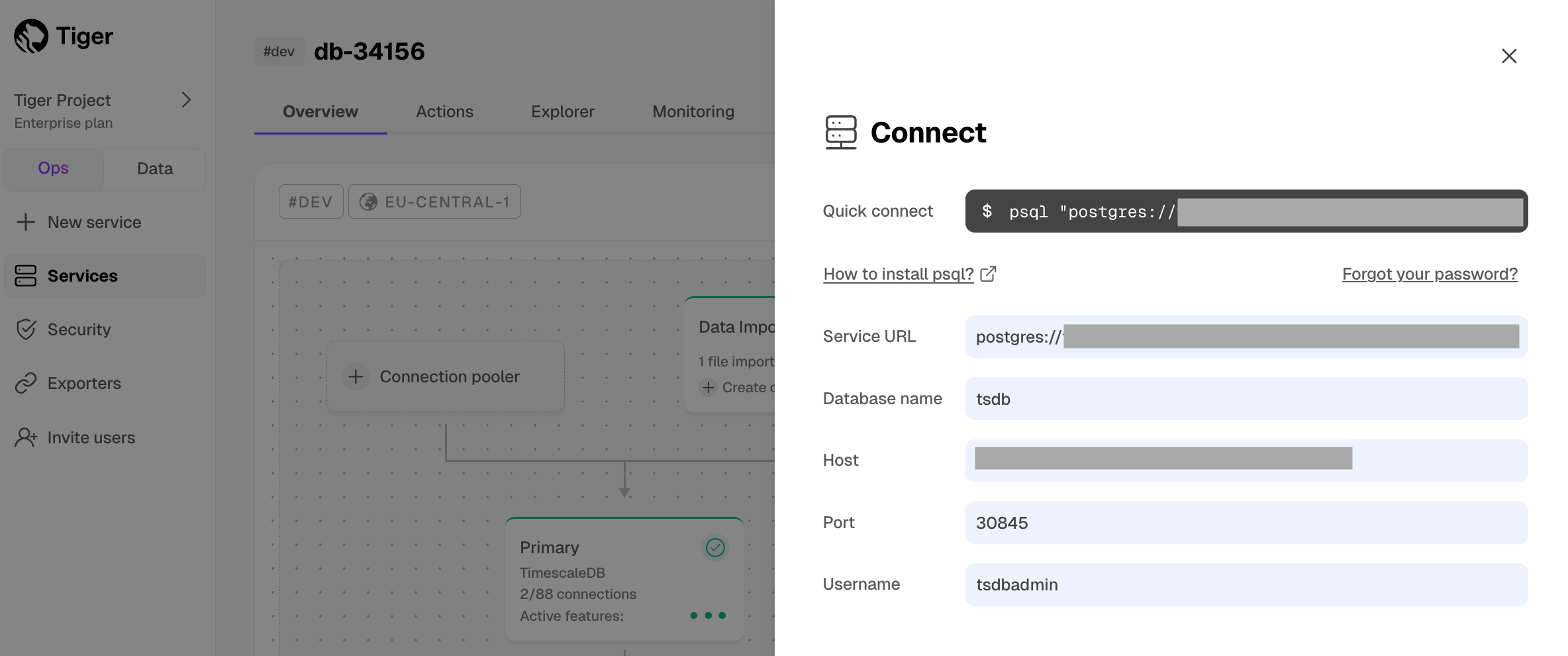

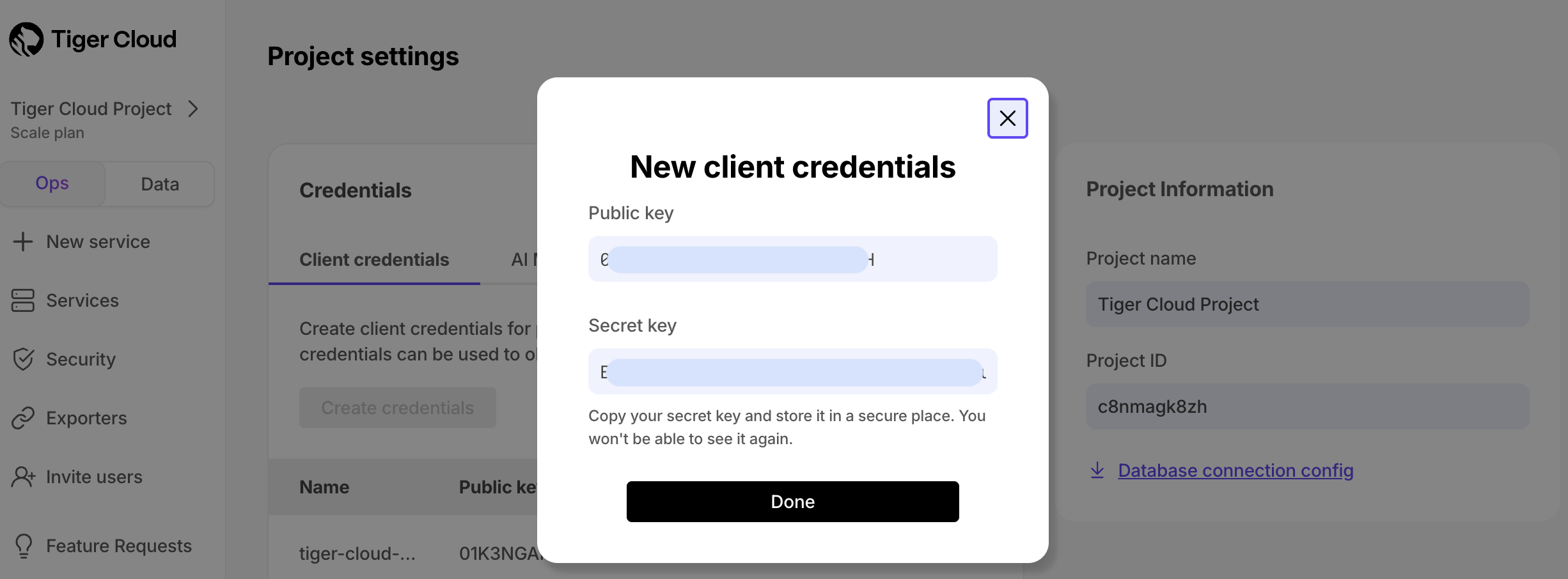

You need your connection details. This procedure also works for self-hosted TimescaleDB.

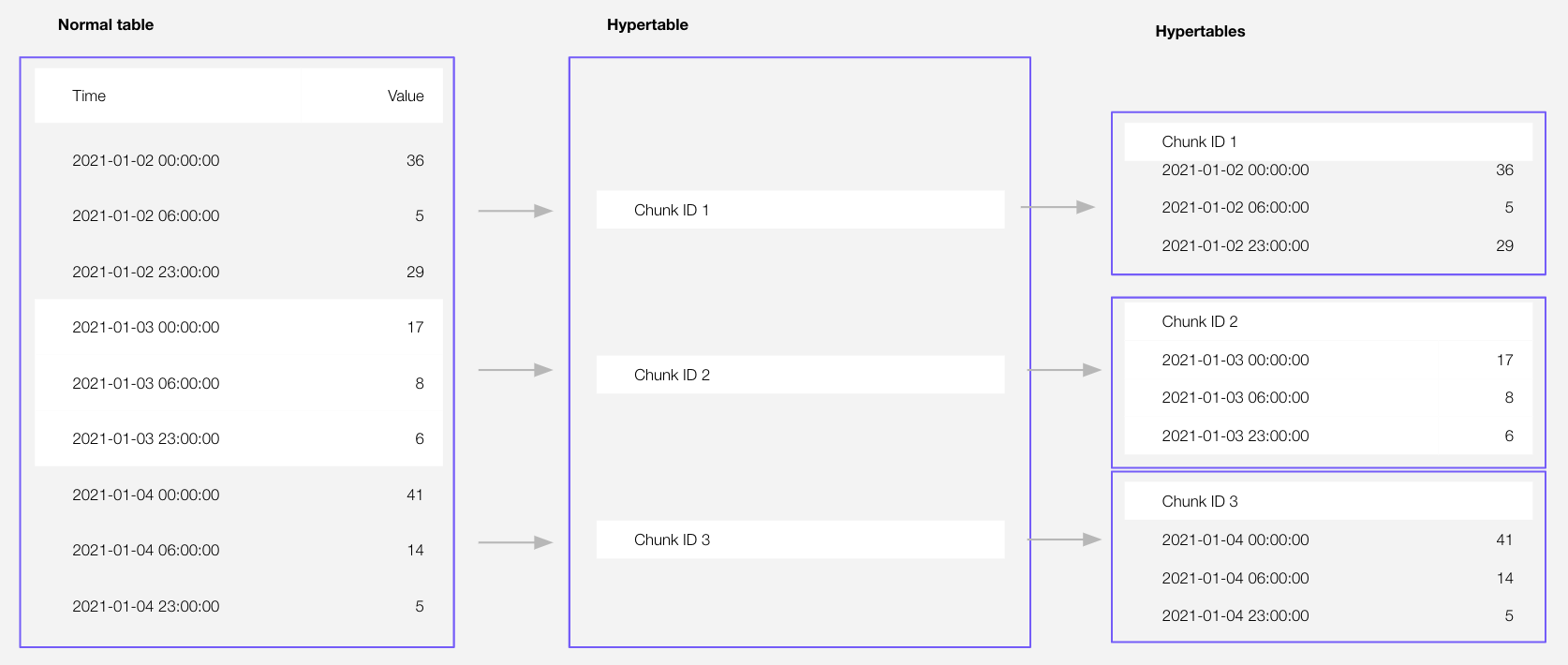

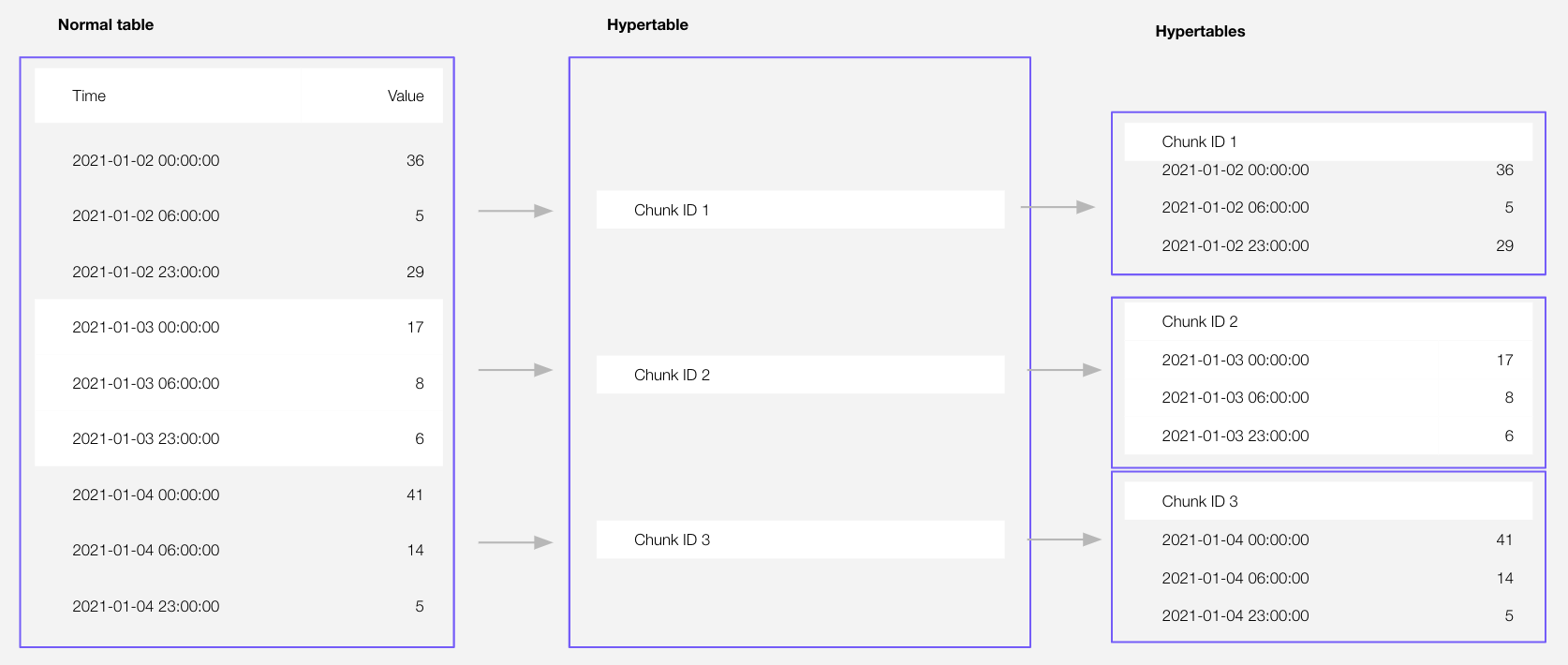

Optimize time-series data in hypertables with hypercore

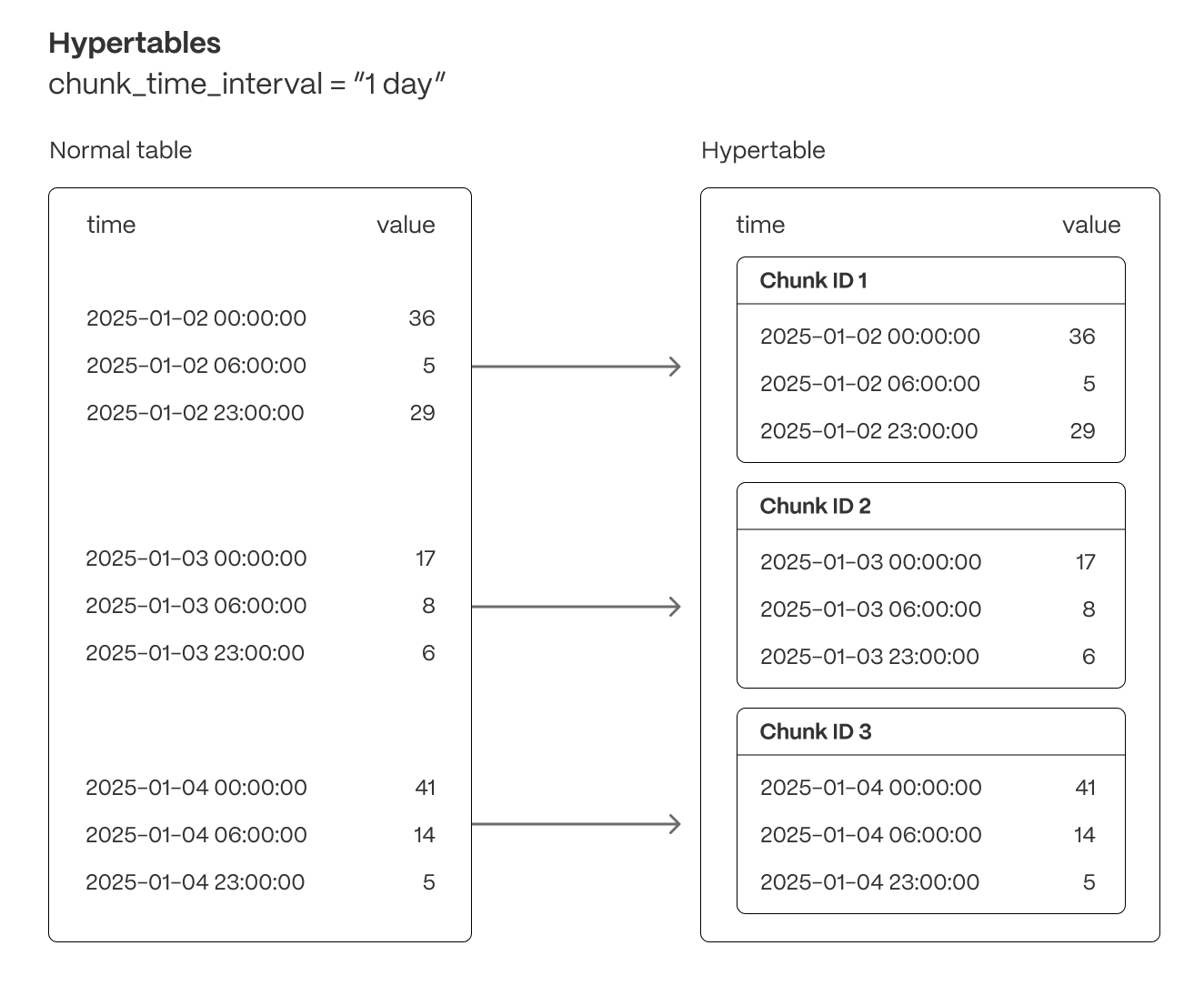

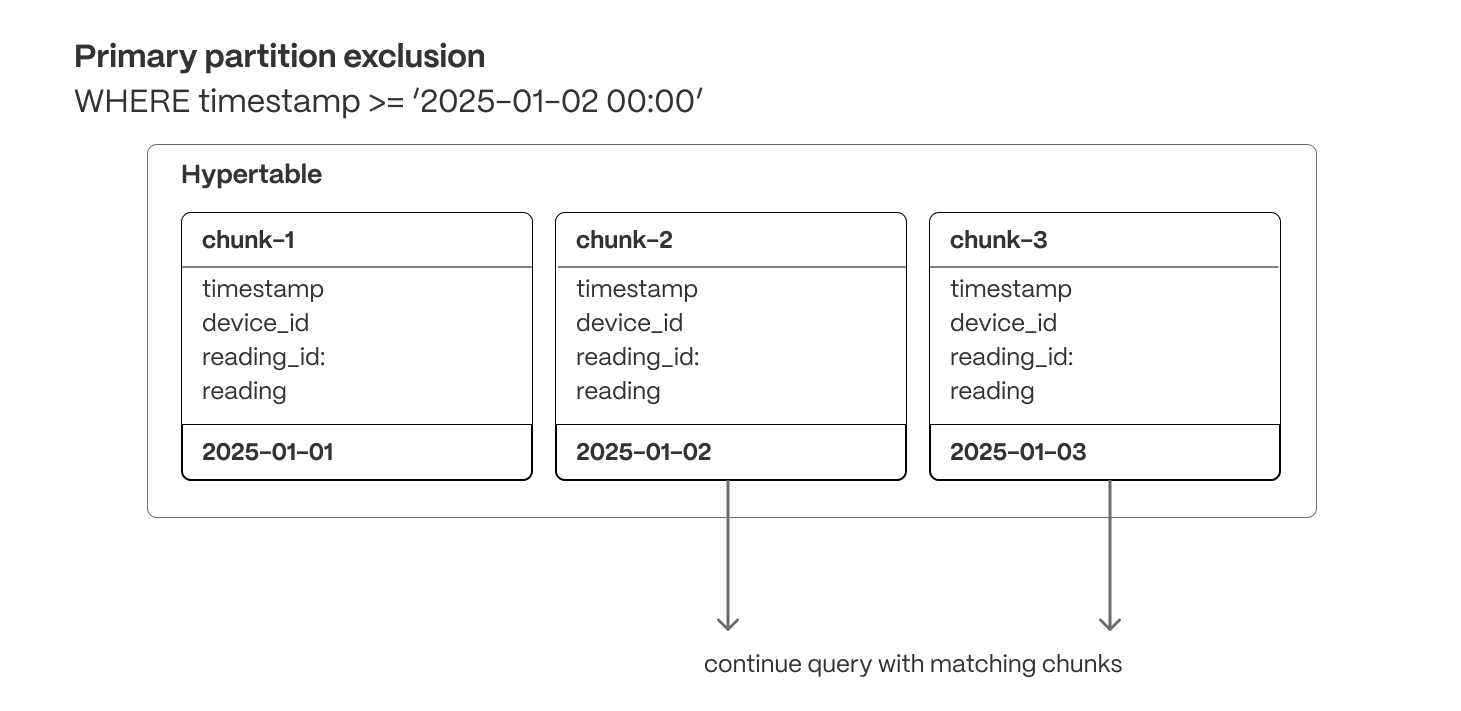

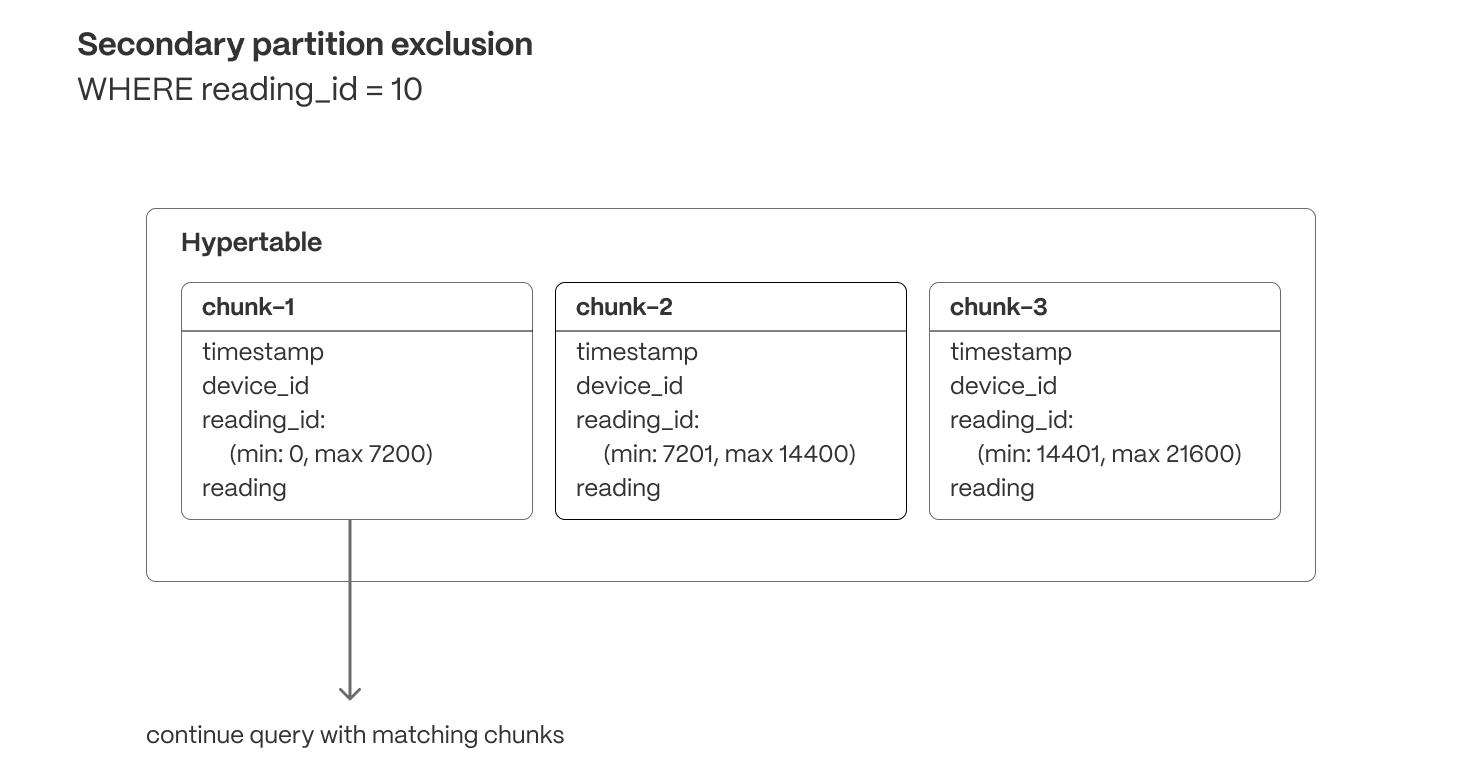

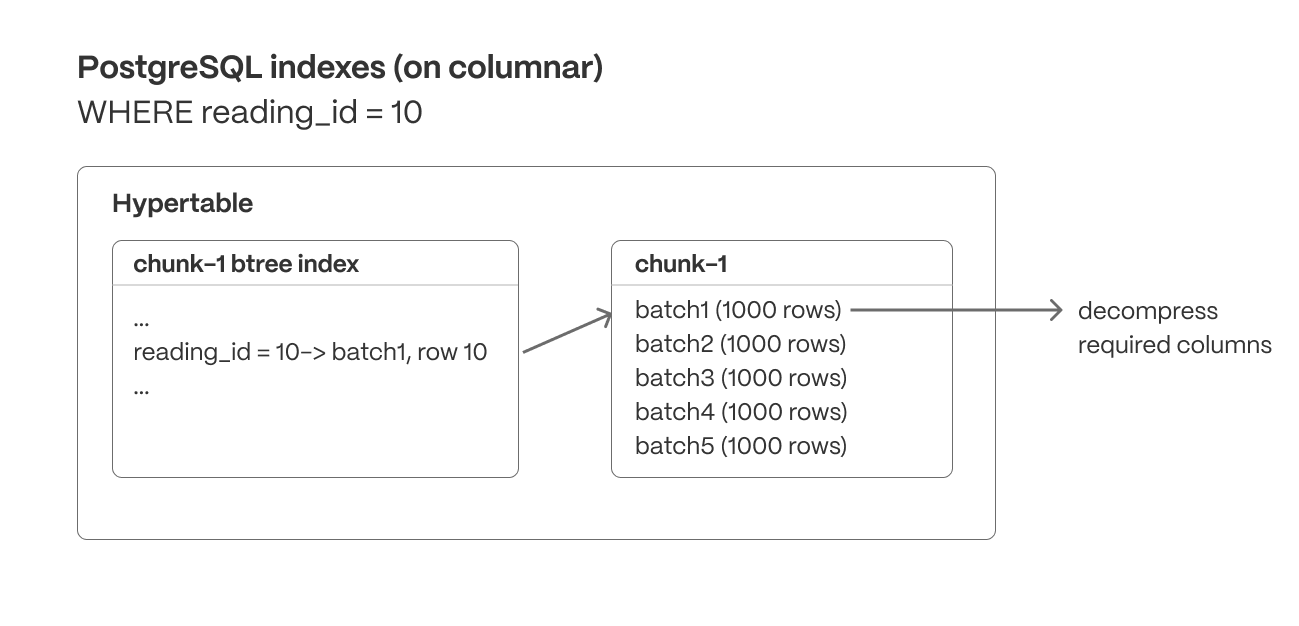

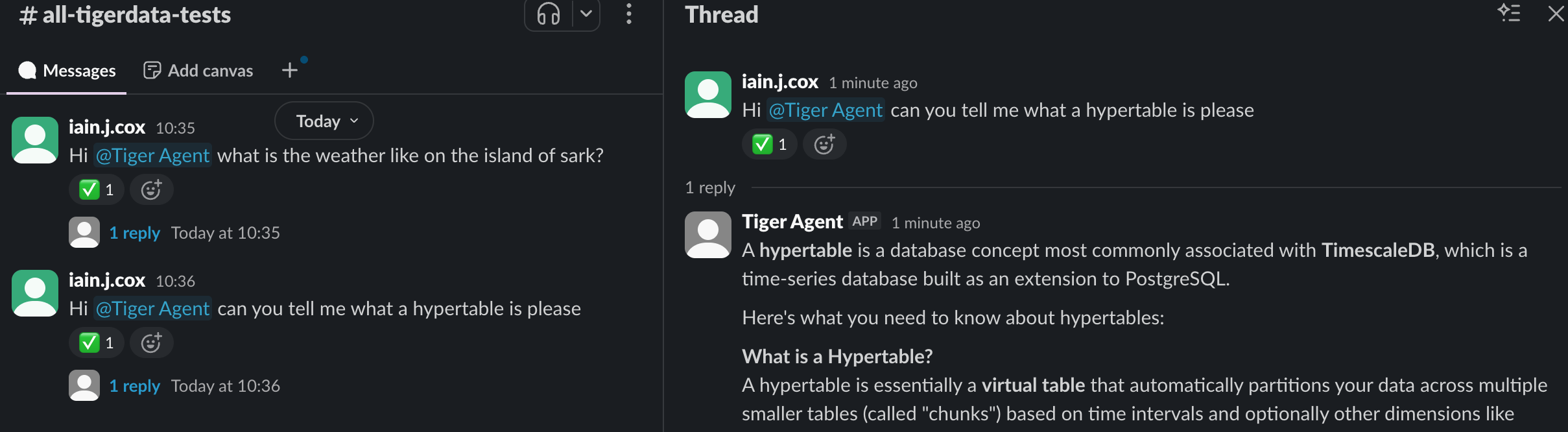

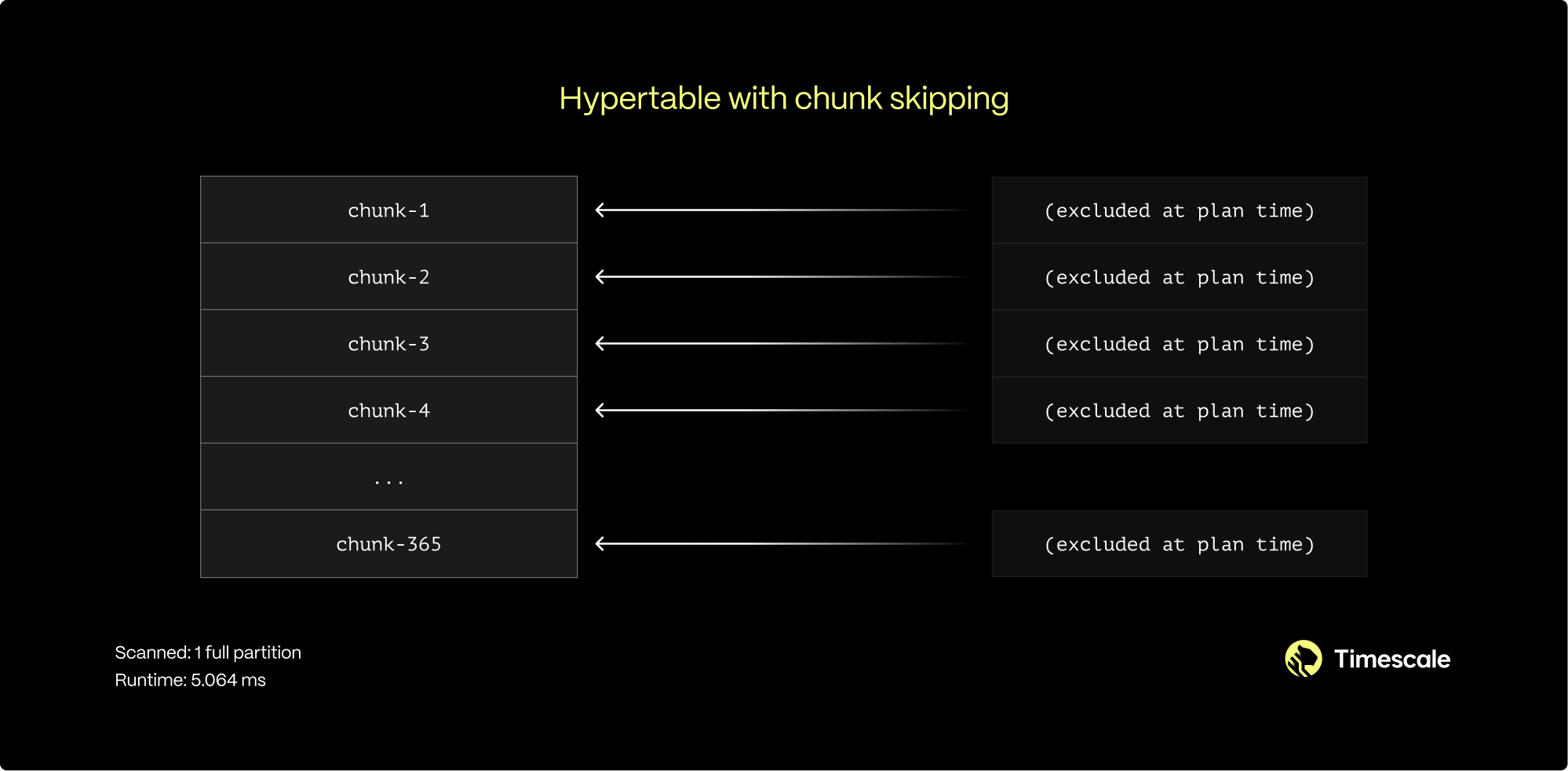

Time-series data represents the way a system, process, or behavior changes over time. Hypertables are Postgres tables that help you improve insert and query performance by automatically partitioning your data by time. Each hypertable is made up of child tables called chunks. Each chunk is assigned a range of time, and only contains data from that range. When you run a query, TimescaleDB identifies the correct chunk and runs the query on it, instead of going through the entire table. You can also tune hypertables to increase performance even more.

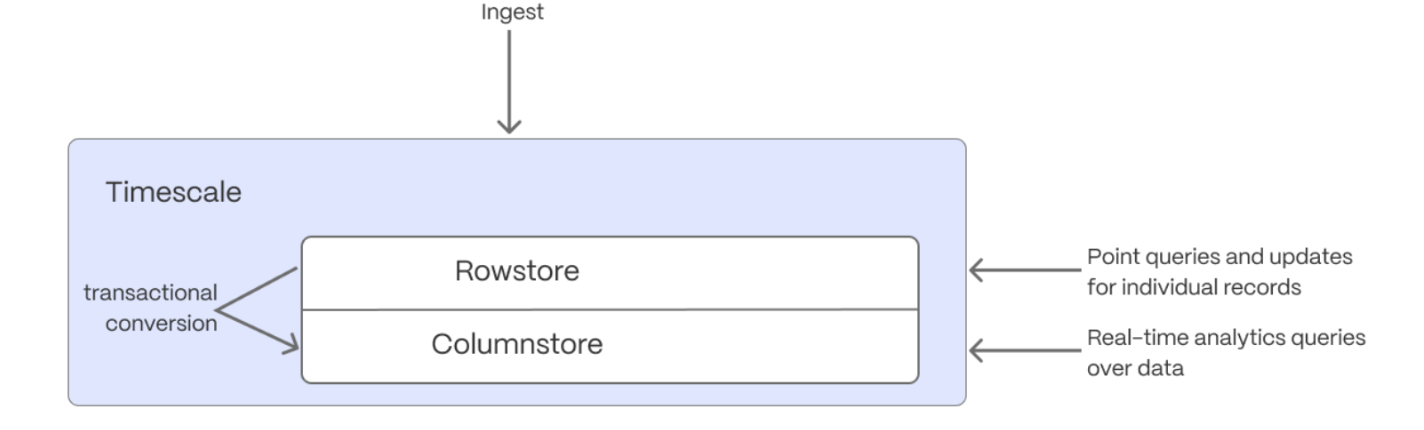

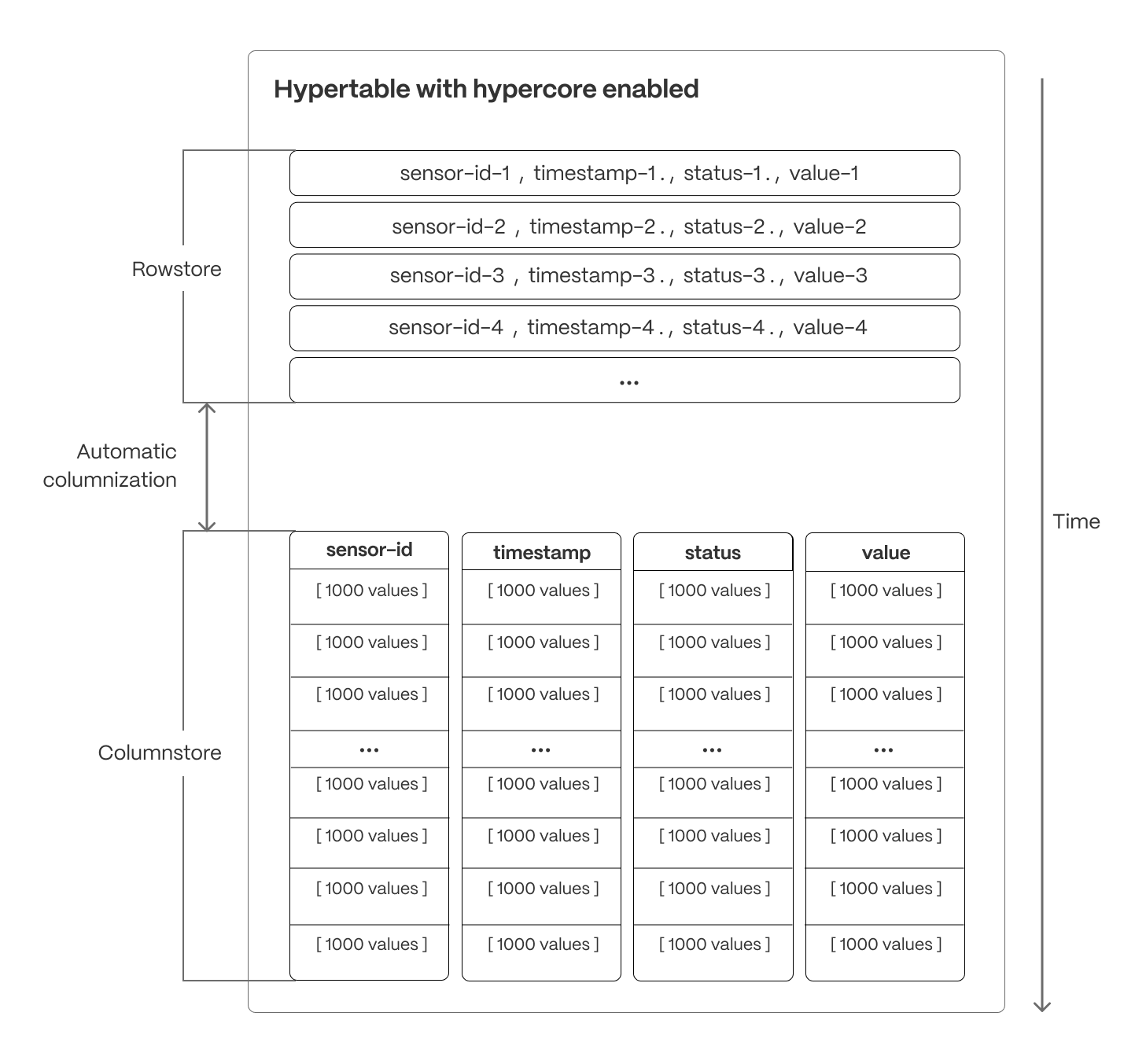

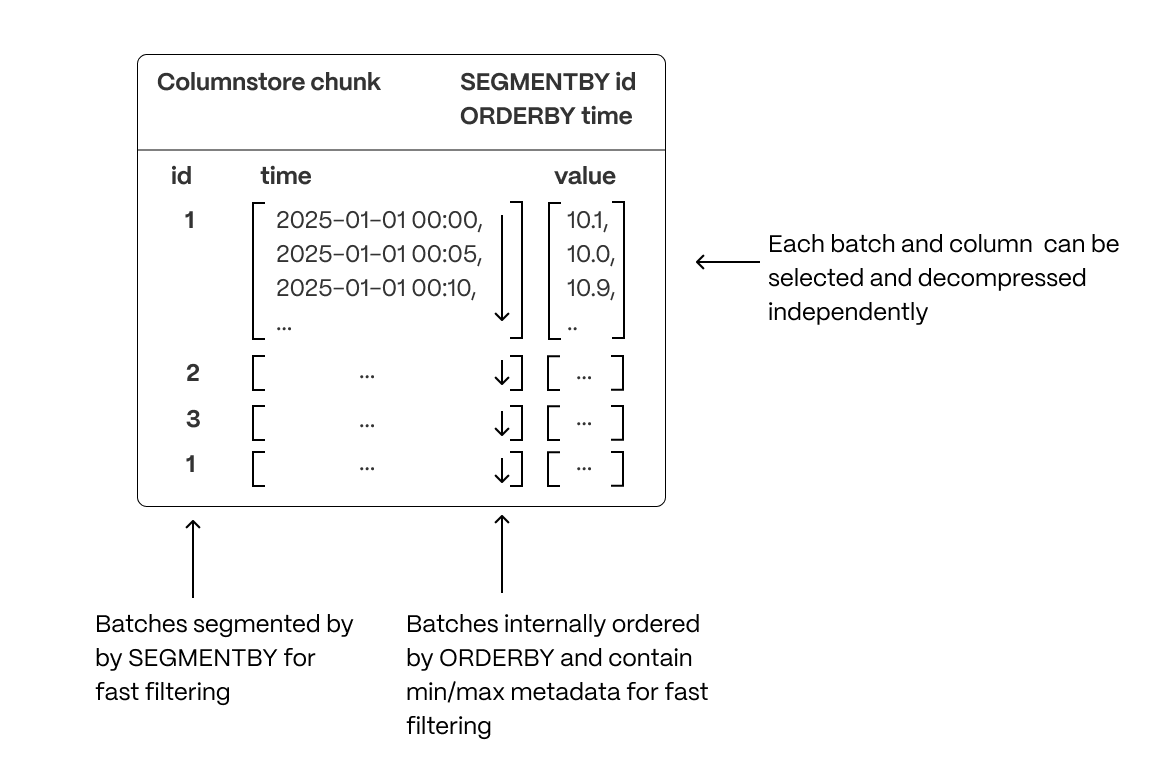

Hypercore is the hybrid row-columnar storage engine in TimescaleDB used by hypertables. Traditional databases force a trade-off between fast inserts (row-based storage) and efficient analytics (columnar storage). Hypercore eliminates this trade-off, allowing real-time analytics without sacrificing transactional capabilities.

Hypercore dynamically stores data in the most efficient format for its lifecycle:

- Row-based storage for recent data: the most recent chunk (and possibly more) is always stored in the rowstore, ensuring fast inserts, updates, and low-latency single record queries. Additionally, row-based storage is used as a writethrough for inserts and updates to columnar storage.

- Columnar storage for analytical performance: chunks are automatically compressed into the columnstore, optimizing storage efficiency and accelerating analytical queries.

Unlike traditional columnar databases, hypercore allows data to be inserted or modified at any stage, making it a flexible solution for both high-ingest transactional workloads and real-time analytics—within a single database.

Hypertables exist alongside regular Postgres tables. You use regular Postgres tables for relational data, and interact with hypertables and regular Postgres tables in the same way.

This section shows you how to create regular tables and hypertables, and import relational and time-series data from external files.

Import some time-series data into hypertables

- Unzip crypto_sample.zip to a

<local folder>.

This test dataset contains:

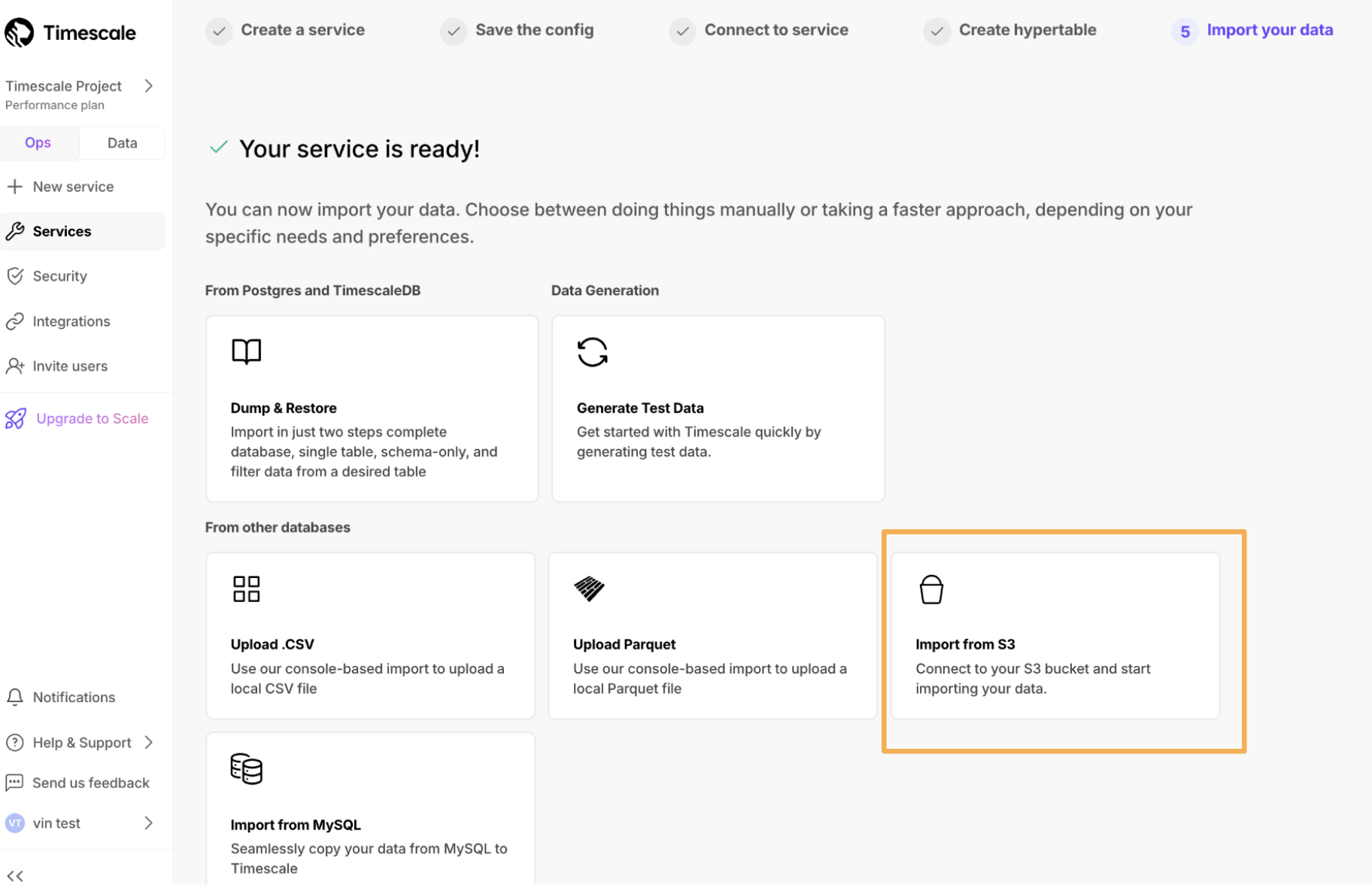

- Second-by-second data for the most-traded crypto-assets. This time-series data is best suited for optimization in a [hypertable][hypertables-section]. - A list of asset symbols and company names. This is best suited for a regular relational table.To import up to 100 GB of data directly from your current Postgres-based database, migrate with downtime using native Postgres tooling. To seamlessly import 100GB-10TB+ of data, use the live migration tooling supplied by Tiger Data. To add data from non-Postgres data sources, see Import and ingest data.

- Upload data into a hypertable:

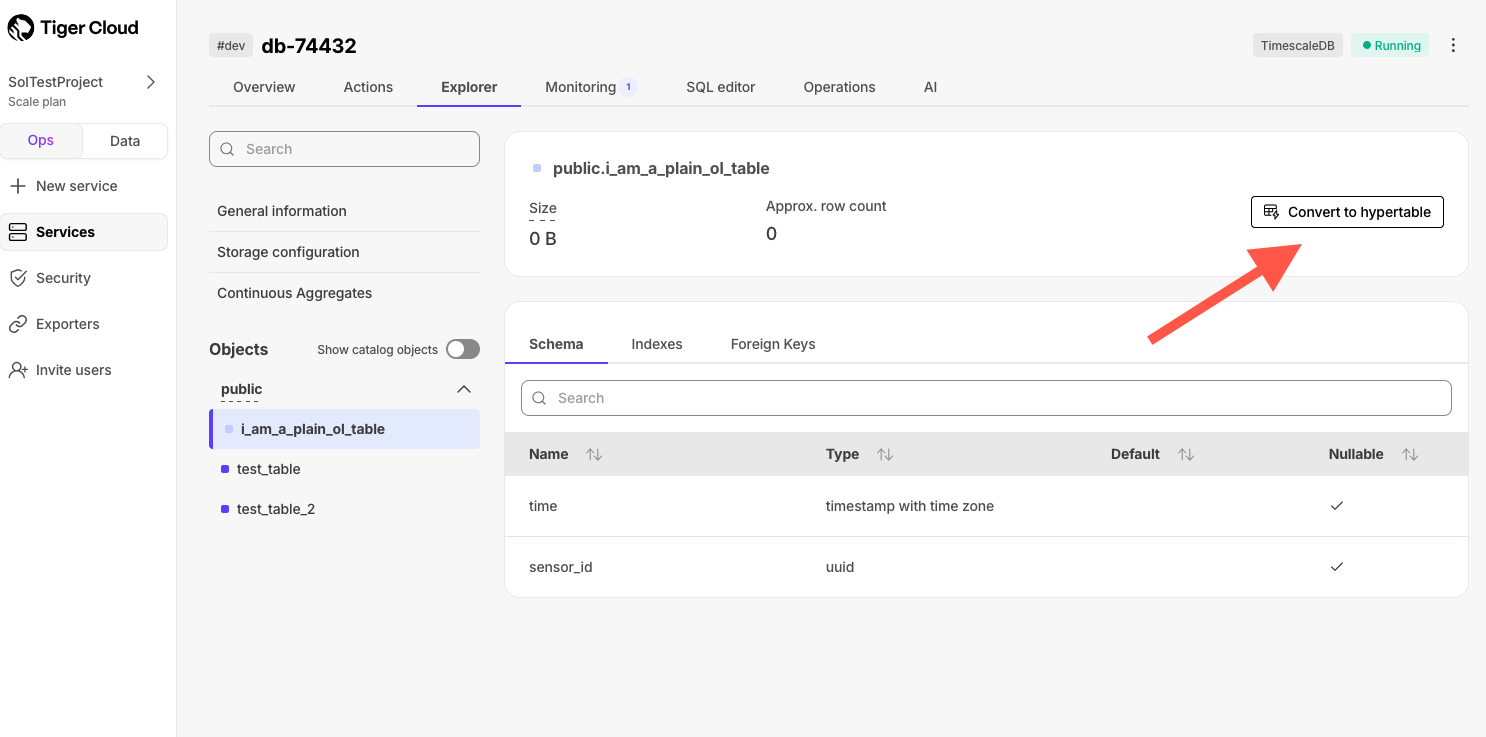

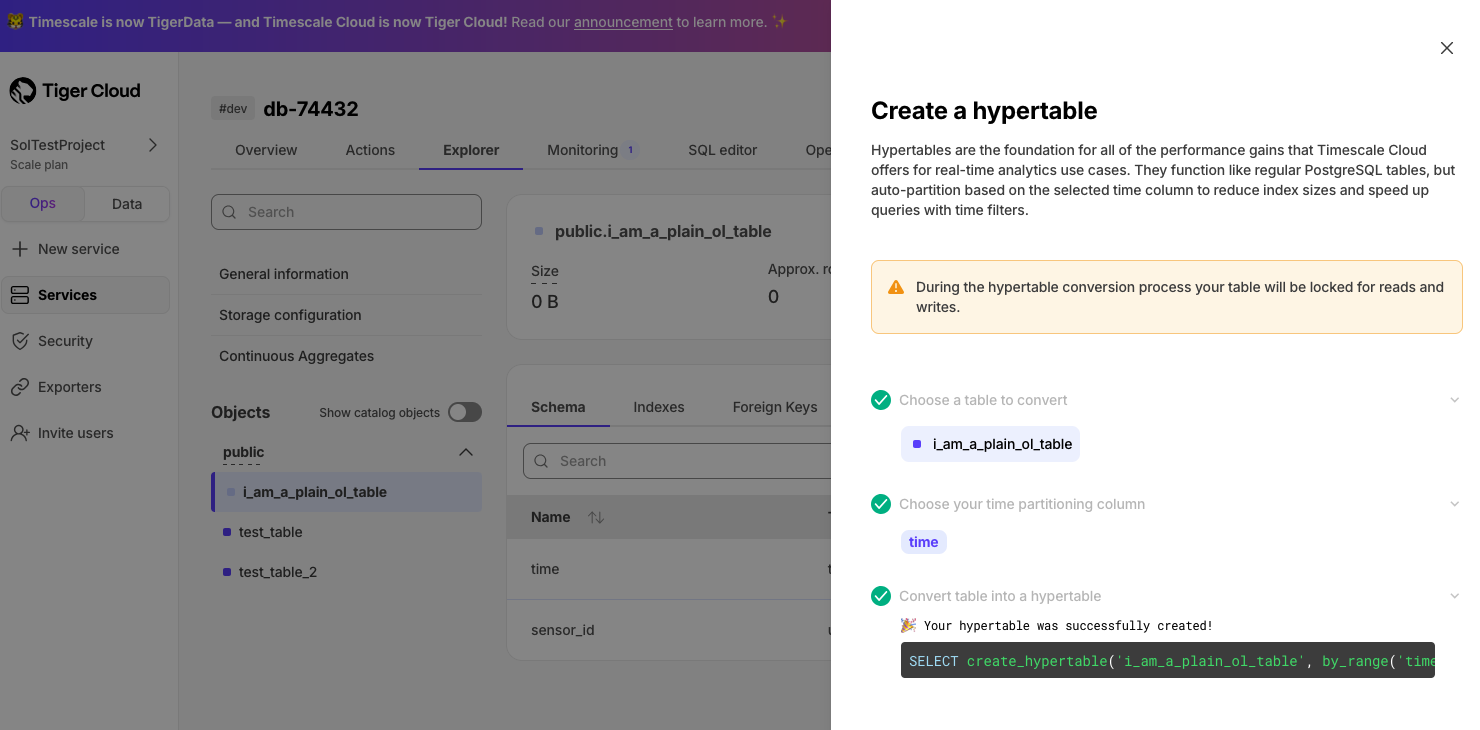

To more fully understand how to create a hypertable, how hypertables work, and how to optimize them for performance by tuning chunk intervals and enabling chunk skipping, see the hypertables documentation.

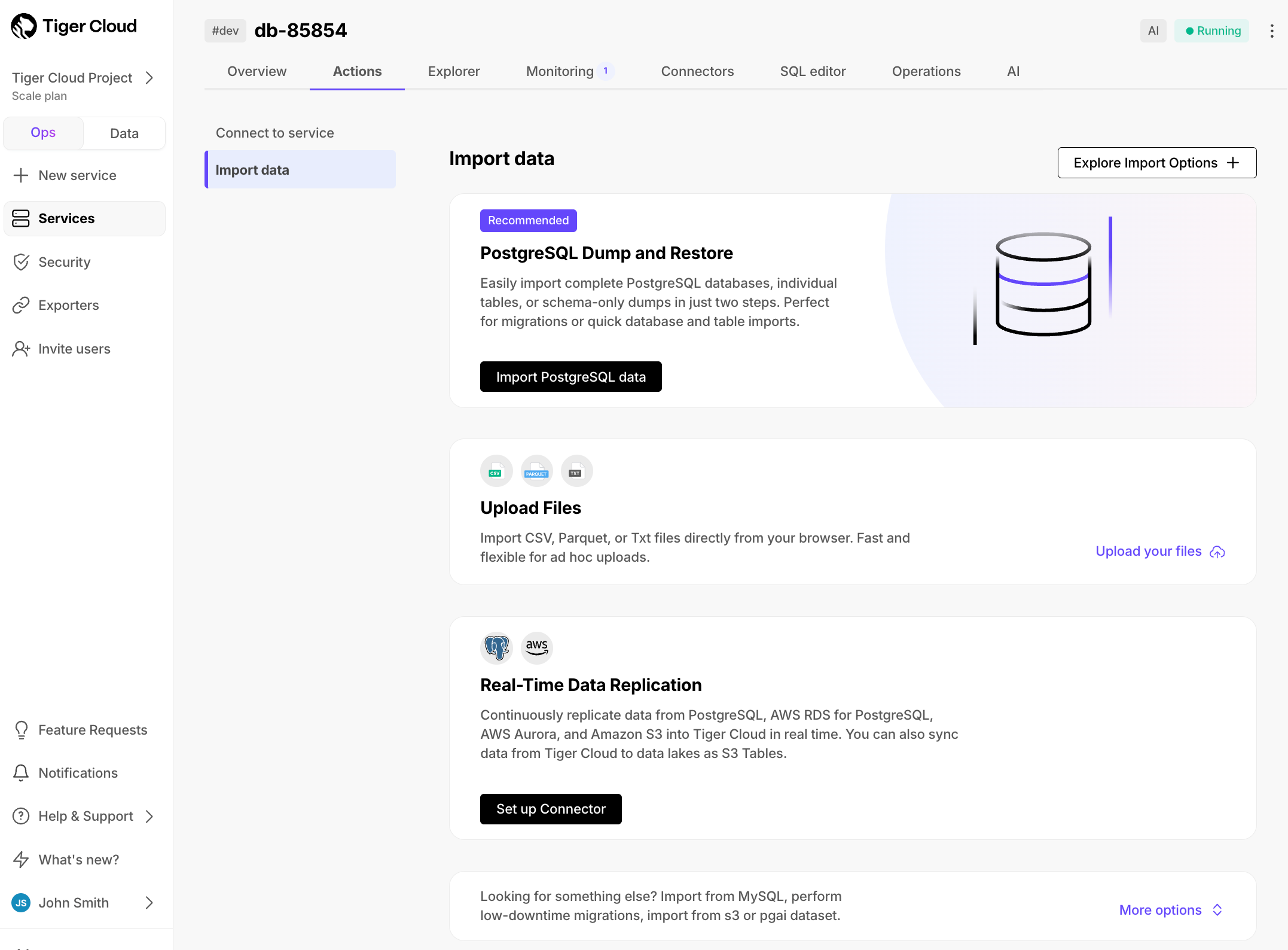

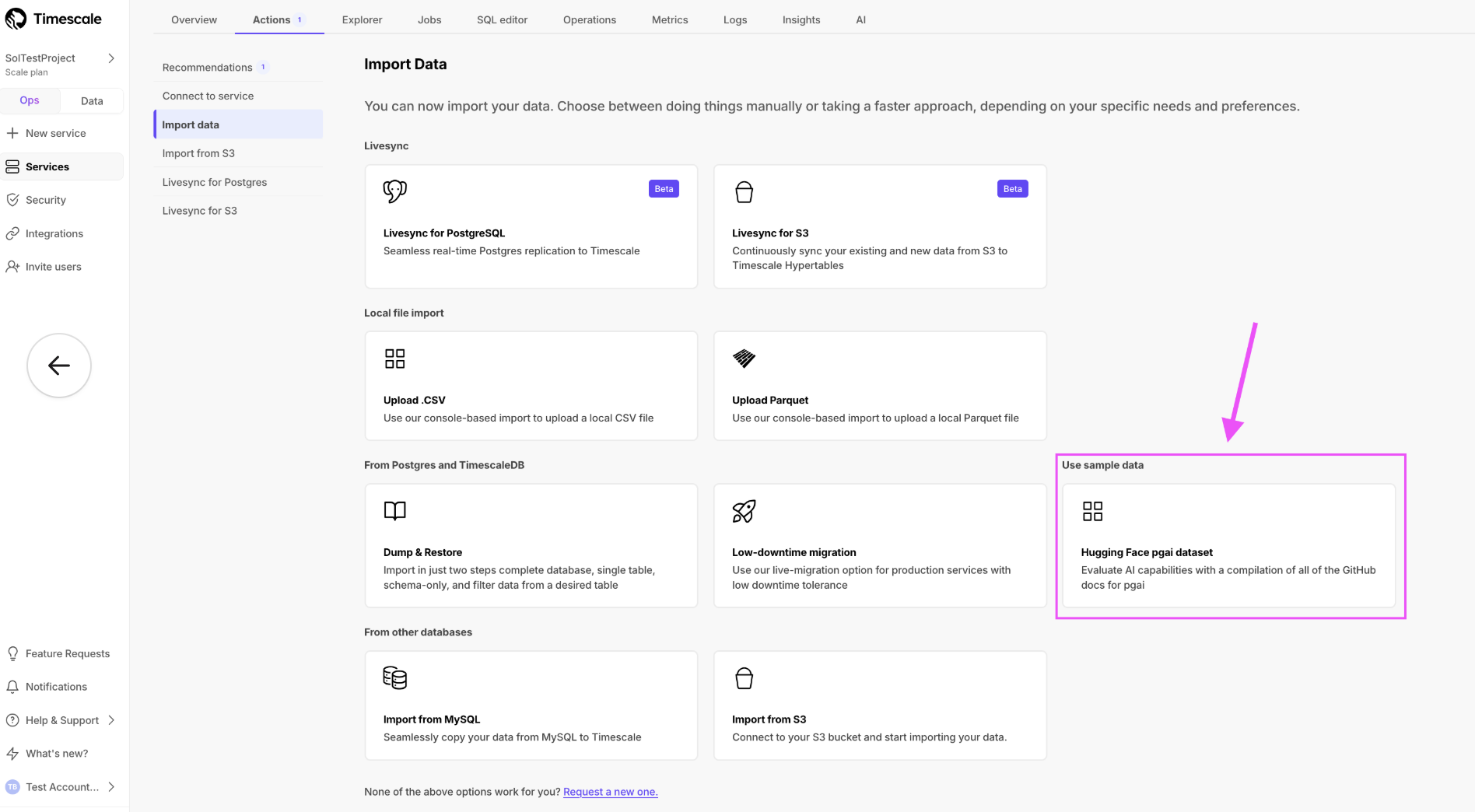

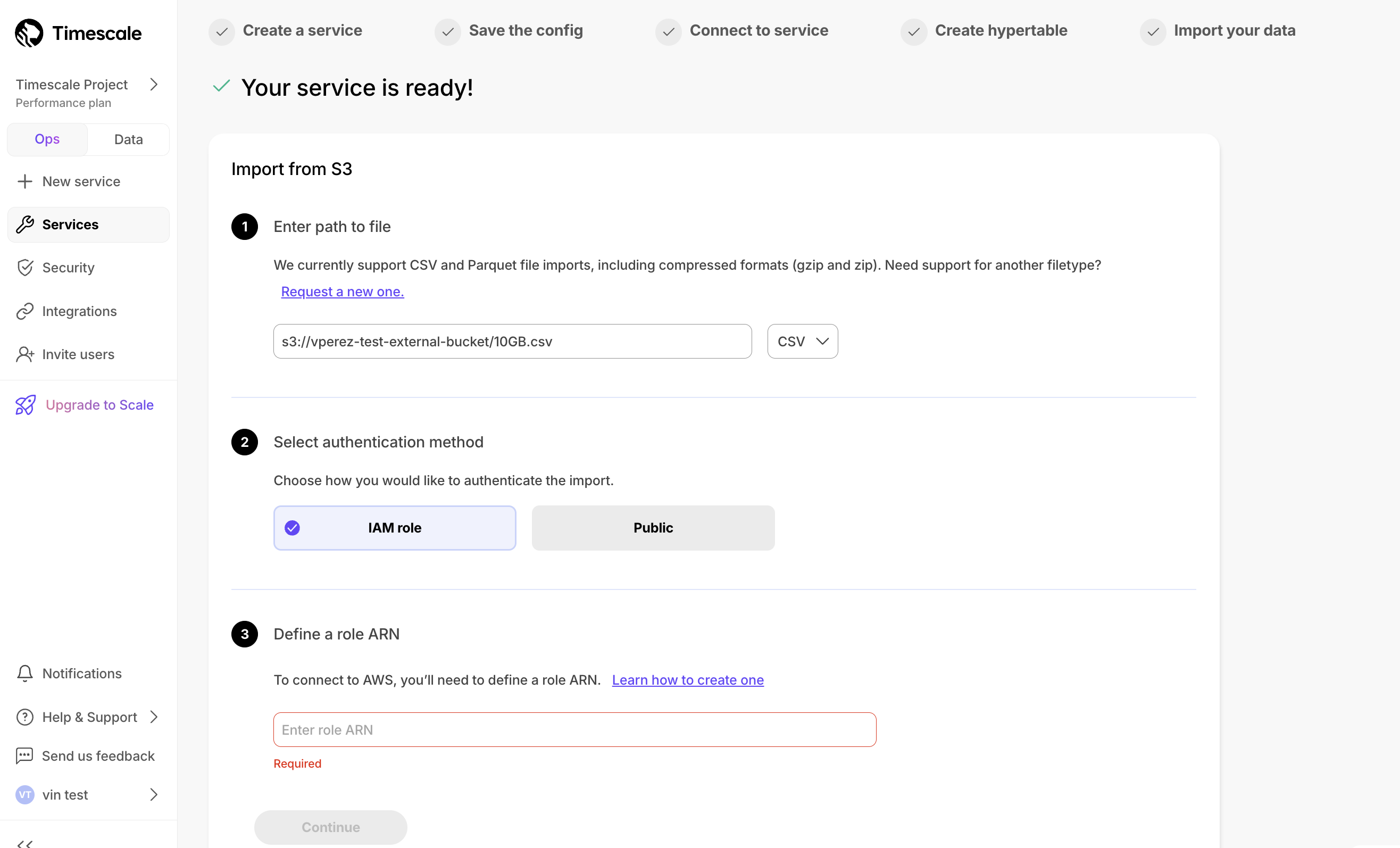

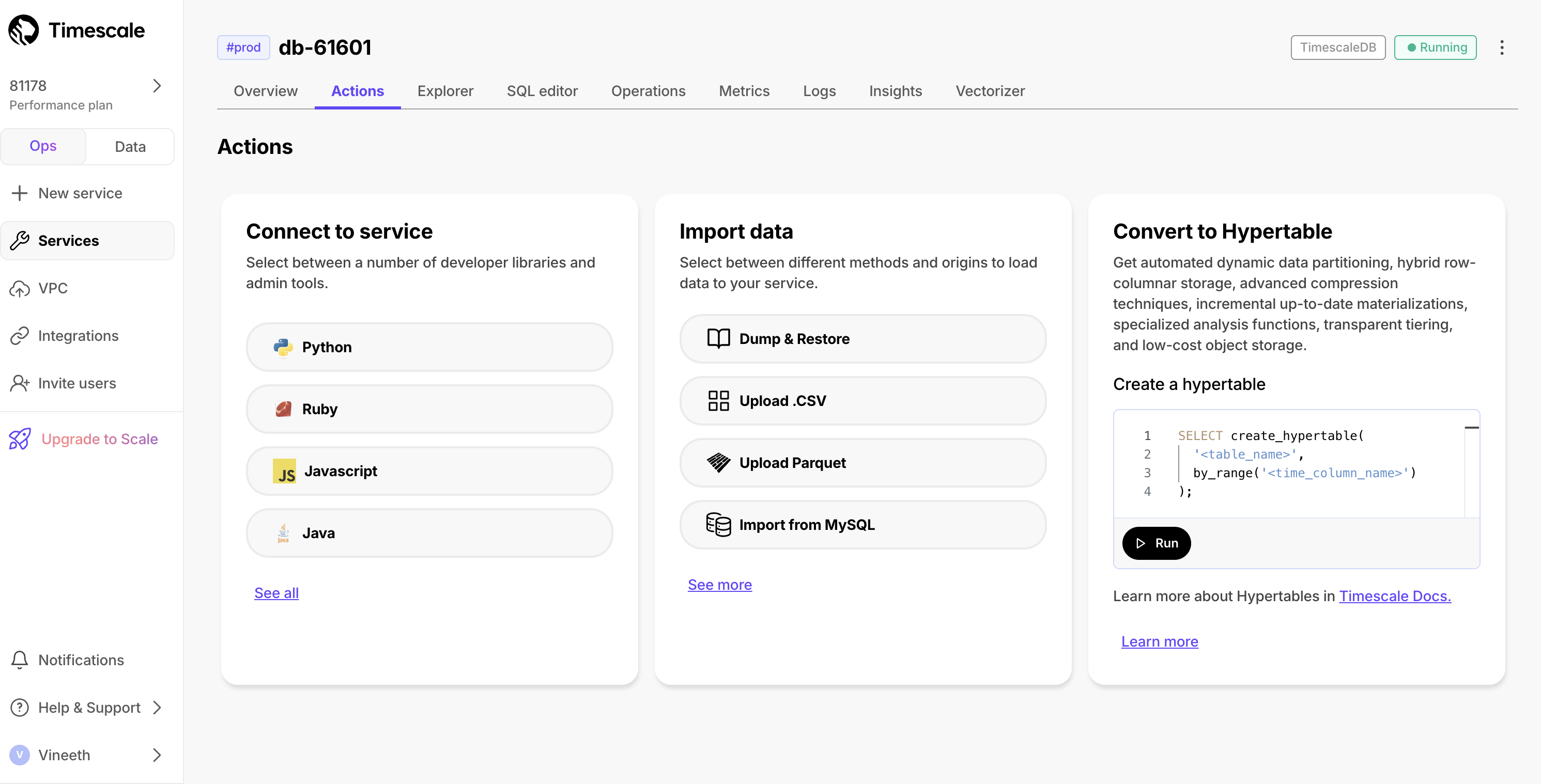

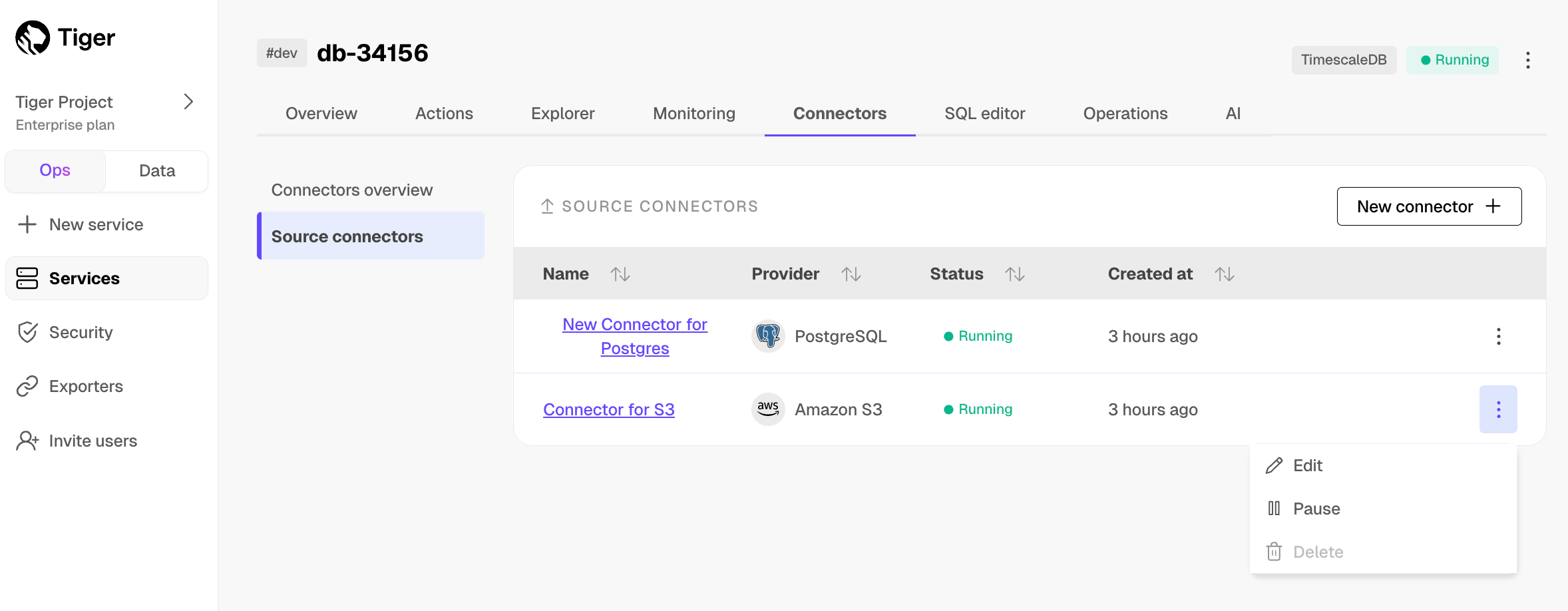

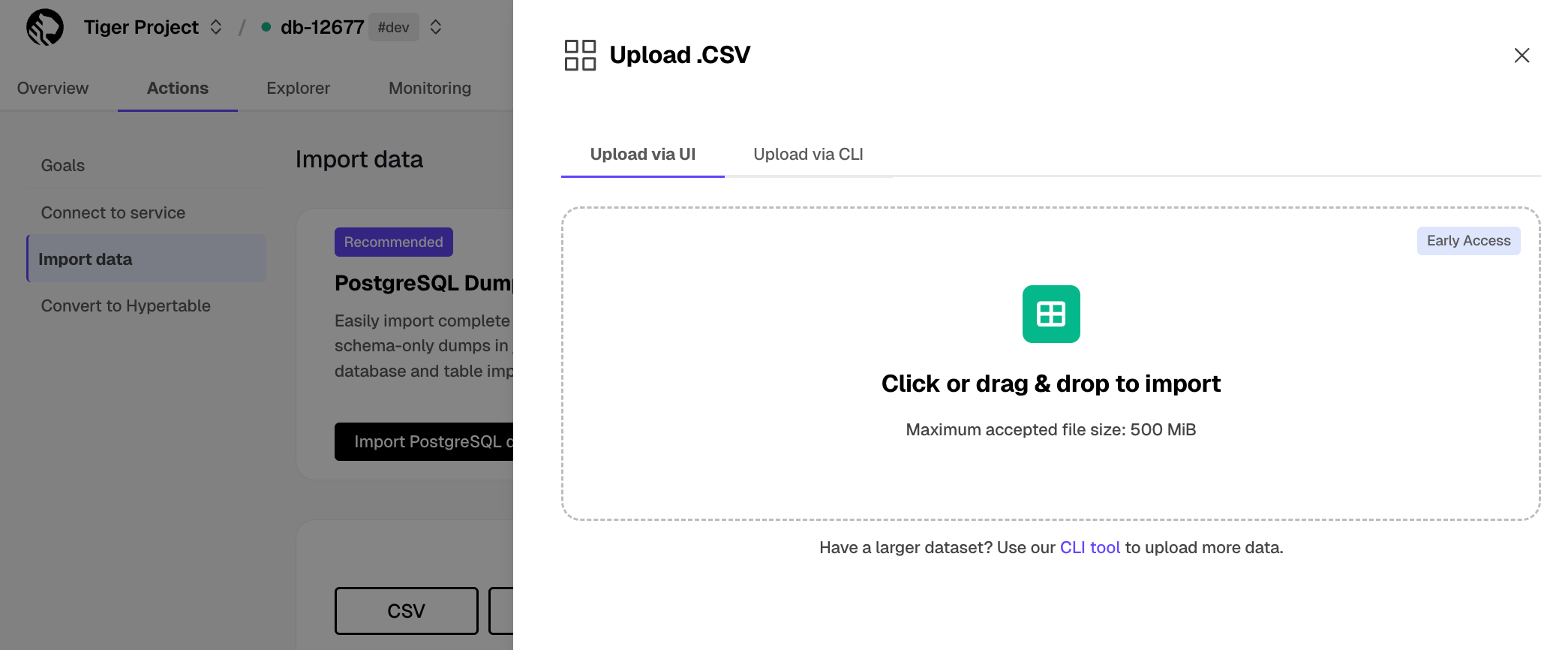

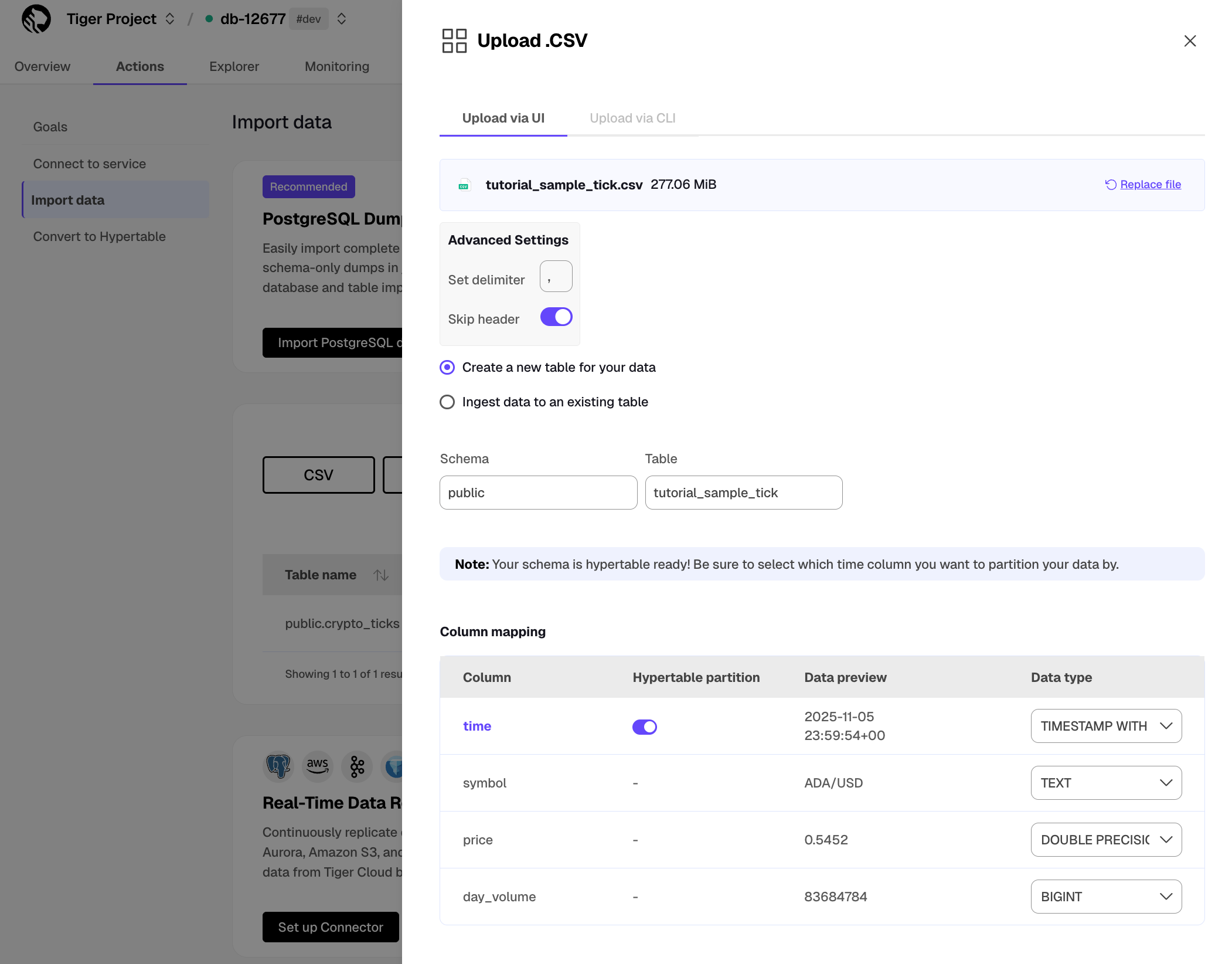

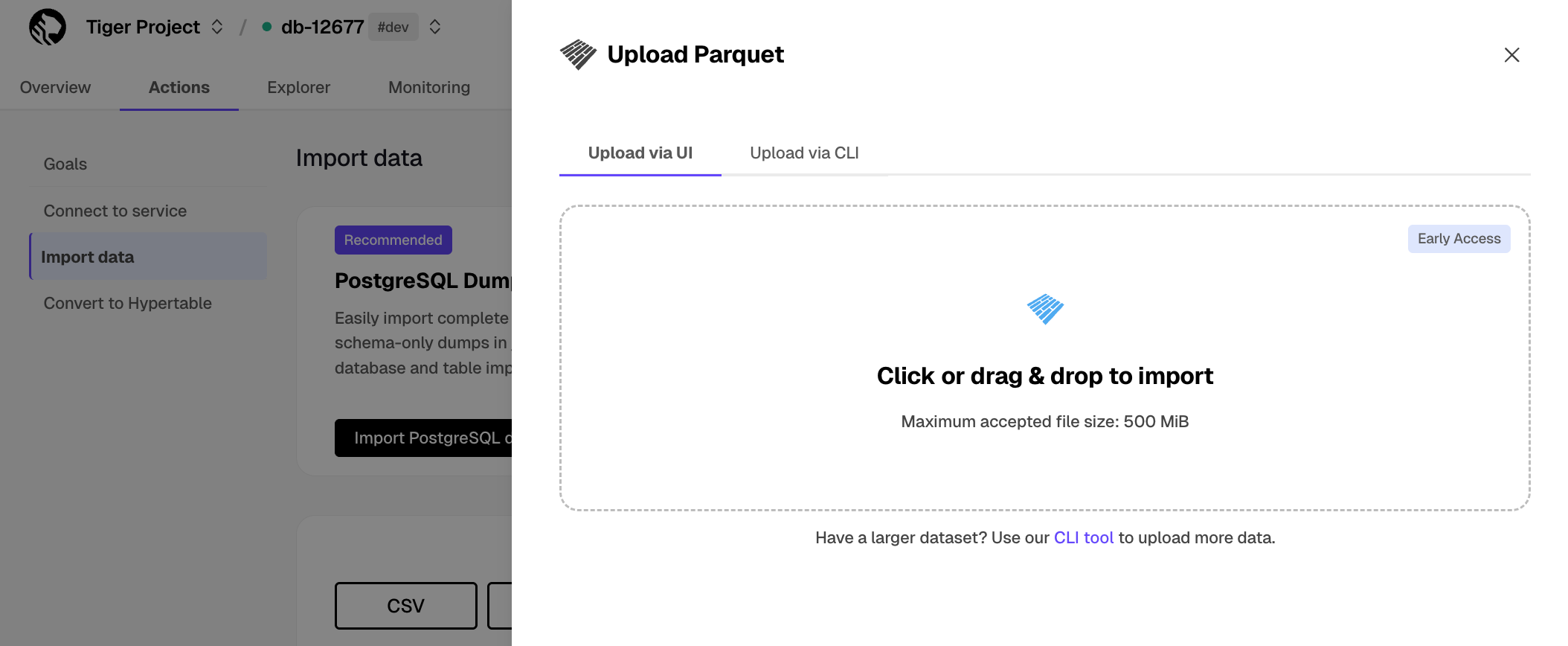

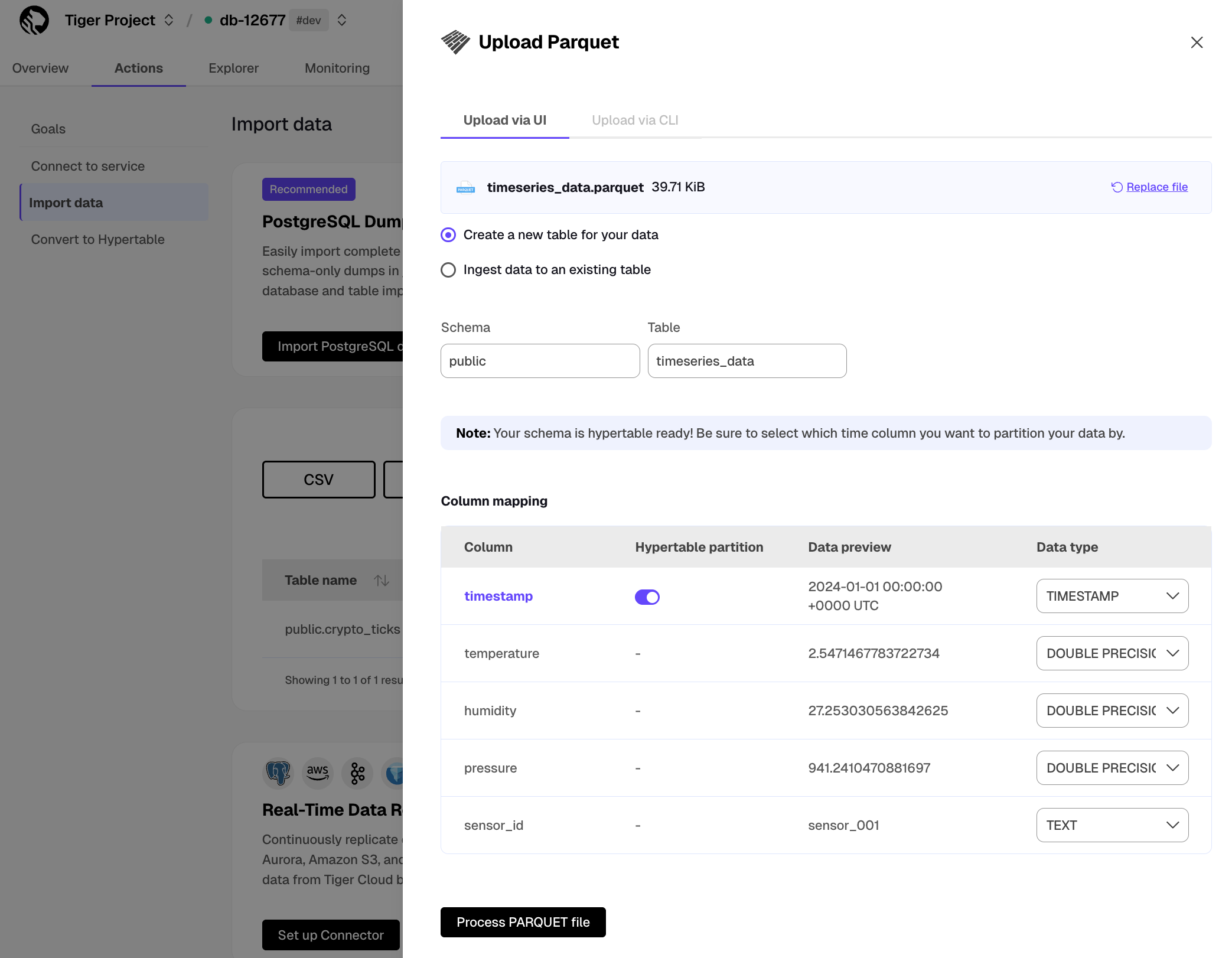

The Tiger Cloud Console data upload creates hypertables and relational tables from the data you are uploading: 1. In [Tiger Cloud Console][portal-ops-mode], select the service to add data to, then click `Actions` > `Import data` > `Upload .CSV`. 1. Click to browse, or drag and drop `<local folder>/tutorial_sample_tick.csv` to upload. 1. Leave the default settings for the delimiter, skipping the header, and creating a new table. 1. In `Table`, provide `crypto_ticks` as the new table name. 1. Enable `hypertable partition` for the `time` column and click `Process CSV file`. The upload wizard creates a hypertable containing the data from the CSV file. 1. When the data is uploaded, close `Upload .CSV`. If you want to have a quick look at your data, press `Run` . 1. Repeat the process with `<local folder>/tutorial_sample_assets.csv` and rename to `crypto_assets`. There is no time-series data in this table, so you don't see the `hypertable partition` option.In Terminal, navigate to

<local folder>and connect to your service.psql -d "postgres://<username>:<password>@<host>:<port>/<database-name>"You use your connection details to fill in this Postgres connection string.

Create tables for the data to import:

For the time-series data:

In your sql client, create a hypertable:

Create a hypertable for your time-series data using CREATE TABLE. For efficient queries, remember to

segmentbythe column you will use most often to filter your data. For example:CREATE TABLE crypto_ticks ( "time" TIMESTAMPTZ, symbol TEXT, price DOUBLE PRECISION, day_volume NUMERIC ) WITH ( tsdb.hypertable, tsdb.partition_column='time', tsdb.segmentby = 'symbol' );If you are self-hosting TimescaleDB v2.19.3 and below, create a Postgres relational table, then convert it using create_hypertable. You then enable hypercore with a call to ALTER TABLE.

For the relational data:

In your sql client, create a normal Postgres table:

CREATE TABLE crypto_assets ( symbol TEXT NOT NULL, name TEXT NOT NULL );

Speed up data ingestion:

When you set

timescaledb.enable_direct_compress_copyyour data gets compressed in memory during ingestion withCOPYstatements. By writing the compressed batches immediately in the columnstore, the IO footprint is significantly lower. Also, the columnstore policy you set is less important,INSERTalready produces compressed chunks.

- Unzip crypto_sample.zip to a

Please note that this feature is a tech preview and not production-ready. Using this feature could lead to regressed query performance and/or storage ratio, if the ingested batches are not correctly ordered or are of too high cardinality.

To enable in-memory data compression during ingestion:

SET timescaledb.enable_direct_compress_copy=on;

Important facts

- High cardinality use cases do not produce good batches and lead to degreaded query performance.

- The columnstore is optimized to store 1000 records per batch, which is the optimal format for ingestion per segment by.

- WAL records are written for the compressed batches rather than the individual tuples.

- Currently only

COPYis support,INSERTwill eventually follow. - Best results are achieved for batch ingestion with 1000 records or more, upper boundary is 10.000 records.

Continous Aggregates are not supported at the moment.

Upload the dataset to your service:

\COPY crypto_ticks from './tutorial_sample_tick.csv' DELIMITER ',' CSV HEADER;\COPY crypto_assets from './tutorial_sample_assets.csv' DELIMITER ',' CSV HEADER;

Have a quick look at your data

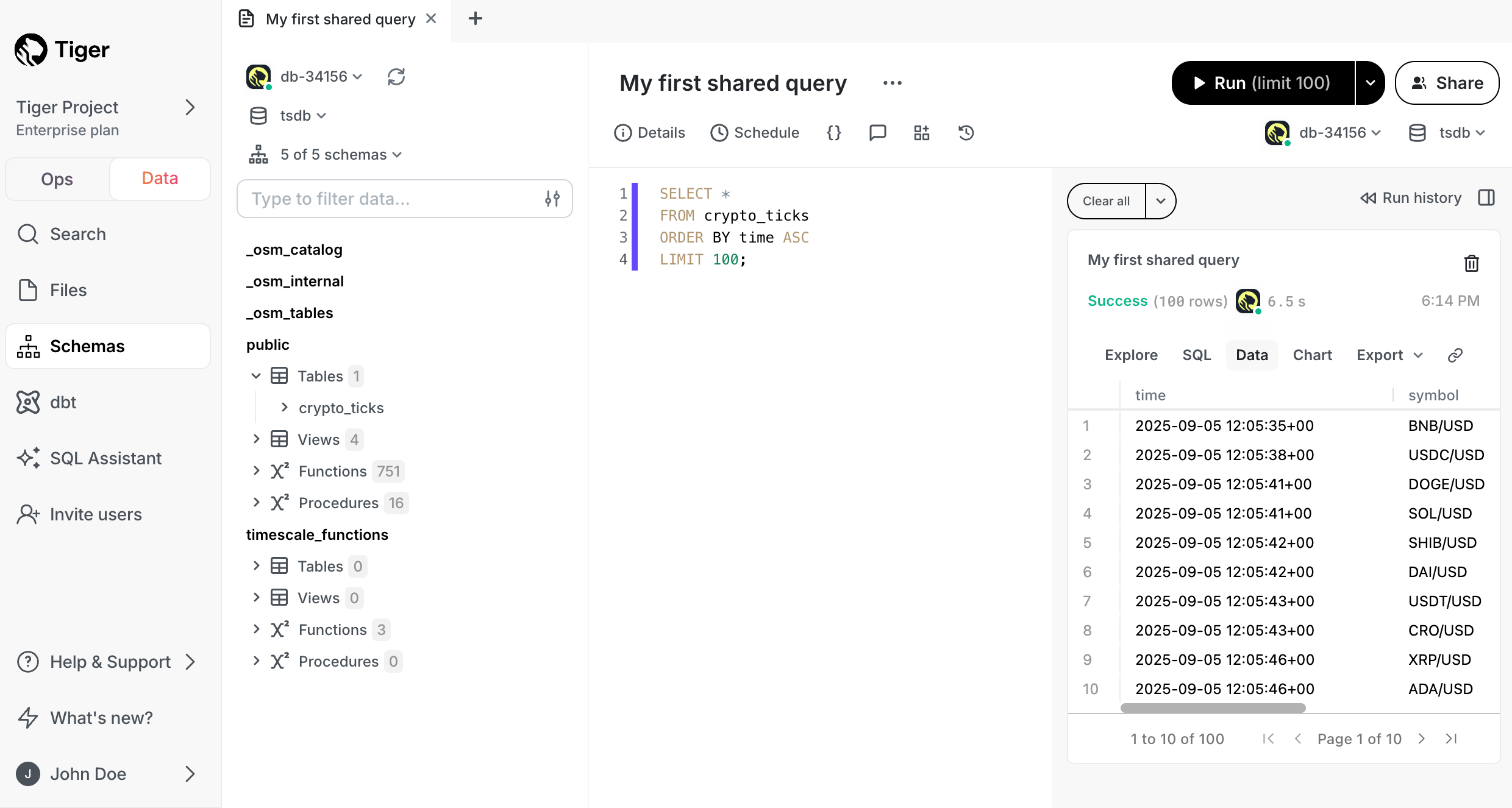

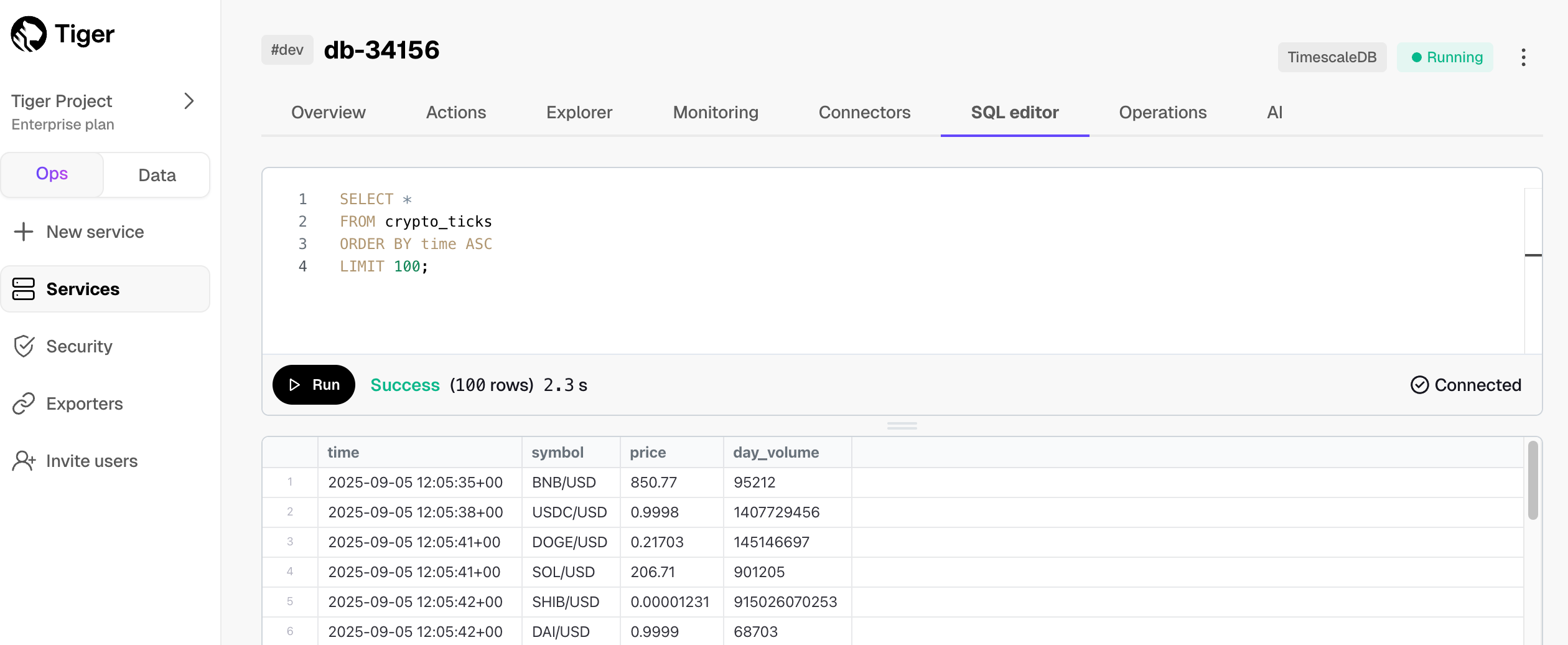

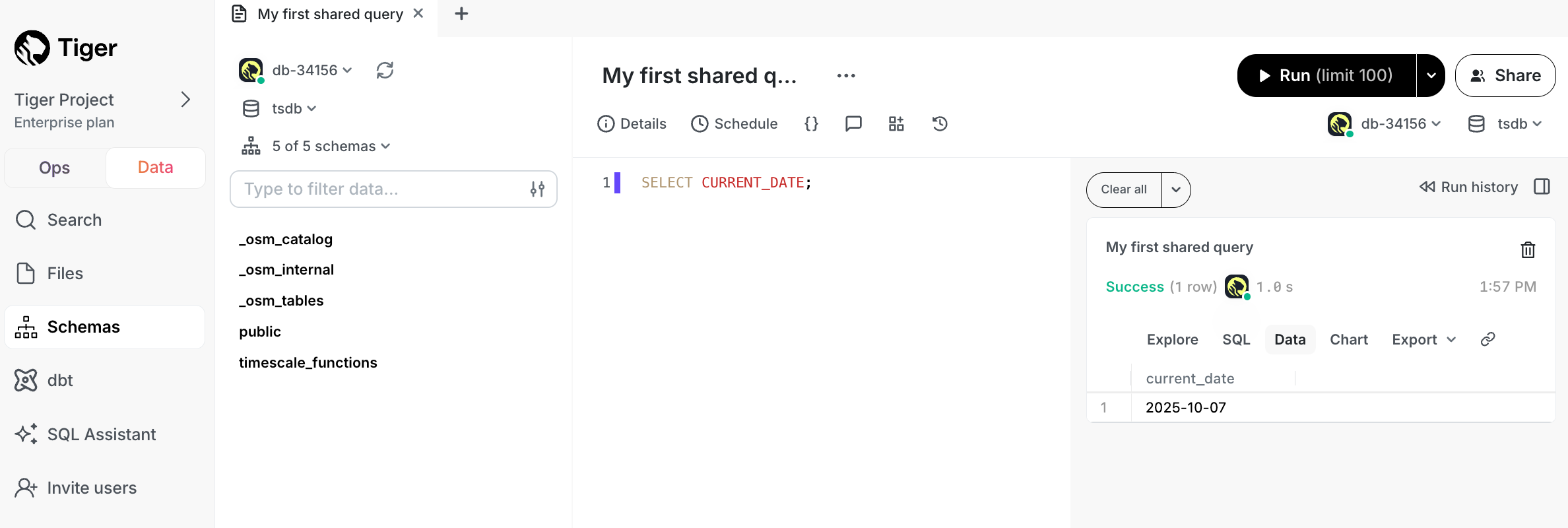

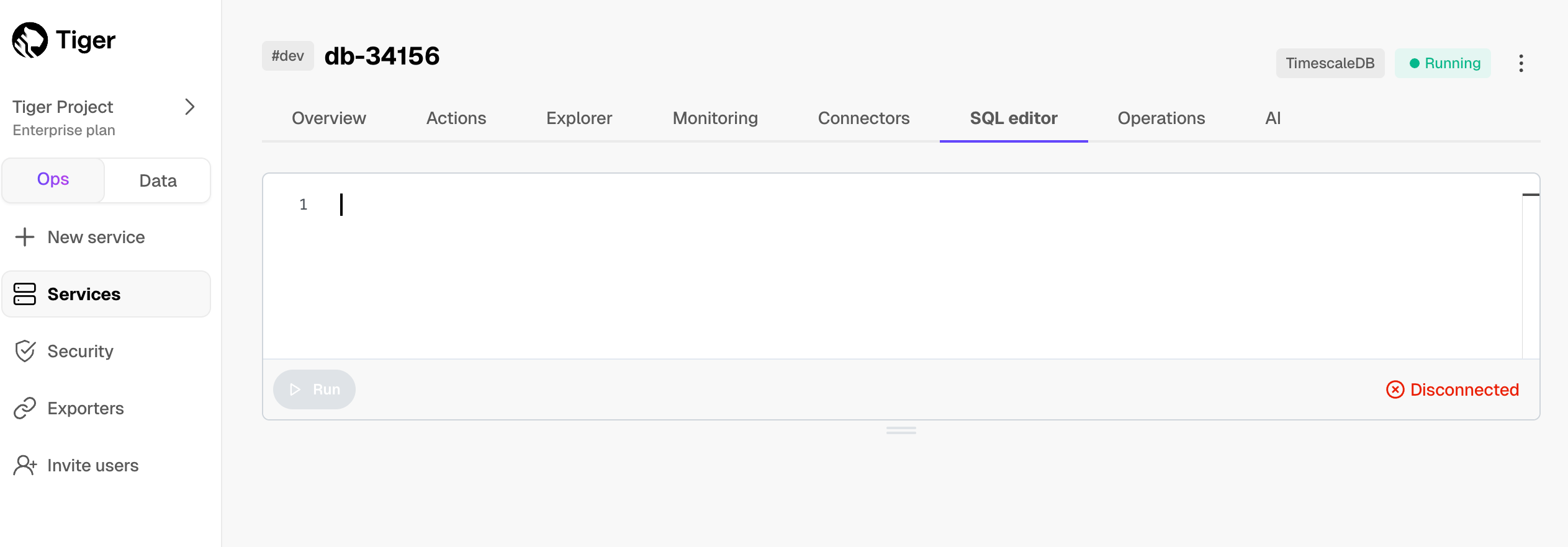

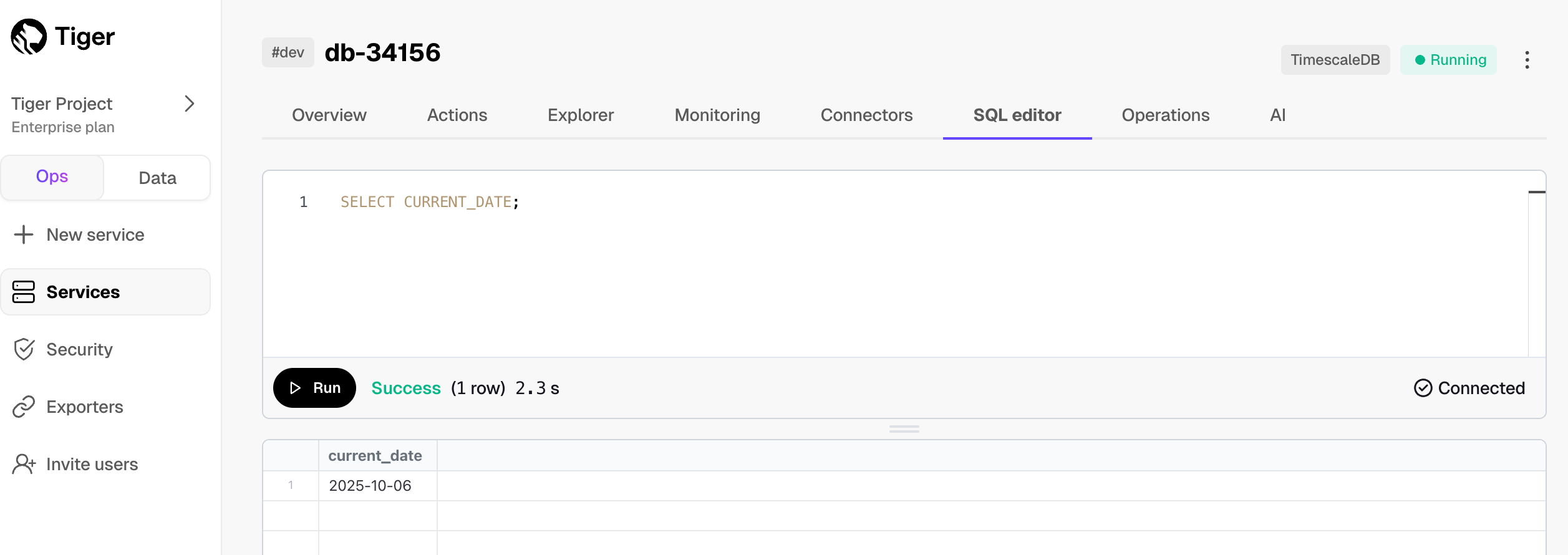

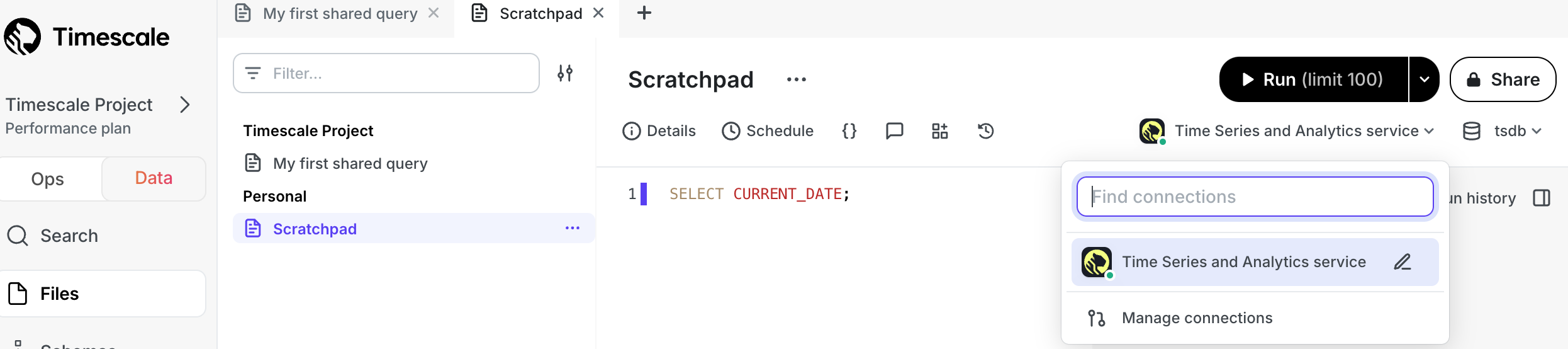

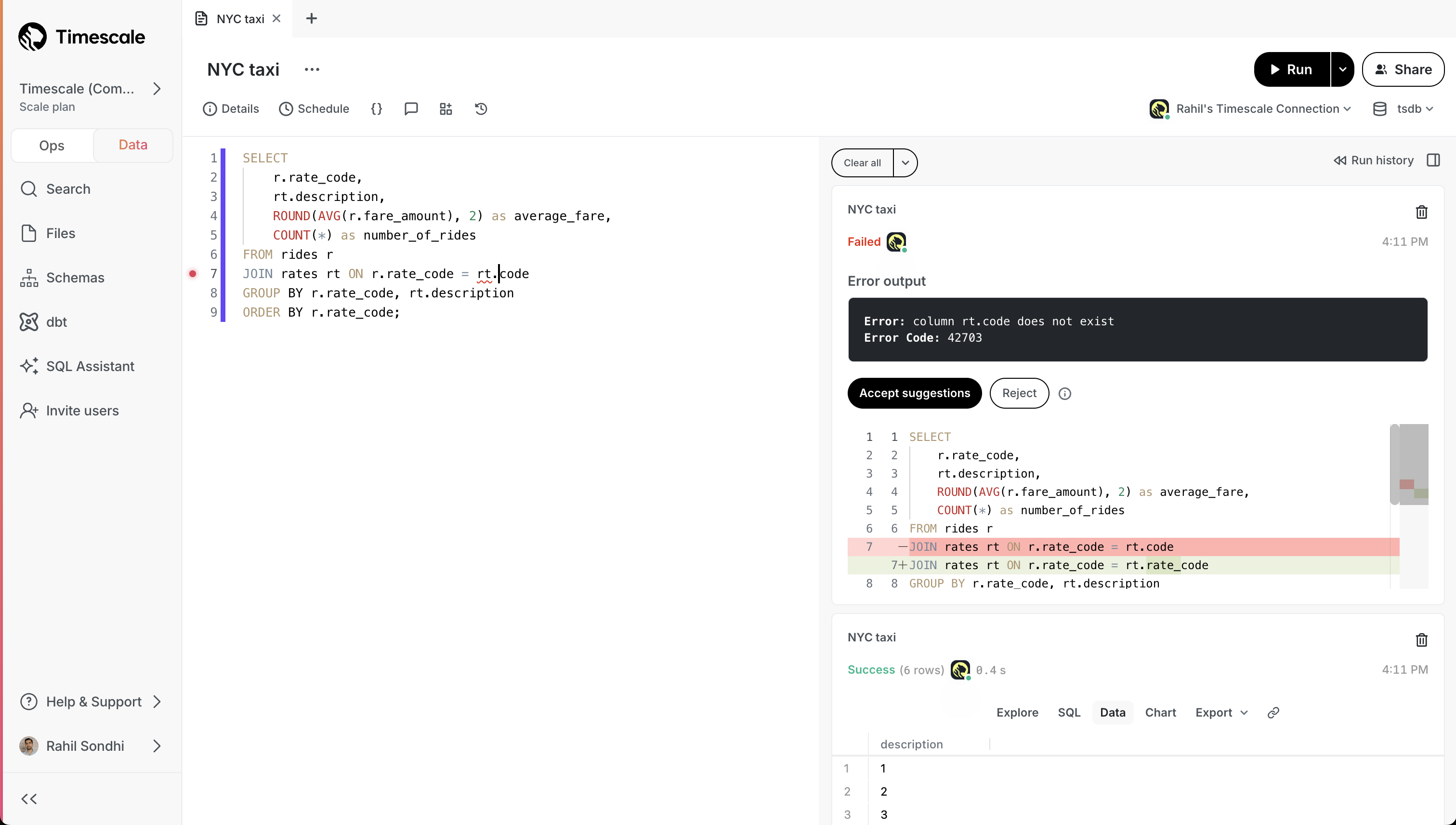

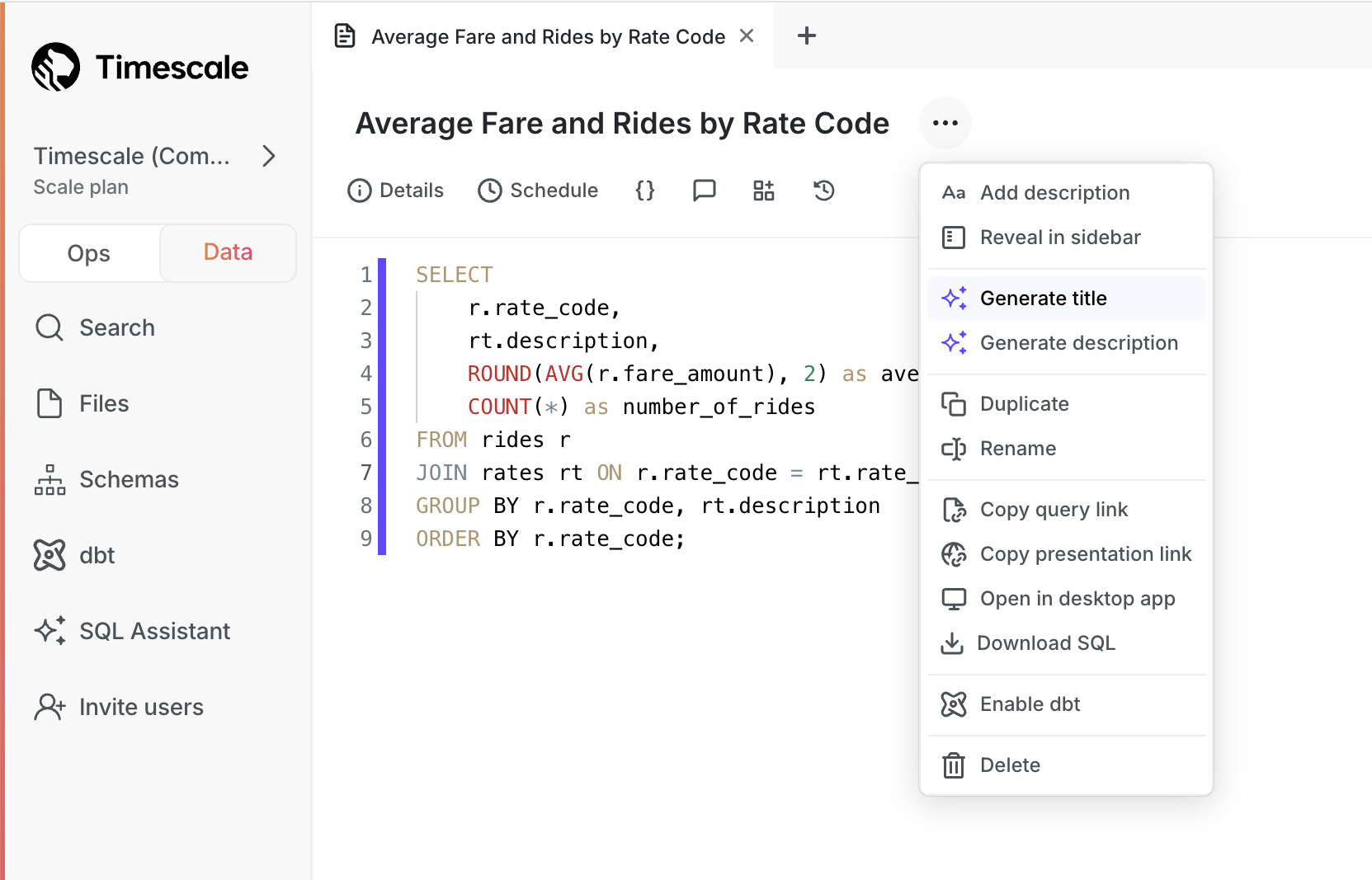

You query hypertables in exactly the same way as you would a relational Postgres table. Use one of the following SQL editors to run a query and see the data you uploaded:

- Data mode: write queries, visualize data, and share your results in Tiger Cloud Console for all your Tiger Cloud services. This feature is not available under the Free pricing plan.

- SQL editor: write, fix, and organize SQL faster and more accurately in Tiger Cloud Console for a Tiger Cloud service.

- psql: easily run queries on your Tiger Cloud services or self-hosted TimescaleDB deployment from Terminal.

Enhance query performance for analytics

Hypercore is the TimescaleDB hybrid row-columnar storage engine, designed specifically for real-time analytics and powered by time-series data. The advantage of hypercore is its ability to seamlessly switch between row-oriented and column-oriented storage. This flexibility enables TimescaleDB to deliver the best of both worlds, solving the key challenges in real-time analytics.

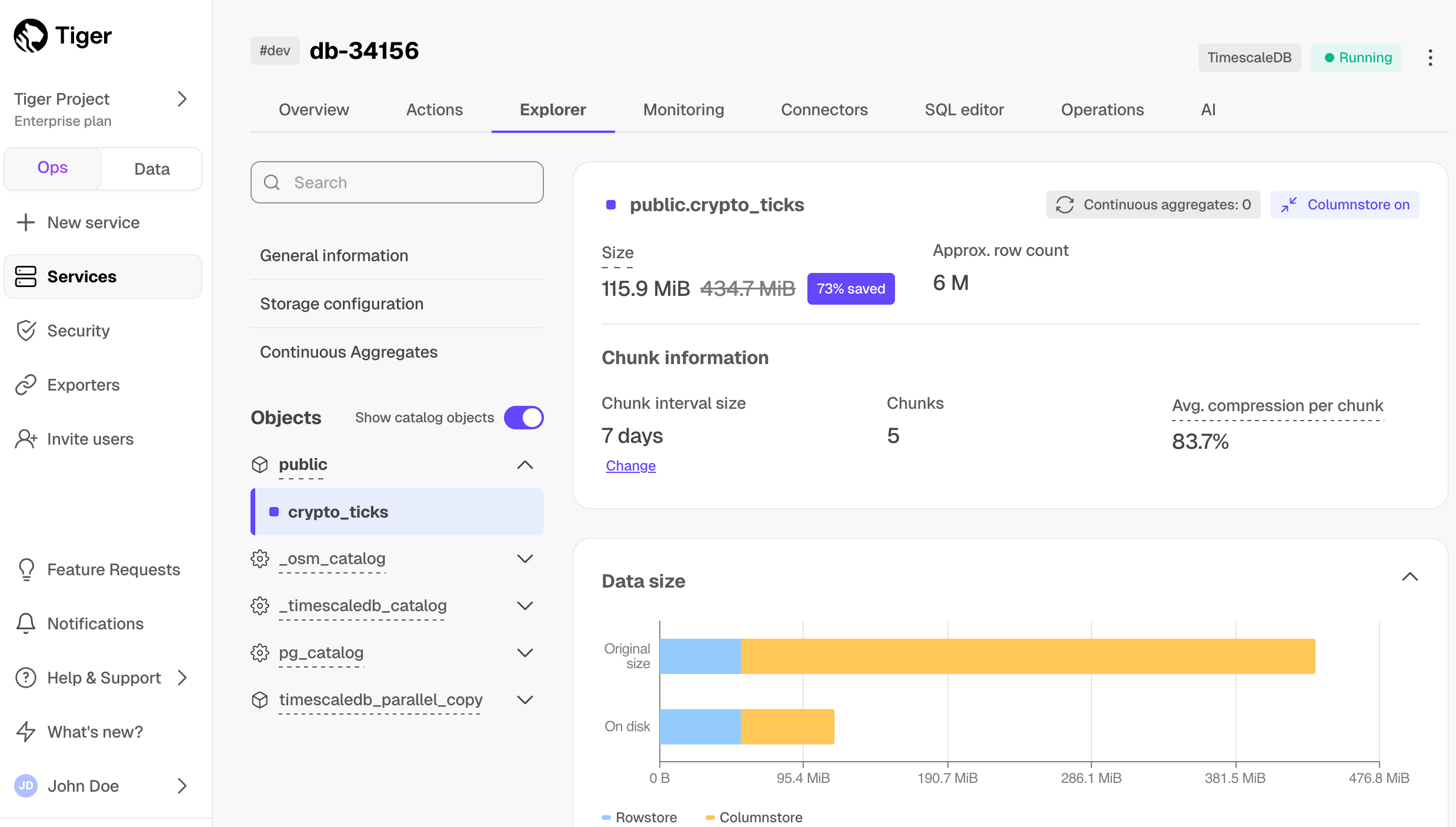

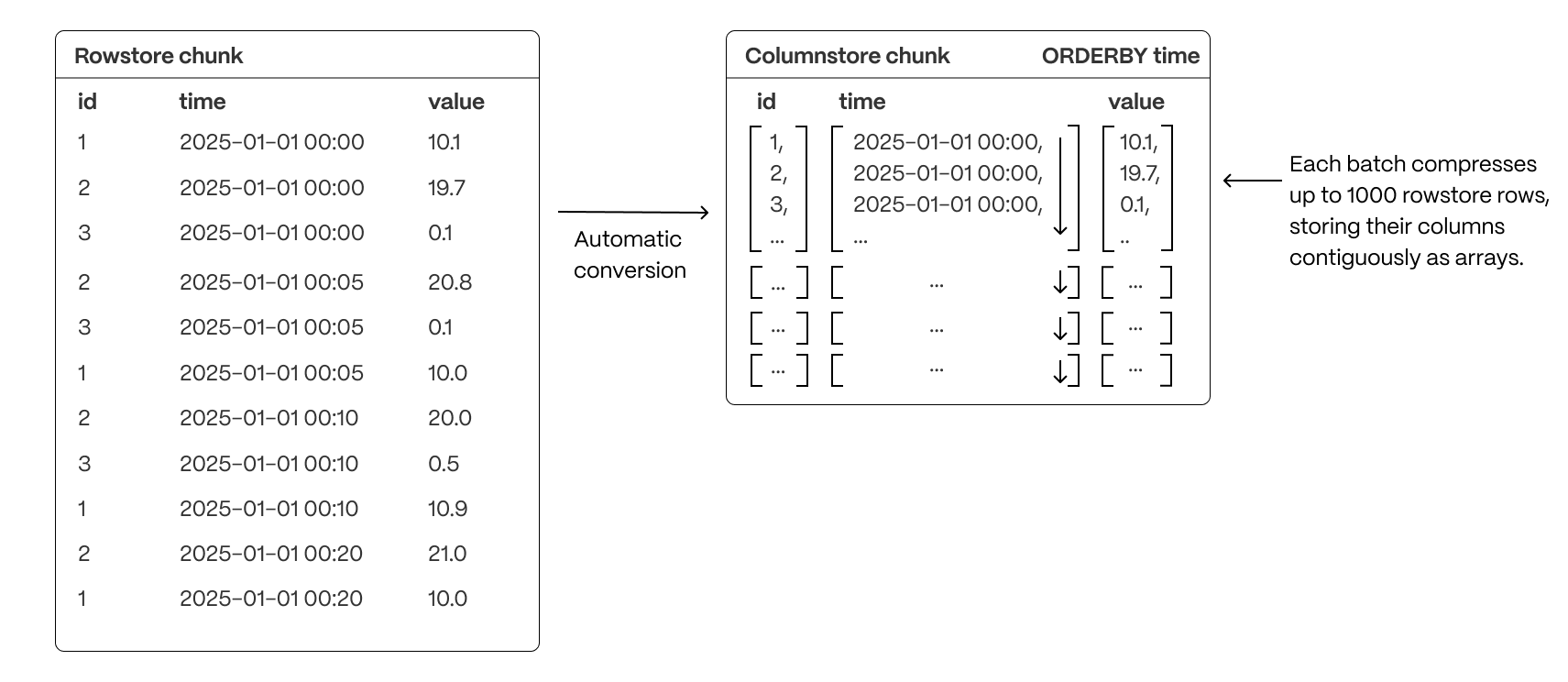

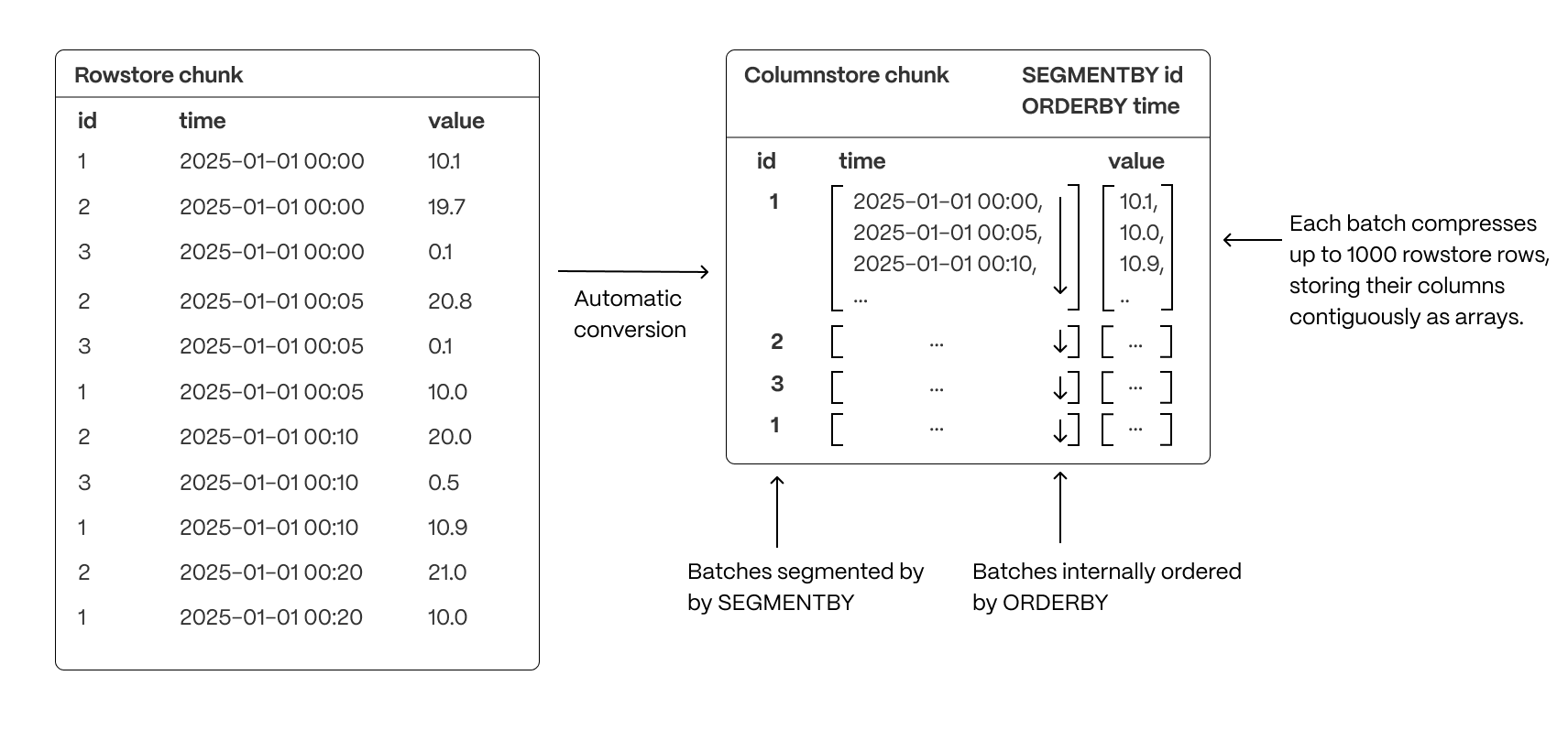

When TimescaleDB converts chunks from the rowstore to the columnstore, multiple records are grouped into a single row. The columns of this row hold an array-like structure that stores all the data. Because a single row takes up less disk space, you can reduce your chunk size by up to 98%, and can also speed up your queries. This helps you save on storage costs, and keeps your queries operating at lightning speed.

hypercore is enabled by default when you call CREATE TABLE. Best practice is to compress data that is no longer needed for highest performance queries, but is still accessed regularly in the columnstore. For example, yesterday's market data.

- Add a policy to convert chunks to the columnstore at a specific time interval

For example, yesterday's data:

CALL add_columnstore_policy('crypto_ticks', after => INTERVAL '1d');

If you have not configured a segmentby column, TimescaleDB chooses one for you based on the data in your

hypertable. For more information on how to tune your hypertables for the best performance, see

efficient queries.

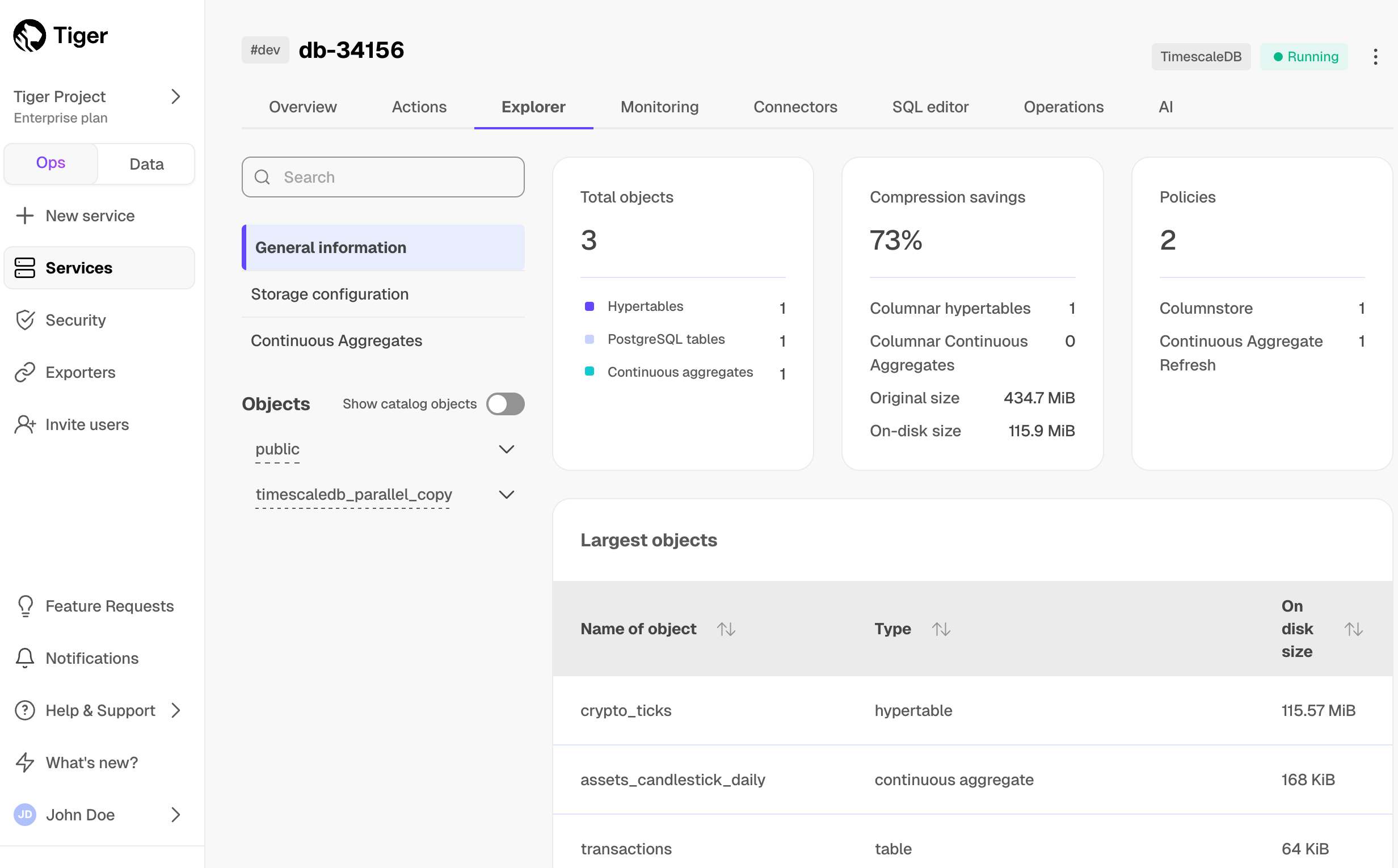

- View your data space saving

When you convert data to the columnstore, as well as being optimized for analytics, it is compressed by more than

90%. This helps you save on storage costs and keeps your queries operating at lightning speed. To see the amount of space

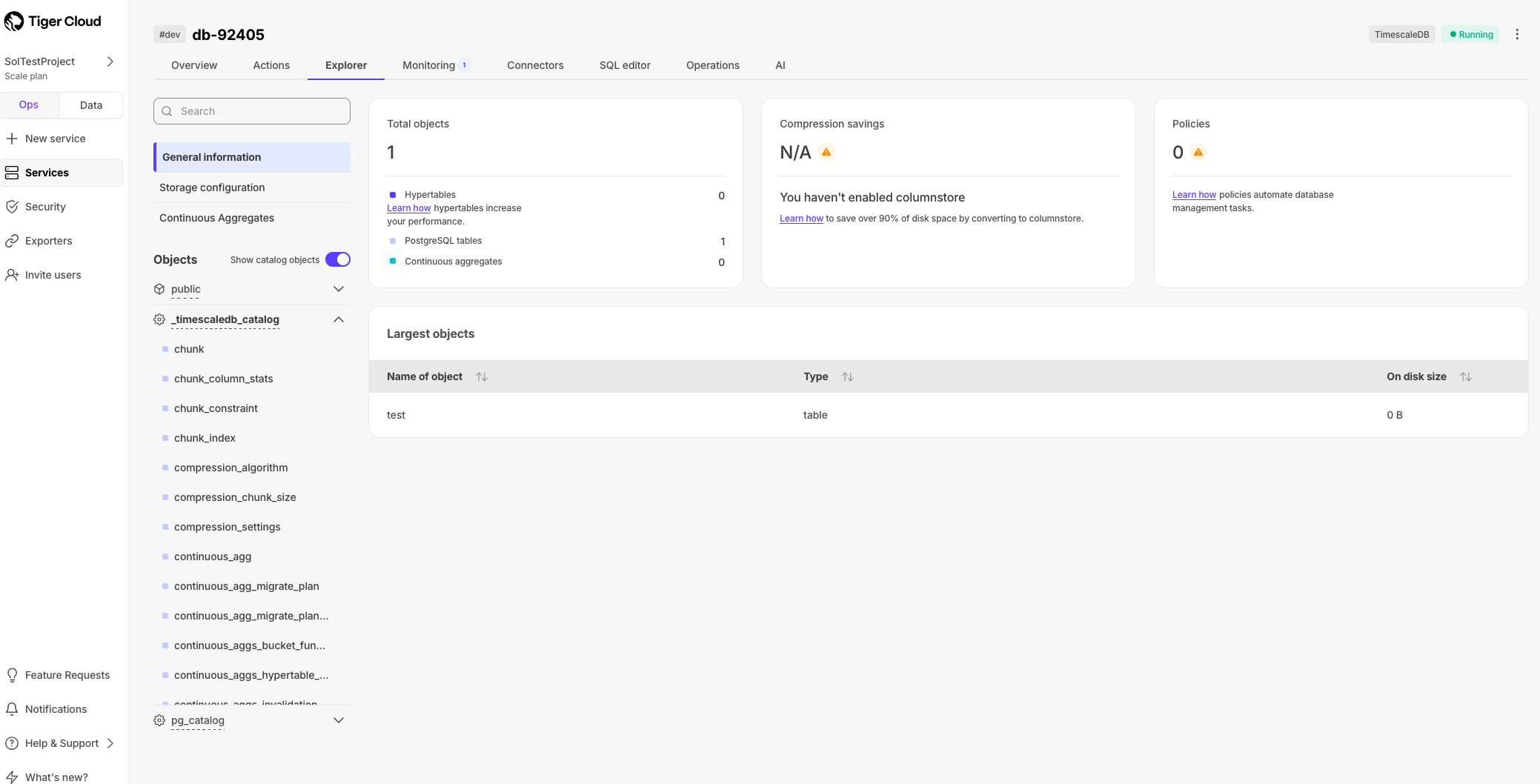

saved, click Explorer > public > crypto_ticks.

Write fast and efficient analytical queries

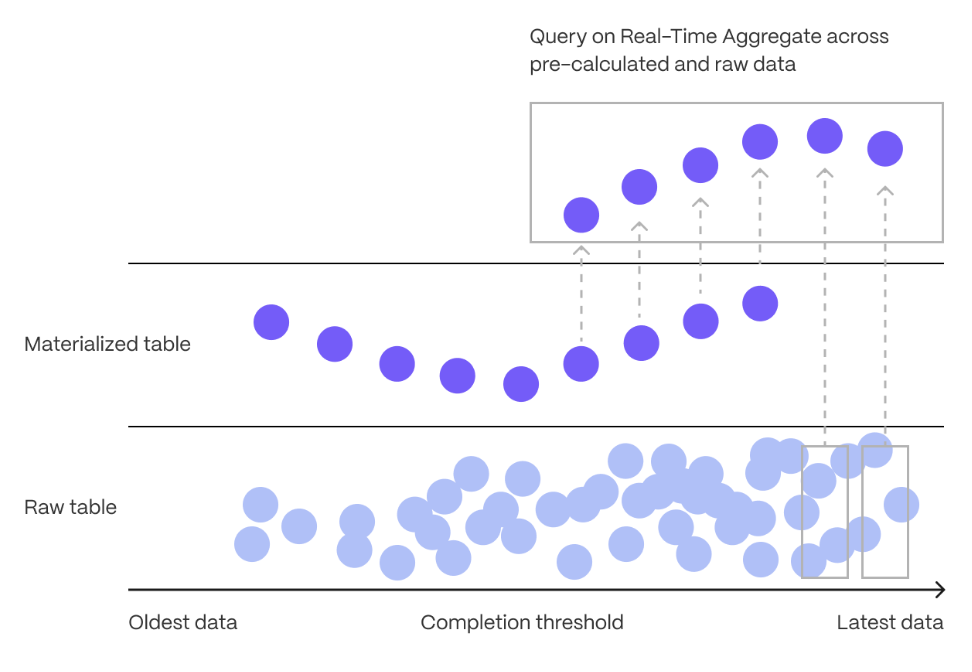

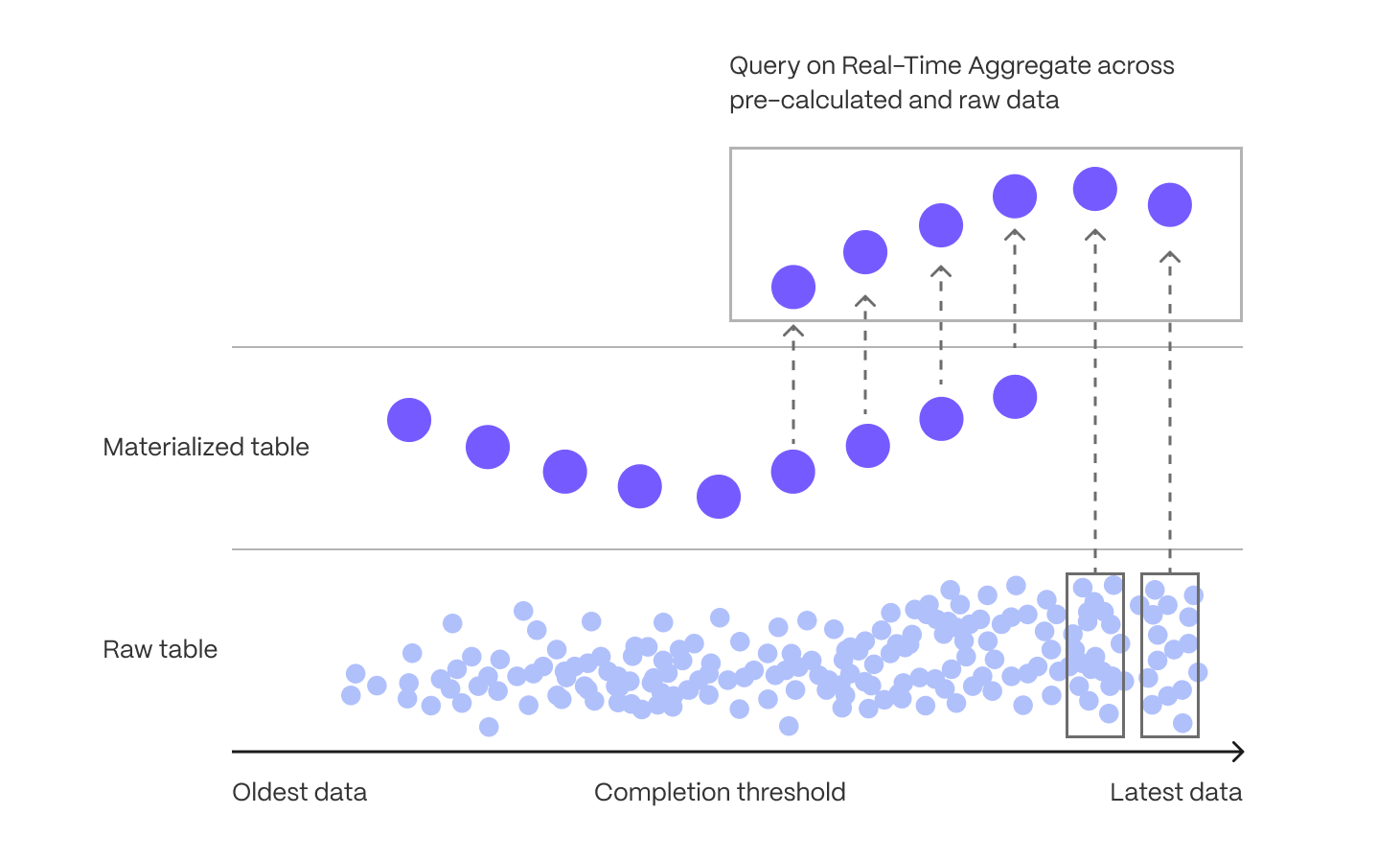

Aggregation is a way of combing data to get insights from it. Average, sum, and count are all examples of simple aggregates. However, with large amounts of data, aggregation slows things down, quickly. Continuous aggregates are a kind of hypertable that is refreshed automatically in the background as new data is added, or old data is modified. Changes to your dataset are tracked, and the hypertable behind the continuous aggregate is automatically updated in the background.

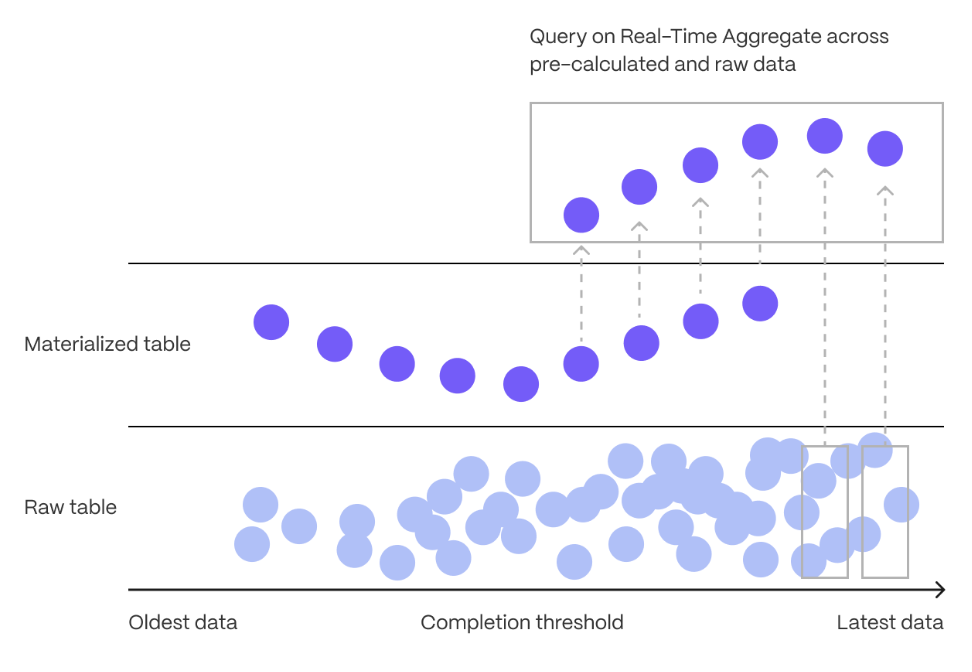

You create continuous aggregates on uncompressed data in high-performance storage. They continue to work on data in the columnstore and rarely accessed data in tiered storage. You can even create continuous aggregates on top of your continuous aggregates.

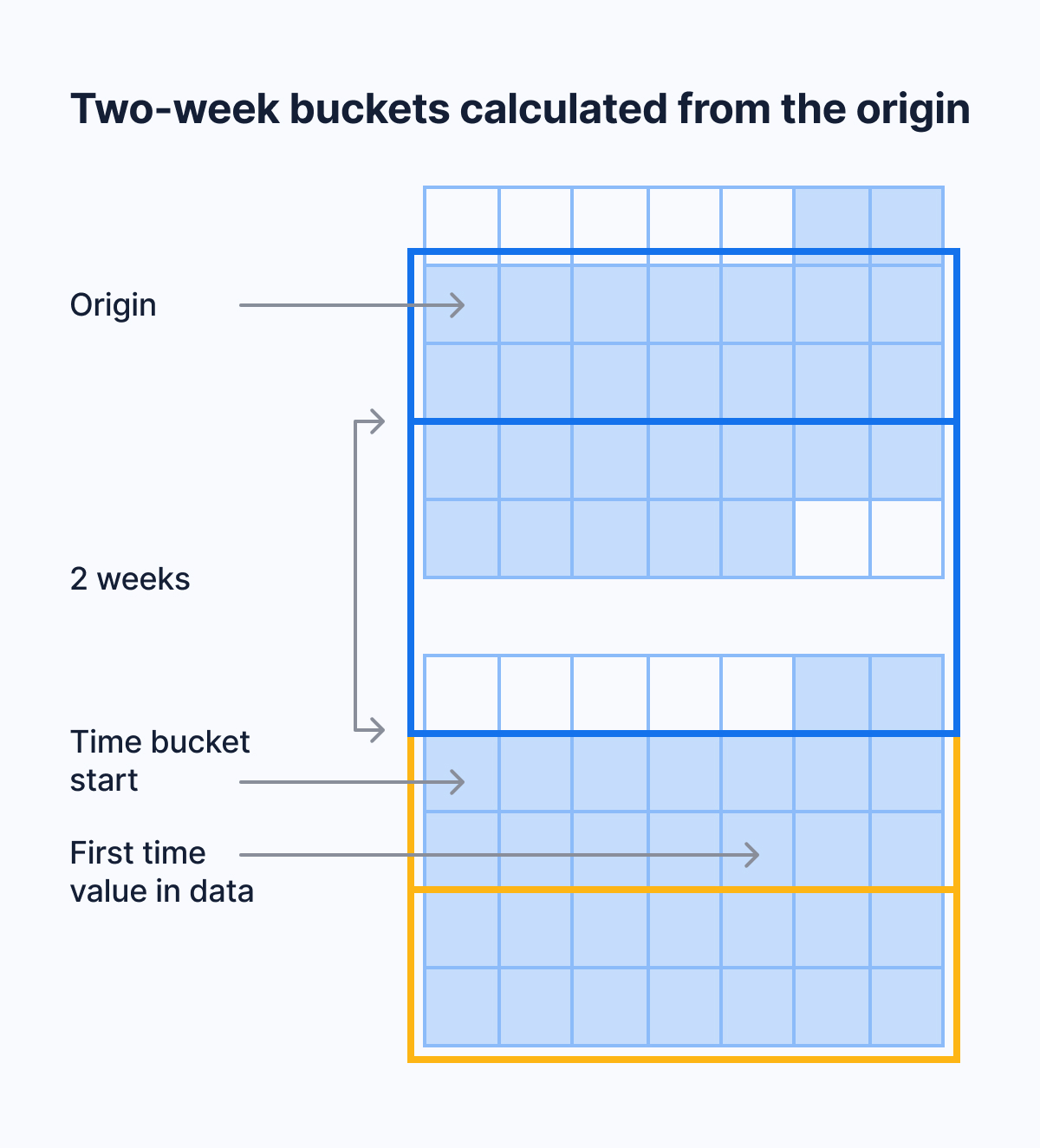

You use time buckets to create a continuous aggregate. Time buckets aggregate data in hypertables by time interval. For example, a 5-minute, 1-hour, or 3-day bucket. The data grouped in a time bucket uses a single timestamp. Continuous aggregates minimize the number of records that you need to look up to perform your query.

This section shows you how to run fast analytical queries using time buckets and continuous aggregate in Tiger Cloud Console. You can also do this using psql.

This feature is not available under the Free pricing plan.

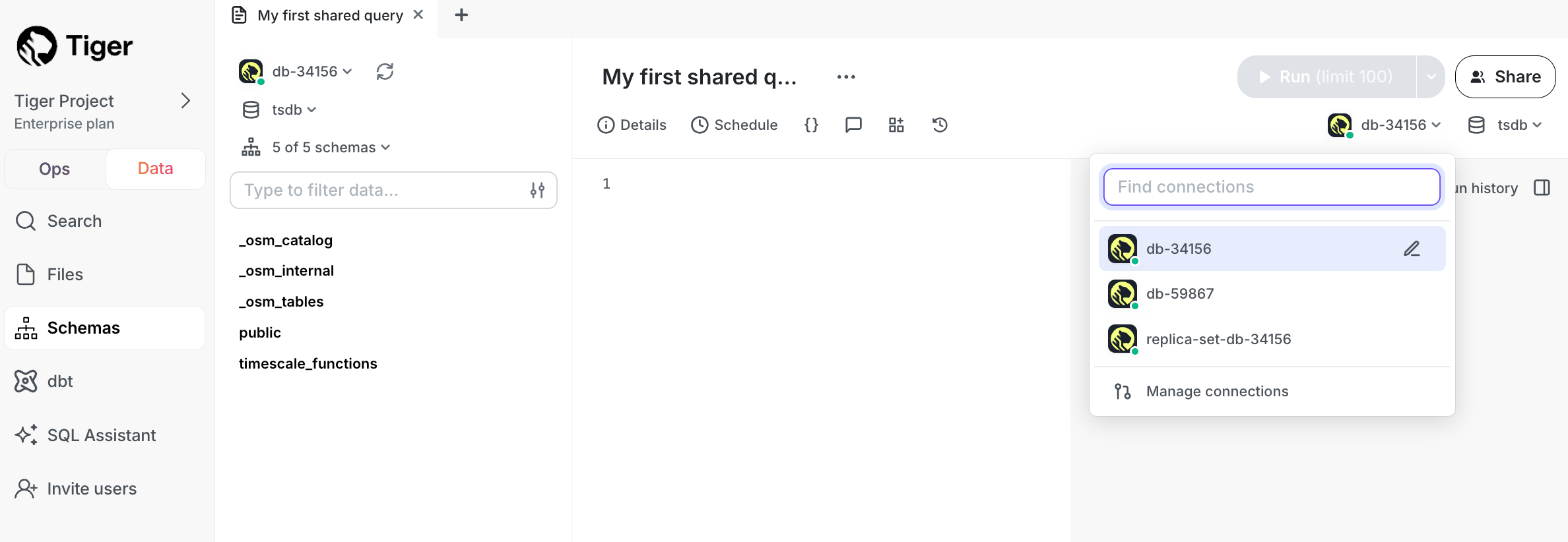

Connect to your service

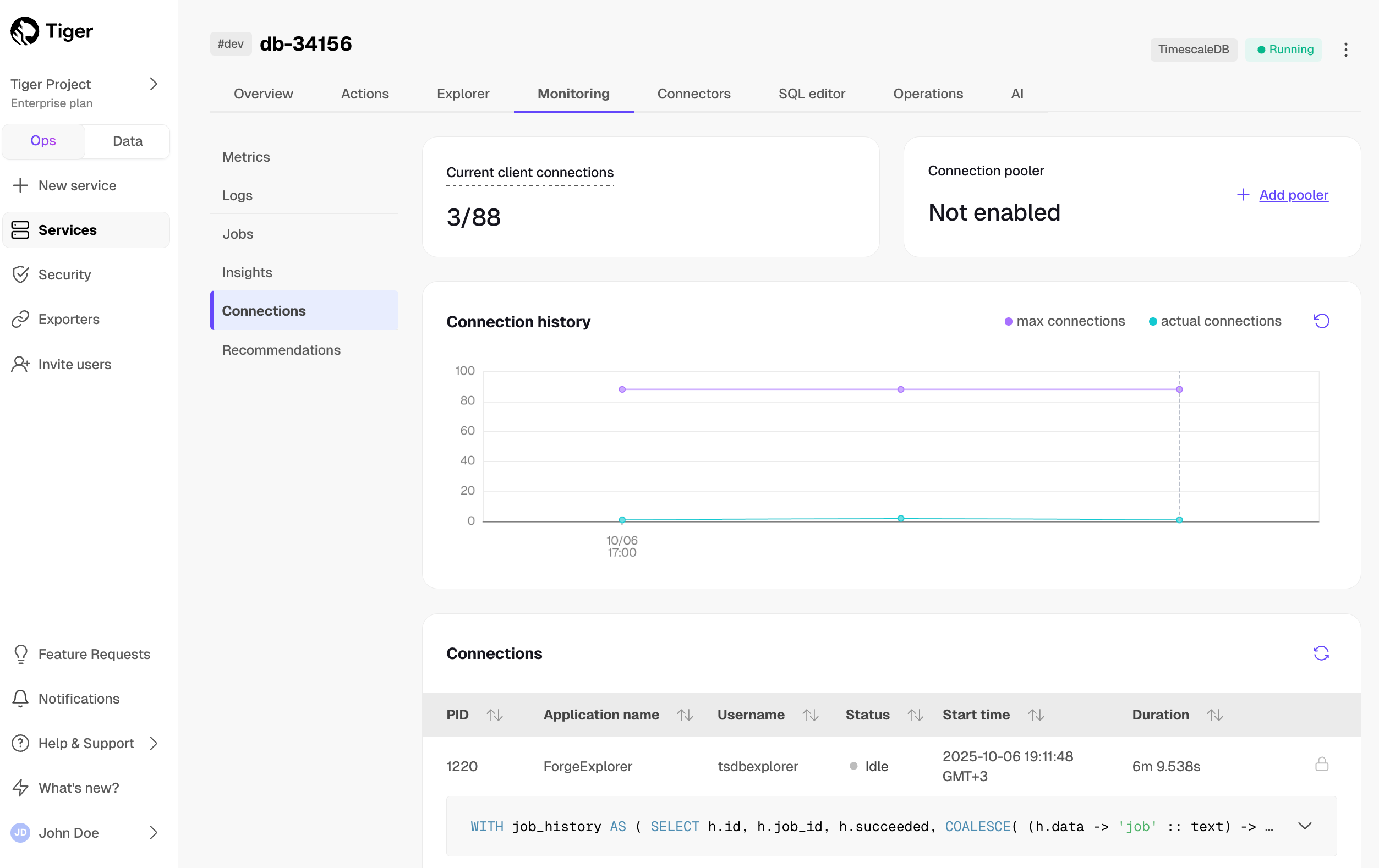

In Tiger Cloud Console, select your service in the connection drop-down in the top right.

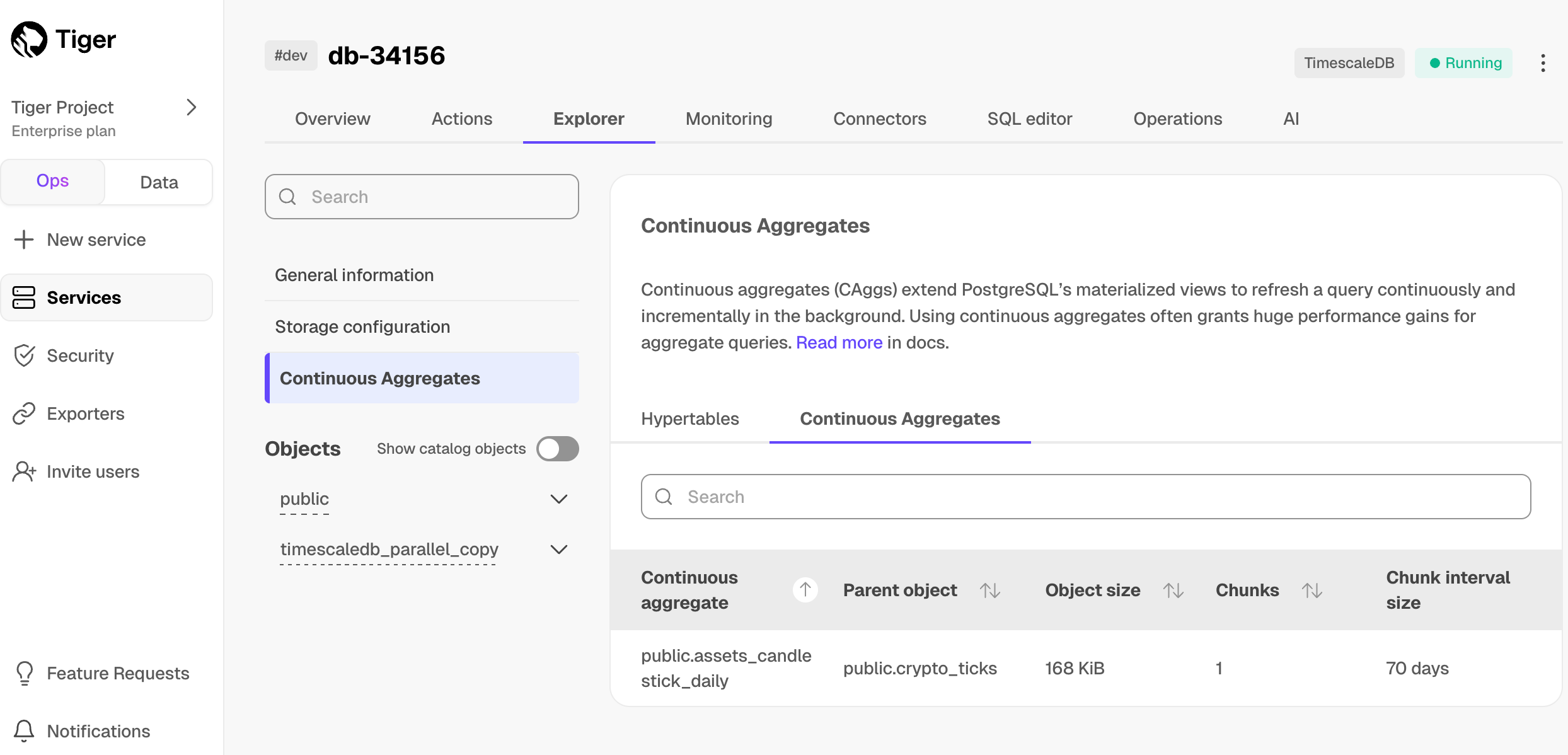

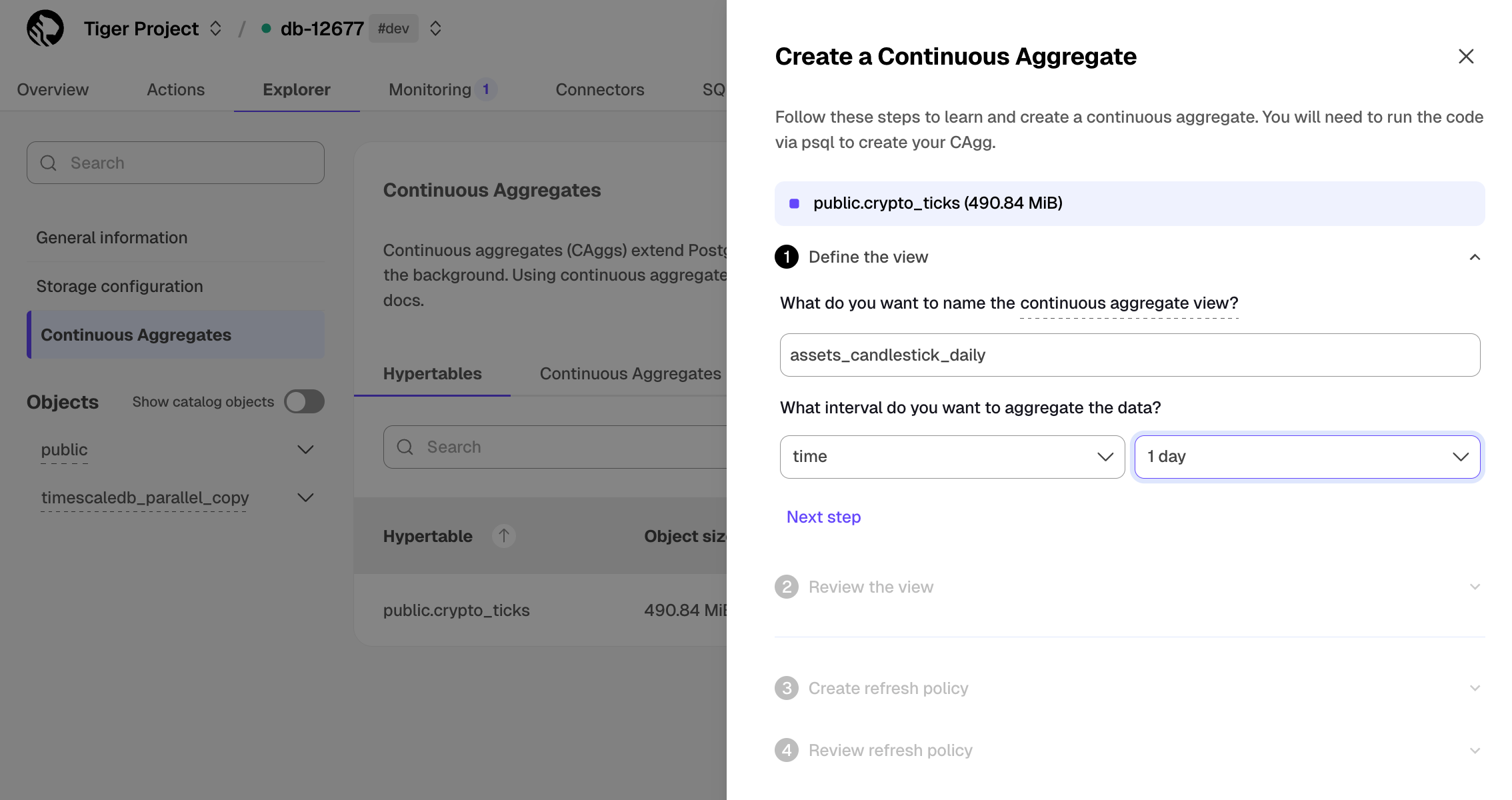

Create a continuous aggregate

For a continuous aggregate, data grouped using a time bucket is stored in a Postgres

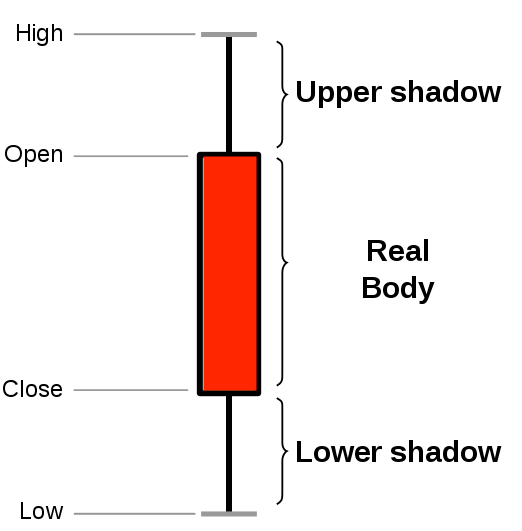

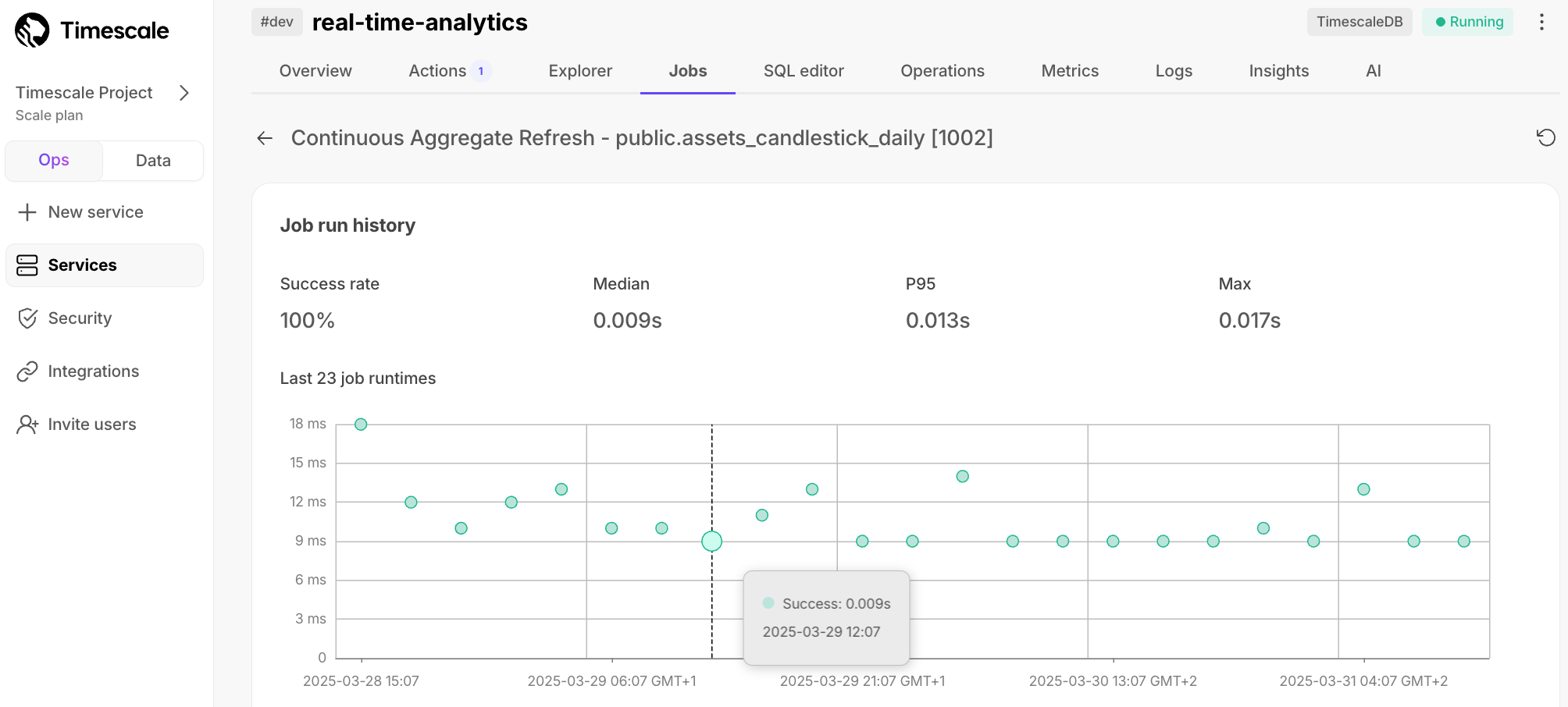

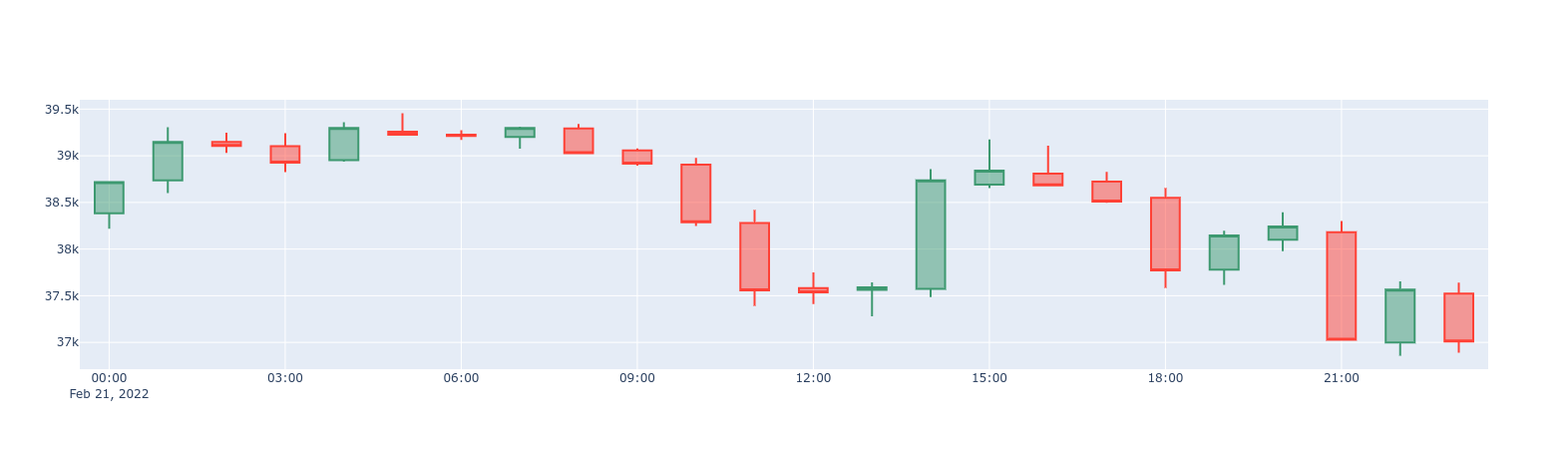

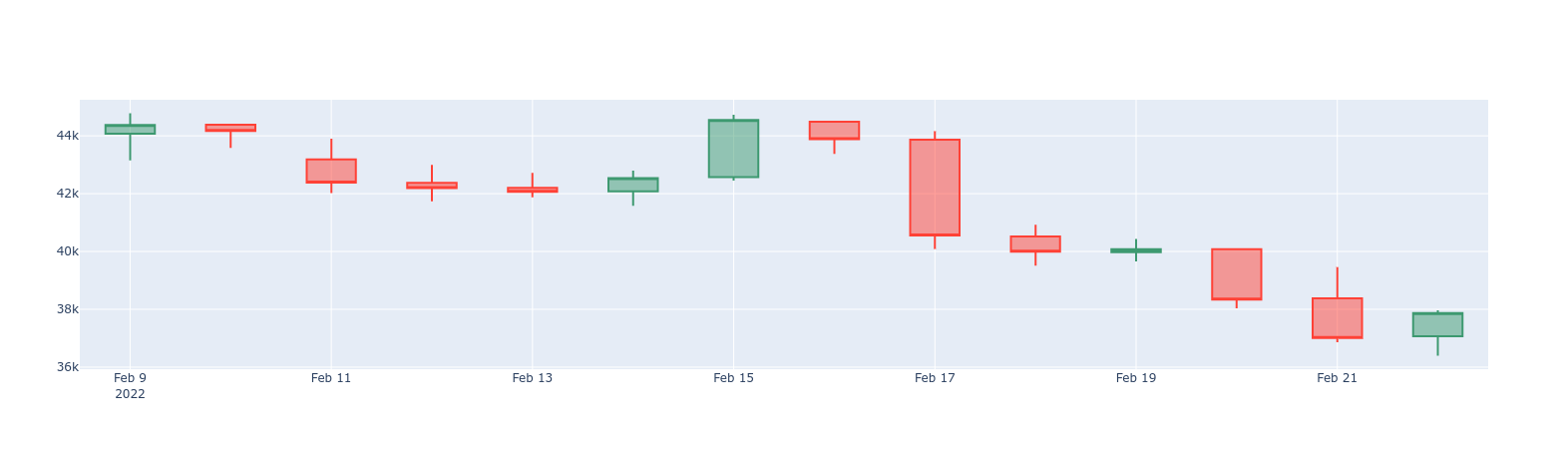

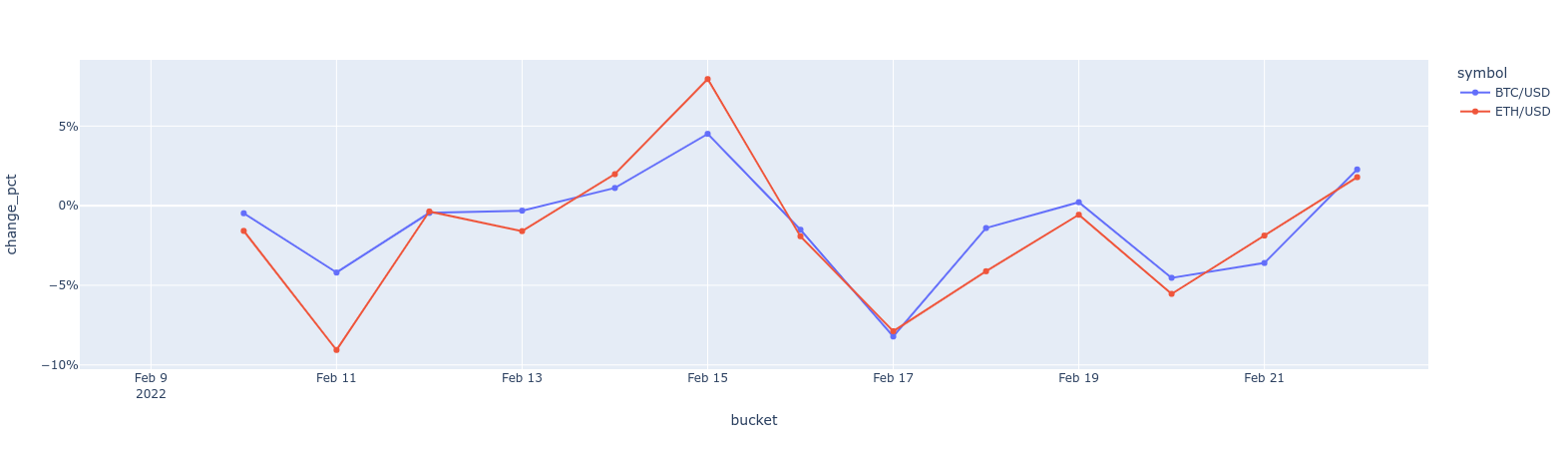

MATERIALIZED VIEWin a hypertable.timescaledb.continuousensures that this data is always up to date. In data mode, use the following code to create a continuous aggregate on the real-time data in thecrypto_tickstable:CREATE MATERIALIZED VIEW assets_candlestick_daily WITH (timescaledb.continuous) AS SELECT time_bucket('1 day', "time") AS day, symbol, max(price) AS high, first(price, time) AS open, last(price, time) AS close, min(price) AS low FROM crypto_ticks srt GROUP BY day, symbol;This continuous aggregate creates the candlestick chart data you use to visualize the price change of an asset.

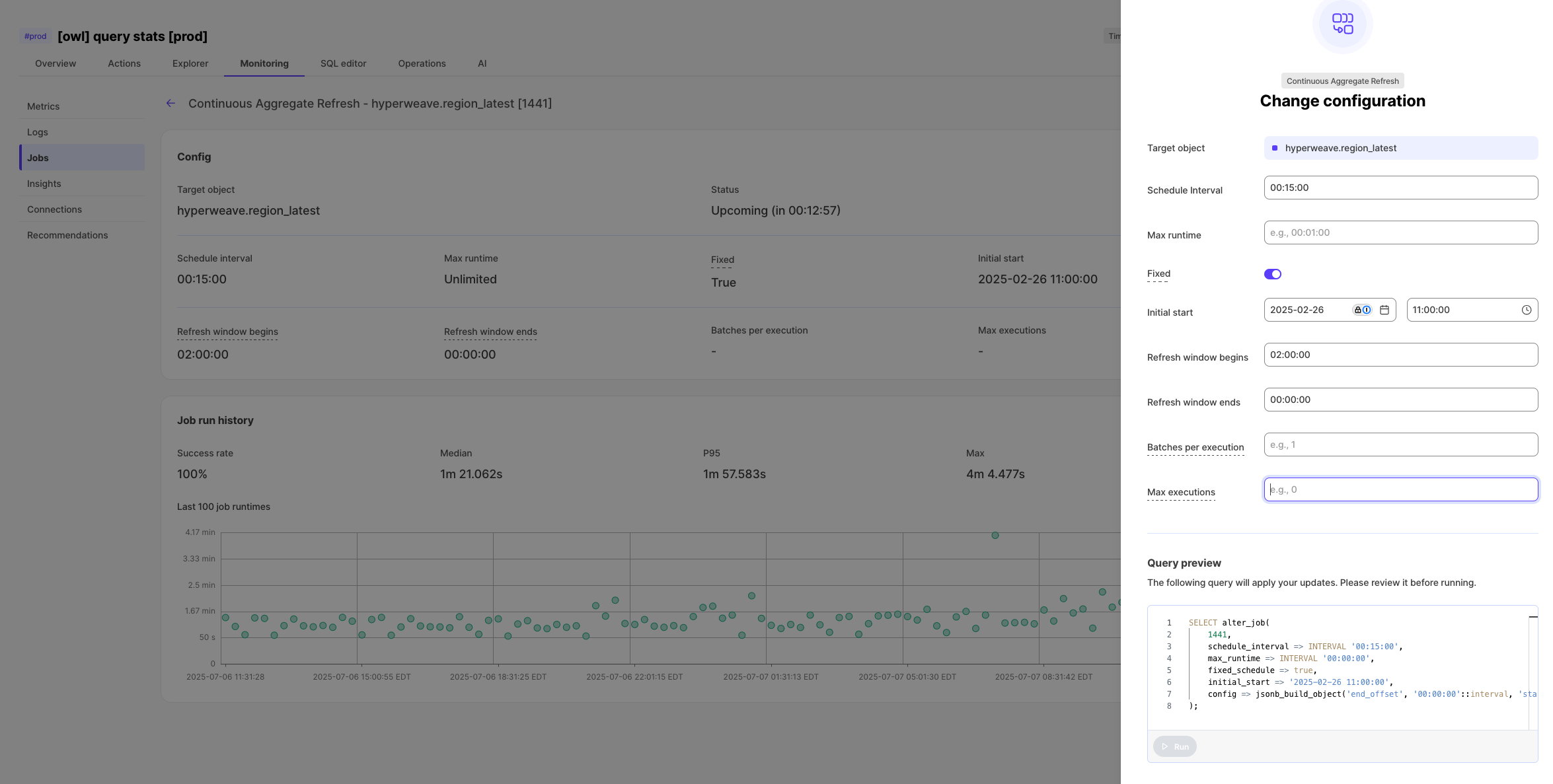

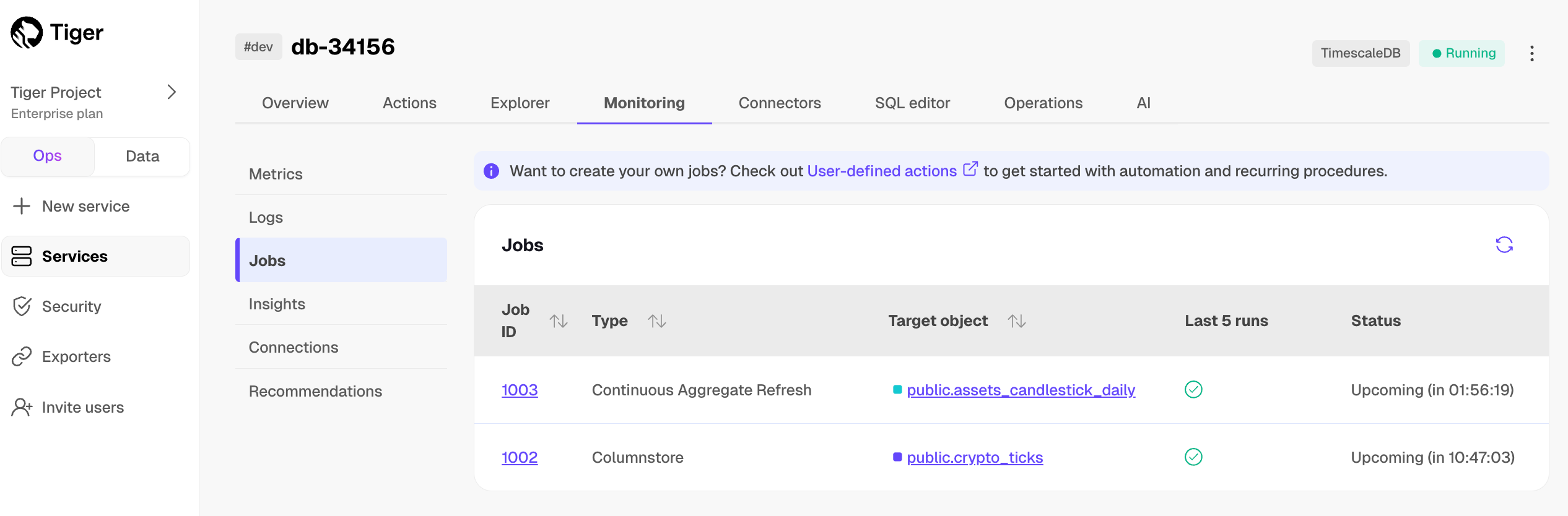

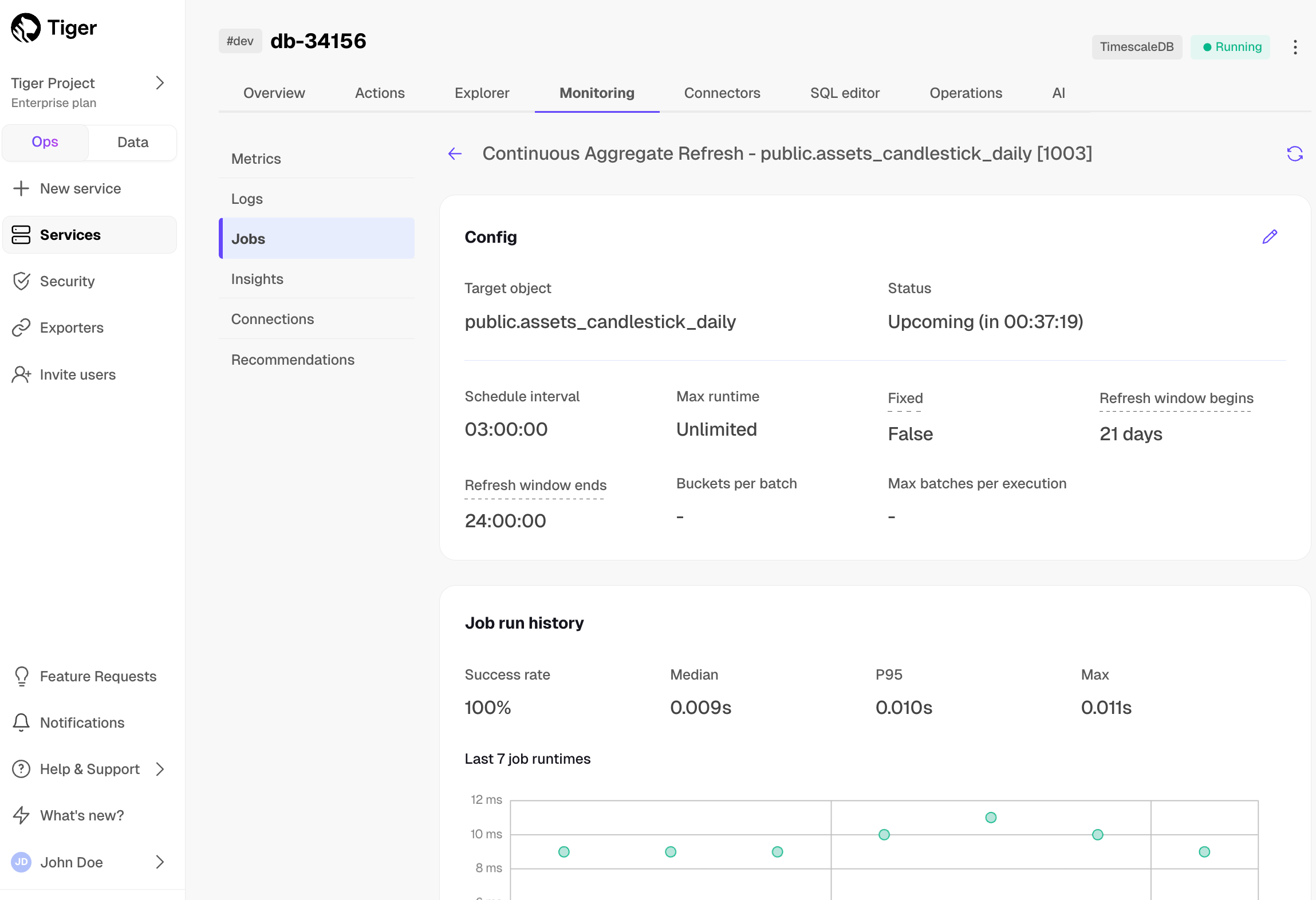

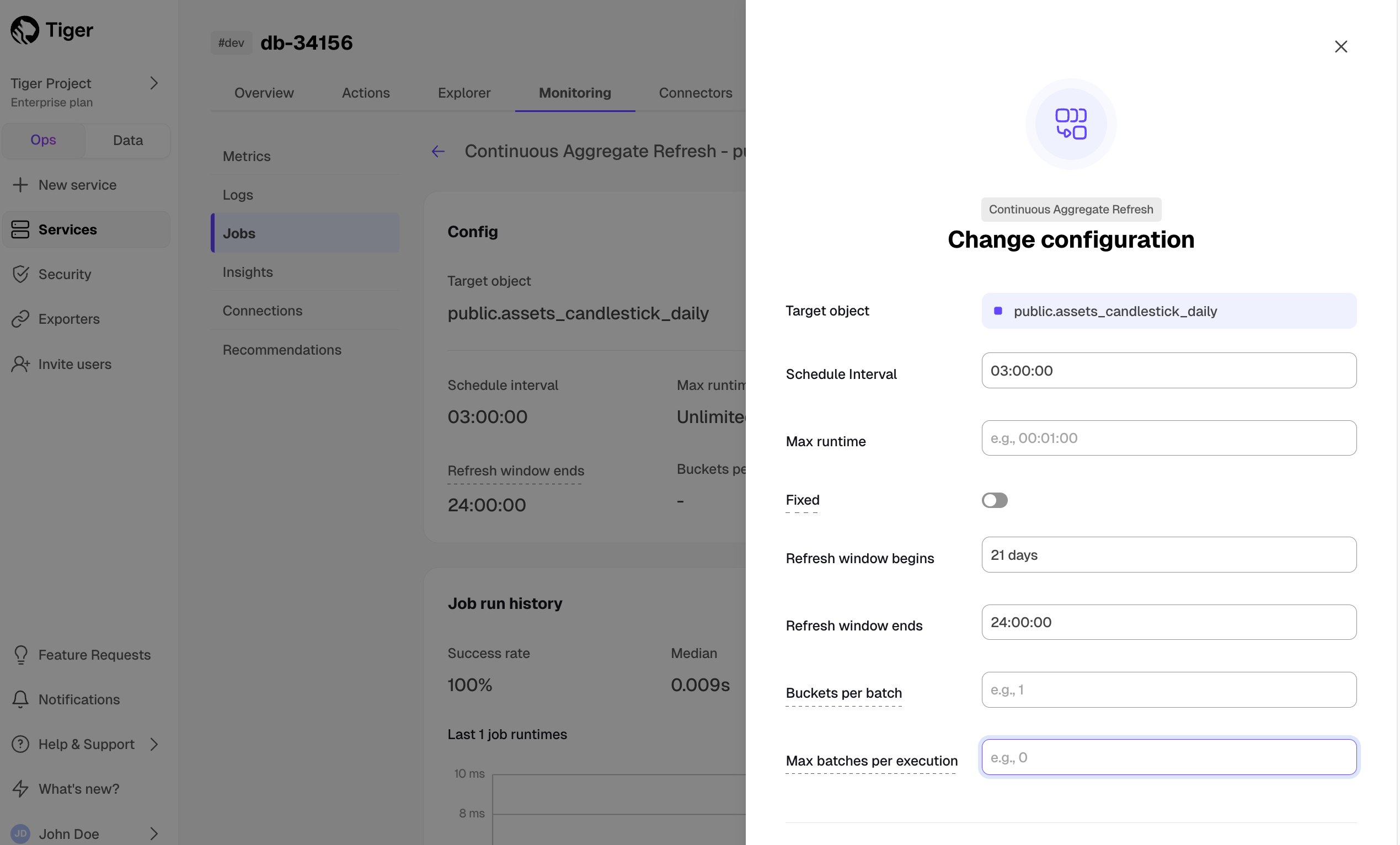

Create a policy to refresh the view every hour

SELECT add_continuous_aggregate_policy('assets_candlestick_daily', start_offset => INTERVAL '3 weeks', end_offset => INTERVAL '24 hours', schedule_interval => INTERVAL '3 hours');Have a quick look at your data

You query continuous aggregates exactly the same way as your other tables. To query the

assets_candlestick_dailycontinuous aggregate for all assets:In Tiger Cloud Console, select the service you uploaded data to

Click

Explorer>Continuous Aggregates>Create a Continuous Aggregatenext to thecrypto_tickshypertableCreate a view called

assets_candlestick_dailyon thetimecolumn with an interval of1 day, then clickNext step

Update the view SQL with the following functions, then click

RunCREATE MATERIALIZED VIEW assets_candlestick_daily WITH (timescaledb.continuous) AS SELECT time_bucket('1 day', "time") AS bucket, symbol, max(price) AS high, first(price, time) AS open, last(price, time) AS close, min(price) AS low FROM "public"."crypto_ticks" srt GROUP BY bucket, symbol;When the view is created, click

Next stepDefine a refresh policy with the following values:

How far back do you want to materialize?:3 weeksWhat recent data to exclude?:24 hoursHow often do you want the job to run?:3 hours

Click

Next step, then clickRun

Tiger Cloud creates the continuous aggregate and displays the aggregate ID in Tiger Cloud Console. Click DONE to close the wizard.

To see the change in terms of query time and data returned between a regular query and

a continuous aggregate, run the query part of the continuous aggregate

( SELECT ...GROUP BY day, symbol; ) and compare the results.

Slash storage charges

In the previous sections, you used continuous aggregates to make fast analytical queries, and hypercore to reduce storage costs on frequently accessed data. To reduce storage costs even more, you create tiering policies to move rarely accessed data to the object store. The object store is low-cost bottomless data storage built on Amazon S3. However, no matter the tier, you can query your data when you need. Tiger Cloud seamlessly accesses the correct storage tier and generates the response.

To set up data tiering:

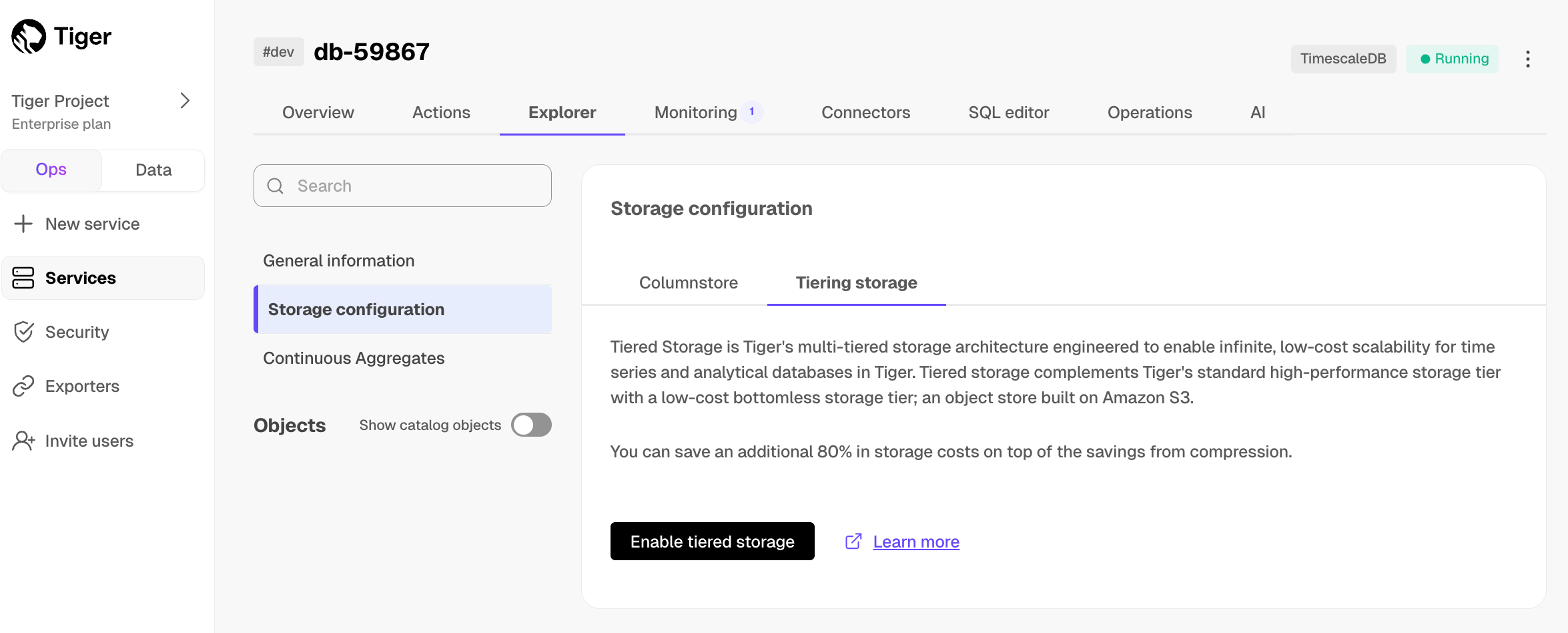

Enable data tiering

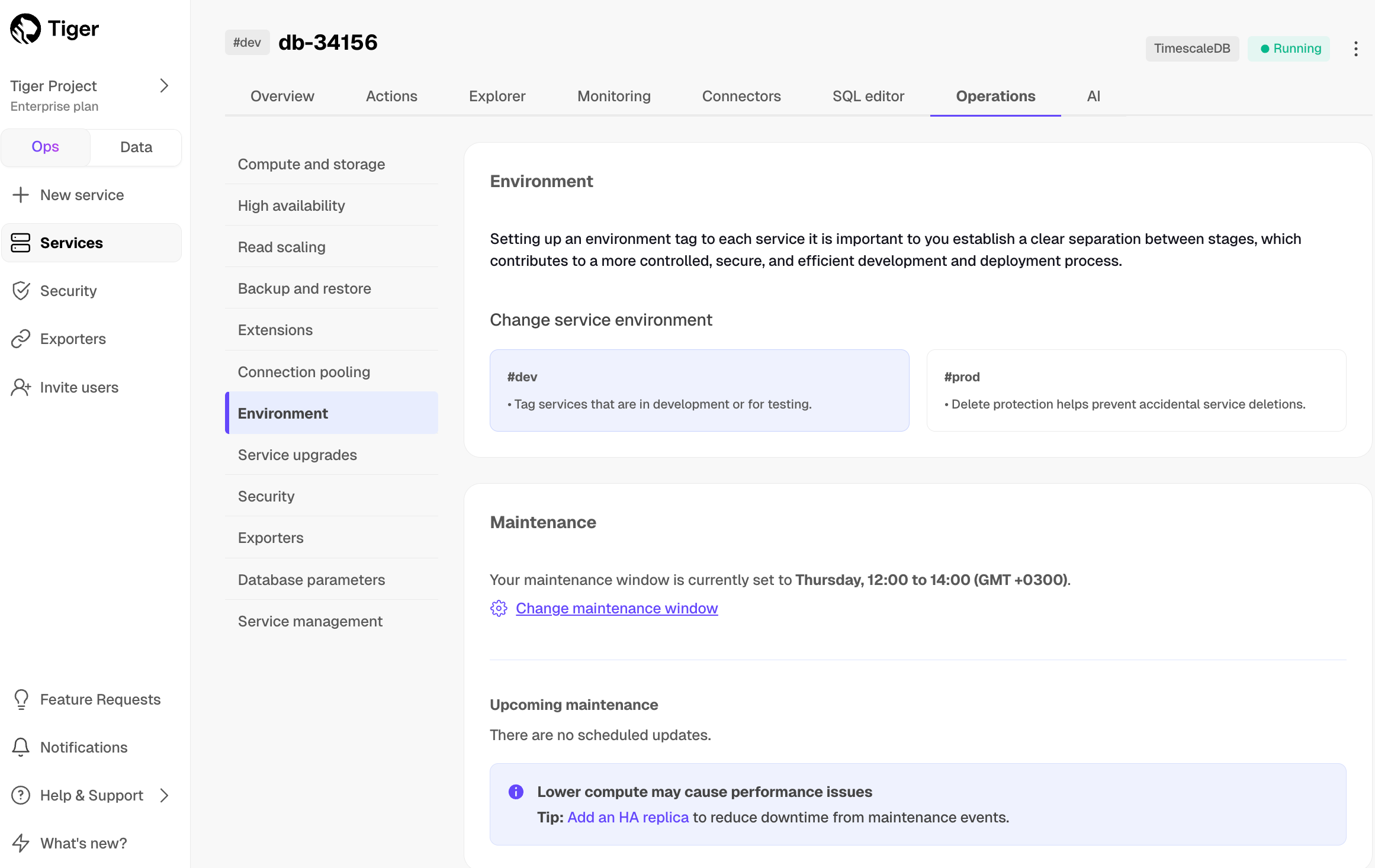

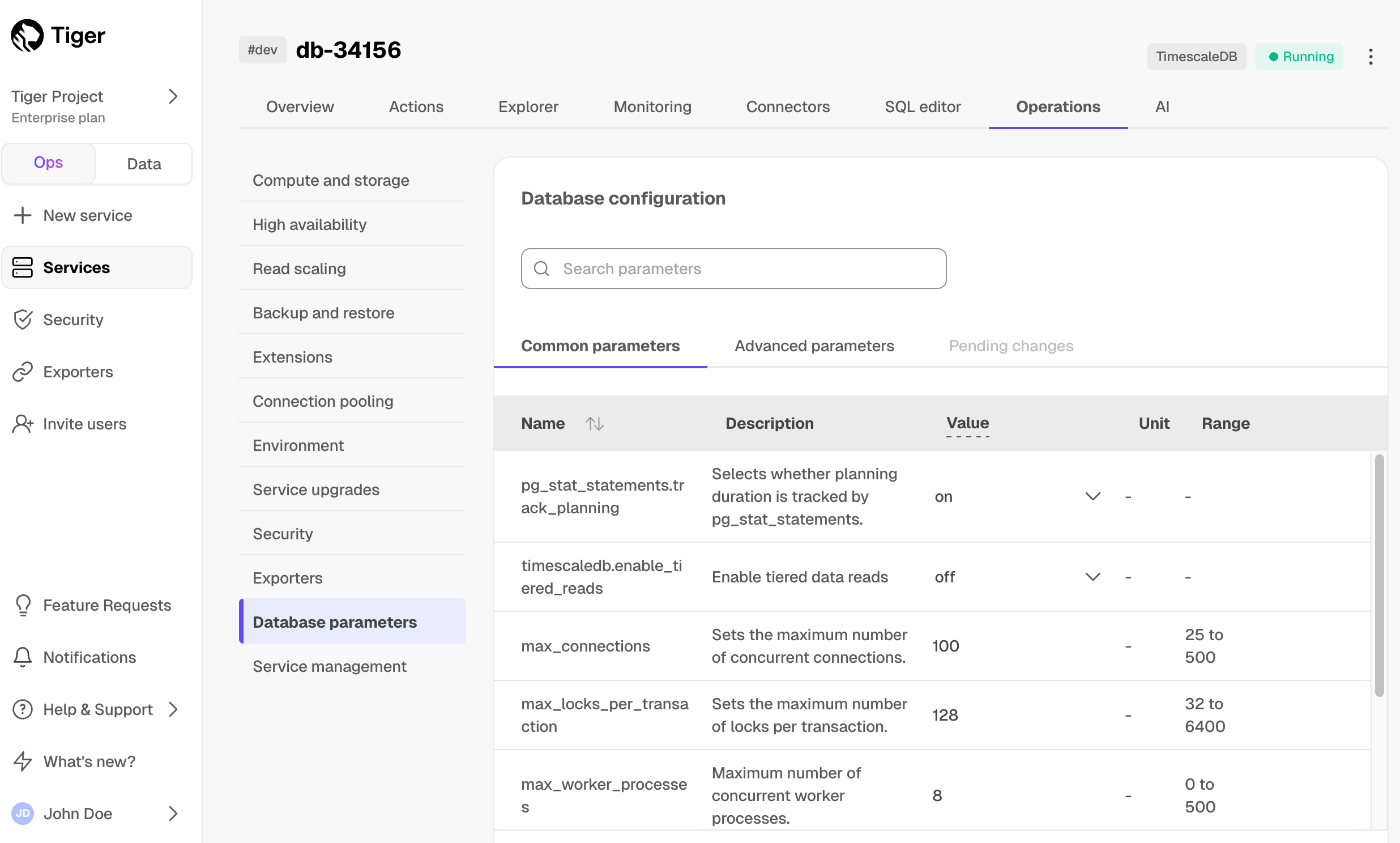

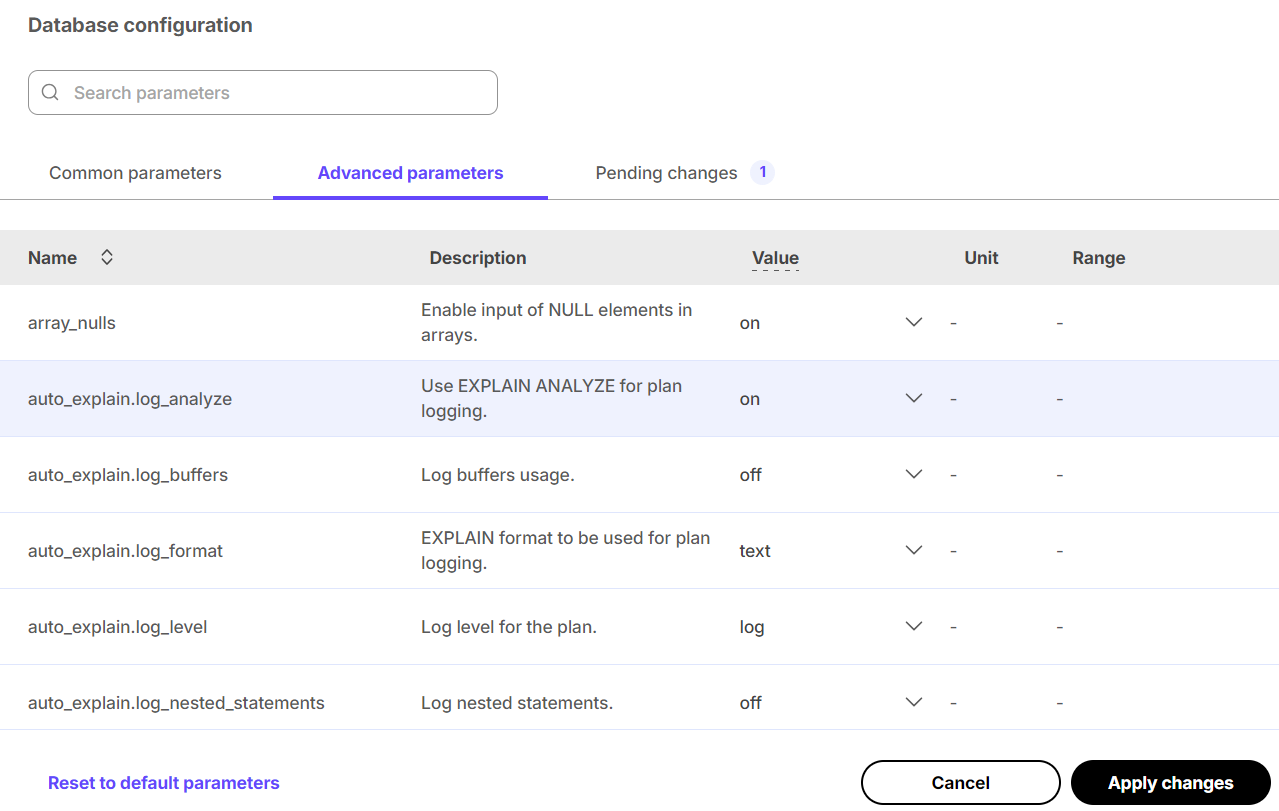

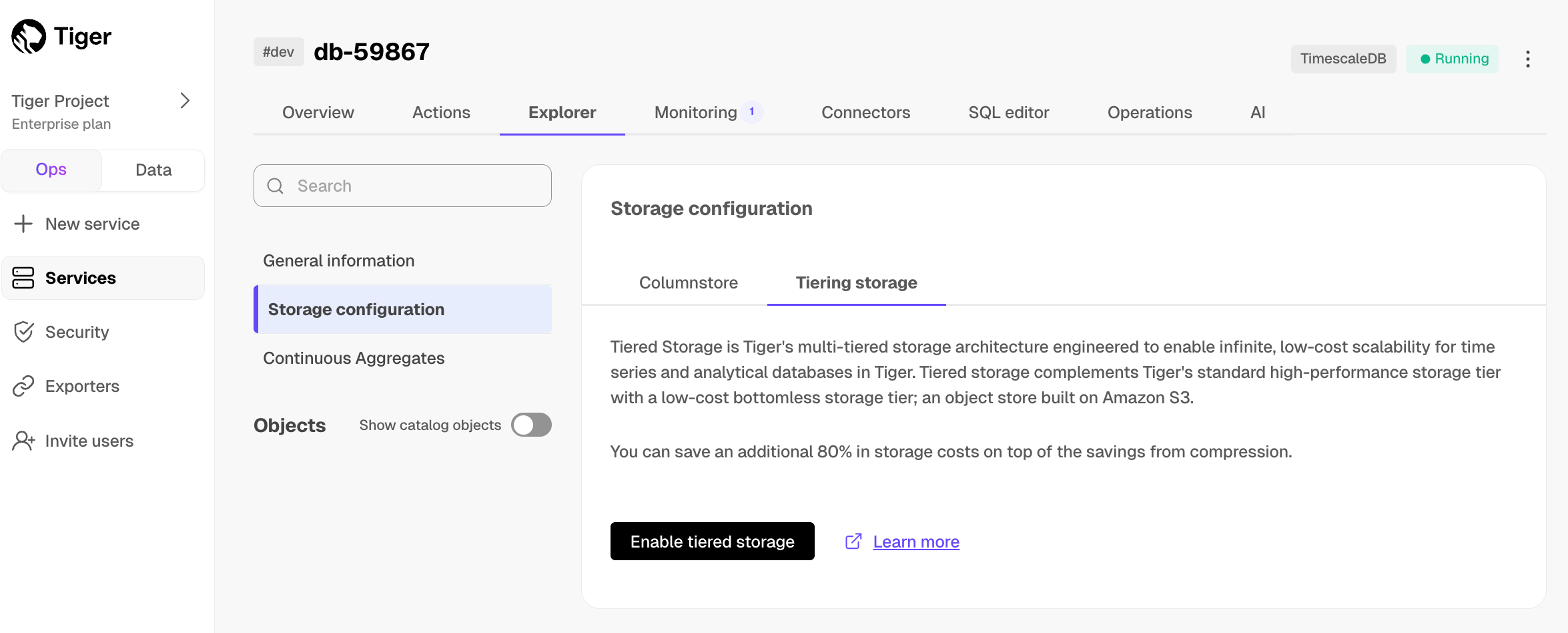

In Tiger Cloud Console, select the service to modify.

In

Explorer, clickStorage configuration>Tiering storage, then clickEnable tiered storage.

When tiered storage is enabled, you see the amount of data in the tiered object storage.

Set the time interval when data is tiered

In Tiger Cloud Console, click

Datato switch to the data mode, then enable data tiering on a hypertable with the following query:SELECT add_tiering_policy('assets_candlestick_daily', INTERVAL '3 weeks');Query tiered data

You enable reads from tiered data for each query, for a session or for all future sessions. To run a single query on tiered data:

Enable reads on tiered data:

set timescaledb.enable_tiered_reads = trueQuery the data:

SELECT * FROM crypto_ticks srt LIMIT 10Disable reads on tiered data:

set timescaledb.enable_tiered_reads = false;For more information, see Querying tiered data.

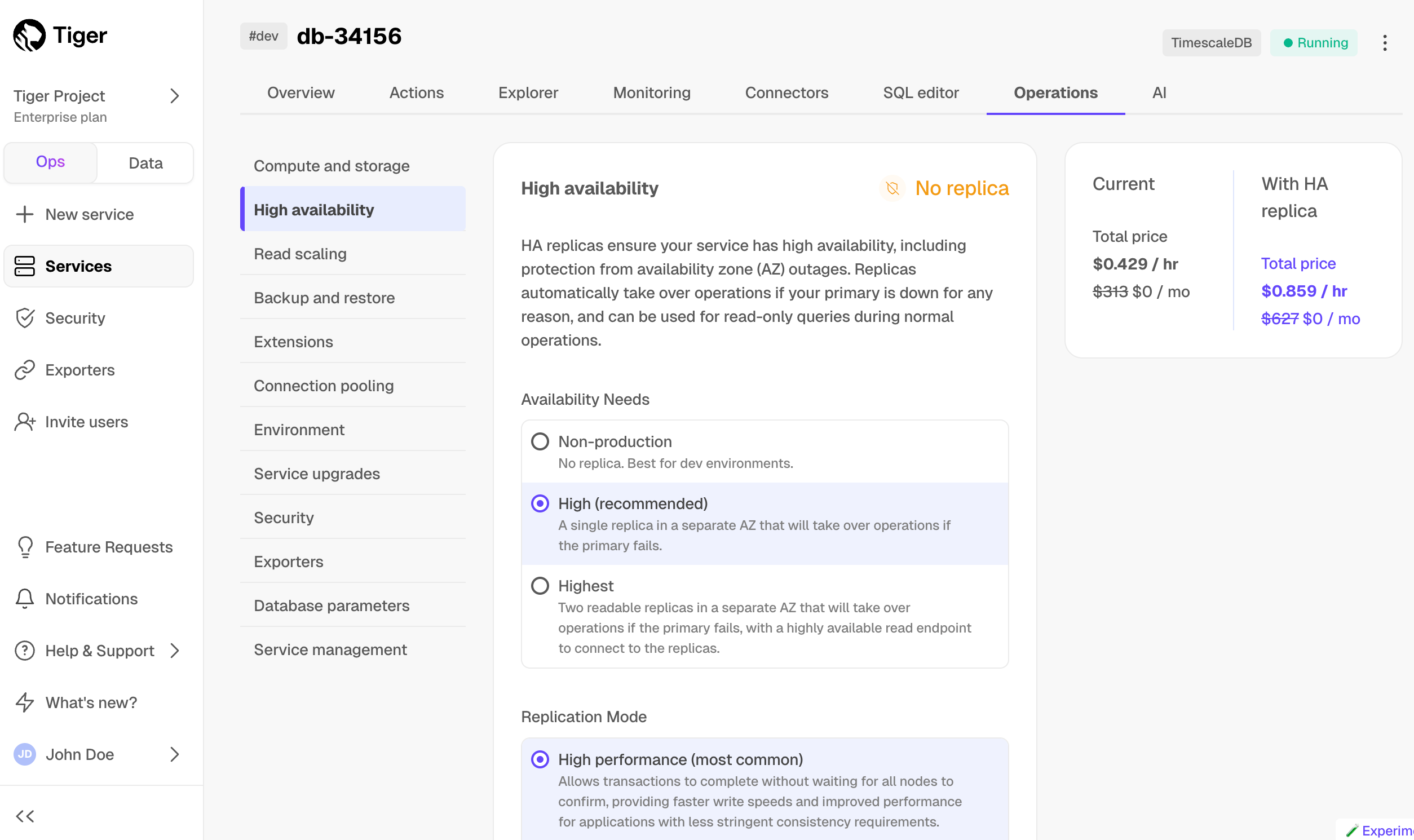

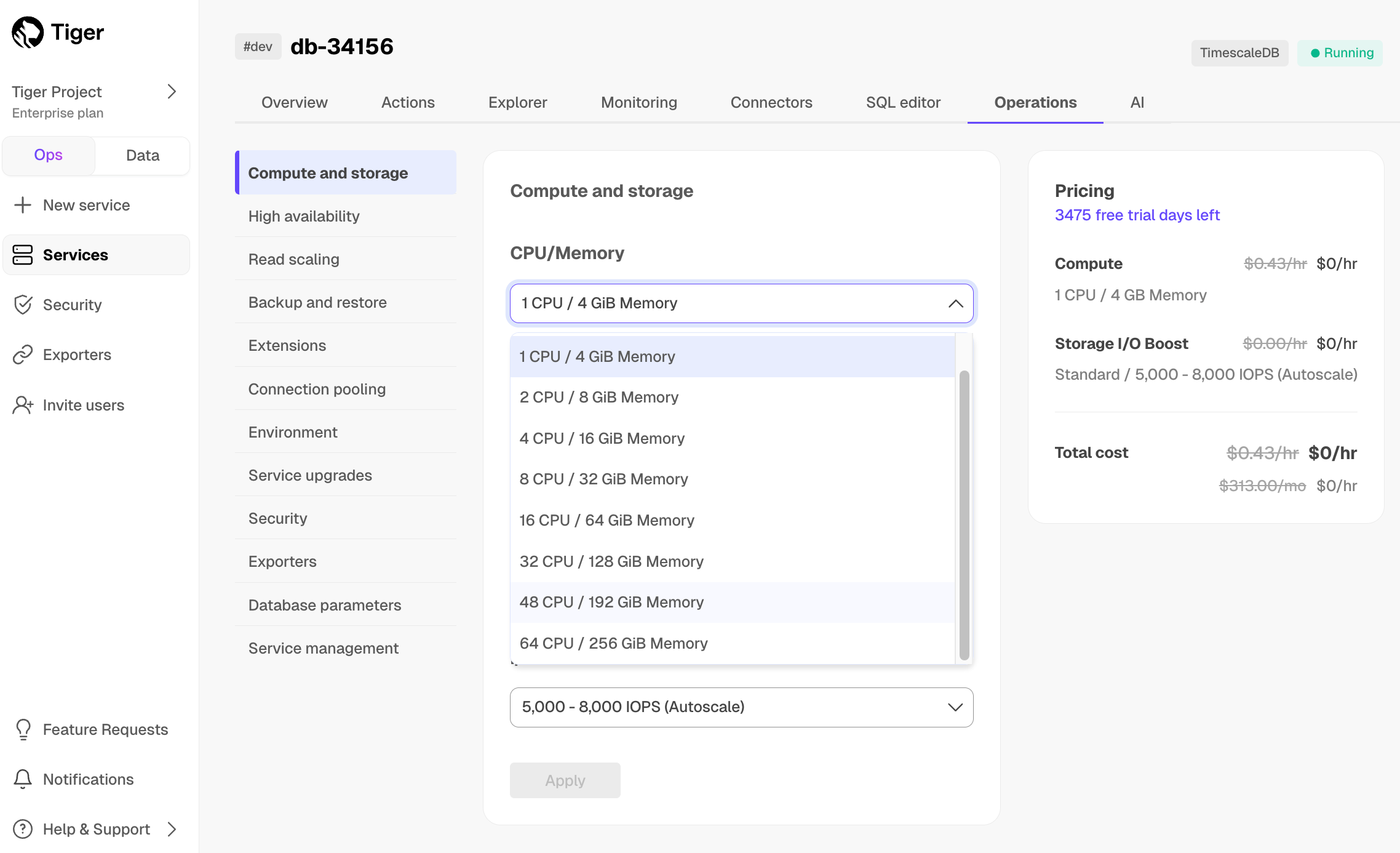

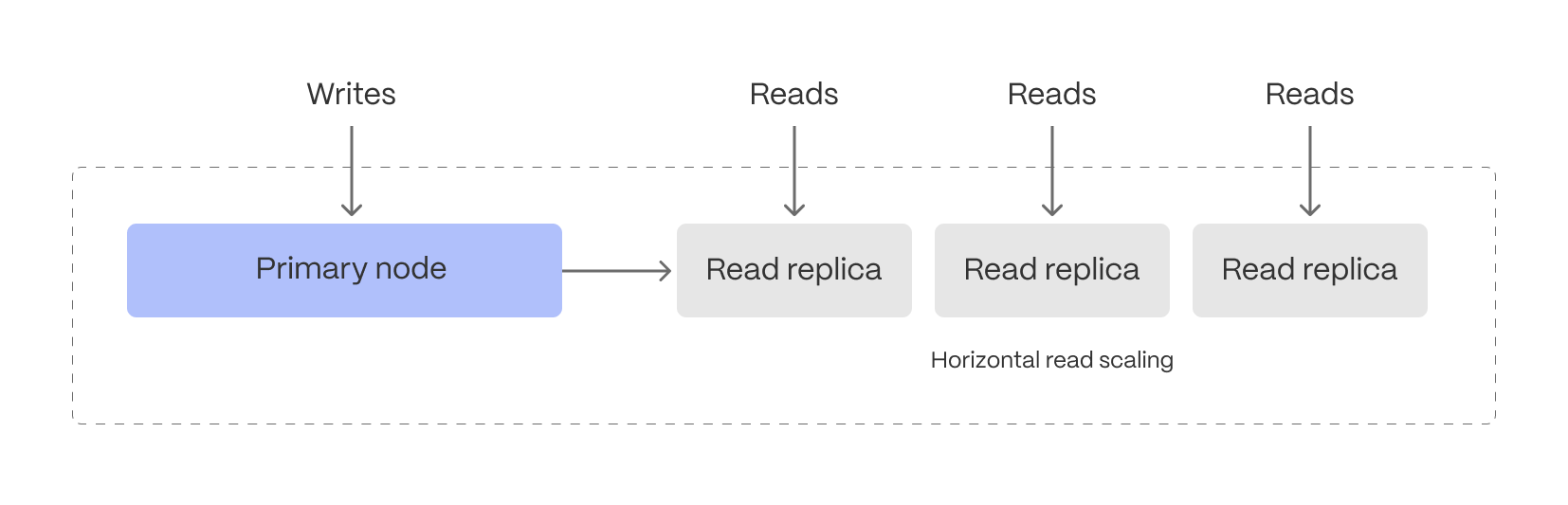

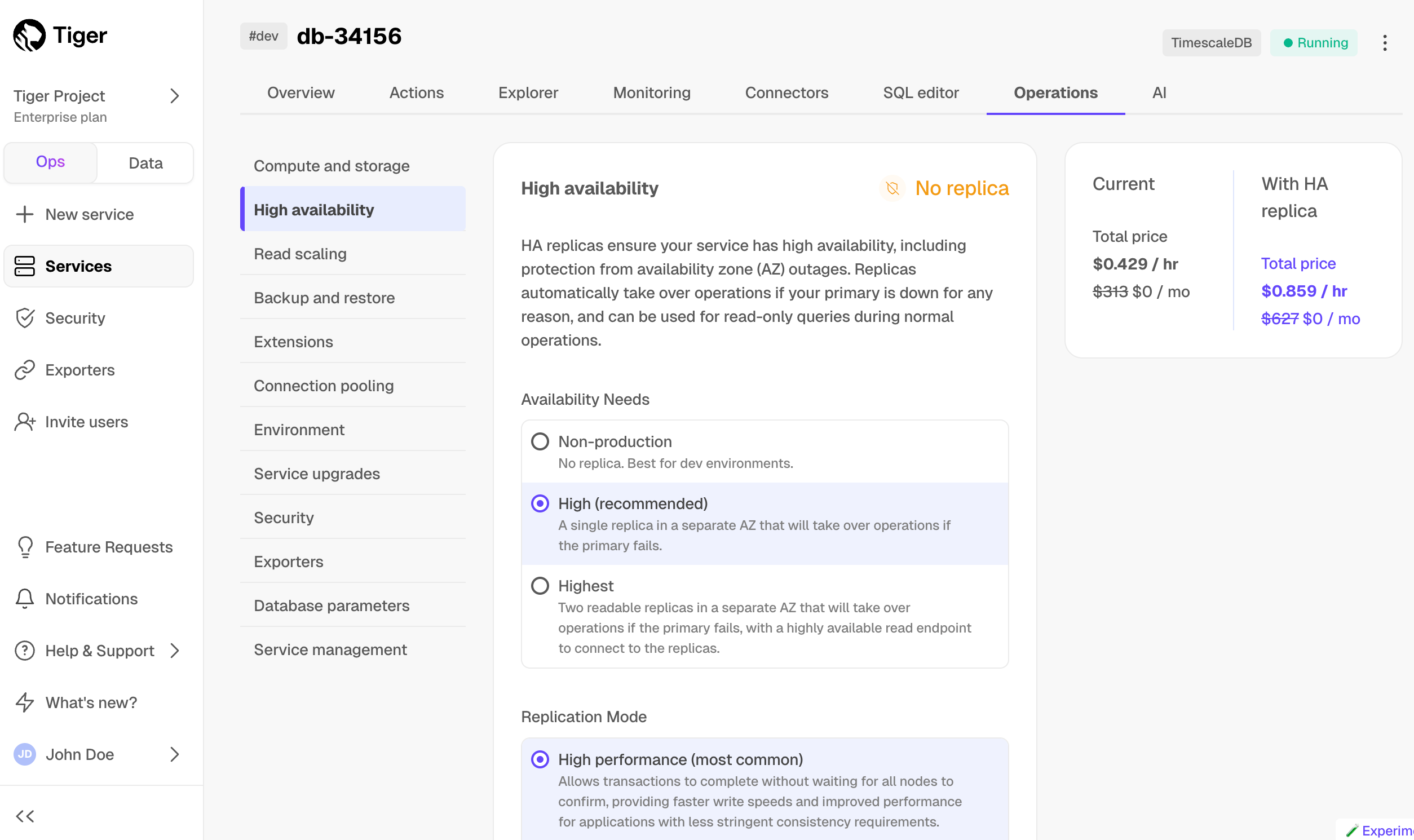

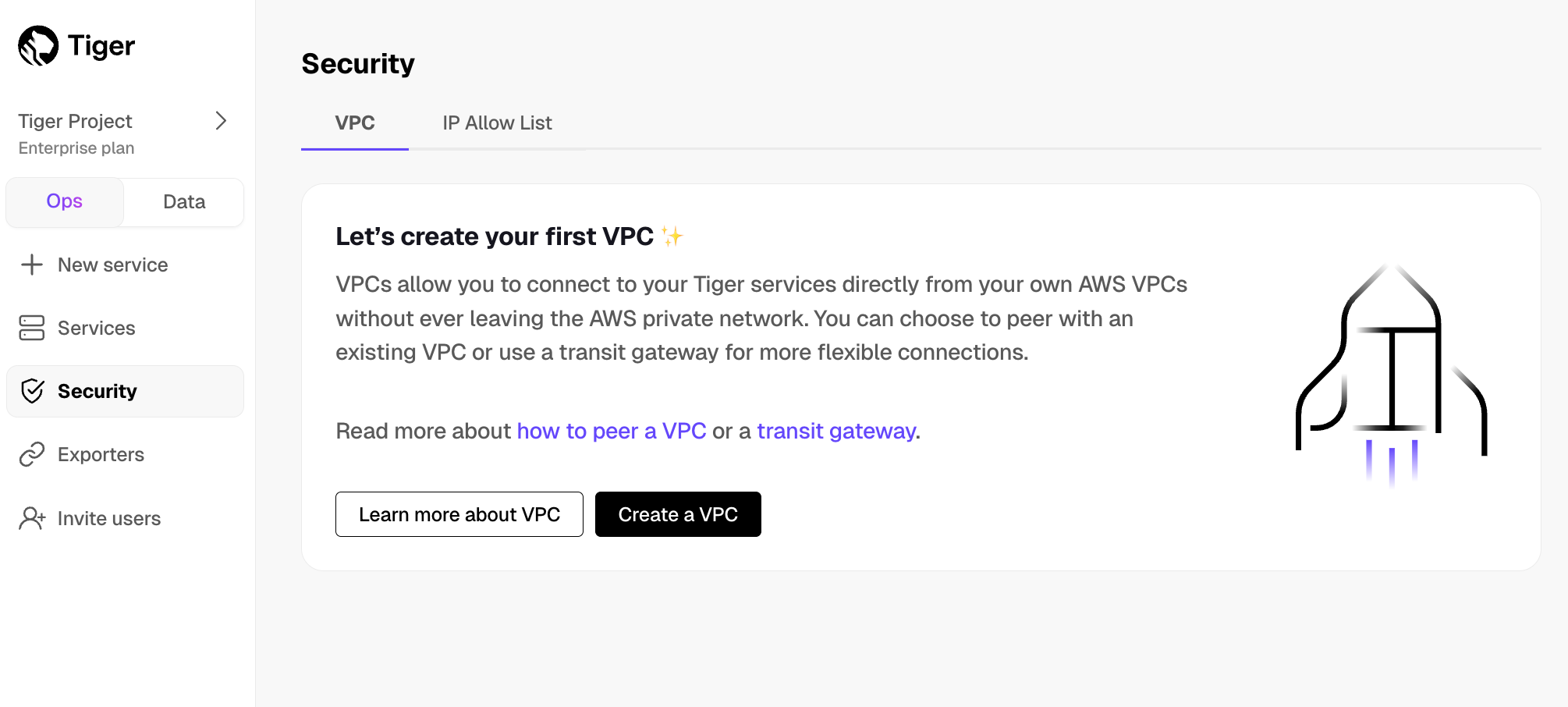

Reduce the risk of downtime and data loss

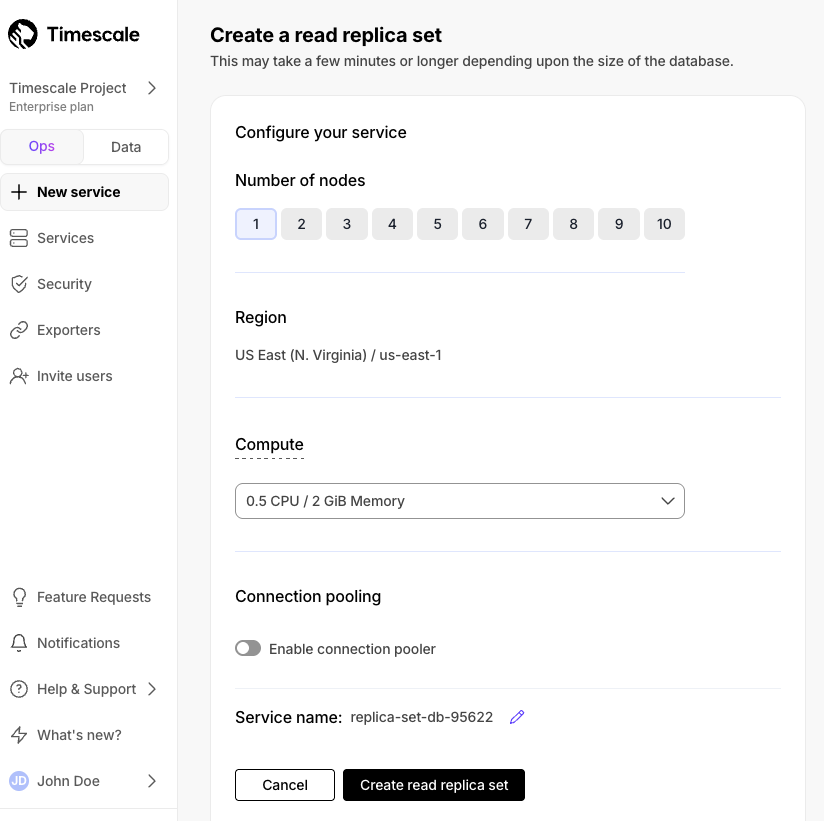

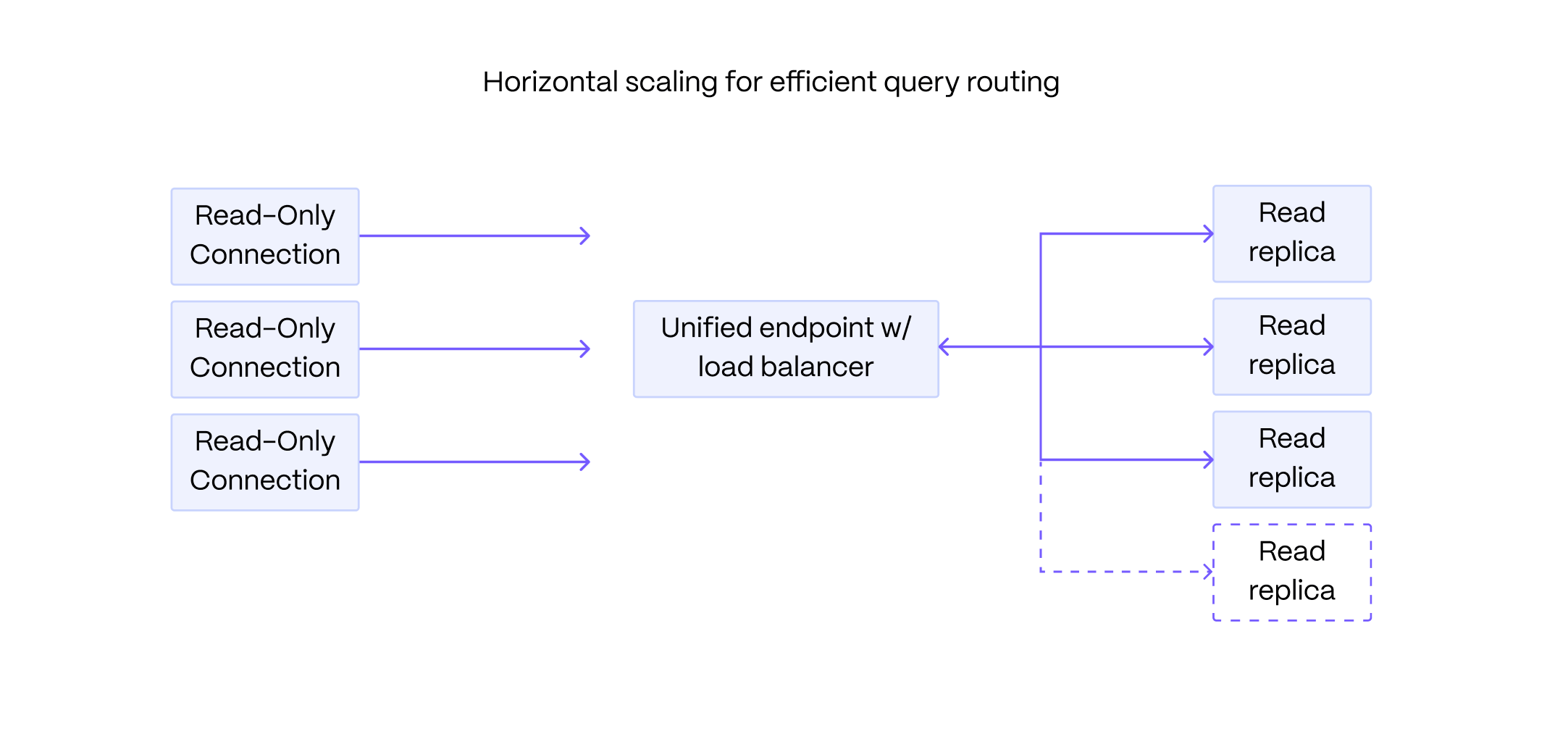

By default, all Tiger Cloud services have rapid recovery enabled. However, if your app has very low tolerance for downtime, Tiger Cloud offers high-availability replicas. HA replicas are exact, up-to-date copies of your database hosted in multiple AWS availability zones (AZ) within the same region as your primary node. HA replicas automatically take over operations if the original primary data node becomes unavailable. The primary node streams its write-ahead log (WAL) to the replicas to minimize the chances of data loss during failover.

- In Tiger Cloud Console, select the service to enable replication for.

- Click

Operations, then selectHigh availability. Choose your replication strategy, then click

Change configuration.In

Change high availability configuration, clickChange config.

For more information, see High availability.

What next? See the use case tutorials, interact with the data in your Tiger Cloud service using your favorite programming language, integrate your Tiger Cloud service with a range of third-party tools, plain old Use Tiger Data products, or dive into the API.

===== PAGE: https://docs.tigerdata.com/getting-started/start-coding-with-timescale/ =====

Start coding with Tiger Data

Easily integrate your app with Tiger Cloud or self-hosted TimescaleDB. Use your favorite programming language to connect to your Tiger Cloud service, create and manage hypertables, then ingest and query data.

"Quick Start: Ruby and TimescaleDB"

Prerequisites

To follow the steps on this page:

- Create a target Tiger Cloud service with the Real-time analytics capability.

You need your connection details. This procedure also works for self-hosted TimescaleDB.

- Install Rails.

Connect a Rails app to your service

Every Tiger Cloud service is a 100% Postgres database hosted in Tiger Cloud with Tiger Data extensions such as TimescaleDB. You connect to your Tiger Cloud service from a standard Rails app configured for Postgres.

Create a new Rails app configured for Postgres

Rails creates and bundles your app, then installs the standard Postgres Gems.

rails new my_app -d=postgresql cd my_appInstall the TimescaleDB gem

Open

Gemfile, add the following line, then save your changes:gem 'timescaledb'In Terminal, run the following command:

bundle install

Connect your app to your Tiger Cloud service

In

<my_app_home>/config/database.ymlupdate the configuration to read securely connect to your Tiger Cloud service by addingurl: <%= ENV['DATABASE_URL'] %>to the default configuration:default: &default adapter: postgresql encoding: unicode pool: <%= ENV.fetch("RAILS_MAX_THREADS") { 5 } %> url: <%= ENV['DATABASE_URL'] %>Set the environment variable for

DATABASE_URLto the value ofService URLfrom your connection detailsexport DATABASE_URL="value of Service URL"Create the database:

- Tiger Cloud: nothing to do. The database is part of your Tiger Cloud service.

Self-hosted TimescaleDB, create the database for the project:

rails db:create

Run migrations:

rails db:migrateVerify the connection from your app to your Tiger Cloud service:

echo "\dx" | rails dbconsole

The result shows the list of extensions in your Tiger Cloud service

| Name | Version | Schema | Description | | -- | -- | -- | -- | | pg_buffercache | 1.5 | public | examine the shared buffer cache| | pg_stat_statements | 1.11 | public | track planning and execution statistics of all SQL statements executed| | plpgsql | 1.0 | pg_catalog | PL/pgSQL procedural language| | postgres_fdw | 1.1 | public | foreign-data wrapper for remote Postgres servers| | timescaledb | 2.18.1 | public | Enables scalable inserts and complex queries for time-series data (Community Edition)| | timescaledb_toolkit | 1.19.0 | public | Library of analytical hyperfunctions, time-series pipelining, and other SQL utilities|

Optimize time-series data in hypertables

Hypertables are Postgres tables designed to simplify and accelerate data analysis. Anything you can do with regular Postgres tables, you can do with hypertables - but much faster and more conveniently.

In this section, you use the helpers in the TimescaleDB gem to create and manage a hypertable.

Generate a migration to create the page loads table

rails generate migration create_page_loads

This creates the <my_app_home>/db/migrate/<migration-datetime>_create_page_loads.rb migration file.

- Add hypertable options

Replace the contents of <my_app_home>/db/migrate/<migration-datetime>_create_page_loads.rb

with the following:

```ruby

class CreatePageLoads < ActiveRecord::Migration[8.0]

def change

hypertable_options = {

time_column: 'created_at',

chunk_time_interval: '1 day',

compress_segmentby: 'path',

compress_orderby: 'created_at',

compress_after: '7 days',

drop_after: '30 days'

}

create_table :page_loads, id: false, primary_key: [:created_at, :user_agent, :path], hypertable: hypertable_options do |t|

t.timestamptz :created_at, null: false

t.string :user_agent

t.string :path

t.float :performance

end

end

end

```

The `id` column is not included in the table. This is because TimescaleDB requires that any `UNIQUE` or `PRIMARY KEY`

indexes on the table include all partitioning columns. In this case, this is the time column. A new

Rails model includes a `PRIMARY KEY` index for id by default: either remove the column or make sure that the index

includes time as part of a "composite key."

For more information, check the Roby docs around composite primary keys.

Create a

PageLoadmodelCreate a new file called

<my_app_home>/app/models/page_load.rband add the following code:class PageLoad < ApplicationRecord extend Timescaledb::ActsAsHypertable include Timescaledb::ContinuousAggregatesHelper acts_as_hypertable time_column: "created_at", segment_by: "path", value_column: "performance" scope :chrome_users, -> { where("user_agent LIKE ?", "%Chrome%") } scope :firefox_users, -> { where("user_agent LIKE ?", "%Firefox%") } scope :safari_users, -> { where("user_agent LIKE ?", "%Safari%") } scope :performance_stats, -> { select("stats_agg(#{value_column}) as stats_agg") } scope :slow_requests, -> { where("performance > ?", 1.0) } scope :fast_requests, -> { where("performance < ?", 0.1) } continuous_aggregates scopes: [:performance_stats], timeframes: [:minute, :hour, :day], refresh_policy: { minute: { start_offset: '3 minute', end_offset: '1 minute', schedule_interval: '1 minute' }, hour: { start_offset: '3 hours', end_offset: '1 hour', schedule_interval: '1 minute' }, day: { start_offset: '3 day', end_offset: '1 day', schedule_interval: '1 minute' } } endRun the migration

rails db:migrate

Insert data your service

The TimescaleDB gem provides efficient ways to insert data into hypertables. This section shows you how to ingest test data into your hypertable.

Create a controller to handle page loads

Create a new file called

<my_app_home>/app/controllers/application_controller.rband add the following code:class ApplicationController < ActionController::Base around_action :track_page_load private def track_page_load start_time = Time.current yield end_time = Time.current PageLoad.create( path: request.path, user_agent: request.user_agent, performance: (end_time - start_time) ) end endGenerate some test data

Use

bin/consoleto join a Rails console session and run the following code to define some random page load access data:def generate_sample_page_loads(total: 1000) time = 1.month.ago paths = %w[/ /about /contact /products /blog] browsers = [ "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.114 Safari/537.36", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:89.0) Gecko/20100101 Firefox/89.0", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.1.1 Safari/605.1.15" ] total.times.map do time = time + rand(60).seconds { path: paths.sample, user_agent: browsers.sample, performance: rand(0.1..2.0), created_at: time, updated_at: time } end endInsert the generated data into your Tiger Cloud service

PageLoad.insert_all(generate_sample_page_loads, returning: false)Validate the test data in your Tiger Cloud service

PageLoad.count PageLoad.first

Reference

This section lists the most common tasks you might perform with the TimescaleDB gem.

Query scopes

The TimescaleDB gem provides several convenient scopes for querying your time-series data.

Built-in time-based scopes:

PageLoad.last_hour.count PageLoad.today.count PageLoad.this_week.count PageLoad.this_month.countBrowser-specific scopes:

PageLoad.chrome_users.last_hour.count PageLoad.firefox_users.last_hour.count PageLoad.safari_users.last_hour.count PageLoad.slow_requests.last_hour.count PageLoad.fast_requests.last_hour.countQuery continuous aggregates:

This query fetches the average and standard deviation from the performance stats for the /products path over the last day.

```ruby

PageLoad::PerformanceStatsPerMinute.last_hour

PageLoad::PerformanceStatsPerHour.last_day

PageLoad::PerformanceStatsPerDay.last_month

stats = PageLoad::PerformanceStatsPerHour.last_day.where(path: '/products').select("average(stats_agg) as average, stddev(stats_agg) as stddev").first

puts "Average: #{stats.average}"

puts "Standard Deviation: #{stats.stddev}"

```

TimescaleDB features

The TimescaleDB gem provides utility methods to access hypertable and chunk information. Every model that uses

the acts_as_hypertable method has access to these methods.

Access hypertable and chunk information

View chunk or hypertable information:

PageLoad.chunks.count PageLoad.hypertable.detailed_sizeCompress/Decompress chunks:

PageLoad.chunks.uncompressed.first.compress! # Compress the first uncompressed chunk PageLoad.chunks.compressed.first.decompress! # Decompress the oldest chunk PageLoad.hypertable.compression_stats # View compression stats

Access hypertable stats

You collect hypertable stats using methods that provide insights into your hypertable's structure, size, and compression status:

Get basic hypertable information:

hypertable = PageLoad.hypertable hypertable.hypertable_name # The name of your hypertable hypertable.schema_name # The schema where the hypertable is locatedGet detailed size information:

hypertable.detailed_size # Get detailed size information for the hypertable hypertable.compression_stats # Get compression statistics hypertable.chunks_detailed_size # Get chunk information hypertable.approximate_row_count # Get approximate row count hypertable.dimensions.map(&:column_name) # Get dimension information hypertable.continuous_aggregates.map(&:view_name) # Get continuous aggregate view names

Continuous aggregates

The continuous_aggregates method generates a class for each continuous aggregate.

Get all the continuous aggregate classes:

PageLoad.descendants # Get all continuous aggregate classesManually refresh a continuous aggregate:

PageLoad.refresh_aggregatesCreate or drop a continuous aggregate:

Create or drop all the continuous aggregates in the proper order to build them hierarchically. See more about how it works in this blog post.

PageLoad.create_continuous_aggregates

PageLoad.drop_continuous_aggregates

Next steps

Now that you have integrated the ruby gem into your app:

- Learn more about the TimescaleDB gem.

- Check out the official docs.

- Follow the LTTB, Open AI long-term storage, and candlesticks tutorials.

Prerequisites

To follow the steps on this page:

- Create a target Tiger Cloud service with the Real-time analytics capability.

You need your connection details. This procedure also works for self-hosted TimescaleDB.

- Install the

psycopg2library.

For more information, see the psycopg2 documentation.

- Create a Python virtual environment. [](#)

Connect to TimescaleDB

In this section, you create a connection to TimescaleDB using the psycopg2

library. This library is one of the most popular Postgres libraries for

Python. It allows you to execute raw SQL queries efficiently and safely, and

prevents common attacks such as SQL injection.

Import the psycogpg2 library:

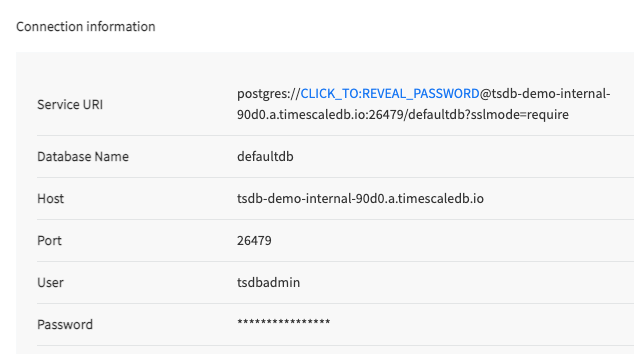

import psycopg2Locate your TimescaleDB credentials and use them to compose a connection string for

psycopg2.You'll need:

- password

- username

- host URL

- port

- database name

Compose your connection string variable as a libpq connection string, using this format:

CONNECTION = "postgres://username:password@host:port/dbname"If you're using a hosted version of TimescaleDB, or generally require an SSL connection, use this version instead:

CONNECTION = "postgres://username:password@host:port/dbname?sslmode=require"Alternatively you can specify each parameter in the connection string as follows

CONNECTION = "dbname=tsdb user=tsdbadmin password=secret host=host.com port=5432 sslmode=require"This method of composing a connection string is for test or development purposes only. For production, use environment variables for sensitive details like your password, hostname, and port number.

Use the

psycopg2connect function to create a new database session and create a new cursor object to interact with the database.In your

mainfunction, add these lines:CONNECTION = "postgres://username:password@host:port/dbname" with psycopg2.connect(CONNECTION) as conn: cursor = conn.cursor() # use the cursor to interact with your database # cursor.execute("SELECT * FROM table")Alternatively, you can create a connection object and pass the object around as needed, like opening a cursor to perform database operations:

CONNECTION = "postgres://username:password@host:port/dbname" conn = psycopg2.connect(CONNECTION) cursor = conn.cursor() # use the cursor to interact with your database cursor.execute("SELECT 'hello world'") print(cursor.fetchone())

Create a relational table

In this section, you create a table called sensors which holds the ID, type,

and location of your fictional sensors. Additionally, you create a hypertable

called sensor_data which holds the measurements of those sensors. The

measurements contain the time, sensor_id, temperature reading, and CPU

percentage of the sensors.

Compose a string which contains the SQL statement to create a relational table. This example creates a table called

sensors, with columnsid,typeandlocation:query_create_sensors_table = """CREATE TABLE sensors ( id SERIAL PRIMARY KEY, type VARCHAR(50), location VARCHAR(50) ); """Open a cursor, execute the query you created in the previous step, and commit the query to make the changes persistent. Afterward, close the cursor to clean up:

cursor = conn.cursor() # see definition in Step 1 cursor.execute(query_create_sensors_table) conn.commit() cursor.close()

Create a hypertable

When you have created the relational table, you can create a hypertable. Creating tables and indexes, altering tables, inserting data, selecting data, and most other tasks are executed on the hypertable.

Create a string variable that contains the

CREATE TABLESQL statement for your hypertable. Notice how the hypertable has the compulsory time column:# create sensor data hypertable query_create_sensordata_table = """CREATE TABLE sensor_data ( time TIMESTAMPTZ NOT NULL, sensor_id INTEGER, temperature DOUBLE PRECISION, cpu DOUBLE PRECISION, FOREIGN KEY (sensor_id) REFERENCES sensors (id) ); """Formulate a

SELECTstatement that converts thesensor_datatable to a hypertable. You must specify the table name to convert to a hypertable, and the name of the time column as the two arguments. For more information, see thecreate_hypertabledocs:query_create_sensordata_hypertable = "SELECT create_hypertable('sensor_data', by_range('time'));"The

by_rangedimension builder is an addition to TimescaleDB 2.13.Open a cursor with the connection, execute the statements from the previous steps, commit your changes, and close the cursor:

cursor = conn.cursor() cursor.execute(query_create_sensordata_table) cursor.execute(query_create_sensordata_hypertable) # commit changes to the database to make changes persistent conn.commit() cursor.close()

Insert rows of data

You can insert data into your hypertables in several different ways. In this

section, you can use psycopg2 with prepared statements, or you can use

pgcopy for a faster insert.

This example inserts a list of tuples, or relational data, called

sensors, into the relational table namedsensors. Open a cursor with a connection to the database, use prepared statements to formulate theINSERTSQL statement, and then execute that statement:sensors = [('a', 'floor'), ('a', 'ceiling'), ('b', 'floor'), ('b', 'ceiling')] cursor = conn.cursor() for sensor in sensors: try: cursor.execute("INSERT INTO sensors (type, location) VALUES (%s, %s);", (sensor[0], sensor[1])) except (Exception, psycopg2.Error) as error: print(error.pgerror) conn.commit()[](#)Alternatively, you can pass variables to the

cursor.executefunction and separate the formulation of the SQL statement,SQL, from the data being passed with it into the prepared statement,data:SQL = "INSERT INTO sensors (type, location) VALUES (%s, %s);" sensors = [('a', 'floor'), ('a', 'ceiling'), ('b', 'floor'), ('b', 'ceiling')] cursor = conn.cursor() for sensor in sensors: try: data = (sensor[0], sensor[1]) cursor.execute(SQL, data) except (Exception, psycopg2.Error) as error: print(error.pgerror) conn.commit()

If you choose to use pgcopy instead, install the pgcopy package

using pip, and then add this line to your list of

import statements:

from pgcopy import CopyManager

Generate some random sensor data using the

generate_seriesfunction provided by Postgres. This example inserts a total of 480 rows of data (4 readings, every 5 minutes, for 24 hours). In your application, this would be the query that saves your time-series data into the hypertable:# for sensors with ids 1-4 for id in range(1, 4, 1): data = (id,) # create random data simulate_query = """SELECT generate_series(now() - interval '24 hour', now(), interval '5 minute') AS time, %s as sensor_id, random()*100 AS temperature, random() AS cpu; """ cursor.execute(simulate_query, data) values = cursor.fetchall()Define the column names of the table you want to insert data into. This example uses the

sensor_datahypertable created earlier. This hypertable consists of columns namedtime,sensor_id,temperatureandcpu. The column names are defined in a list of strings calledcols:cols = ['time', 'sensor_id', 'temperature', 'cpu']Create an instance of the

pgcopyCopyManager,mgr, and pass the connection variable, hypertable name, and list of column names. Then use thecopyfunction of the CopyManager to insert the data into the database quickly usingpgcopy.mgr = CopyManager(conn, 'sensor_data', cols) mgr.copy(values)Commit to persist changes:

conn.commit()[](#)The full sample code to insert data into TimescaleDB using

pgcopy, using the example of sensor data from four sensors:# insert using pgcopy def fast_insert(conn): cursor = conn.cursor() # for sensors with ids 1-4 for id in range(1, 4, 1): data = (id,) # create random data simulate_query = """SELECT generate_series(now() - interval '24 hour', now(), interval '5 minute') AS time, %s as sensor_id, random()*100 AS temperature, random() AS cpu; """ cursor.execute(simulate_query, data) values = cursor.fetchall() # column names of the table you're inserting into cols = ['time', 'sensor_id', 'temperature', 'cpu'] # create copy manager with the target table and insert mgr = CopyManager(conn, 'sensor_data', cols) mgr.copy(values) # commit after all sensor data is inserted # could also commit after each sensor insert is done conn.commit()[](#)You can also check if the insertion worked:

cursor.execute("SELECT * FROM sensor_data LIMIT 5;") print(cursor.fetchall())

Execute a query

This section covers how to execute queries against your database.

The first procedure shows a simple SELECT * query. For more complex queries,

you can use prepared statements to ensure queries are executed safely against

the database.

For more information about properly using placeholders in psycopg2, see the

basic module usage document.

For more information about how to execute more complex queries in psycopg2,

see the psycopg2 documentation.

Execute a query

Define the SQL query you'd like to run on the database. This example is a simple

SELECTstatement querying each row from the previously createdsensor_datatable.query = "SELECT * FROM sensor_data;"Open a cursor from the existing database connection,

conn, and then execute the query you defined:cursor = conn.cursor() query = "SELECT * FROM sensor_data;" cursor.execute(query)To access all resulting rows returned by your query, use one of

pyscopg2's results retrieval methods, such asfetchall()orfetchmany(). This example prints the results of the query, row by row. Note that the result offetchall()is a list of tuples, so you can handle them accordingly:cursor = conn.cursor() query = "SELECT * FROM sensor_data;" cursor.execute(query) for row in cursor.fetchall(): print(row) cursor.close()[](#)If you want a list of dictionaries instead, you can define the cursor using

DictCursor:cursor = conn.cursor(cursor_factory=psycopg2.extras.DictCursor)Using this cursor,

cursor.fetchall()returns a list of dictionary-like objects.

For more complex queries, you can use prepared statements to ensure queries are executed safely against the database.

Execute queries using prepared statements

Write the query using prepared statements:

# query with placeholders cursor = conn.cursor() query = """ SELECT time_bucket('5 minutes', time) AS five_min, avg(cpu) FROM sensor_data JOIN sensors ON sensors.id = sensor_data.sensor_id WHERE sensors.location = %s AND sensors.type = %s GROUP BY five_min ORDER BY five_min DESC; """ location = "floor" sensor_type = "a" data = (location, sensor_type) cursor.execute(query, data) results = cursor.fetchall()

Prerequisites

To follow the steps on this page:

- Create a target Tiger Cloud service with the Real-time analytics capability.

You need your connection details. This procedure also works for self-hosted TimescaleDB.

Connect to TimescaleDB

In this section, you create a connection to TimescaleDB with a common Node.js ORM (object relational mapper) called Sequelize.

At the command prompt, initialize a new Node.js app:

npm init -yThis creates a

package.jsonfile in your directory, which contains all of the dependencies for your project. It looks something like this:{ "name": "node-sample", "version": "1.0.0", "description": "", "main": "index.js", "scripts": { "test": "echo \"Error: no test specified\" && exit 1" }, "keywords": [], "author": "", "license": "ISC" }Install Express.js:

npm install expressCreate a simple web page to check the connection. Create a new file called

index.js, with this content:const express = require('express') const app = express() const port = 3000; app.use(express.json()); app.get('/', (req, res) => res.send('Hello World!')) app.listen(port, () => console.log(`Example app listening at http://localhost:${port}`))Test your connection by starting the application:

node index.js

In your web browser, navigate to http://localhost:3000. If the connection

is successful, it shows "Hello World!"

Add Sequelize to your project:

npm install sequelize sequelize-cli pg pg-hstoreLocate your TimescaleDB credentials and use them to compose a connection string for Sequelize.

You'll need:

- password

- username

- host URL

- port

- database name

Compose your connection string variable, using this format:

'postgres://<user>:<password>@<host>:<port>/<dbname>'Open the

index.jsfile you created. Require Sequelize in the application, and declare the connection string:const Sequelize = require('sequelize') const sequelize = new Sequelize('postgres://<user>:<password>@<host>:<port>/<dbname>', { dialect: 'postgres', protocol: 'postgres', dialectOptions: { ssl: { require: true, rejectUnauthorized: false } } })Make sure you add the SSL settings in the

dialectOptionssections. You can't connect to TimescaleDB using SSL without them.You can test the connection by adding these lines to

index.jsafter theapp.getstatement:sequelize.authenticate().then(() => { console.log('Connection has been established successfully.'); }).catch(err => { console.error('Unable to connect to the database:', err); });Start the application on the command line:

node index.jsIf the connection is successful, you'll get output like this:

Example app listening at http://localhost:3000 Executing (default): SELECT 1+1 AS result Connection has been established successfully.

Create a relational table

In this section, you create a relational table called page_loads.

Use the Sequelize command line tool to create a table and model called

page_loads:npx sequelize model:generate --name page_loads \ --attributes userAgent:string,time:dateThe output looks similar to this:

Sequelize CLI [Node: 12.16.2, CLI: 5.5.1, ORM: 5.21.11] New model was created at <PATH>. New migration was created at <PATH>.Edit the migration file so that it sets up a migration key:

'use strict'; module.exports = { up: async (queryInterface, Sequelize) => { await queryInterface.createTable('page_loads', { userAgent: { primaryKey: true, type: Sequelize.STRING }, time: { primaryKey: true, type: Sequelize.DATE } }); }, down: async (queryInterface, Sequelize) => { await queryInterface.dropTable('page_loads'); } };Migrate the change and make sure that it is reflected in the database:

npx sequelize db:migrateThe output looks similar to this:

Sequelize CLI [Node: 12.16.2, CLI: 5.5.1, ORM: 5.21.11] Loaded configuration file "config/config.json". Using environment "development". == 20200528195725-create-page-loads: migrating ======= == 20200528195725-create-page-loads: migrated (0.443s)Create the

PageLoadsmodel in your code. In theindex.jsfile, above theapp.usestatement, add these lines:let PageLoads = sequelize.define('page_loads', { userAgent: {type: Sequelize.STRING, primaryKey: true }, time: {type: Sequelize.DATE, primaryKey: true } }, { timestamps: false });Instantiate a

PageLoadsobject and save it to the database.

Create a hypertable

When you have created the relational table, you can create a hypertable. Creating tables and indexes, altering tables, inserting data, selecting data, and most other tasks are executed on the hypertable.

Create a migration to modify the

page_loadsrelational table, and change it to a hypertable by first running the following command:npx sequelize migration:generate --name add_hypertableThe output looks similar to this:

Sequelize CLI [Node: 12.16.2, CLI: 5.5.1, ORM: 5.21.11] migrations folder at <PATH> already exists. New migration was created at <PATH>/20200601202912-add_hypertable.js .In the

migrationsfolder, there is now a new file. Open the file, and add this content:'use strict'; module.exports = { up: (queryInterface, Sequelize) => { return queryInterface.sequelize.query("SELECT create_hypertable('page_loads', by_range('time'));"); }, down: (queryInterface, Sequelize) => { } };The

by_rangedimension builder is an addition to TimescaleDB 2.13.At the command prompt, run the migration command:

npx sequelize db:migrateThe output looks similar to this:

Sequelize CLI [Node: 12.16.2, CLI: 5.5.1, ORM: 5.21.11] Loaded configuration file "config/config.json". Using environment "development". == 20200601202912-add_hypertable: migrating ======= == 20200601202912-add_hypertable: migrated (0.426s)

Insert rows of data

This section covers how to insert data into your hypertables.

In the

index.jsfile, modify the/route to get theuser-agentfrom the request object (req) and the current timestamp. Then, call thecreatemethod onPageLoadsmodel, supplying the user agent and timestamp parameters. Thecreatecall executes anINSERTon the database:app.get('/', async (req, res) => { // get the user agent and current time const userAgent = req.get('user-agent'); const time = new Date().getTime(); try { // insert the record await PageLoads.create({ userAgent, time }); // send response res.send('Inserted!'); } catch (e) { console.log('Error inserting data', e) } })

Execute a query

This section covers how to execute queries against your database. In this example, every time the page is reloaded, all information currently in the table is displayed.

Modify the

/route in theindex.jsfile to call the SequelizefindAllfunction and retrieve all data from thepage_loadstable using thePageLoadsmodel:app.get('/', async (req, res) => { // get the user agent and current time const userAgent = req.get('user-agent'); const time = new Date().getTime(); try { // insert the record await PageLoads.create({ userAgent, time }); // now display everything in the table const messages = await PageLoads.findAll(); res.send(messages); } catch (e) { console.log('Error inserting data', e) } })

Now, when you reload the page, you should see all of the rows currently in the

page_loads table.

Prerequisites

To follow the steps on this page:

- Create a target Tiger Cloud service with the Real-time analytics capability.

You need your connection details. This procedure also works for self-hosted TimescaleDB.

- Install Go.

- Install the PGX driver for Go.

Connect to your Tiger Cloud service

In this section, you create a connection to Tiger Cloud using the PGX driver. PGX is a toolkit designed to help Go developers work directly with Postgres. You can use it to help your Go application interact directly with TimescaleDB.

Locate your TimescaleDB credentials and use them to compose a connection string for PGX.

You'll need:

- password

- username

- host URL

- port number

- database name

Compose your connection string variable as a libpq connection string, using this format:

connStr := "postgres://username:password@host:port/dbname"If you're using a hosted version of TimescaleDB, or if you need an SSL connection, use this format instead:

connStr := "postgres://username:password@host:port/dbname?sslmode=require"[](#)You can check that you're connected to your database with this hello world program:

package main import ( "context" "fmt" "os" "github.com/jackc/pgx/v5" ) //connect to database using a single connection func main() { /***********************************************/ /* Single Connection to TimescaleDB/ PostgreSQL */ /***********************************************/ ctx := context.Background() connStr := "yourConnectionStringHere" conn, err := pgx.Connect(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer conn.Close(ctx) //run a simple query to check our connection var greeting string err = conn.QueryRow(ctx, "select 'Hello, Timescale!'").Scan(&greeting) if err != nil { fmt.Fprintf(os.Stderr, "QueryRow failed: %v\n", err) os.Exit(1) } fmt.Println(greeting) }If you'd like to specify your connection string as an environment variable, you can use this syntax to access it in place of the

connStrvariable:os.Getenv("DATABASE_CONNECTION_STRING")

Alternatively, you can connect to TimescaleDB using a connection pool. Connection pooling is useful to conserve computing resources, and can also result in faster database queries:

To create a connection pool that can be used for concurrent connections to your database, use the

pgxpool.New()function instead ofpgx.Connect(). Also note that this script importsgithub.com/jackc/pgx/v5/pgxpool, instead ofpgx/v5which was used to create a single connection:package main import ( "context" "fmt" "os" "github.com/jackc/pgx/v5/pgxpool" ) func main() { ctx := context.Background() connStr := "yourConnectionStringHere" dbpool, err := pgxpool.New(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer dbpool.Close() //run a simple query to check our connection var greeting string err = dbpool.QueryRow(ctx, "select 'Hello, Tiger Data (but concurrently)'").Scan(&greeting) if err != nil { fmt.Fprintf(os.Stderr, "QueryRow failed: %v\n", err) os.Exit(1) } fmt.Println(greeting) }

Create a relational table

In this section, you create a table called sensors which holds the ID, type,

and location of your fictional sensors. Additionally, you create a hypertable

called sensor_data which holds the measurements of those sensors. The

measurements contain the time, sensor_id, temperature reading, and CPU

percentage of the sensors.

Compose a string that contains the SQL statement to create a relational table. This example creates a table called

sensors, with columns for ID, type, and location:queryCreateTable := `CREATE TABLE sensors (id SERIAL PRIMARY KEY, type VARCHAR(50), location VARCHAR(50));`Execute the

CREATE TABLEstatement with theExec()function on thedbpoolobject, using the arguments of the current context and the statement string you created:package main import ( "context" "fmt" "os" "github.com/jackc/pgx/v5/pgxpool" ) func main() { ctx := context.Background() connStr := "yourConnectionStringHere" dbpool, err := pgxpool.New(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer dbpool.Close() /********************************************/ /* Create relational table */ /********************************************/ //Create relational table called sensors queryCreateTable := `CREATE TABLE sensors (id SERIAL PRIMARY KEY, type VARCHAR(50), location VARCHAR(50));` _, err = dbpool.Exec(ctx, queryCreateTable) if err != nil { fmt.Fprintf(os.Stderr, "Unable to create SENSORS table: %v\n", err) os.Exit(1) } fmt.Println("Successfully created relational table SENSORS") }

Generate a hypertable

When you have created the relational table, you can create a hypertable. Creating tables and indexes, altering tables, inserting data, selecting data, and most other tasks are executed on the hypertable.

Create a variable for the

CREATE TABLE SQLstatement for your hypertable. Notice how the hypertable has the compulsory time column:queryCreateTable := `CREATE TABLE sensor_data ( time TIMESTAMPTZ NOT NULL, sensor_id INTEGER, temperature DOUBLE PRECISION, cpu DOUBLE PRECISION, FOREIGN KEY (sensor_id) REFERENCES sensors (id)); `Formulate the

SELECTstatement to convert the table into a hypertable. You must specify the table name to convert to a hypertable, and its time column name as the second argument. For more information, see thecreate_hypertabledocs:queryCreateHypertable := `SELECT create_hypertable('sensor_data', by_range('time'));`The

by_rangedimension builder is an addition to TimescaleDB 2.13.Execute the

CREATE TABLEstatement andSELECTstatement which converts the table into a hypertable. You can do this by calling theExec()function on thedbpoolobject, using the arguments of the current context, and thequeryCreateTableandqueryCreateHypertablestatement strings:package main import ( "context" "fmt" "os" "github.com/jackc/pgx/v5/pgxpool" ) func main() { ctx := context.Background() connStr := "yourConnectionStringHere" dbpool, err := pgxpool.New(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer dbpool.Close() /********************************************/ /* Create Hypertable */ /********************************************/ // Create hypertable of time-series data called sensor_data queryCreateTable := `CREATE TABLE sensor_data ( time TIMESTAMPTZ NOT NULL, sensor_id INTEGER, temperature DOUBLE PRECISION, cpu DOUBLE PRECISION, FOREIGN KEY (sensor_id) REFERENCES sensors (id)); ` queryCreateHypertable := `SELECT create_hypertable('sensor_data', by_range('time'));` //execute statement _, err = dbpool.Exec(ctx, queryCreateTable+queryCreateHypertable) if err != nil { fmt.Fprintf(os.Stderr, "Unable to create the `sensor_data` hypertable: %v\n", err) os.Exit(1) } fmt.Println("Successfully created hypertable `sensor_data`") }

Insert rows of data

You can insert rows into your database in a couple of different

ways. Each of these example inserts the data from the two arrays, sensorTypes and

sensorLocations, into the relational table named sensors.

The first example inserts a single row of data at a time. The second example inserts multiple rows of data. The third example uses batch inserts to speed up the process.

Open a connection pool to the database, then use the prepared statements to formulate an

INSERTSQL statement, and execute it:package main import ( "context" "fmt" "os" "github.com/jackc/pgx/v5/pgxpool" ) func main() { ctx := context.Background() connStr := "yourConnectionStringHere" dbpool, err := pgxpool.New(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer dbpool.Close() /********************************************/ /* INSERT into relational table */ /********************************************/ //Insert data into relational table // Slices of sample data to insert // observation i has type sensorTypes[i] and location sensorLocations[i] sensorTypes := []string{"a", "a", "b", "b"} sensorLocations := []string{"floor", "ceiling", "floor", "ceiling"} for i := range sensorTypes { //INSERT statement in SQL queryInsertMetadata := `INSERT INTO sensors (type, location) VALUES ($1, $2);` //Execute INSERT command _, err := dbpool.Exec(ctx, queryInsertMetadata, sensorTypes[i], sensorLocations[i]) if err != nil { fmt.Fprintf(os.Stderr, "Unable to insert data into database: %v\n", err) os.Exit(1) } fmt.Printf("Inserted sensor (%s, %s) into database \n", sensorTypes[i], sensorLocations[i]) } fmt.Println("Successfully inserted all sensors into database") }

Instead of inserting a single row of data at a time, you can use this procedure to insert multiple rows of data, instead:

This example uses Postgres to generate some sample time-series to insert into the

sensor_datahypertable. Define the SQL statement to generate the data, calledqueryDataGeneration. Then use the.Query()function to execute the statement and return the sample data. The data returned by the query is stored inresults, a slice of structs, which is then used as a source to insert data into the hypertable:package main import ( "context" "fmt" "os" "time" "github.com/jackc/pgx/v5/pgxpool" ) func main() { ctx := context.Background() connStr := "yourConnectionStringHere" dbpool, err := pgxpool.New(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer dbpool.Close() // Generate data to insert //SQL query to generate sample data queryDataGeneration := ` SELECT generate_series(now() - interval '24 hour', now(), interval '5 minute') AS time, floor(random() * (3) + 1)::int as sensor_id, random()*100 AS temperature, random() AS cpu ` //Execute query to generate samples for sensor_data hypertable rows, err := dbpool.Query(ctx, queryDataGeneration) if err != nil { fmt.Fprintf(os.Stderr, "Unable to generate sensor data: %v\n", err) os.Exit(1) } defer rows.Close() fmt.Println("Successfully generated sensor data") //Store data generated in slice results type result struct { Time time.Time SensorId int Temperature float64 CPU float64 } var results []result for rows.Next() { var r result err = rows.Scan(&r.Time, &r.SensorId, &r.Temperature, &r.CPU) if err != nil { fmt.Fprintf(os.Stderr, "Unable to scan %v\n", err) os.Exit(1) } results = append(results, r) } // Any errors encountered by rows.Next or rows.Scan are returned here if rows.Err() != nil { fmt.Fprintf(os.Stderr, "rows Error: %v\n", rows.Err()) os.Exit(1) } // Check contents of results slice fmt.Println("Contents of RESULTS slice") for i := range results { var r result r = results[i] fmt.Printf("Time: %s | ID: %d | Temperature: %f | CPU: %f |\n", &r.Time, r.SensorId, r.Temperature, r.CPU) } }Formulate an SQL insert statement for the

sensor_datahypertable://SQL query to generate sample data queryInsertTimeseriesData := ` INSERT INTO sensor_data (time, sensor_id, temperature, cpu) VALUES ($1, $2, $3, $4); `Execute the SQL statement for each sample in the results slice:

//Insert contents of results slice into TimescaleDB for i := range results { var r result r = results[i] _, err := dbpool.Exec(ctx, queryInsertTimeseriesData, r.Time, r.SensorId, r.Temperature, r.CPU) if err != nil { fmt.Fprintf(os.Stderr, "Unable to insert sample into TimescaleDB %v\n", err) os.Exit(1) } defer rows.Close() } fmt.Println("Successfully inserted samples into sensor_data hypertable")[](#)This example

main.gogenerates sample data and inserts it into thesensor_datahypertable:package main import ( "context" "fmt" "os" "time" "github.com/jackc/pgx/v5/pgxpool" ) func main() { /********************************************/ /* Connect using Connection Pool */ /********************************************/ ctx := context.Background() connStr := "yourConnectionStringHere" dbpool, err := pgxpool.New(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer dbpool.Close() /********************************************/ /* Insert data into hypertable */ /********************************************/ // Generate data to insert //SQL query to generate sample data queryDataGeneration := ` SELECT generate_series(now() - interval '24 hour', now(), interval '5 minute') AS time, floor(random() * (3) + 1)::int as sensor_id, random()*100 AS temperature, random() AS cpu ` //Execute query to generate samples for sensor_data hypertable rows, err := dbpool.Query(ctx, queryDataGeneration) if err != nil { fmt.Fprintf(os.Stderr, "Unable to generate sensor data: %v\n", err) os.Exit(1) } defer rows.Close() fmt.Println("Successfully generated sensor data") //Store data generated in slice results type result struct { Time time.Time SensorId int Temperature float64 CPU float64 } var results []result for rows.Next() { var r result err = rows.Scan(&r.Time, &r.SensorId, &r.Temperature, &r.CPU) if err != nil { fmt.Fprintf(os.Stderr, "Unable to scan %v\n", err) os.Exit(1) } results = append(results, r) } // Any errors encountered by rows.Next or rows.Scan are returned here if rows.Err() != nil { fmt.Fprintf(os.Stderr, "rows Error: %v\n", rows.Err()) os.Exit(1) } // Check contents of results slice fmt.Println("Contents of RESULTS slice") for i := range results { var r result r = results[i] fmt.Printf("Time: %s | ID: %d | Temperature: %f | CPU: %f |\n", &r.Time, r.SensorId, r.Temperature, r.CPU) } //Insert contents of results slice into TimescaleDB //SQL query to generate sample data queryInsertTimeseriesData := ` INSERT INTO sensor_data (time, sensor_id, temperature, cpu) VALUES ($1, $2, $3, $4); ` //Insert contents of results slice into TimescaleDB for i := range results { var r result r = results[i] _, err := dbpool.Exec(ctx, queryInsertTimeseriesData, r.Time, r.SensorId, r.Temperature, r.CPU) if err != nil { fmt.Fprintf(os.Stderr, "Unable to insert sample into TimescaleDB %v\n", err) os.Exit(1) } defer rows.Close() } fmt.Println("Successfully inserted samples into sensor_data hypertable") }

Inserting multiple rows of data using this method executes as many insert

statements as there are samples to be inserted. This can make ingestion of data

slow. To speed up ingestion, you can batch insert data instead.

Here's a sample pattern for how to do so, using the sample data you generated in

the previous procedure. It uses the pgx Batch object:

This example batch inserts data into the database:

package main import ( "context" "fmt" "os" "time" "github.com/jackc/pgx/v5" "github.com/jackc/pgx/v5/pgxpool" ) func main() { /********************************************/ /* Connect using Connection Pool */ /********************************************/ ctx := context.Background() connStr := "yourConnectionStringHere" dbpool, err := pgxpool.New(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer dbpool.Close() // Generate data to insert //SQL query to generate sample data queryDataGeneration := ` SELECT generate_series(now() - interval '24 hour', now(), interval '5 minute') AS time, floor(random() * (3) + 1)::int as sensor_id, random()*100 AS temperature, random() AS cpu ` //Execute query to generate samples for sensor_data hypertable rows, err := dbpool.Query(ctx, queryDataGeneration) if err != nil { fmt.Fprintf(os.Stderr, "Unable to generate sensor data: %v\n", err) os.Exit(1) } defer rows.Close() fmt.Println("Successfully generated sensor data") //Store data generated in slice results type result struct { Time time.Time SensorId int Temperature float64 CPU float64 } var results []result for rows.Next() { var r result err = rows.Scan(&r.Time, &r.SensorId, &r.Temperature, &r.CPU) if err != nil { fmt.Fprintf(os.Stderr, "Unable to scan %v\n", err) os.Exit(1) } results = append(results, r) } // Any errors encountered by rows.Next or rows.Scan are returned here if rows.Err() != nil { fmt.Fprintf(os.Stderr, "rows Error: %v\n", rows.Err()) os.Exit(1) } // Check contents of results slice /*fmt.Println("Contents of RESULTS slice") for i := range results { var r result r = results[i] fmt.Printf("Time: %s | ID: %d | Temperature: %f | CPU: %f |\n", &r.Time, r.SensorId, r.Temperature, r.CPU) }*/ //Insert contents of results slice into TimescaleDB //SQL query to generate sample data queryInsertTimeseriesData := ` INSERT INTO sensor_data (time, sensor_id, temperature, cpu) VALUES ($1, $2, $3, $4); ` /********************************************/ /* Batch Insert into TimescaleDB */ /********************************************/ //create batch batch := &pgx.Batch{} //load insert statements into batch queue for i := range results { var r result r = results[i] batch.Queue(queryInsertTimeseriesData, r.Time, r.SensorId, r.Temperature, r.CPU) } batch.Queue("select count(*) from sensor_data") //send batch to connection pool br := dbpool.SendBatch(ctx, batch) //execute statements in batch queue _, err = br.Exec() if err != nil { fmt.Fprintf(os.Stderr, "Unable to execute statement in batch queue %v\n", err) os.Exit(1) } fmt.Println("Successfully batch inserted data") //Compare length of results slice to size of table fmt.Printf("size of results: %d\n", len(results)) //check size of table for number of rows inserted // result of last SELECT statement var rowsInserted int err = br.QueryRow().Scan(&rowsInserted) fmt.Printf("size of table: %d\n", rowsInserted) err = br.Close() if err != nil { fmt.Fprintf(os.Stderr, "Unable to closer batch %v\n", err) os.Exit(1) } }

Execute a query

This section covers how to execute queries against your database.

Define the SQL query you'd like to run on the database. This example uses a SQL query that combines time-series and relational data. It returns the average CPU values for every 5 minute interval, for sensors located on location

ceilingand of typea:// Formulate query in SQL // Note the use of prepared statement placeholders $1 and $2 queryTimebucketFiveMin := ` SELECT time_bucket('5 minutes', time) AS five_min, avg(cpu) FROM sensor_data JOIN sensors ON sensors.id = sensor_data.sensor_id WHERE sensors.location = $1 AND sensors.type = $2 GROUP BY five_min ORDER BY five_min DESC; `Use the

.Query()function to execute the query string. Make sure you specify the relevant placeholders://Execute query on TimescaleDB rows, err := dbpool.Query(ctx, queryTimebucketFiveMin, "ceiling", "a") if err != nil { fmt.Fprintf(os.Stderr, "Unable to execute query %v\n", err) os.Exit(1) } defer rows.Close() fmt.Println("Successfully executed query")Access the rows returned by

.Query(). Create a struct with fields representing the columns that you expect to be returned, then use therows.Next()function to iterate through the rows returned and fillresultswith the array of structs. This uses therows.Scan()function, passing in pointers to the fields that you want to scan for results.This example prints out the results returned from the query, but you might want to use those results for some other purpose. Once you've scanned through all the rows returned you can then use the results array however you like.

//Do something with the results of query // Struct for results type result2 struct { Bucket time.Time Avg float64 } // Print rows returned and fill up results slice for later use var results []result2 for rows.Next() { var r result2 err = rows.Scan(&r.Bucket, &r.Avg) if err != nil { fmt.Fprintf(os.Stderr, "Unable to scan %v\n", err) os.Exit(1) } results = append(results, r) fmt.Printf("Time bucket: %s | Avg: %f\n", &r.Bucket, r.Avg) } // Any errors encountered by rows.Next or rows.Scan are returned here if rows.Err() != nil { fmt.Fprintf(os.Stderr, "rows Error: %v\n", rows.Err()) os.Exit(1) } // use results here…[](#)This example program runs a query, and accesses the results of that query:

package main import ( "context" "fmt" "os" "time" "github.com/jackc/pgx/v5/pgxpool" ) func main() { ctx := context.Background() connStr := "yourConnectionStringHere" dbpool, err := pgxpool.New(ctx, connStr) if err != nil { fmt.Fprintf(os.Stderr, "Unable to connect to database: %v\n", err) os.Exit(1) } defer dbpool.Close() /********************************************/ /* Execute a query */ /********************************************/ // Formulate query in SQL // Note the use of prepared statement placeholders $1 and $2 queryTimebucketFiveMin := ` SELECT time_bucket('5 minutes', time) AS five_min, avg(cpu) FROM sensor_data JOIN sensors ON sensors.id = sensor_data.sensor_id WHERE sensors.location = $1 AND sensors.type = $2 GROUP BY five_min ORDER BY five_min DESC; ` //Execute query on TimescaleDB rows, err := dbpool.Query(ctx, queryTimebucketFiveMin, "ceiling", "a") if err != nil { fmt.Fprintf(os.Stderr, "Unable to execute query %v\n", err) os.Exit(1) } defer rows.Close() fmt.Println("Successfully executed query") //Do something with the results of query // Struct for results type result2 struct { Bucket time.Time Avg float64 } // Print rows returned and fill up results slice for later use var results []result2 for rows.Next() { var r result2 err = rows.Scan(&r.Bucket, &r.Avg) if err != nil { fmt.Fprintf(os.Stderr, "Unable to scan %v\n", err) os.Exit(1) } results = append(results, r) fmt.Printf("Time bucket: %s | Avg: %f\n", &r.Bucket, r.Avg) } // Any errors encountered by rows.Next or rows.Scan are returned here if rows.Err() != nil { fmt.Fprintf(os.Stderr, "rows Error: %v\n", rows.Err()) os.Exit(1) } }

Next steps

Now that you're able to connect, read, and write to a TimescaleDB instance from your Go application, be sure to check out these advanced TimescaleDB tutorials:

- Refer to the pgx documentation for more information about pgx.

- Get up and running with TimescaleDB with the Getting Started tutorial.

- Want fast inserts on CSV data? Check out TimescaleDB parallel copy, a tool for fast inserts, written in Go.

Prerequisites

To follow the steps on this page:

- Create a target Tiger Cloud service with the Real-time analytics capability.

You need your connection details. This procedure also works for self-hosted TimescaleDB.

- Install the Java Development Kit (JDK).

- Install the PostgreSQL JDBC driver.

All code in this quick start is for Java 16 and later. If you are working with older JDK versions, use legacy coding techniques.

Connect to your Tiger Cloud service

In this section, you create a connection to your service using an application in

a single file. You can use any of your favorite build tools, including gradle

or maven.

Create a directory containing a text file called

Main.java, with this content:package com.timescale.java; public class Main { public static void main(String... args) { System.out.println("Hello, World!"); } }From the command line in the current directory, run the application:

java Main.javaIf the command is successful,

Hello, World!line output is printed to your console.Import the PostgreSQL JDBC driver. If you are using a dependency manager, include the PostgreSQL JDBC Driver as a dependency.

Download the JAR artifact of the JDBC Driver and save it with the

Main.javafile.Import the

JDBC Driverinto the Java application and display a list of available drivers for the check:package com.timescale.java; import java.sql.DriverManager; public class Main { public static void main(String... args) { DriverManager.drivers().forEach(System.out::println); } }Run all the examples:

java -cp *.jar Main.java

If the command is successful, a string similar to

org.postgresql.Driver@7f77e91b is printed to your console. This means that you

are ready to connect to TimescaleDB from Java.

Locate your TimescaleDB credentials and use them to compose a connection string for JDBC.

You'll need:

- password

- username

- host URL

- port

- database name

Compose your connection string variable, using this format:

var connUrl = "jdbc:postgresql://<HOSTNAME>:<PORT>/<DATABASE_NAME>?user=<USERNAME>&password=<PASSWORD>";For more information about creating connection strings, see the JDBC documentation.

This method of composing a connection string is for test or development purposes only. For production, use environment variables for sensitive details like your password, hostname, and port number.

package com.timescale.java; import java.sql.DriverManager; import java.sql.SQLException; public class Main { public static void main(String... args) throws SQLException { var connUrl = "jdbc:postgresql://<HOSTNAME>:<PORT>/<DATABASE_NAME>?user=<USERNAME>&password=<PASSWORD>"; var conn = DriverManager.getConnection(connUrl); System.out.println(conn.getClientInfo()); } }Run the code:

java -cp *.jar Main.javaIf the command is successful, a string similar to

{ApplicationName=PostgreSQL JDBC Driver}is printed to your console.

Create a relational table

In this section, you create a table called sensors which holds the ID, type,

and location of your fictional sensors. Additionally, you create a hypertable

called sensor_data which holds the measurements of those sensors. The

measurements contain the time, sensor_id, temperature reading, and CPU

percentage of the sensors.

Compose a string which contains the SQL statement to create a relational table. This example creates a table called

sensors, with columnsid,typeandlocation:CREATE TABLE sensors ( id SERIAL PRIMARY KEY, type TEXT NOT NULL, location TEXT NOT NULL );Create a statement, execute the query you created in the previous step, and check that the table was created successfully:

package com.timescale.java; import java.sql.DriverManager; import java.sql.SQLException; public class Main { public static void main(String... args) throws SQLException { var connUrl = "jdbc:postgresql://<HOSTNAME>:<PORT>/<DATABASE_NAME>?user=<USERNAME>&password=<PASSWORD>"; var conn = DriverManager.getConnection(connUrl); var createSensorTableQuery = """ CREATE TABLE sensors ( id SERIAL PRIMARY KEY, type TEXT NOT NULL, location TEXT NOT NULL ) """; try (var stmt = conn.createStatement()) { stmt.execute(createSensorTableQuery); } var showAllTablesQuery = "SELECT tablename FROM pg_catalog.pg_tables WHERE schemaname = 'public'"; try (var stmt = conn.createStatement(); var rs = stmt.executeQuery(showAllTablesQuery)) { System.out.println("Tables in the current database: "); while (rs.next()) { System.out.println(rs.getString("tablename")); } } } }

Create a hypertable

When you have created the relational table, you can create a hypertable. Creating tables and indexes, altering tables, inserting data, selecting data, and most other tasks are executed on the hypertable.

Create a

CREATE TABLESQL statement for your hypertable. Notice how the hypertable has the compulsory time column:CREATE TABLE sensor_data ( time TIMESTAMPTZ NOT NULL, sensor_id INTEGER REFERENCES sensors (id), value DOUBLE PRECISION );Create a statement, execute the query you created in the previous step:

SELECT create_hypertable('sensor_data', by_range('time'));The

by_rangeandby_hashdimension builder is an addition to TimescaleDB 2.13.Execute the two statements you created, and commit your changes to the database:

package com.timescale.java; import java.sql.Connection; import java.sql.DriverManager; import java.sql.SQLException; import java.util.List; public class Main { public static void main(String... args) { final var connUrl = "jdbc:postgresql://<HOSTNAME>:<PORT>/<DATABASE_NAME>?user=<USERNAME>&password=<PASSWORD>"; try (var conn = DriverManager.getConnection(connUrl)) { createSchema(conn); insertData(conn); } catch (SQLException ex) { System.err.println(ex.getMessage()); } } private static void createSchema(final Connection conn) throws SQLException { try (var stmt = conn.createStatement()) { stmt.execute(""" CREATE TABLE sensors ( id SERIAL PRIMARY KEY, type TEXT NOT NULL, location TEXT NOT NULL ) """); } try (var stmt = conn.createStatement()) { stmt.execute(""" CREATE TABLE sensor_data ( time TIMESTAMPTZ NOT NULL, sensor_id INTEGER REFERENCES sensors (id), value DOUBLE PRECISION ) """); } try (var stmt = conn.createStatement()) { stmt.execute("SELECT create_hypertable('sensor_data', by_range('time'))"); } } }

Insert data

You can insert data into your hypertables in several different ways. In this section, you can insert single rows, or insert by batches of rows.

Open a connection to the database, use prepared statements to formulate the

INSERTSQL statement, then execute the statement:final List<Sensor> sensors = List.of( new Sensor("temperature", "bedroom"), new Sensor("temperature", "living room"), new Sensor("temperature", "outside"), new Sensor("humidity", "kitchen"), new Sensor("humidity", "outside")); for (final var sensor : sensors) { try (var stmt = conn.prepareStatement("INSERT INTO sensors (type, location) VALUES (?, ?)")) { stmt.setString(1, sensor.type()); stmt.setString(2, sensor.location()); stmt.executeUpdate(); } }

If you want to insert a batch of rows by using a batching mechanism. In this

example, you generate some sample time-series data to insert into the

sensor_data hypertable:

Insert batches of rows:

final var sensorDataCount = 100; final var insertBatchSize = 10; try (var stmt = conn.prepareStatement(""" INSERT INTO sensor_data (time, sensor_id, value) VALUES ( generate_series(now() - INTERVAL '24 hours', now(), INTERVAL '5 minutes'), floor(random() * 4 + 1)::INTEGER, random() ) """)) { for (int i = 0; i < sensorDataCount; i++) { stmt.addBatch(); if ((i > 0 && i % insertBatchSize == 0) || i == sensorDataCount - 1) { stmt.executeBatch(); } } }

Execute a query

This section covers how to execute queries against your database.

Execute queries on TimescaleDB

Define the SQL query you'd like to run on the database. This example combines time-series and relational data. It returns the average values for every 15 minute interval for sensors with specific type and location.

SELECT time_bucket('15 minutes', time) AS bucket, avg(value) FROM sensor_data JOIN sensors ON sensors.id = sensor_data.sensor_id WHERE sensors.type = ? AND sensors.location = ? GROUP BY bucket ORDER BY bucket DESC;Execute the query with the prepared statement and read out the result set for all

a-type sensors located on thefloor:try (var stmt = conn.prepareStatement(""" SELECT time_bucket('15 minutes', time) AS bucket, avg(value) FROM sensor_data JOIN sensors ON sensors.id = sensor_data.sensor_id WHERE sensors.type = ? AND sensors.location = ? GROUP BY bucket ORDER BY bucket DESC """)) { stmt.setString(1, "temperature"); stmt.setString(2, "living room"); try (var rs = stmt.executeQuery()) { while (rs.next()) { System.out.printf("%s: %f%n", rs.getTimestamp(1), rs.getDouble(2)); } } }If the command is successful, you'll see output like this:

2021-05-12 23:30:00.0: 0,508649 2021-05-12 23:15:00.0: 0,477852 2021-05-12 23:00:00.0: 0,462298 2021-05-12 22:45:00.0: 0,457006 2021-05-12 22:30:00.0: 0,568744 ...

Next steps

Now that you're able to connect, read, and write to a TimescaleDB instance from your Java application, and generate the scaffolding necessary to build a new application from an existing TimescaleDB instance, be sure to check out these advanced TimescaleDB tutorials:

Complete code samples

This section contains complete code samples.

Complete code sample

package com.timescale.java;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.util.List;

public class Main {

public static void main(String... args) {

final var connUrl = "jdbc:postgresql://<HOSTNAME>:<PORT>/<DATABASE_NAME>?user=<USERNAME>&password=<PASSWORD>";

try (var conn = DriverManager.getConnection(connUrl)) {

createSchema(conn);

insertData(conn);

} catch (SQLException ex) {

System.err.println(ex.getMessage());

}

}

private static void createSchema(final Connection conn) throws SQLException {

try (var stmt = conn.createStatement()) {

stmt.execute("""

CREATE TABLE sensors (

id SERIAL PRIMARY KEY,

type TEXT NOT NULL,

location TEXT NOT NULL

)

""");

}

try (var stmt = conn.createStatement()) {

stmt.execute("""

CREATE TABLE sensor_data (

time TIMESTAMPTZ NOT NULL,

sensor_id INTEGER REFERENCES sensors (id),

value DOUBLE PRECISION

)

""");

}

try (var stmt = conn.createStatement()) {

stmt.execute("SELECT create_hypertable('sensor_data', by_range('time'))");

}

}

private static void insertData(final Connection conn) throws SQLException {

final List<Sensor> sensors = List.of(

new Sensor("temperature", "bedroom"),

new Sensor("temperature", "living room"),

new Sensor("temperature", "outside"),

new Sensor("humidity", "kitchen"),