
Timescaledb - Compression


Inserting or modifying data in the columnstore

URL: llms-txt#inserting-or-modifying-data-in-the-columnstore

Contents:

- Earlier versions of TimescaleDB (before v2.11.0)

In TimescaleDB [v2.11.0][tsdb-release-2-11-0] and later, you can use the UPDATE and DELETE commands to modify existing rows in compressed chunks. This works in a similar way to INSERT operations. To reduce the amount of decompression, TimescaleDB only attempts to decompress data where it is necessary.

However, if there are no qualifiers, or if the qualifiers cannot be used as filters, calls to UPDATE and DELETE may convert large amounts of data to the rowstore and back to the columnstore.

To avoid large-scale conversion, filter on your segmentby and orderby columns. This filters out as much data as possible before any data is modified, and reduces the amount of data conversion.

DML operations on the columnstore also work when the data you insert is subject to unique constraints. Constraints are preserved during the insert operation: TimescaleDB uses a Postgres function that decompresses the relevant data during the insert to check whether the new data violates a unique constraint. This means that any time you insert data into the columnstore, a small amount of data is decompressed to allow a speculative insertion and to block any inserts that could violate constraints.

For TimescaleDB [v2.17.0][tsdb-release-2-17-0] and later, delete performance is improved on compressed hypertables when a large amount of data is affected. When you delete whole segments of data, filter your deletes by the segmentby column(s) instead of issuing separate deletes. This considerably increases performance by skipping the decompression step.

In TimescaleDB [v2.21.0][tsdb-release-2-21-0] and later, DELETE operations on the columnstore are executed at the batch level. This makes deletes that filter on non-segmentby columns more efficient and reduces I/O usage.
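The following Python sketch is a simplified, hypothetical model of why filtering a DELETE on segmentby and orderby columns reduces decompression. It assumes each compressed batch holds the rows for one segmentby value (a made-up `device_id`) plus min/max metadata for an integer time column; `Batch` and `plan_delete` are illustrative names, not TimescaleDB internals.

```python
from dataclasses import dataclass
from math import inf


@dataclass
class Batch:
    device_id: str  # segmentby value shared by every row in the batch
    min_time: int   # orderby metadata: smallest time value in the batch
    max_time: int   # orderby metadata: largest time value in the batch


def plan_delete(batches, device_id=None, time_from=-inf, time_to=inf):
    """Classify batches for a hypothetical
    DELETE ... WHERE device_id = ? AND time BETWEEN time_from AND time_to."""
    plan = {"drop_whole": [], "decompress": [], "skip": []}
    for batch in batches:
        if device_id is not None and batch.device_id != device_id:
            plan["skip"].append(batch)  # segmentby filter rules the batch out
        elif batch.max_time < time_from or batch.min_time > time_to:
            plan["skip"].append(batch)  # time range does not overlap the batch
        elif time_from <= batch.min_time and batch.max_time <= time_to:
            plan["drop_whole"].append(batch)  # every row matches: no decompression
        else:
            plan["decompress"].append(batch)  # partial overlap: check row by row
    return plan


batches = [Batch("A", 0, 99), Batch("A", 100, 199), Batch("B", 0, 99)]
by_segment = plan_delete(batches, device_id="A")
print({k: len(v) for k, v in by_segment.items()})
# {'drop_whole': 2, 'decompress': 0, 'skip': 1}
```

Filtering on `device_id` alone lets every batch be either dropped whole or skipped, while a time range that cuts through batches forces them into the "decompress" group.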

Earlier versions of TimescaleDB (before v2.11.0)

This feature requires Postgres 14 or later

From TimescaleDB v2.3.0, you can insert data into compressed chunks with some limitations. The primary limitation is that you can't insert data with unique constraints. Additionally, newly inserted data needs to be compressed at the same time as the data in the chunk, either by a running recompression policy, or by using recompress_chunk manually on the chunk.

In TimescaleDB v2.2.0 and earlier, you cannot insert data into compressed chunks.

===== PAGE: https://docs.tigerdata.com/use-timescale/jobs/create-and-manage-jobs/ =====

timescaledb_information.jobs

URL: llms-txt#timescaledb_information.jobs

Contents:

- Samples

- Arguments

Shows information about all jobs registered with the automation framework.

Shows a job associated with the refresh policy for continuous aggregates:

Find all jobs related to compression policies (before TimescaleDB v2.20):

Find all jobs related to columnstore policies (TimescaleDB v2.20 and later):

|Name|Type|Description|
|-|-|-|
|job_id|INTEGER|The ID of the background job|
|application_name|TEXT|Name of the policy or job|
|schedule_interval|INTERVAL|The interval at which the job runs. Defaults to 24 hours|
|max_runtime|INTERVAL|The maximum amount of time the job is allowed to run by the background worker scheduler before it is stopped|
|max_retries|INTEGER|The number of times the job is retried if it fails|
|retry_period|INTERVAL|The amount of time the scheduler waits between retries of the job on failure|
|proc_schema|TEXT|Schema name of the function or procedure executed by the job|
|proc_name|TEXT|Name of the function or procedure executed by the job|
|owner|TEXT|Owner of the job|
|scheduled|BOOLEAN|Set to true to run the job automatically|
|fixed_schedule|BOOLEAN|Set to true for jobs executing at fixed times according to a schedule interval and initial start|
|config|JSONB|Configuration passed to the function specified by proc_name at execution time|
|next_start|TIMESTAMP WITH TIME ZONE|Next start time for the job, if it is scheduled to run automatically|
|initial_start|TIMESTAMP WITH TIME ZONE|Time the job is first run and also the time on which execution times are aligned for jobs with fixed schedules|
|hypertable_schema|TEXT|Schema name of the hypertable. Set to NULL for jobs that are not associated with a hypertable|
|hypertable_name|TEXT|Table name of the hypertable. Set to NULL for jobs that are not associated with a hypertable|
|check_schema|TEXT|Schema name of the optional configuration validation function, set when the job is created or updated|
|check_name|TEXT|Name of the optional configuration validation function, set when the job is created or updated|

===== PAGE: https://docs.tigerdata.com/api/informational-views/hypertables/ =====

Examples:

Example 1 (sql):

SELECT * FROM timescaledb_information.jobs;

job_id | 1001
application_name | Refresh Continuous Aggregate Policy [1001]
schedule_interval | 01:00:00
max_runtime | 00:00:00
max_retries | -1
retry_period | 01:00:00
proc_schema | _timescaledb_internal
proc_name | policy_refresh_continuous_aggregate
owner | postgres
scheduled | t
config | {"start_offset": "20 days", "end_offset": "10 days", "mat_hypertable_id": 2}
next_start | 2020-10-02 12:38:07.014042-04
hypertable_schema | _timescaledb_internal
hypertable_name | _materialized_hypertable_2
check_schema | _timescaledb_internal
check_name | policy_refresh_continuous_aggregate_check

Example 2 (sql):

SELECT * FROM timescaledb_information.jobs where application_name like 'Compression%';

-[ RECORD 1 ]-----+--------------------------------------------------
job_id | 1002
application_name | Compression Policy [1002]
schedule_interval | 15 days 12:00:00
max_runtime | 00:00:00
max_retries | -1
retry_period | 01:00:00
proc_schema | _timescaledb_internal
proc_name | policy_compression
owner | postgres
scheduled | t
config | {"hypertable_id": 3, "compress_after": "60 days"}
next_start | 2020-10-18 01:31:40.493764-04
hypertable_schema | public
hypertable_name | conditions
check_schema | _timescaledb_internal
check_name | policy_compression_check

Example 3 (sql):

SELECT * FROM timescaledb_information.jobs where application_name like 'Columnstore%';

-[ RECORD 1 ]-----+--------------------------------------------------
job_id | 1002
application_name | Columnstore Policy [1002]
schedule_interval | 15 days 12:00:00
max_runtime | 00:00:00
max_retries | -1
retry_period | 01:00:00
proc_schema | _timescaledb_internal
proc_name | policy_compression
owner | postgres
scheduled | t
config | {"hypertable_id": 3, "compress_after": "60 days"}
next_start | 2025-10-18 01:31:40.493764-04
hypertable_schema | public
hypertable_name | conditions
check_schema | _timescaledb_internal
check_name | policy_compression_check

Example 4 (sql):

SELECT * FROM timescaledb_information.jobs where application_name like 'User-Define%';

-[ RECORD 1 ]-----+------------------------------
job_id | 1003
application_name | User-Defined Action [1003]
schedule_interval | 01:00:00
max_runtime | 00:00:00
max_retries | -1
retry_period | 00:05:00
proc_schema | public
proc_name | custom_aggregation_func
owner | postgres
scheduled | t
config | {"type": "function"}
next_start | 2020-10-02 14:45:33.339885-04
hypertable_schema |
hypertable_name |
check_schema | NULL
check_name | NULL
-[ RECORD 2 ]-----+------------------------------
job_id | 1004
application_name | User-Defined Action [1004]
schedule_interval | 01:00:00
max_runtime | 00:00:00
max_retries | -1
retry_period | 00:05:00
proc_schema | public
proc_name | custom_retention_func
owner | postgres
scheduled | t
config | {"type": "function"}
next_start | 2020-10-02 14:45:33.353733-04
hypertable_schema |
hypertable_name |
check_schema | NULL
check_name | NULL

Low compression rate

URL: llms-txt#low-compression-rate

Low compression rates are often caused by [high cardinality][cardinality-blog] of the segment key. This means that the column you selected for grouping the rows during compression has too many unique values, so only a few rows can be grouped into each batch. To achieve better compression results, choose a segment key with lower cardinality.
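To make the effect concrete, this Python sketch counts how many batches a set of rows produces for a given segment key. It assumes a maximum of 1,000 rows per compressed batch and uses made-up device IDs; it models only the grouping, not the compression itself.

```python
from collections import Counter
from math import ceil

MAX_BATCH_ROWS = 1000  # assumed upper bound on rows per compressed batch


def batch_stats(segment_values):
    """Return (number of batches, average rows per batch) for a list of
    segmentby values, one entry per row."""
    counts = Counter(segment_values)
    batches = sum(ceil(n / MAX_BATCH_ROWS) for n in counts.values())
    return batches, len(segment_values) / batches


# 100,000 rows spread over 10 devices versus over 50,000 devices
low_cardinality = [f"device-{i % 10}" for i in range(100_000)]
high_cardinality = [f"device-{i % 50_000}" for i in range(100_000)]

print(batch_stats(low_cardinality))   # (100, 1000.0)
print(batch_stats(high_cardinality))  # (50000, 2.0)
```

With the high-cardinality key, each batch holds only two rows, leaving the per-column compression algorithms almost nothing to work with.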

===== PAGE: https://docs.tigerdata.com/_troubleshooting/dropping-chunks-times-out/ =====

Query time-series data tutorial - set up compression

URL: llms-txt#query-time-series-data-tutorial---set-up-compression

Contents:

- Compression setup

- Add a compression policy

- Taking advantage of query speedups

You have now seen how to create a hypertable for your NYC taxi trip data and query it. With a dataset like this, it is seldom necessary to update old data, while the amount of data in the tables keeps growing. Because this older data is mostly immutable, you can compress it to save space and avoid incurring additional cost.

It is possible to use disk-oriented compression, like the support offered by ZFS and Btrfs, but because TimescaleDB is built for handling event-oriented data (such as time-series), it comes with built-in support for compressing data in hypertables.

TimescaleDB compression stores the data in a much more efficient format, allowing compression ratios of up to 20x compared to a normal Postgres table. The actual ratio depends heavily on the data and configuration.

TimescaleDB compression is implemented natively in Postgres and does not require special storage formats. Instead, it relies on features of Postgres to transform the data into a columnar format before compression. A columnar format allows a better compression ratio because similar data is stored adjacently. For more details on the compression format, see the [compression design][compression-design] section.

A beneficial side-effect of compressing data is that certain queries are significantly faster since less data has to be read into memory.

Compression setup

- Connect to the Tiger Cloud service that contains the dataset using, for example, psql.
- Enable compression on the table and pick suitable segment-by and order-by columns using the ALTER TABLE command (see Example 1 below).

  Depending on your choice of segment-by and order-by columns, you can get very different performance and compression ratios. To learn more about how to pick the correct columns, see [here][segment-by-columns].
- Manually compress all the chunks of the hypertable using compress_chunk (see Example 2 below).

  You can also [automate compression][automatic-compression] by adding a [compression policy][add_compression_policy], which is covered below.

Now that you have compressed the table you can compare the size of the dataset before and after compression:

This shows a significant improvement in data usage:

Add a compression policy

To avoid running the compression step each time you have some data to compress, you can set up a compression policy. The compression policy allows you to compress data that is older than a particular age, for example, to compress all chunks that are older than 8 days:

SELECT add_compression_policy('rides', INTERVAL '8 days');

Compression policies run on a regular schedule, by default once every day, which means that you might have up to 9 days of uncompressed data with the setting above.
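The 9-day figure is the compress_after threshold plus the schedule interval. A minimal Python sketch of that arithmetic follows; it deliberately ignores chunk boundaries and job run time (a chunk is only compressed once all of its data is older than the threshold, so the real lag can be somewhat longer). The function name is illustrative.

```python
from datetime import timedelta


def max_uncompressed_age(compress_after, schedule_interval):
    """Rough upper bound on how old data gets before a policy run compresses it.
    Simplification: ignores chunk boundaries and how long the job takes."""
    return compress_after + schedule_interval


print(max_uncompressed_age(timedelta(days=8), timedelta(days=1)))  # 9 days, 0:00:00
```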

You can find more information on compression policies in the [add_compression_policy][add_compression_policy] section.

Taking advantage of query speedups

Earlier in this tutorial, compression was set up to segment by the vendor_id column. This means that fetching data by filtering or grouping on that column is more efficient. Ordering is also set to pickup_datetime descending, so queries that order data the same way should also see performance benefits.

For instance, if you run the query example from the previous section:

You should see a noticeable performance difference between running it on the compressed and on the decompressed dataset. Try it yourself: run the previous query, decompress the dataset, and run the query again while timing the execution. You can enable query timing in psql by running:

\timing

To decompress the whole dataset, run:

SELECT decompress_chunk(c, true) FROM show_chunks('rides') c;

On an example setup, the observed speedup was significant: 700 ms when compressed versus 1.2 seconds when decompressed.

Try it yourself and see what you get!

===== PAGE: https://docs.tigerdata.com/tutorials/blockchain-query/blockchain-compress/ =====

Examples:

Example 1 (sql):

ALTER TABLE rides
SET (
    timescaledb.compress,
    timescaledb.compress_segmentby='vendor_id',
    timescaledb.compress_orderby='pickup_datetime DESC'
);

Example 2 (sql):

SELECT compress_chunk(c) from show_chunks('rides') c;

Example 3 (sql):

SELECT
    pg_size_pretty(before_compression_total_bytes) as before,
    pg_size_pretty(after_compression_total_bytes) as after
FROM hypertable_compression_stats('rides');

Example 4 (sql):

 before  | after
---------+--------
 1741 MB | 603 MB
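To turn the sizes in Example 4 into a compression ratio and a percentage of space saved, a short Python helper is enough (`compression_summary` is a hypothetical name):

```python
def compression_summary(before_mb, after_mb):
    """Return (compression ratio, percent of space saved), rounded for display."""
    ratio = before_mb / after_mb
    saved_percent = (1 - after_mb / before_mb) * 100
    return round(ratio, 2), round(saved_percent, 1)


print(compression_summary(1741, 603))  # (2.89, 65.4)
```

For this dataset that is roughly a 2.9x reduction, well below the up-to-20x figure mentioned earlier, which illustrates how strongly the ratio depends on the data and configuration.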

add_policies()

URL: llms-txt#add_policies()

Contents:

- Samples

- Required arguments

- Optional arguments

- Returns

Add refresh, compression, and data retention policies to a continuous aggregate in one step. The added compression and retention policies apply to the continuous aggregate, not to the original hypertable.

Experimental features could have bugs. They might not be backwards compatible, and could be removed in future releases. Use these features at your own risk, and do not use any experimental features in production.

add_policies() does not allow the schedule_interval for the continuous aggregate to be set; it uses a default value of 1 hour instead.

If you want to set it, add your policies manually (see [add_continuous_aggregate_policy][add_continuous_aggregate_policy]).

Given a continuous aggregate named example_continuous_aggregate, add three policies to it:

- Regularly refresh the continuous aggregate to materialize data between 1 day and 2 days old.

- Compress data in the continuous aggregate after 20 days.

- Drop data in the continuous aggregate after 1 year.

Required arguments

|Name|Type|Description|
|-|-|-|
|relation|REGCLASS|The continuous aggregate that the policies should be applied to|

Optional arguments

|Name|Type|Description|
|-|-|-|
|if_not_exists|BOOL|When true, prints a warning instead of erroring if the continuous aggregate doesn't exist. Defaults to false.|
|refresh_start_offset|INTERVAL or INTEGER|The start of the continuous aggregate refresh window, expressed as an offset from the policy run time.|
|refresh_end_offset|INTERVAL or INTEGER|The end of the continuous aggregate refresh window, expressed as an offset from the policy run time. Must be greater than refresh_start_offset.|
|compress_after|INTERVAL or INTEGER|Continuous aggregate chunks are compressed if they exclusively contain data older than this interval.|
|drop_after|INTERVAL or INTEGER|Continuous aggregate chunks are dropped if they exclusively contain data older than this interval.|

For arguments that could be either an INTERVAL or an INTEGER, use an INTERVAL if your time bucket is based on timestamps. Use an INTEGER if your time bucket is based on integers.

Returns true if successful.

===== PAGE: https://docs.tigerdata.com/api/continuous-aggregates/create_materialized_view/ =====

Examples:

Example 1 (sql):

timescaledb_experimental.add_policies(
    relation REGCLASS,
    if_not_exists BOOL = false,
    refresh_start_offset "any" = NULL,
    refresh_end_offset "any" = NULL,
    compress_after "any" = NULL,
    drop_after "any" = NULL
) RETURNS BOOL

Example 2 (sql):

SELECT timescaledb_experimental.add_policies(
    'example_continuous_aggregate',
    refresh_start_offset => '1 day'::interval,
    refresh_end_offset => '2 day'::interval,
    compress_after => '20 days'::interval,
    drop_after => '1 year'::interval
);

About writing data

URL: llms-txt#about-writing-data

TimescaleDB supports writing data in the same way as Postgres, using INSERT, UPDATE, INSERT ... ON CONFLICT, and DELETE.

TimescaleDB is optimized for running real-time analytics workloads on time-series data. For this reason, hypertables are optimized for inserts to the most recent time intervals. Inserting data with recent time values gives excellent performance. However, if you need to make frequent updates to older time intervals, you might see lower write throughput.

===== PAGE: https://docs.tigerdata.com/use-timescale/write-data/upsert/ =====

Decompression

URL: llms-txt#decompression

Contents:

- Decompress chunks manually

- Decompress individual chunks

- Decompress chunks by time

- Decompress chunks on more precise constraints

Old API since TimescaleDB v2.18.0. Replaced by convert_to_rowstore().

Compressing your data reduces the amount of storage space used, but you should always leave some additional storage capacity. This gives you the flexibility to decompress chunks when necessary, for actions such as bulk inserts.

This section describes commands to use for decompressing chunks. You can filter by time to select the chunks you want to decompress.

Decompress chunks manually

Before decompressing chunks, stop any compression policy on the hypertable you are decompressing, for example by pausing the policy's job with alter_job(<job_id>, scheduled => false). When you have finished, re-enable the policy with scheduled => true; the database then automatically recompresses your chunks in the next scheduled job.

If you accumulate a large number of chunks that need to be compressed, the [troubleshooting guide][troubleshooting-oom-chunks] shows how to compress a backlog of chunks.

For more information on how to stop and run compression policies using alter_job(), see the [API reference][api-reference-alter-job].

There are several methods for selecting chunks and decompressing them.

Decompress individual chunks

To decompress a single chunk by name, run this command:

where <chunk_name> is the name of the chunk you want to decompress.

Decompress chunks by time

To decompress a set of chunks based on a time range, you can use the output of show_chunks to decompress each one:

For more information about the decompress_chunk function, see the decompress_chunk [API reference][api-reference-decompress].

Decompress chunks on more precise constraints

If you want to use more precise matching constraints, for example space partitioning, you can construct a command like this:

===== PAGE: https://docs.tigerdata.com/use-timescale/compression/compression-on-continuous-aggregates/ =====

Examples:

Example 1 (sql):

SELECT decompress_chunk('_timescaledb_internal.<chunk_name>');

Example 2 (sql):

SELECT decompress_chunk(c, true)
FROM show_chunks('table_name', older_than, newer_than) c;

Example 3 (sql):

SELECT tableoid::regclass FROM metrics
WHERE time = '2000-01-01' AND device_id = 1
GROUP BY tableoid;

                 tableoid
------------------------------------------
 _timescaledb_internal._hyper_72_37_chunk

Designing your database for compression

URL: llms-txt#designing-your-database-for-compression

Contents:

- Compressing data

- Querying compressed data

Old API since TimescaleDB v2.18.0. Replaced by hypercore.

Time-series workloads can be unique in that they need to handle both shallow and wide queries, such as "What's happened across the deployment in the last 10 minutes," and deep and narrow queries, such as "What is the average CPU usage for this server over the last 24 hours." Time-series data usually has a very high rate of inserts as well; hundreds of thousands of writes per second are not unusual for a time-series dataset. Additionally, time-series data is often very granular, and data is collected at a higher resolution than many other datasets. This can result in terabytes of data being collected over time.

All this means that if you need great compression rates, you probably need to consider the design of your database, before you start ingesting data. This section covers some of the things you need to take into consideration when designing your database for maximum compression effectiveness.

TimescaleDB is built on Postgres, which is by nature a row-based database. Because time-series data is accessed in order of time, when you enable compression, TimescaleDB converts many rows of data into a single wide row, in what is called array form. Each field of that new, wide row stores an ordered array of that column's values for all of the converted rows.

For example, if you had a table with data that looked a bit like this:

|Timestamp|Device ID|Status Code|Temperature|
|-|-|-|-|
|12:00:01|A|0|70.11|
|12:00:01|B|0|69.70|
|12:00:02|A|0|70.12|
|12:00:02|B|0|69.69|
|12:00:03|A|0|70.14|
|12:00:03|B|4|69.70|

You can convert this to a single row in array form, like this:

|Timestamp|Device ID|Status Code|Temperature|
|-|-|-|-|
|[12:00:01, 12:00:01, 12:00:02, 12:00:02, 12:00:03, 12:00:03]|[A, B, A, B, A, B]|[0, 0, 0, 0, 0, 4]|[70.11, 69.70, 70.12, 69.69, 70.14, 69.70]|
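The row-to-array conversion shown in the tables above amounts to a transpose. This Python sketch (the function names are illustrative) pivots the six example rows into one list per column and back; it shows only the layout change, not how TimescaleDB stores or compresses the arrays.

```python
# (timestamp, device_id, status_code, temperature), as in the row-form table
rows = [
    ("12:00:01", "A", 0, 70.11), ("12:00:01", "B", 0, 69.70),
    ("12:00:02", "A", 0, 70.12), ("12:00:02", "B", 0, 69.69),
    ("12:00:03", "A", 0, 70.14), ("12:00:03", "B", 4, 69.70),
]
columns = ("timestamp", "device_id", "status_code", "temperature")


def to_array_form(rows, columns):
    """Transpose row tuples into one ordered list per column."""
    return {name: [row[i] for row in rows] for i, name in enumerate(columns)}


def to_row_form(arrays):
    """Reverse the transpose: zip the column lists back into row tuples."""
    return list(zip(*arrays.values()))


arrays = to_array_form(rows, columns)
print(arrays["status_code"])  # [0, 0, 0, 0, 0, 4]
```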

Even before you compress any data, this format immediately saves storage by reducing the per-row overhead. Postgres stores a fixed overhead with every row (a 23-byte tuple header, padded for alignment, plus a 4-byte line pointer). So even without any compression, the data in this example takes less space on disk than in the previous format.

This format arranges the data so that similar data, such as timestamps, device IDs, or temperature readings, is stored contiguously. This means that you can then use type-specific compression algorithms to compress the data further, and each array is separately compressed. For more information about the compression methods used, see the [compression methods section][compression-methods].

When the data is in array format, you can perform queries that require a subset of the columns very quickly. For example, consider a query like this one, which asks for the average temperature per minute over the past day:

SELECT time_bucket('1 minute', timestamp) AS minute, AVG(temperature)
FROM <table_name>
WHERE timestamp > now() - interval '1 day'
GROUP BY minute
ORDER BY minute DESC;

The query engine can fetch and decompress only the timestamp and temperature columns to efficiently compute and return these results.

Finally, TimescaleDB uses non-inline disk pages to store the compressed arrays. This means that the in-row data points to a secondary disk page that stores the compressed array, and the actual row in the main table becomes very small, because it is now just pointers to the data. When data stored like this is queried, only the compressed arrays for the required columns are read from disk, further improving performance by reducing disk reads and writes.

Querying compressed data

In the previous example, the database has no way of knowing which rows need to be fetched and decompressed to resolve a query. For example, the database can't easily determine which rows contain data from the past day, as the timestamp itself is in a compressed column. You don't want to have to decompress all the data in a chunk, or even an entire hypertable, to determine which rows are required.

TimescaleDB automatically includes more information in the row and includes additional groupings to improve query performance. When you compress a hypertable, either manually or through a compression policy, it can help to specify an ORDER BY column.

ORDER BY columns specify how the rows that are part of a compressed batch are ordered. For most time-series workloads, this is by timestamp, so if you don't specify an ORDER BY column, TimescaleDB defaults to using the time column. You can also specify additional dimensions, such as location.

For each ORDER BY column, TimescaleDB automatically creates additional columns that store the minimum and maximum value of that column. This way, the query planner can look at the range of timestamps in the compressed column, without having to do any decompression, and determine whether the row could possibly match the query.

When you compress your hypertable, you can also choose to specify a SEGMENT BY column. This allows you to segment compressed rows by a specific column, so that each compressed row corresponds to data about a single item, for example, a specific device ID. This further allows the query planner to determine if the row could possibly match the query without having to decompress the column first. For example:

|Device ID|Timestamp|Status Code|Temperature|Min Timestamp|Max Timestamp|
|-|-|-|-|-|-|
|A|[12:00:01, 12:00:02, 12:00:03]|[0, 0, 0]|[70.11, 70.12, 70.14]|12:00:01|12:00:03|
|B|[12:00:01, 12:00:02, 12:00:03]|[0, 0, 4]|[69.70, 69.69, 69.70]|12:00:01|12:00:03|

With the data segmented in this way, a query for device A between a time interval becomes quite fast. The query planner can use an index to find those rows for device A that contain at least some timestamps corresponding to the specified interval, and even a sequential scan is quite fast since evaluating device IDs or timestamps does not require decompression. This means the query executor only decompresses the timestamp and temperature columns corresponding to those selected rows.
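The segmented layout and its min/max metadata can be sketched in Python as follows. `build_batches` groups the example rows by `device_id`, orders each group by timestamp, and records the min/max timestamps; `candidate_batches` then picks the batches that could match a query using only that uncompressed metadata. Both names are hypothetical, and the timestamps are zero-padded `HH:MM:SS` strings so that plain string comparison orders them correctly.

```python
from collections import defaultdict

# (timestamp, device_id, status_code, temperature), as in the row-form table
rows = [
    ("12:00:01", "A", 0, 70.11), ("12:00:01", "B", 0, 69.70),
    ("12:00:02", "A", 0, 70.12), ("12:00:02", "B", 0, 69.69),
    ("12:00:03", "A", 0, 70.14), ("12:00:03", "B", 4, 69.70),
]


def build_batches(rows):
    """Group rows by device_id (segmentby), order by timestamp (orderby),
    and keep min/max timestamps as uncompressed metadata."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[1]].append(row)
    batches = []
    for device, group in groups.items():
        group.sort(key=lambda r: r[0])
        batches.append({
            "device_id": device,
            "timestamp": [r[0] for r in group],
            "status_code": [r[2] for r in group],
            "temperature": [r[3] for r in group],
            "min_ts": group[0][0],
            "max_ts": group[-1][0],
        })
    return batches


def candidate_batches(batches, device, start, end):
    """Return batches that may hold rows for `device` in [start, end],
    judged from device_id and min/max metadata only."""
    return [b for b in batches
            if b["device_id"] == device
            and b["max_ts"] >= start and b["min_ts"] <= end]


batches = build_batches(rows)
hits = candidate_batches(batches, "A", "12:00:02", "12:00:03")
for b in hits:
    # Only now would the timestamp and temperature arrays be decompressed.
    matching = [temp for ts, temp in zip(b["timestamp"], b["temperature"])
                if "12:00:02" <= ts <= "12:00:03"]
    print(matching)  # [70.12, 70.14]
```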

===== PAGE: https://docs.tigerdata.com/use-timescale/compression/compression-policy/ =====

remove_compression_policy()

URL: llms-txt#remove_compression_policy()

Contents:

- Samples

- Required arguments

- Optional arguments

Old API since TimescaleDB v2.18.0. Replaced by remove_columnstore_policy().

Remove a compression policy from a hypertable or continuous aggregate. To restart policy-based compression, you need to add the policy again. To view the policies that already exist, see [informational views][informational-views].

Remove the compression policy from the 'cpu' table:

Remove the compression policy from the 'cpu_weekly' continuous aggregate:

Required arguments

|Name|Type|Description|
|-|-|-|
|hypertable|REGCLASS|Name of the hypertable or continuous aggregate the policy should be removed from|

Optional arguments

|Name|Type|Description|
|-|-|-|
|if_exists|BOOLEAN|When true, the command issues a notice instead of an error if no compression policy exists on the hypertable. Defaults to false.|

===== PAGE: https://docs.tigerdata.com/api/compression/alter_table_compression/ =====

Examples:

Example 1 (sql):

SELECT remove_compression_policy('cpu');

Example 2 (sql):

SELECT remove_compression_policy('cpu_weekly');

About compression methods

URL: llms-txt#about-compression-methods

Contents:

- Integer compression

- Delta encoding

- Delta-of-delta encoding

- Simple-8b

- Run-length encoding

- Floating point compression

- XOR-based compression

- Data-agnostic compression

- Dictionary compression

Depending on the data type that is compressed when your data is converted from the rowstore to the columnstore, TimescaleDB uses the following compression algorithms:

- Integers, timestamps, boolean and other integer-like types: a combination of the following compression methods is used: [delta encoding][delta], [delta-of-delta][delta-delta], [simple-8b][simple-8b], and [run-length encoding][run-length].

- Columns that do not have a high amount of repeated values: [XOR-based][xor] compression with some [dictionary compression][dictionary].

- All other types: [dictionary compression][dictionary].

This page gives an in-depth explanation of the compression methods used in hypercore.

Integer compression

For integers, timestamps, and other integer-like types, TimescaleDB uses a combination of delta encoding, delta-of-delta, Simple-8b, and run-length encoding.

The Simple-8b compression method has been extended so that data can be decompressed in reverse order. Because backward-scanning queries are common in time-series workloads, this makes these queries run much faster.

Delta encoding

Delta encoding reduces the amount of information required to represent a data object by only storing the difference, sometimes referred to as the delta, between that object and one or more reference objects. These algorithms work best where there is a lot of redundant information, and they are often used in workloads like versioned file systems. For example, this is how Dropbox keeps your files synchronized. Applying delta encoding to time-series data means that you can use fewer bytes to represent a data point, because you only need to store the delta from the previous data point.

For example, imagine you had a dataset that collected CPU, free memory, temperature, and humidity over time. If your time column was stored as an integer value, like seconds since the UNIX epoch, your raw data would look a little like this:

|time|cpu|mem_free_bytes|temperature|humidity|
|-|-|-|-|-|
|2023-04-01 10:00:00|82|1,073,741,824|80|25|
|2023-04-01 10:00:05|98|858,993,459|81|25|
|2023-04-01 10:00:10|98|858,904,583|81|25|

With delta encoding, you only need to store how much each value changed from the previous data point, resulting in smaller values to store. So after the first row, you can represent subsequent rows with less information, like this:

|time|cpu|mem_free_bytes|temperature|humidity|
|-|-|-|-|-|
|2023-04-01 10:00:00|82|1,073,741,824|80|25|
|5 seconds|16|-214,748,365|1|0|
|5 seconds|0|-88,876|0|0|

Applying delta encoding to time-series data takes advantage of the fact that most time-series datasets are not random, but instead represent something that is slowly changing over time. The storage savings over millions of rows can be substantial, especially if the value changes very little, or doesn't change at all.
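A minimal Python sketch of delta encoding and decoding, applied to the integer columns of the example above (with the timestamps converted to seconds since the UNIX epoch). It illustrates the idea only and is not TimescaleDB's implementation.

```python
from datetime import datetime, timezone
from itertools import accumulate


def delta_encode(values):
    """Keep the first value, then each value's difference from its predecessor."""
    if not values:
        return []
    return [values[0]] + [cur - prev for prev, cur in zip(values, values[1:])]


def delta_decode(encoded):
    """A running sum restores the original values."""
    return list(accumulate(encoded))


t0 = int(datetime(2023, 4, 1, 10, 0, 0, tzinfo=timezone.utc).timestamp())
times = [t0, t0 + 5, t0 + 10]
cpu = [82, 98, 98]
mem_free_bytes = [1_073_741_824, 858_993_459, 858_904_583]

print(delta_encode(times)[1:])           # [5, 5]
print(delta_encode(cpu))                 # [82, 16, 0]
print(delta_encode(mem_free_bytes)[1:])  # [-214748365, -88876]
```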

Delta-of-delta encoding

Delta-of-delta encoding takes delta encoding one step further and applies delta-encoding over data that has previously been delta-encoded. With time-series datasets where data collection happens at regular intervals, you can apply delta-of-delta encoding to the time column, which results in only needing to store a series of zeroes.

In other words, delta encoding stores the first derivative of the dataset, while delta-of-delta encoding stores the second derivative of the dataset.

Applied to the time column of the example dataset from earlier (the other columns keep their plain delta encoding), delta-of-delta encoding results in this:

|time|cpu|mem_free_bytes|temperature|humidity|
|-|-|-|-|-|
|2023-04-01 10:00:00|82|1,073,741,824|80|25|
|5 seconds|16|-214,748,365|1|0|
|0 seconds|0|-88,876|0|0|

In this example, delta-of-delta further compresses 5 seconds in the time column down to 0 for every entry in the time column after the second row, because the five second gap remains constant for each entry. Note that you see two entries in the table before the delta-delta 0 values, because you need two deltas to compare.

Combined with a compact integer encoding such as Simple-8b, described next, this can shrink a full 8-byte (64-bit) timestamp down to roughly a single bit, about 64x compression.
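Extending the same idea, delta-of-delta encoding keeps the first value and the first delta, then stores how much each delta differs from the previous one. Again, this is an illustrative Python sketch, not TimescaleDB's code; the sample timestamps are made-up second offsets.

```python
from itertools import accumulate


def delta_of_delta_encode(values):
    """Store the first value, the first delta, then differences between deltas."""
    if len(values) < 2:
        return list(values)
    deltas = [cur - prev for prev, cur in zip(values, values[1:])]
    return [values[0], deltas[0]] + [cur - prev for prev, cur in zip(deltas, deltas[1:])]


def delta_of_delta_decode(encoded):
    """Rebuild the deltas with a running sum, then the values from the deltas."""
    if len(encoded) < 2:
        return list(encoded)
    deltas = list(accumulate(encoded[1:]))
    return list(accumulate([encoded[0]] + deltas))


regular = [0, 5, 10, 15, 20]    # seconds since the first reading, every 5 s
irregular = [0, 5, 10, 16, 21]  # one reading arrives a second late

print(delta_of_delta_encode(regular))    # [0, 5, 0, 0, 0]
print(delta_of_delta_encode(irregular))  # [0, 5, 0, 1, -1]
```

A perfectly regular series collapses to a run of zeros, which is exactly the kind of input the Simple-8b and run-length techniques described below store very cheaply.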

Simple-8b

With delta and delta-of-delta encoding, you can significantly reduce the number of digits you need to store. But you still need an efficient way to store the smaller integers. The previous examples used a standard integer datatype for the time column, which needs 64 bits to represent the value of 0 when delta-delta encoded. This means that even though you are only storing the integer 0, you are still consuming 64 bits to store it, so you haven't actually saved anything.

Simple-8b is one of the simplest and smallest methods of storing variable-length integers. In this method, integers are stored as a series of fixed-size blocks. For each block, every integer within the block is represented by the minimal bit-length needed to represent the largest integer in that block. The first bits of each block denote the bit-length used for the block.

This technique has the advantage of only needing to store the length once for a given block, instead of once for each integer. Because the blocks are of a fixed size, you can infer the number of integers in each block from the size of the integers being stored.

For example, if you wanted to store a temperature that changed over time, and you applied delta encoding, you might end up needing to store this set of integers:

|temperature (deltas)|
|-|
|1|
|10|
|11|
|13|
|9|
|100|
|22|
|11|

With a block size of 10 digits, you could store this set of integers as two blocks: one block storing five 2-digit numbers, and a second block storing three 3-digit numbers, like this:

{01, 10, 11, 13, 09} {100, 022, 011}

In this example, both blocks store about 10 digits worth of data, even though some of the numbers have to be padded with a leading 0. You might also notice that the second block only stores 9 digits, because 10 is not evenly divisible by 3.

Simple-8b works in this way, except that it uses binary numbers instead of decimal, and it usually uses 64-bit blocks. In general, the longer the integer, the fewer integers can be stored in each block.
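A simplified Python sketch of Simple-8b packing. It assumes the classic layout of a 4-bit selector plus a 60-bit payload per 64-bit word, and the selector table of slot widths is an illustrative assumption. This is not TimescaleDB's on-disk format, since TimescaleDB uses its own variant. The sketch also assumes non-negative inputs; signed deltas would first need a mapping such as zigzag encoding.

```python
# Simplified Simple-8b: each 64-bit word = 4-bit selector (low bits) + 60-bit payload.
# Assumed selector table: slot width in bits; a word holds 60 // width slots.
SELECTOR_WIDTHS = (1, 2, 3, 4, 5, 6, 7, 8, 10, 12, 15, 20, 30, 60)
PAYLOAD_BITS = 60


def pack(values: list[int]) -> tuple[list[int], int]:
    """Greedily pack non-negative ints into 64-bit words; returns (words, count).

    Assumes non-negative inputs; signed deltas would need e.g. zigzag encoding first.
    """
    words: list[int] = []
    i = 0
    while i < len(values):
        # Try the narrowest width first, so each word holds as many values as possible.
        for selector, width in enumerate(SELECTOR_WIDTHS):
            count = min(PAYLOAD_BITS // width, len(values) - i)
            chunk = values[i:i + count]
            if all(0 <= v < (1 << width) for v in chunk):
                break
        else:
            raise ValueError(f"value at index {i} is negative or needs more than 60 bits")
        word = selector  # the width is stored once per word, not once per value
        for slot, v in enumerate(chunk):
            word |= v << (4 + slot * width)
        words.append(word)
        i += count
    return words, len(values)


def unpack(words: list[int], count: int) -> list[int]:
    """Read each word's selector, then slice out its fixed-width slots."""
    values: list[int] = []
    for word in words:
        width = SELECTOR_WIDTHS[word & 0xF]
        mask = (1 << width) - 1
        for slot in range(PAYLOAD_BITS // width):
            if len(values) == count:  # only the last word can be partially filled
                break
            values.append((word >> (4 + slot * width)) & mask)
    return values


deltas = [1, 10, 11, 13, 9, 100, 22, 11]
words, n = pack(deltas)
print(len(words), SELECTOR_WIDTHS[words[0] & 0xF])  # 1 7
assert unpack(words, n) == deltas
```

Because the largest delta in the table above, 100, needs 7 bits, all eight deltas fit in a single 64-bit word of eight 7-bit slots, instead of eight separate 64-bit integers.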

Run-length encoding

Simple-8b compresses integers very well. However, if you have a large number of repeats of the same value, you can get even better compression with run-length encoding. This method works well for values that don't change very often, or if an earlier transformation removes the changes.

Run-length encoding is one of the classic compression algorithms. For time-series data with billions of contiguous zeroes, or even a document with a million identically repeated strings, run-length encoding works incredibly well.

For example, if you wanted to store a temperature that changed minimally over time, and you applied delta encoding, you might end up needing to store this set of integers:

|temperature (deltas)|
|-|
|11|
|12|
|12|
|12|
|12|
|12|
|12|
|1|
|12|
|12|
|12|
|12|

For values like these, you do not need to store each instance of the value, but rather how long the run, or number of repeats, is. You can store this set of numbers as {run; value} pairs like this:

{1; 11}, {6; 12}, {1; 1}, {4; 12}

This technique uses 11 digits of storage (1, 1, 1, 6, 1, 2, 1, 1, 4, 1, 2), rather than the 23 digits that an optimal series of variable-length integers requires (11, 12, 12, 12, 12, 12, 12, 1, 12, 12, 12, 12).
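A minimal Python sketch of run-length encoding applied to the deltas from the table above. It produces the same {run; value} pairs and digit counts described in the text. The decimal digit counting mirrors the example and is not how the values are actually stored on disk.

```python
def rle_encode(values: list[int]) -> list[tuple[int, int]]:
    """Collapse consecutive repeats into (run length, value) pairs."""
    runs: list[tuple[int, int]] = []
    for v in values:
        if runs and runs[-1][1] == v:
            runs[-1] = (runs[-1][0] + 1, v)  # extend the current run
        else:
            runs.append((1, v))  # start a new run
    return runs


def rle_decode(runs: list[tuple[int, int]]) -> list[int]:
    """Expand each (run length, value) pair back into repeated values."""
    return [v for length, v in runs for _ in range(length)]


def digit_count(numbers) -> int:
    """Count decimal digits, as in the example above."""
    return sum(len(str(n)) for n in numbers)


deltas = [11, 12, 12, 12, 12, 12, 12, 1, 12, 12, 12, 12]
runs = rle_encode(deltas)
print(runs)  # [(1, 11), (6, 12), (1, 1), (4, 12)]
print(digit_count(deltas), digit_count(x for run in runs for x in run))  # 23 11
assert rle_decode(runs) == deltas
```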

Run-length encoding is also used as a building block for many more advanced algorithms, such as Simple-8b RLE, which combines run-length and Simple-8b techniques. TimescaleDB implements a variant of Simple-8b RLE that uses different sizes from standard Simple-8b in order to handle 64-bit values and RLE.

Floating point compression

For columns that do not have a high amount of repeated values, TimescaleDB uses XOR-based compression.

The standard XOR-based compression method has been extended so that data can be decompressed in reverse order. Because backward-scanning queries are common in time-series workloads, this makes those queries run much faster.

XOR-based compression

Floating point numbers are usually more difficult to compress than integers. Fixed-length integers often have leading zeroes, but floating point numbers usually use all of their available bits, especially if they are converted from decimal numbers, which can't be represented precisely in binary.

Techniques like delta-encoding don't work well for floats, because they do not reduce the number of bits sufficiently. This means that most floating-point compression algorithms tend to be either complex and slow, or truncate significant digits. One of the few simple and fast lossless floating-point compression algorithms is XOR-based compression, built on top of Facebook's Gorilla compression.

XOR is the binary function exclusive or. In this algorithm, successive floating point numbers are compared with XOR, and a difference results in a bit being stored. The first data point is stored without compression, and subsequent data points are represented using their XOR'd values.
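A minimal Python sketch of the XOR step, using the standard `struct` module to view each double as its 64-bit IEEE 754 pattern. The sample readings are hypothetical. It stores the first value's raw bits and then each value XOR'd with its predecessor. It also prints how many leading and trailing zeros each XOR has, which is what makes the result compressible. Gorilla-style encoders then store only the meaningful bits between those zeros. That bit-level packing step is omitted here.

```python
import struct


def float_to_bits(x: float) -> int:
    """Reinterpret an IEEE 754 double as a 64-bit unsigned integer."""
    return struct.unpack(">Q", struct.pack(">d", x))[0]


def bits_to_float(bits: int) -> float:
    """Reinterpret a 64-bit unsigned integer as an IEEE 754 double."""
    return struct.unpack(">d", struct.pack(">Q", bits))[0]


def xor_encode(values: list[float]) -> list[int]:
    """Store the first value's raw bits, then each value XOR'd with its predecessor."""
    bits = [float_to_bits(v) for v in values]
    return bits[:1] + [prev ^ cur for prev, cur in zip(bits, bits[1:])]


def xor_decode(encoded: list[int]) -> list[float]:
    """Undo the XOR chain to recover the original doubles exactly."""
    values, prev = [], 0
    for word in encoded:
        prev ^= word  # the first word is XOR'd with 0, so it passes through unchanged
        values.append(bits_to_float(prev))
    return values


readings = [21.5, 21.5, 21.75, 22.0]
for x in xor_encode(readings)[1:]:
    if x == 0:
        print("unchanged")  # identical values XOR to 0
        continue
    leading = 64 - x.bit_length()
    trailing = (x & -x).bit_length() - 1
    print(leading, "leading zeros,", 64 - leading - trailing, "meaningful bits")
assert xor_decode(xor_encode(readings)) == readings
```

For these readings, the repeated 21.5 XORs to 0. The other two XORs leave only 1 and 4 meaningful bits out of 64, because neighboring values share their sign, exponent, and most of their mantissa.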

Data-agnostic compression

For values that are not integers or floating point, TimescaleDB uses dictionary compression.

Dictionary compression

One of the earliest lossless compression algorithms, dictionary compression is the basis of many popular compression methods. Dictionary compression can also be found in areas outside of computer science, such as medical coding.

Instead of storing values directly, dictionary compression works by making a list of the possible values that can appear, and then storing an index into a dictionary containing the unique values. This technique is quite versatile, can be used regardless of data type, and works especially well when you have a limited set of values that repeat frequently.

For example, if you had the list of temperatures shown earlier, but you wanted an additional column storing a city location for each measurement, you might have a set of values like this:

|City|
|-|
|New York|
|San Francisco|
|San Francisco|
|Los Angeles|

Instead of storing all the city names directly, you can store a dictionary, like this:

|Index|City|
|-|-|
|0|New York|
|1|San Francisco|
|2|Los Angeles|

You can then store just the indices in your column, like this:

|City|
|-|
|0|
|1|
|1|
|2|

For a dataset with a lot of repetition, this can offer significant compression. In the example, each city name is on average 11 bytes in length, while the indices are never going to be more than 4 bytes long, reducing space usage nearly 3 times. In TimescaleDB, the list of indices is compressed even further with the Simple-8b+RLE method, making the storage cost even smaller.

Dictionary compression doesn't always result in savings. If your dataset doesn't have a lot of repeated values, then the dictionary is the same size as the original data. TimescaleDB automatically detects this case, and falls back to not using a dictionary in that scenario.
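A minimal Python sketch of dictionary encoding for the city column above, plus a fallback check. The check, `should_use_dictionary`, is a hypothetical size heuristic that assumes 4-byte indices and UTF-8 string lengths. It is not TimescaleDB's actual detection logic, and it also skips the further Simple-8b+RLE compression of the indices.

```python
def dictionary_encode(values: list[str]) -> tuple[list[str], list[int]]:
    """Store each distinct value once; represent every row as an index into that list."""
    dictionary: list[str] = []
    index_of: dict[str, int] = {}
    indices: list[int] = []
    for v in values:
        if v not in index_of:
            index_of[v] = len(dictionary)
            dictionary.append(v)
        indices.append(index_of[v])
    return dictionary, indices


def dictionary_decode(dictionary: list[str], indices: list[int]) -> list[str]:
    """Look each index back up in the dictionary."""
    return [dictionary[i] for i in indices]


def should_use_dictionary(values: list[str], index_bytes: int = 4) -> bool:
    """Hypothetical heuristic: use a dictionary only if it is smaller than the raw data."""
    dictionary, indices = dictionary_encode(values)
    raw_size = sum(len(v.encode()) for v in values)
    encoded_size = sum(len(v.encode()) for v in dictionary) + index_bytes * len(indices)
    return encoded_size < raw_size


cities = ["New York", "San Francisco", "San Francisco", "Los Angeles"]
dictionary, indices = dictionary_encode(cities)
print(dictionary, indices)  # ['New York', 'San Francisco', 'Los Angeles'] [0, 1, 1, 2]
assert dictionary_decode(dictionary, indices) == cities
print(should_use_dictionary(cities * 100))  # True: many repeats
print(should_use_dictionary([f"sensor-{i}" for i in range(100)]))  # False: all distinct
```

With only four rows, the dictionary plus indices (48 bytes) is slightly larger than the raw names (45 bytes), so the savings described above only appear once values repeat often.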

===== PAGE: https://docs.tigerdata.com/use-timescale/compression/modify-a-schema/ =====

Changelog

URL: llms-txt#changelog

Contents:

- TimescaleDB 2.22.1 – configurable indexing, enhanced partitioning, and faster queries

- Highlighted features

- Deprecations

- Kafka Source Connector (beta)

- Phased update rollouts, pg_cron, larger compute options, and backup reports

- 🛡️ Phased rollouts for TimescaleDB minor releases

- ⏰ pg_cron extension

- ⚡️ Larger compute options: 48 and 64 CPU

- 📋 Backup report for compliance

- 🗺️ New router for Tiger Cloud Console

All the latest features and updates to Tiger Cloud.

TimescaleDB 2.22.1 – configurable indexing, enhanced partitioning, and faster queries

October 10, 2025

TimescaleDB 2.22.1 introduces major performance and flexibility improvements across indexing, compression, and query execution. TimescaleDB 2.22.1 was released on September 30th and is now available to all users of Tiger.

Highlighted features

Configurable sparse indexes: manually configure sparse indexes (min-max or bloom) on one or more columns of compressed hypertables, optimizing query performance for specific workloads and reducing I/O. In previous versions, these were automatically created based on heuristics and could not be modified.

UUIDv7 support: native support for UUIDv7 for both compression and partitioning. UUIDv7 embeds a time component, improving insert locality and enabling efficient time-based range queries while maintaining global uniqueness.

Vectorized UUID compression: new vectorized compression for UUIDv7 columns doubles query performance and improves storage efficiency by up to 30%.

UUIDv7 partitioning: hypertables can now be partitioned on UUIDv7 columns, combining time-based chunking with globally unique IDs—ideal for large-scale event and log data.

Multi-column SkipScan: expands SkipScan to support multiple distinct keys, delivering millisecond-fast deduplication and DISTINCT ON queries across billions of rows. Learn more in our blog post and documentation.

Compression improvements: default segmentby and orderby settings are now applied at compression time for each chunk, automatically adapting to evolving data patterns for better performance. These settings were previously set at the hypertable level and fixed across all chunks.

Deprecations

The experimental Hypercore Table Access Method (TAM) has been removed in this release following advancements in the columnstore architecture.

For a comprehensive list of changes, refer to the TimescaleDB 2.22 & 2.22.1 release notes.

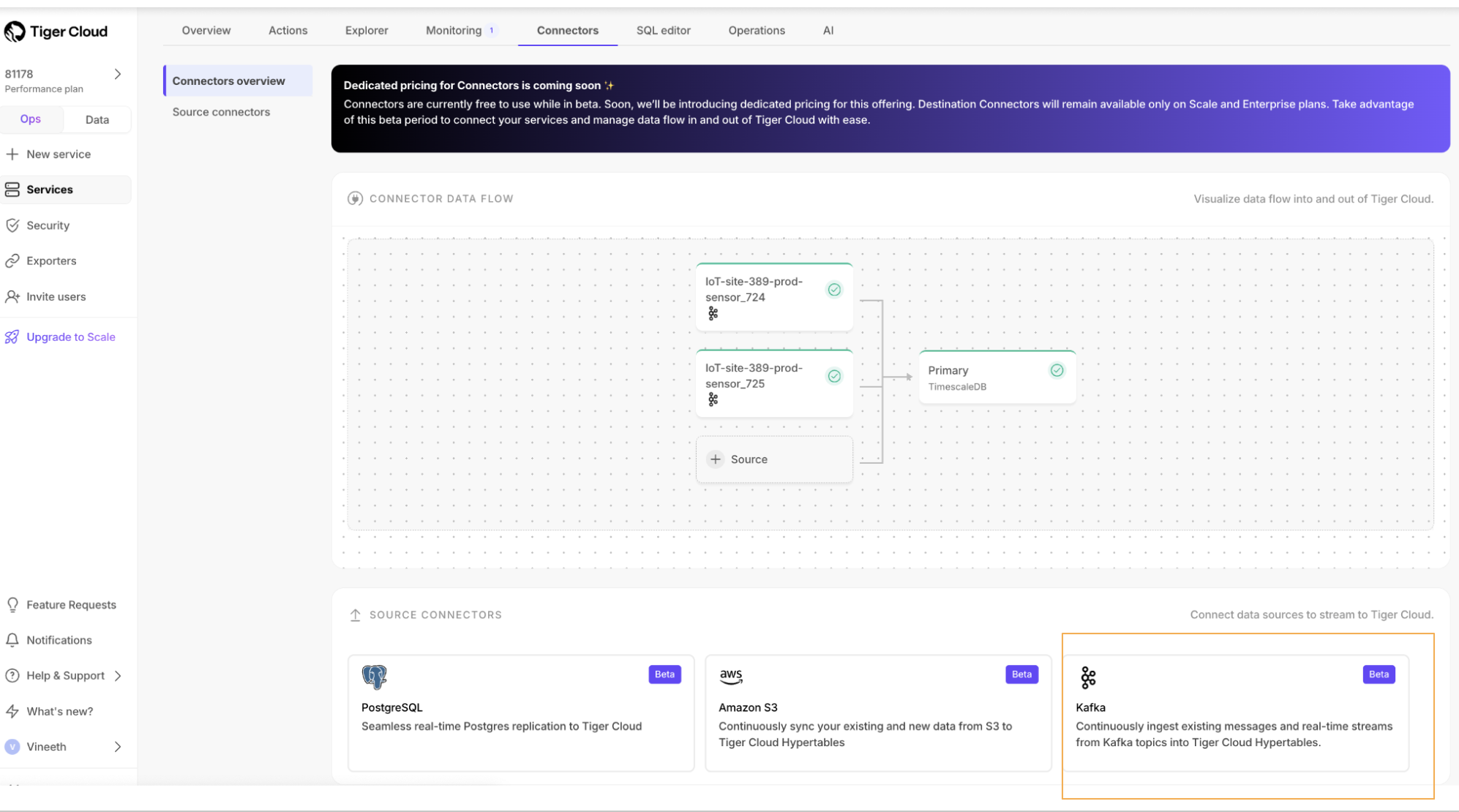

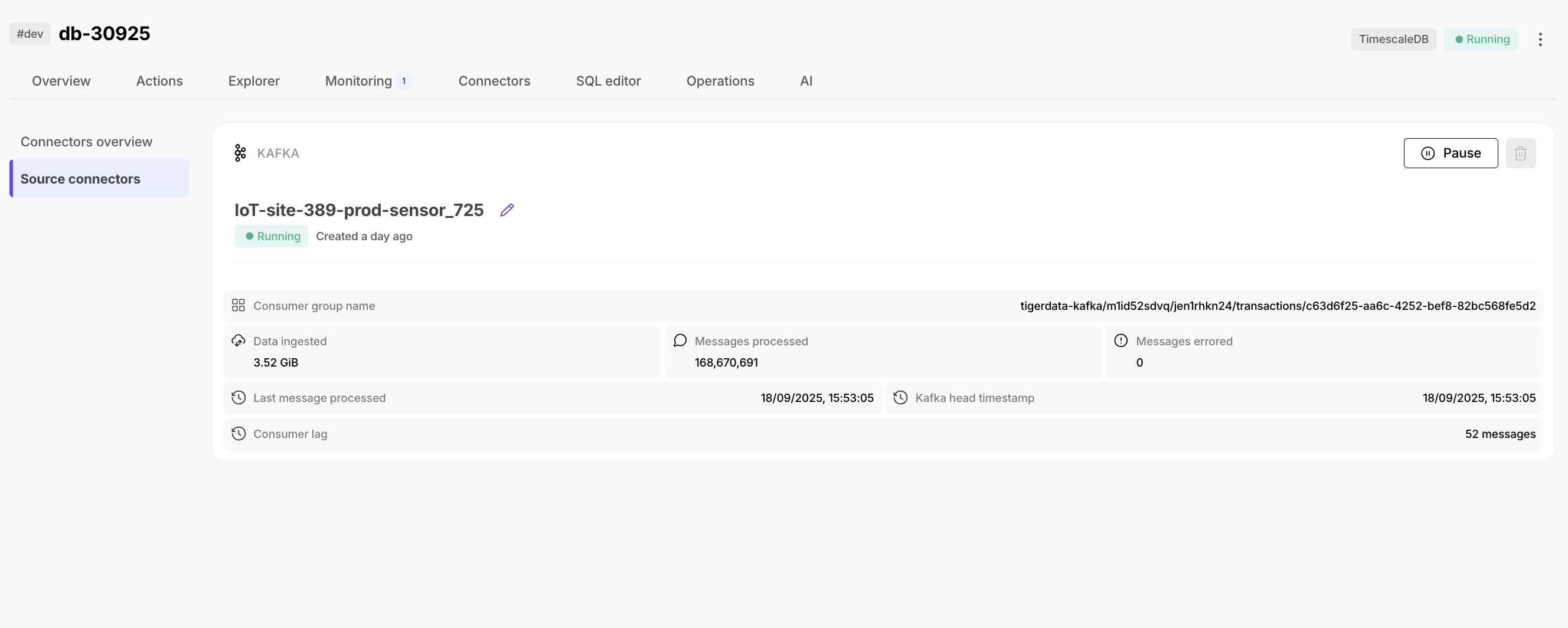

Kafka Source Connector (beta)

September 19, 2025

The new Kafka Source Connector enables you to connect your existing Kafka clusters directly to Tiger Cloud and ingest data from Kafka topics into hypertables. Developers often build proxies or run JDBC Sink Connectors to bridge Kafka and Tiger Cloud, which is error-prone and time-consuming. With the Kafka Source Connector, you can seamlessly start ingesting your Kafka data natively without additional middleware.

- Supported formats: AVRO

- Supported platforms: Confluent Cloud and Amazon Managed Streaming for Apache Kafka

Phased update rollouts, pg_cron, larger compute options, and backup reports

September 12, 2025

🛡️ Phased rollouts for TimescaleDB minor releases

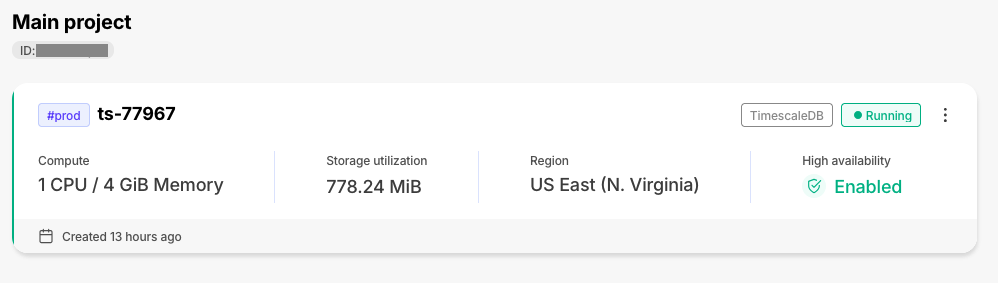

Starting with TimescaleDB 2.22.0, minor releases will now roll out in phases. Services tagged #dev will get upgraded first, followed by #prod after 21 days. This gives you time to validate upgrades in #dev before they reach #prod services. Subscribe to get an email notification before your #prod service is upgraded. See Maintenance and upgrades for details.

⏰ pg_cron extension

pg_cron is now available on Tiger Cloud! With pg_cron, you can:

- Schedule SQL commands to run automatically—like generating weekly sales reports or cleaning up old log entries every night at 2 AM.

- Automate routine maintenance tasks such as refreshing materialized views hourly to keep dashboards current.

- Eliminate external cron jobs and task schedulers, keeping all your automation logic within PostgreSQL.

To enable pg_cron on your service, contact our support team. We're working on making this self-service in future updates.

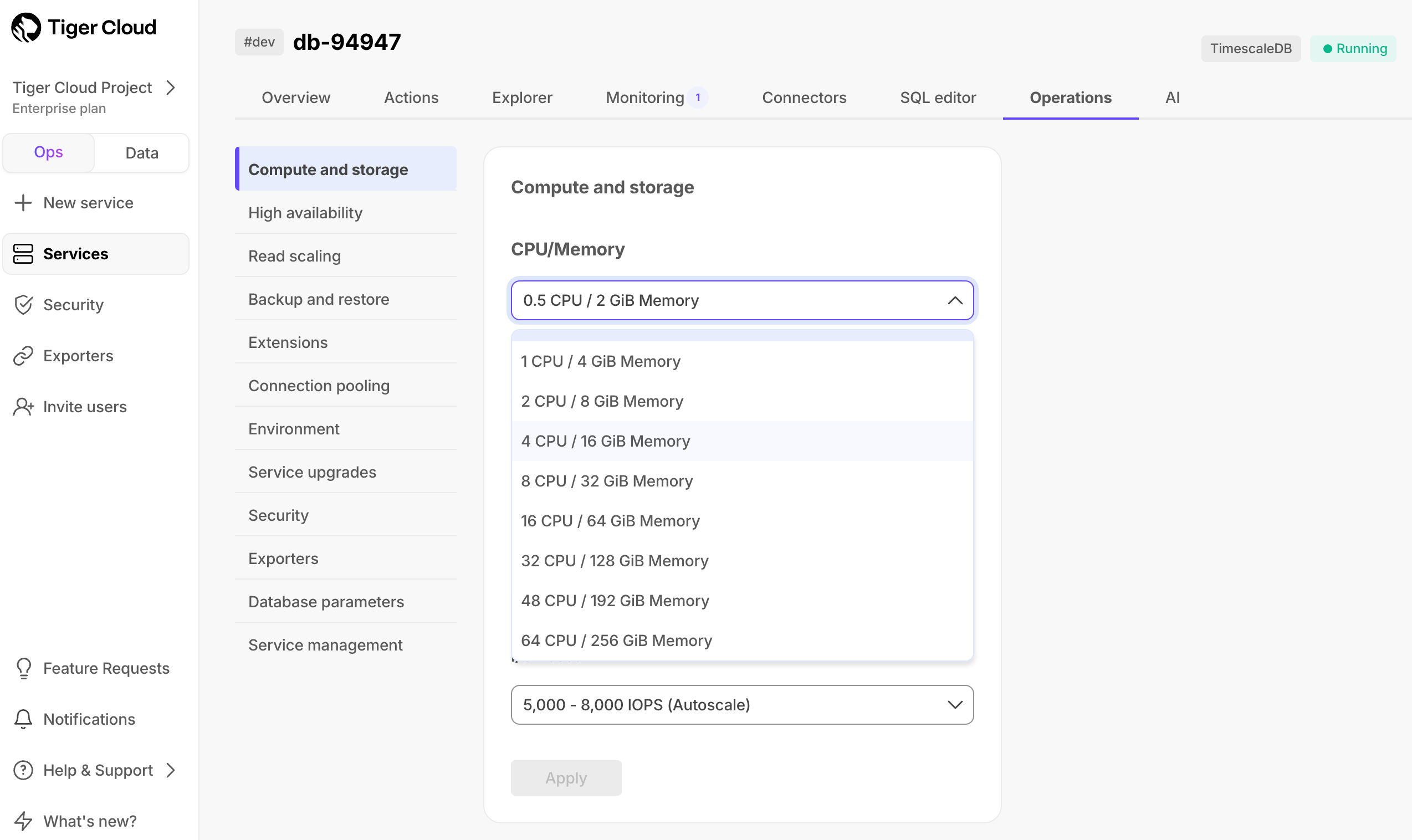

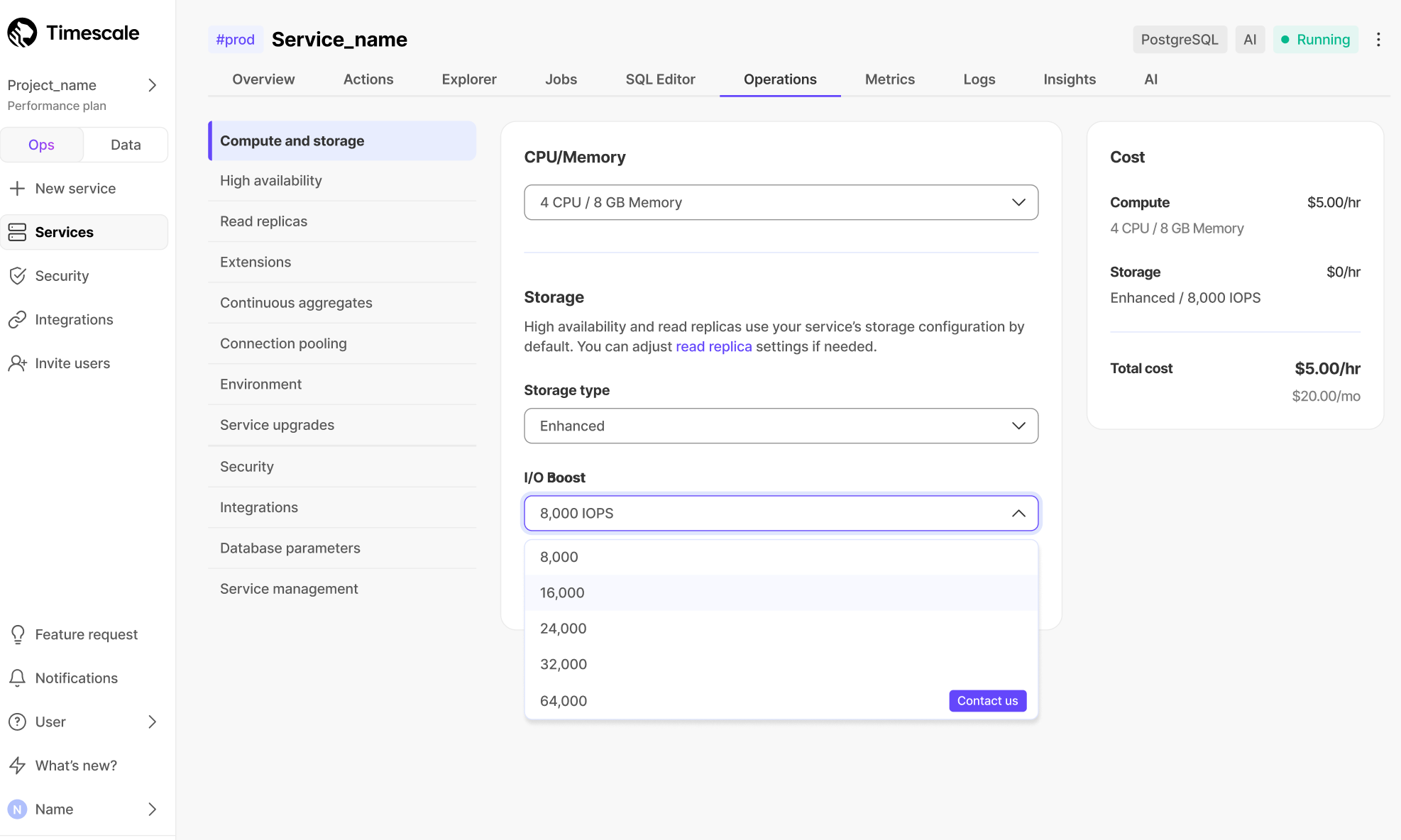

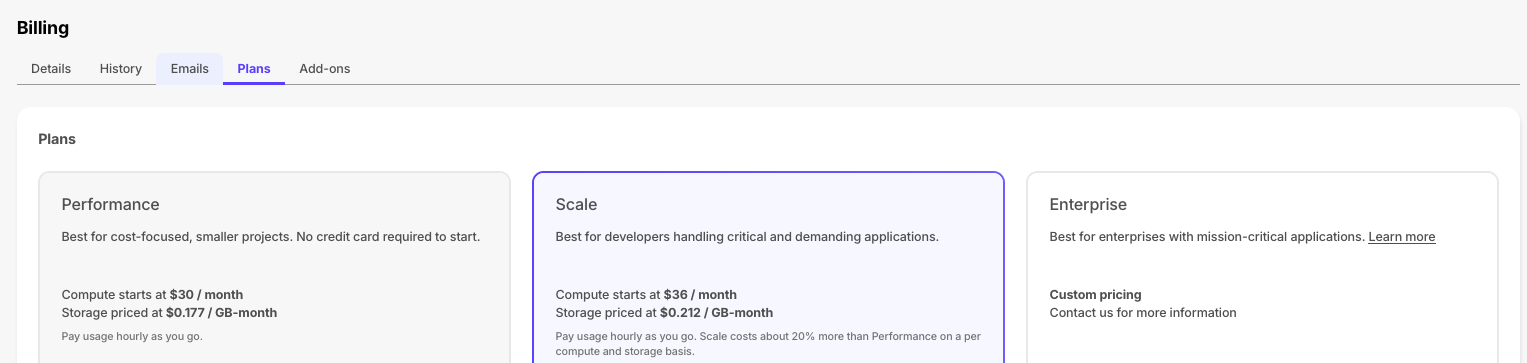

⚡️ Larger compute options: 48 and 64 CPU

For the most demanding workloads, you can now create services with 48 and 64 CPUs. These options are only available on our Enterprise plan, and they're dedicated instances that are not shared with other customers.

📋 Backup report for compliance

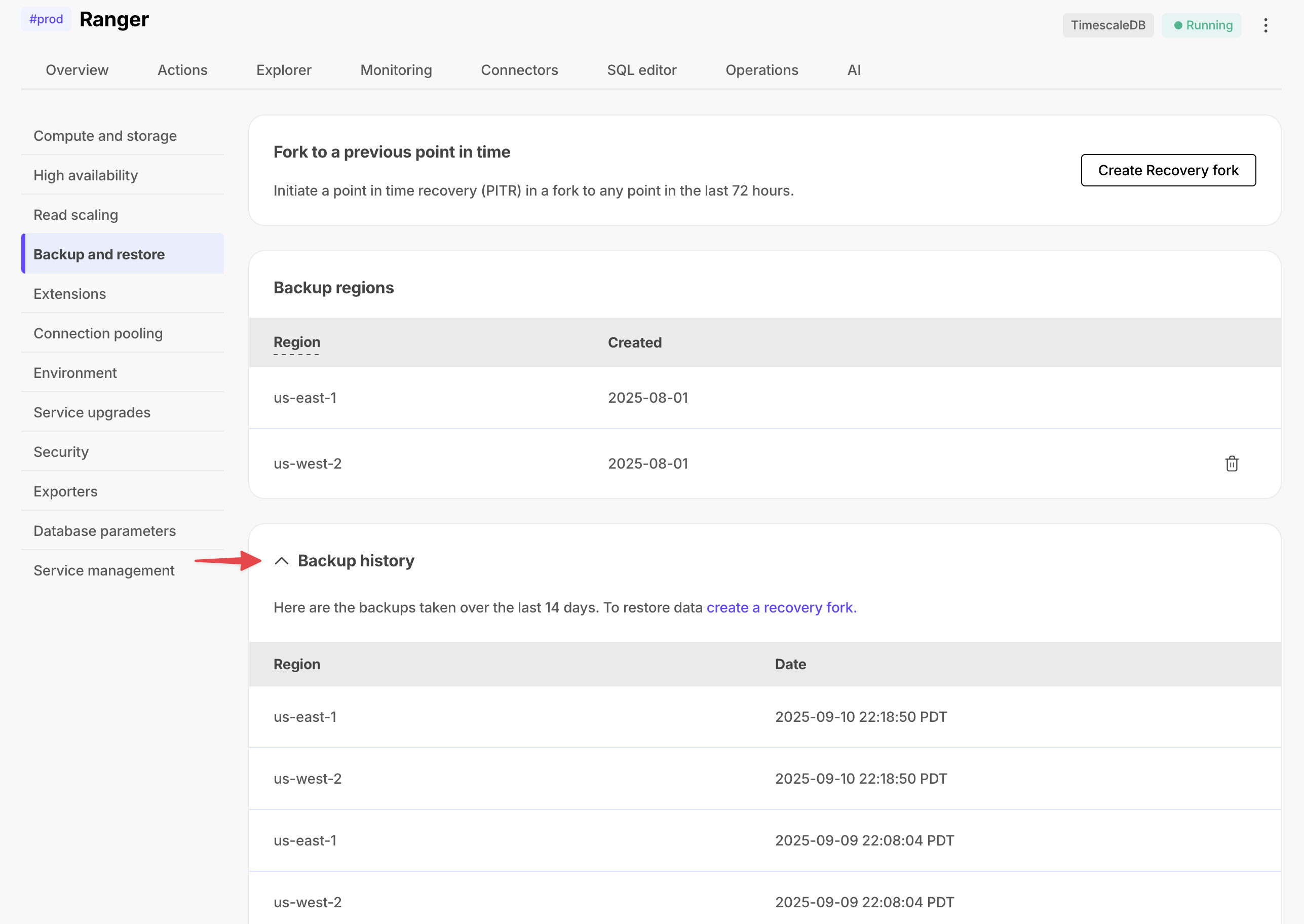

Scale and Enterprise customers can now see a list of their backups in Tiger Cloud Console. For customers with SOC 2 or other compliance needs, this serves as auditable proof of backups.

🗺️ New router for Tiger Cloud Console

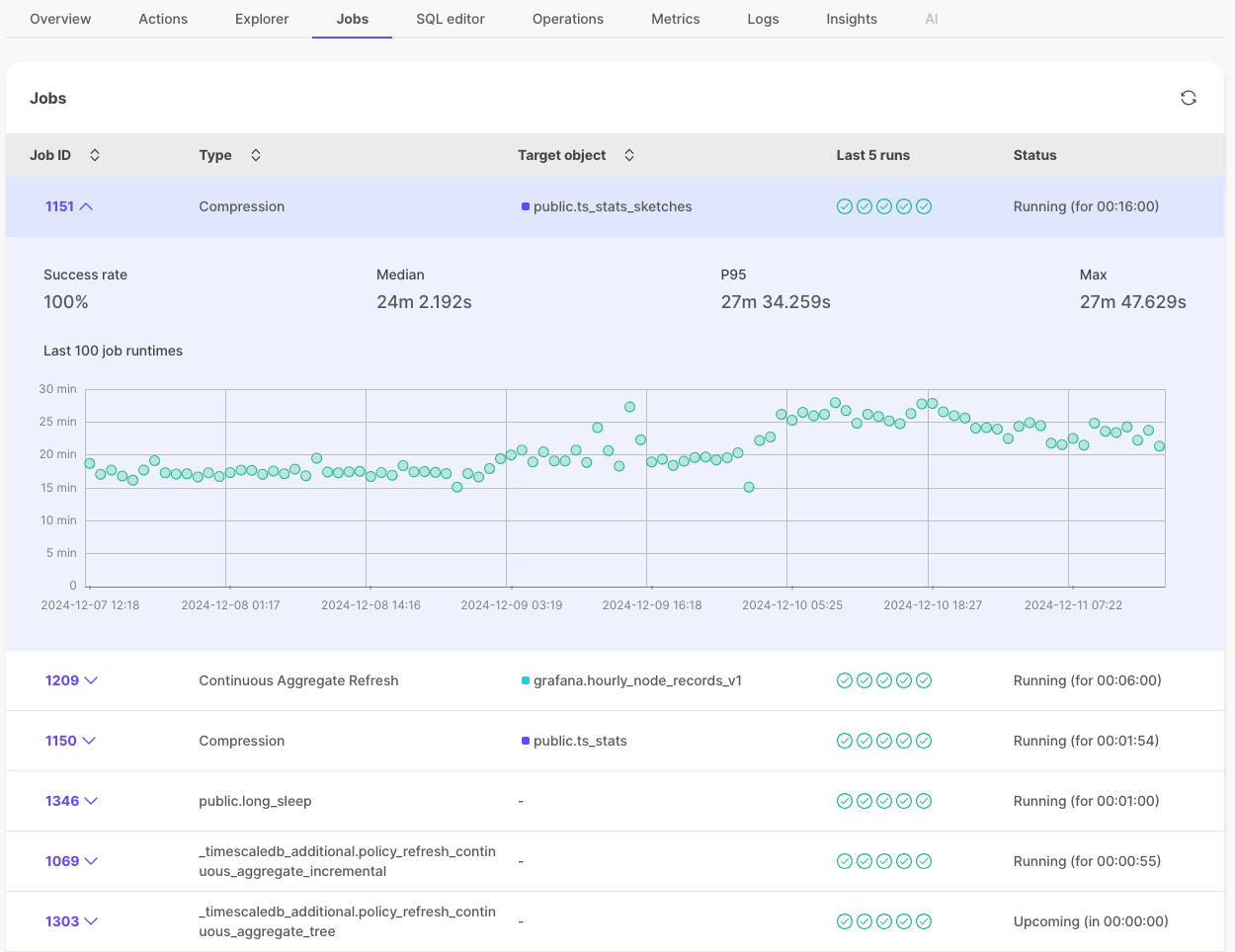

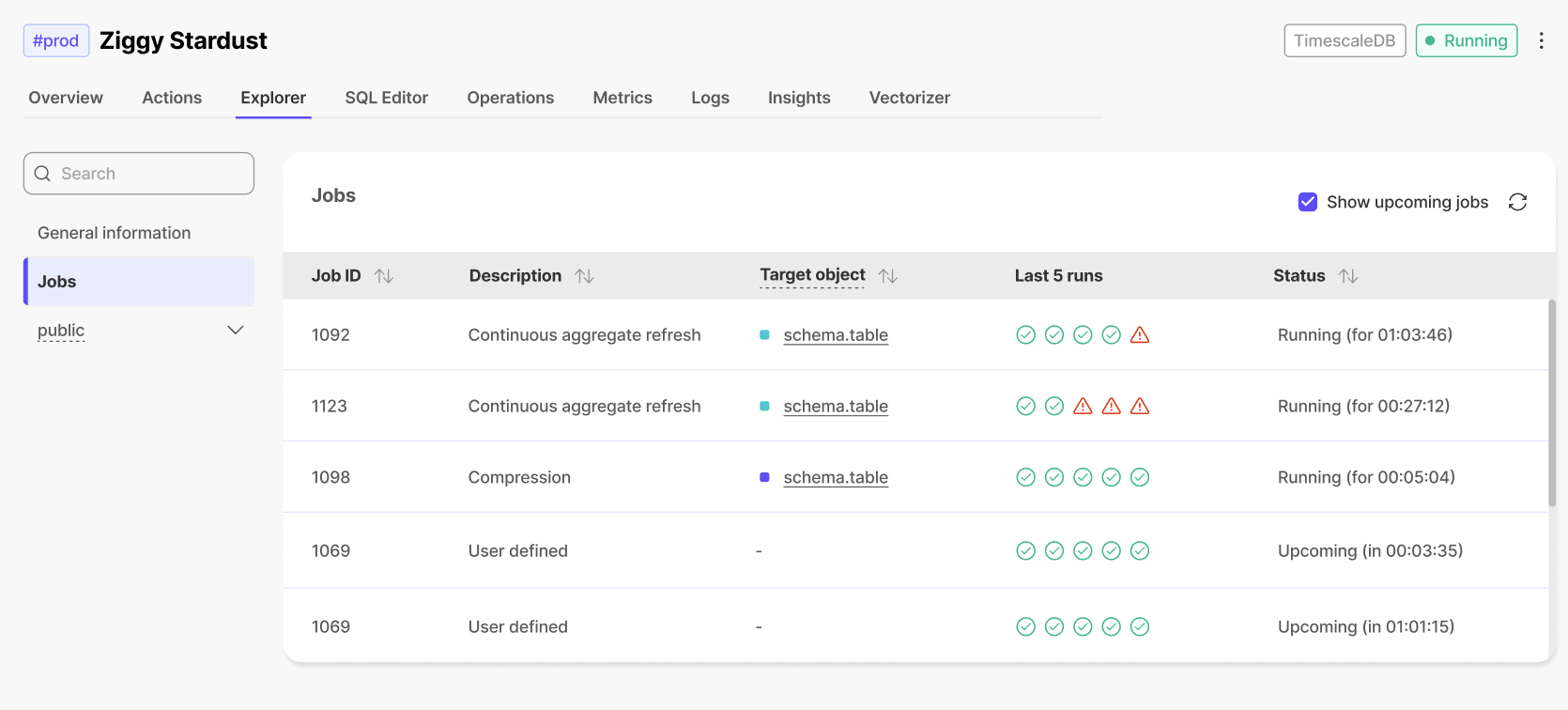

The UI just got snappier and easier to navigate with improved interlinking. For example, click an object in the Jobs page to see what hypertable the job is associated with.

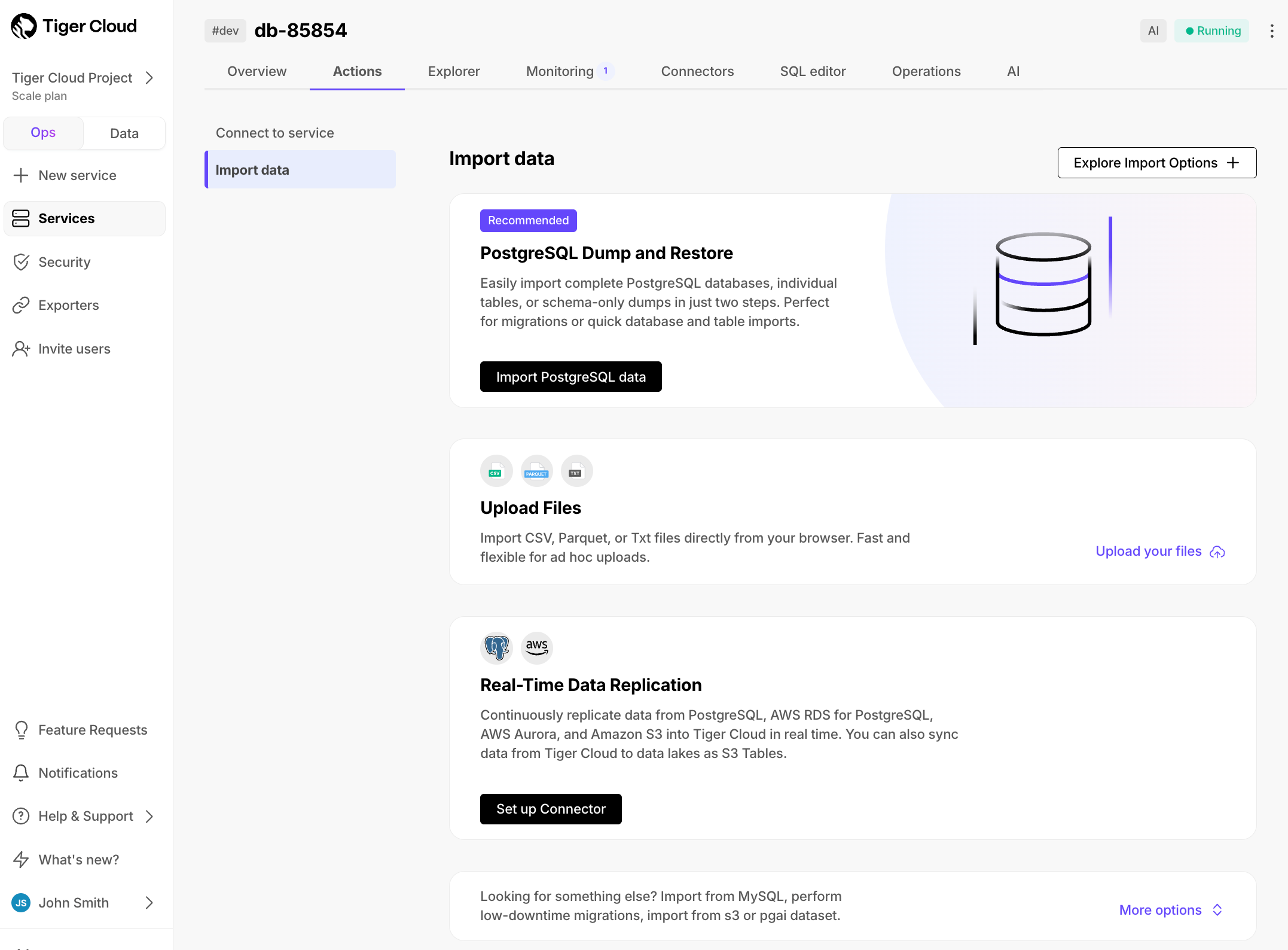

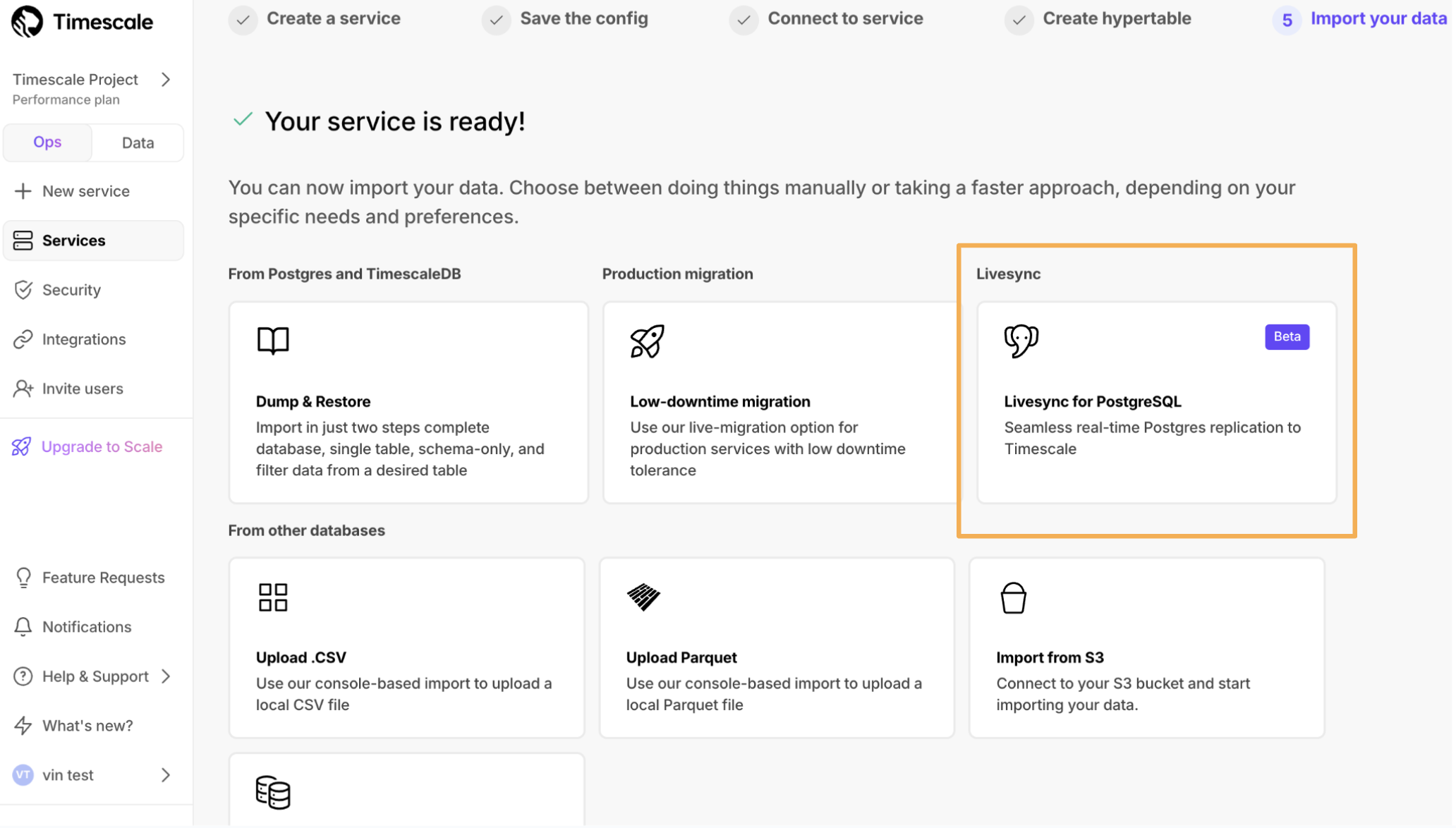

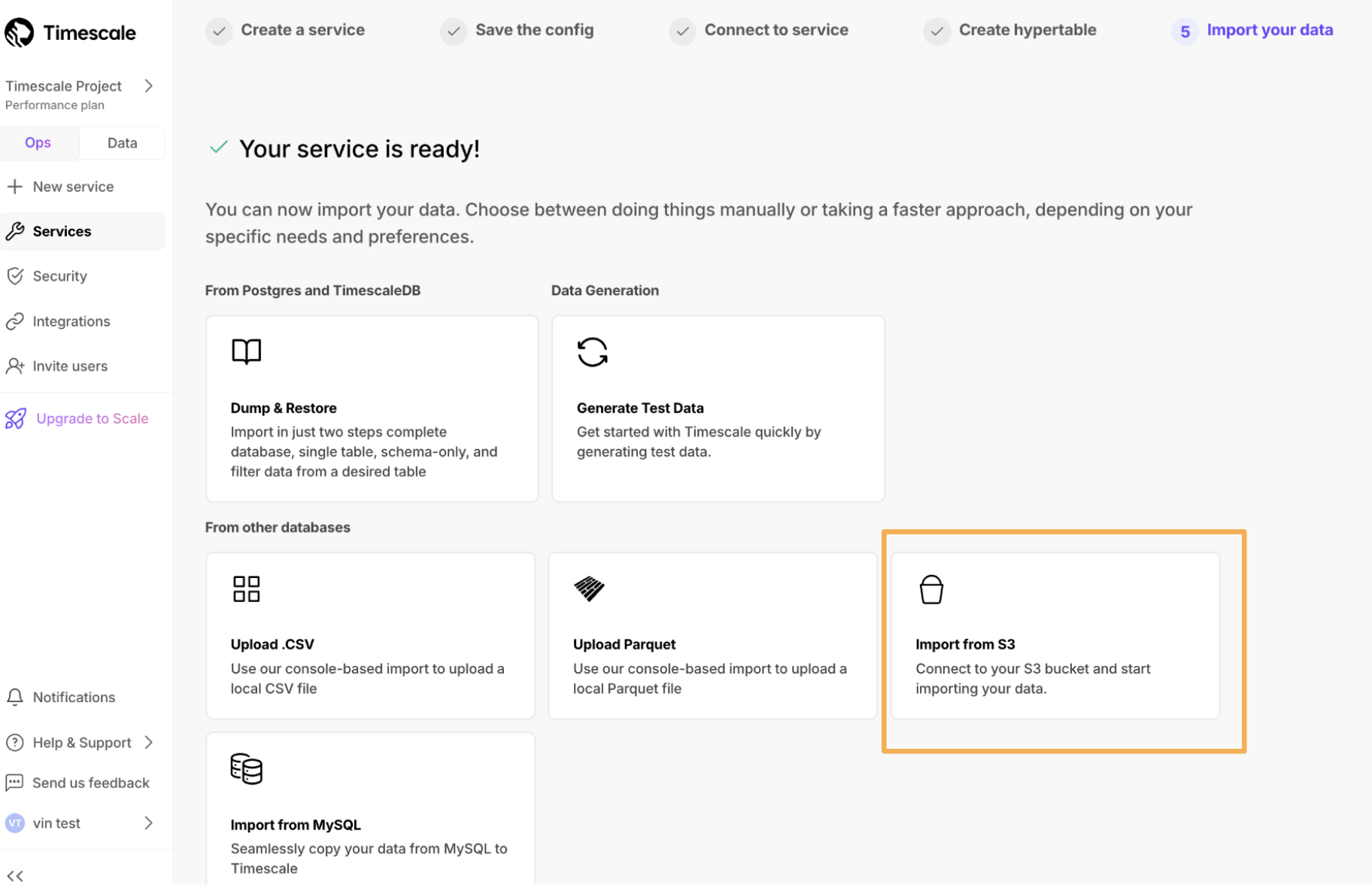

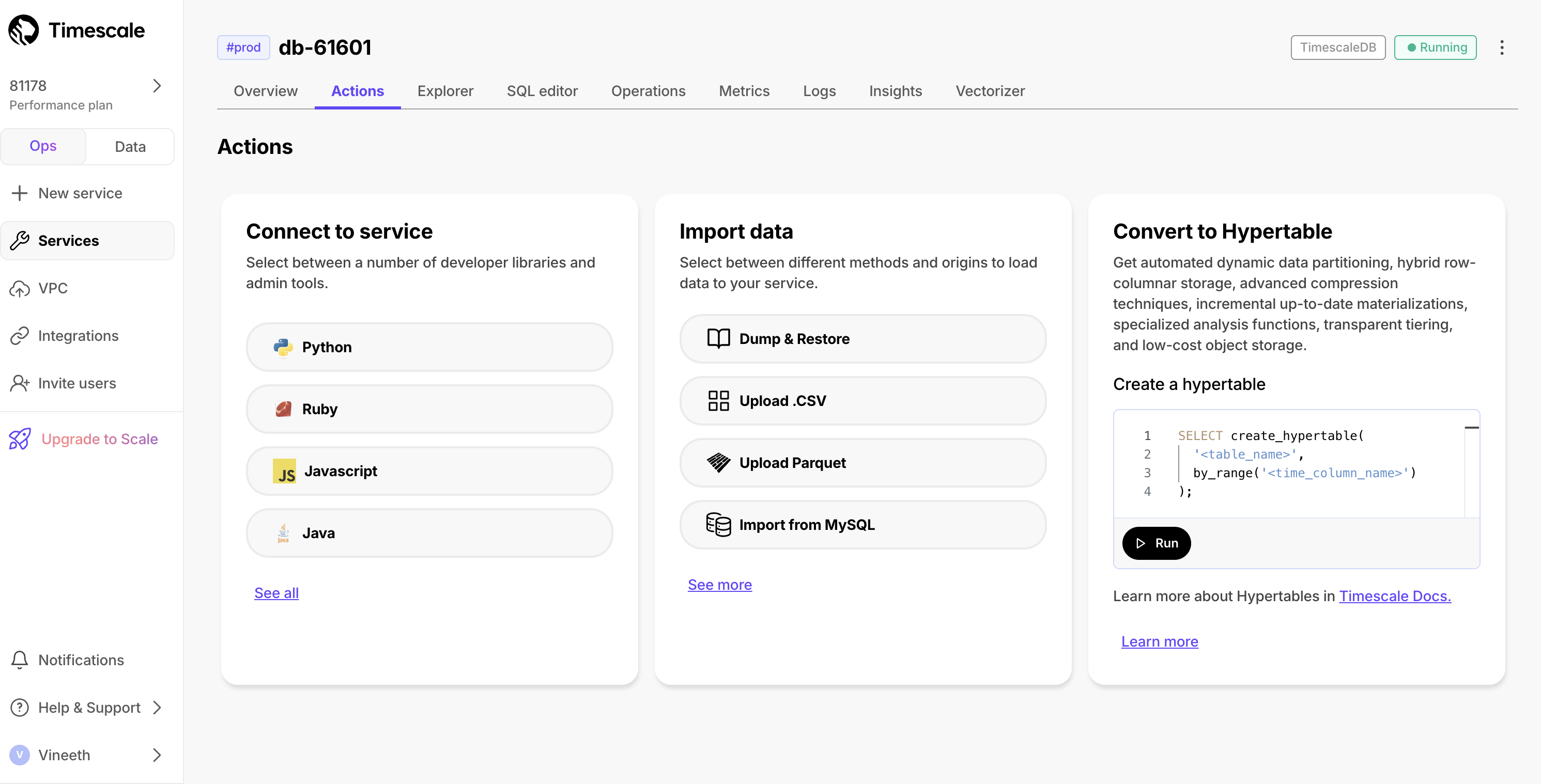

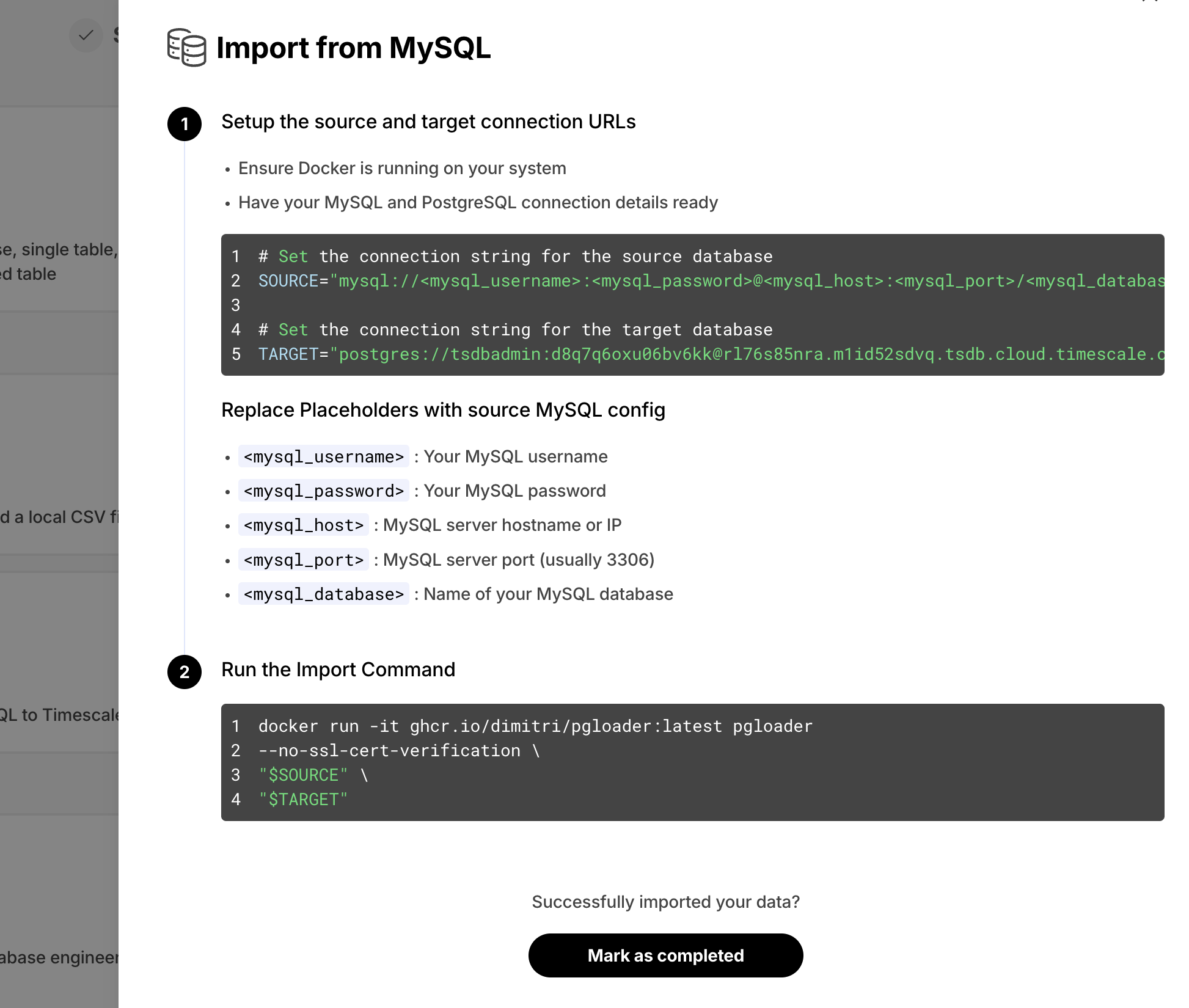

New data import wizard

September 5, 2025

To make navigation easier, we’ve introduced a cleaner, more intuitive UI for data import. It highlights the most common and recommended option, PostgreSQL Dump & Restore, and organizes all import options into clear categories.

The new categories include:

- PostgreSQL Dump & Restore

- Upload Files: CSV, Parquet, TXT

- Real-time Data Replication: source connectors

- Migrations & Other Options

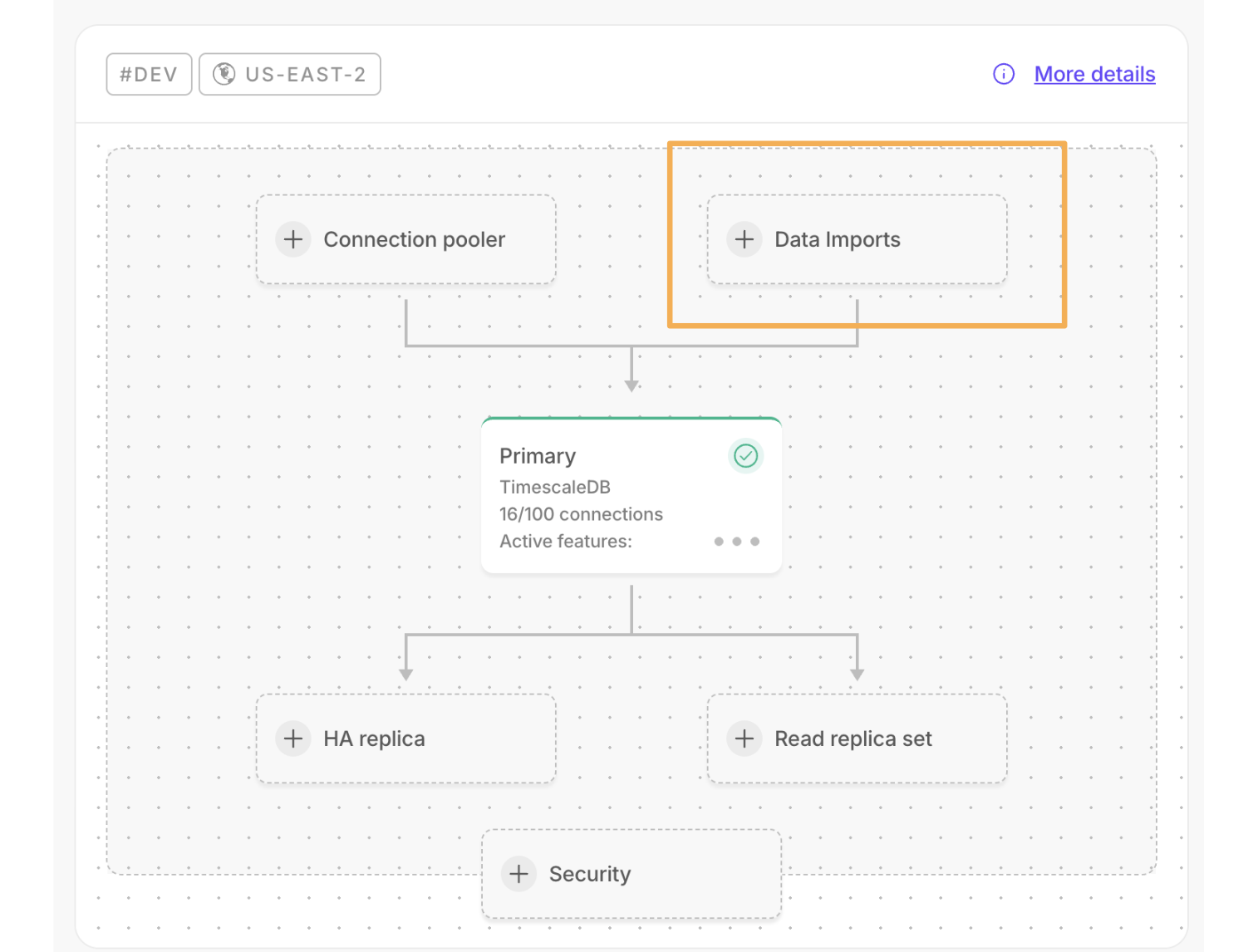

A new data import component has been added to the overview dashboard, providing a clear view of your imports. This includes quick start, in-progress status, and completed imports:

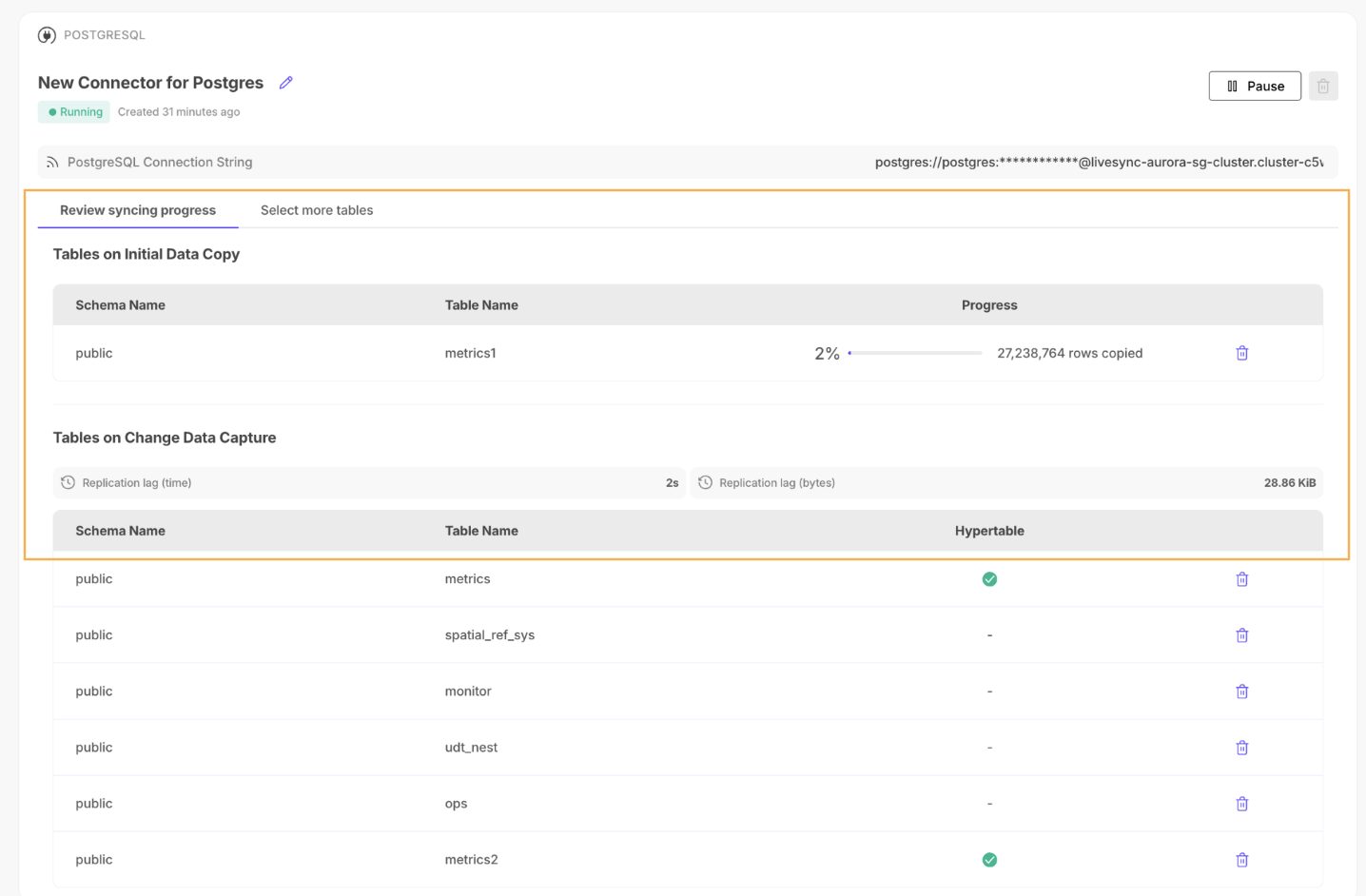

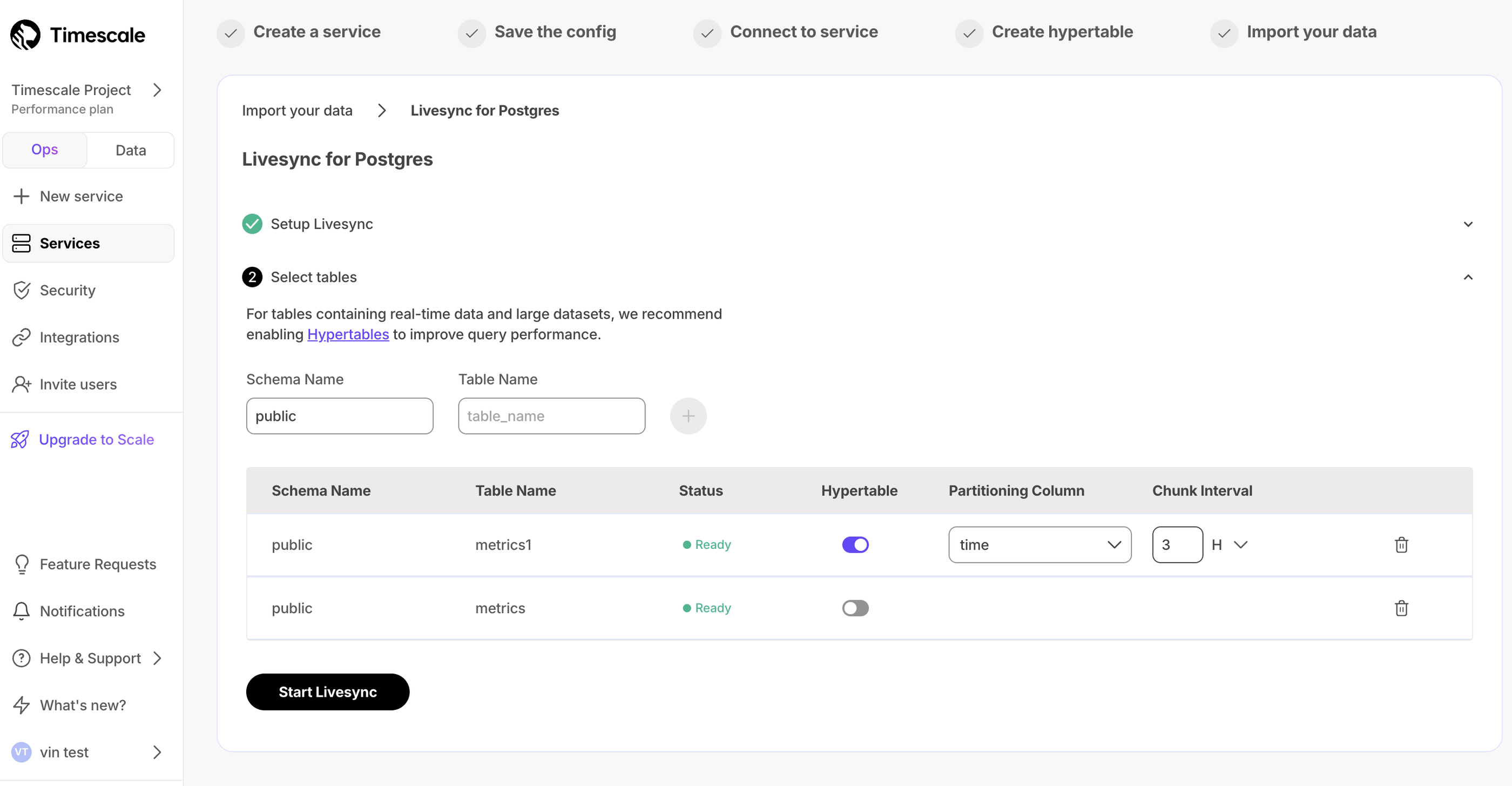

🚁 Enhancements to the Postgres source connector

August 28, 2025

- Easy table selection: You can now sync the complete source schema in one go. Select multiple tables from the drop-down menu and start the connector.

- Sync metadata: Connectors now display the following detailed metadata:

  - Initial data copy: The number of rows copied at any given point in time.

  - Change data capture: The replication lag represented in time and data size.

- Improved UX design: In-progress syncs now have separate sections showing the tables and metadata for initial data copy and change data capture, plus a dedicated tab where you can add more tables to the connector.

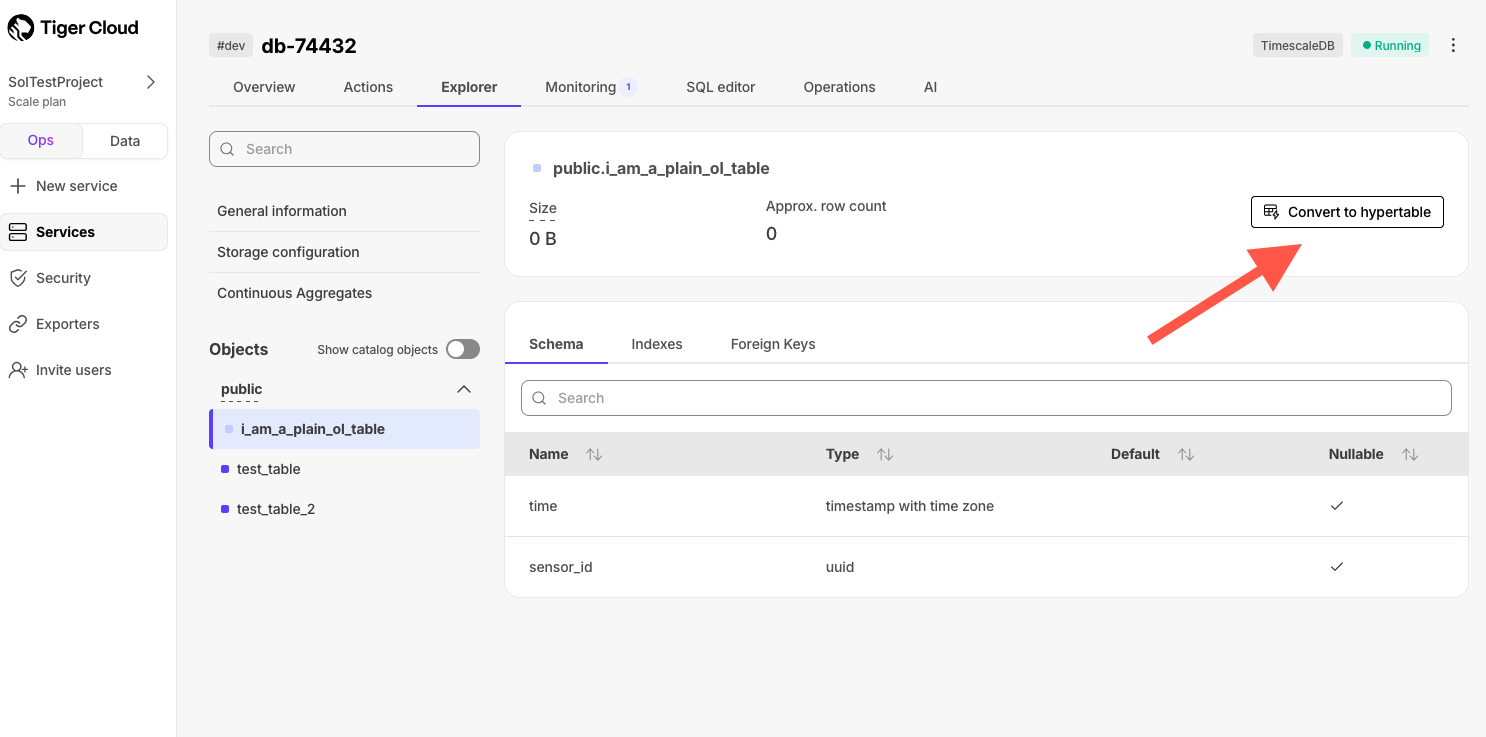

🦋 Developer role GA and hypertable transformation in Console

August 21, 2025

Developer role (GA)

The Developer role in Tiger Cloud is now generally available. It’s a project‑scoped permission set that lets technical users build and operate services, create or modify resources, run queries, and use observability—without admin or billing access. This enforces least‑privilege by default, reducing risk and audit noise, while keeping governance with Admins/Owners and billing with Finance. This means faster delivery (fewer access escalations), protected sensitive settings, and clear boundaries, so the right users can ship changes safely, while compliance and cost control remain intact.

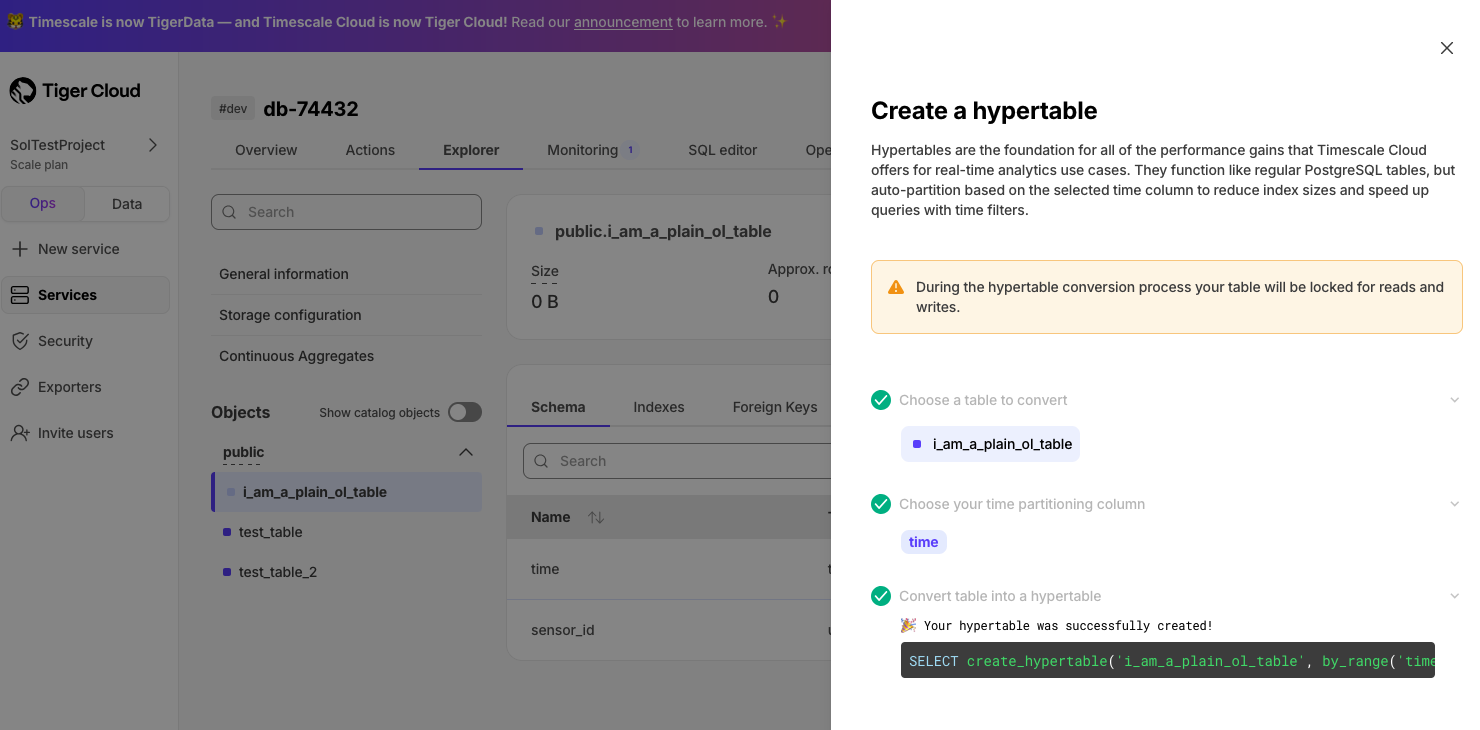

Transform a table to a hypertable from the Explorer

In Console, you can now easily create hypertables from your regular Postgres tables directly from the Explorer. Clicking on any Postgres table shows an option to open up the hypertable action. Follow the simple steps to set up your partition key and transform the table to a hypertable.

Cross-region backups, Postgres options, and onboarding

August 14, 2025

Cross-region backups

You can now store backups in a different region than your service, which improves resilience and helps meet enterprise compliance requirements. Cross‑region backups are available on our Enterprise plan for free at launch; usage‑based billing may be introduced later. For full details, please see the docs.

Standard Postgres instructions for onboarding

We have added basic instructions for INSERT, UPDATE, and DELETE commands to Tiger Cloud Console. They are now shown as an option on the Import Data page.

Postgres-only service type

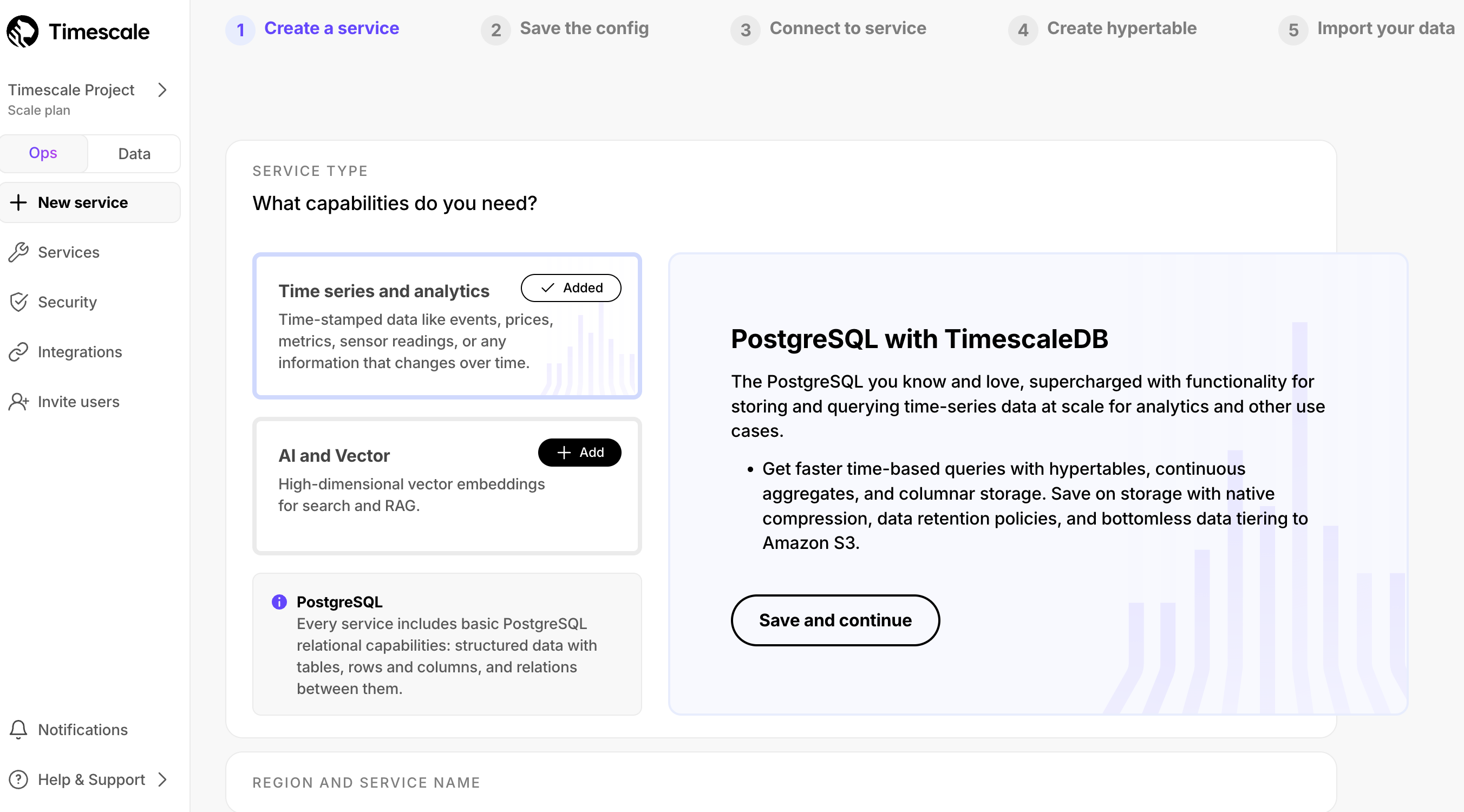

In Tiger Cloud, you now have an option to choose Postgres-only in the service creation flow. Just click Looking for plan PostgreSQL? on the Service Type screen.

Viewer role GA, EXPLAIN plans, and chunk index sizes in Explorer

July 31, 2025

GA release of the viewer role in role-based access

The viewer role is now generally available for all projects and organizations. It provides read-only access to services, metrics, and logs without modify permissions. Viewers cannot create, update, or delete resources, nor manage users or billing. It's ideal for auditors, analysts, and cross-functional collaborators who need visibility but not control.

EXPLAIN plans in Insights

You can now find automatically generated EXPLAIN plans on queries that take longer than 10 seconds within Insights. EXPLAIN plans can be very useful to determine how you may be able to increase the performance of your queries.

Chunk index size in Explorer

Find the index size of hypertable chunks in the Explorer. This information can be very valuable to determine if a hypertable's chunk size is properly configured.

TimescaleDB v2.21 and catalog objects in the Console Explorer

July 25, 2025

🏎️ TimescaleDB v2.21—ingest millions of rows/second and faster columnstore UPSERTs and DELETEs

TimescaleDB v2.21 was released on July 8 and is now available to all developers on Tiger Cloud.

Highlighted features in TimescaleDB v2.21 include:

- High-scale ingestion performance (tech preview): introducing a new approach that compresses data directly into the columnstore during ingestion, demonstrating over 1.2M rows/second in tests with bursts over 50M rows/second. We are actively seeking design partners for this feature.

- Faster data updates (UPSERTs): columnstore UPSERTs are now 2.5x faster for heavily constrained tables, building on the 10x improvement from v2.20.

- Faster data deletion: DELETE operations on non-segmentby columns are 42x faster, reducing I/O and bloat.

- Reduced bloat after recompression: optimized recompression processes lead to less bloat and more efficient storage.

- Enhanced continuous aggregates:

  - Concurrent refresh policies enable multiple continuous aggregates to update concurrently.

  - Batched refreshes are now enabled by default for more efficient processing.

- Complete chunk management: full support for splitting columnstore chunks, complementing the existing merge capabilities.

For a comprehensive list of changes, refer to the TimescaleDB v2.21 release notes.

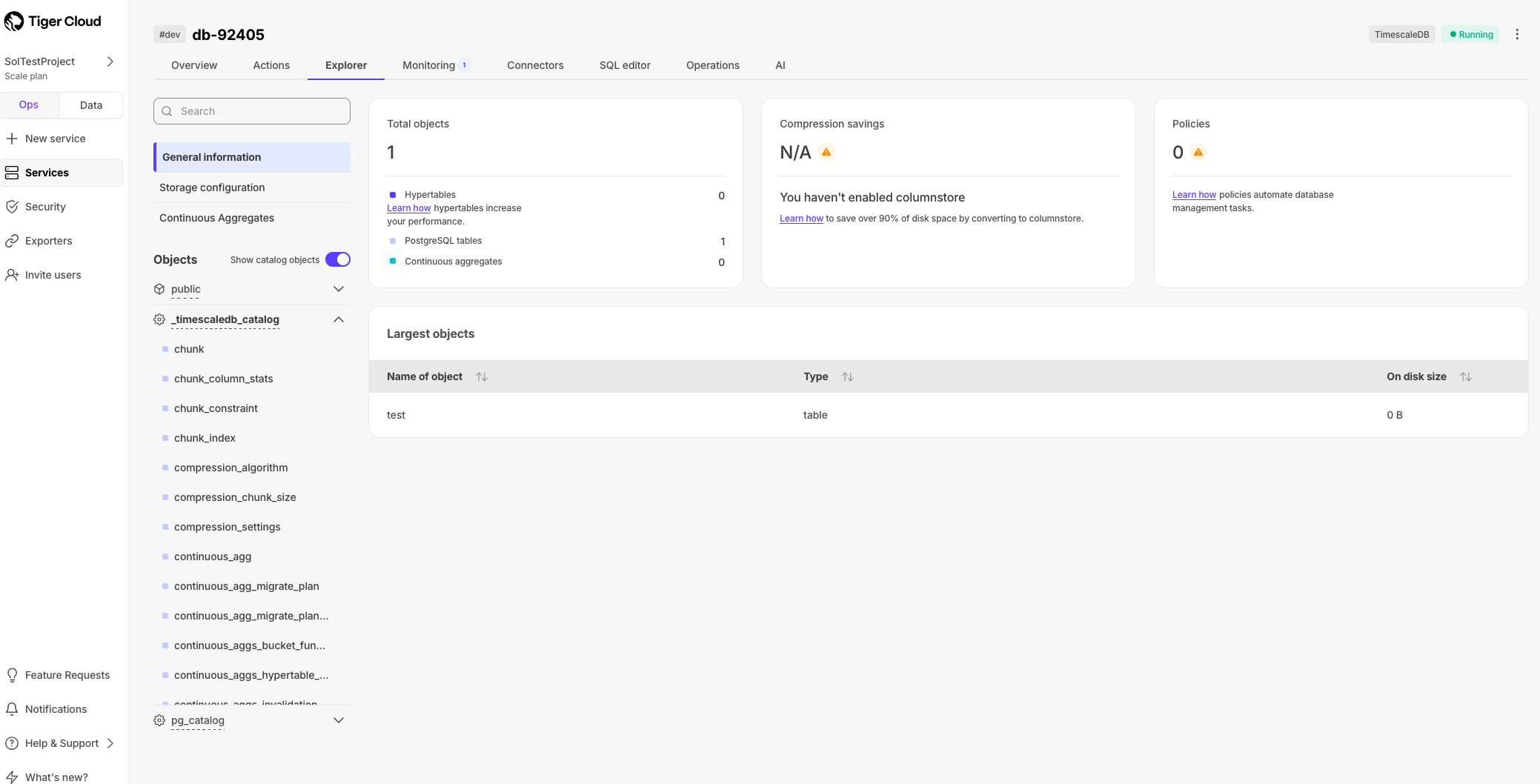

🔬 Catalog objects available in the Console Explorer

You can now view catalog objects in the Console Explorer. Check out the internal schemas for PostgreSQL and TimescaleDB to better understand the inner workings of your database. To turn on/off visibility, select your service in Tiger Cloud Console, then click Explorer and toggle Show catalog objects.

Iceberg Destination Connector (Tiger Lake)

July 18, 2025

We have released a beta Iceberg destination connector that enables Scale and Enterprise users to integrate Tiger Cloud services with Amazon S3 tables. This enables you to connect Tiger Cloud to data lakes seamlessly. We are actively developing several improvements that will make the overall data lake integration process even smoother.

To use this feature, select your service in Tiger Cloud Console, then navigate to Connectors and select the Amazon S3 Tables destination connector. Integrate the connector to your S3 table bucket by providing the ARN roles, then simply select the tables that you want to sync into S3 tables. See the documentation for details.

🔆 Console just got better

July 11, 2025

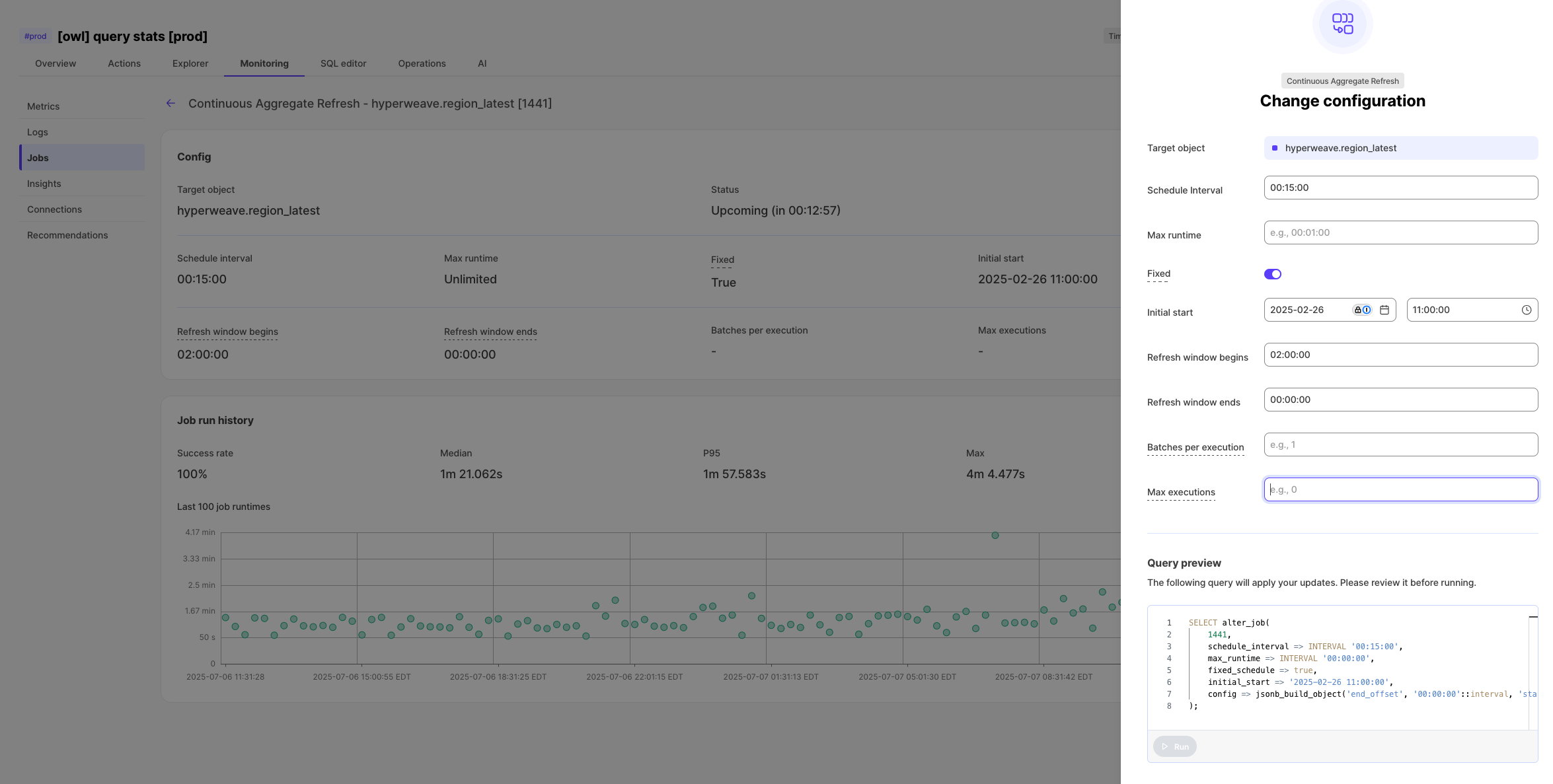

✏️ Editable jobs in Console

You can now edit jobs directly in Console! We've added the handy pencil icon in the top right corner of any job view. Click a job, hit the edit button, then make your changes. This works for all jobs, even user-defined ones. Tiger Cloud jobs come with custom wizards to guide you through the right inputs. This means you can spot and fix issues without leaving the UI - a small change that makes a big difference!

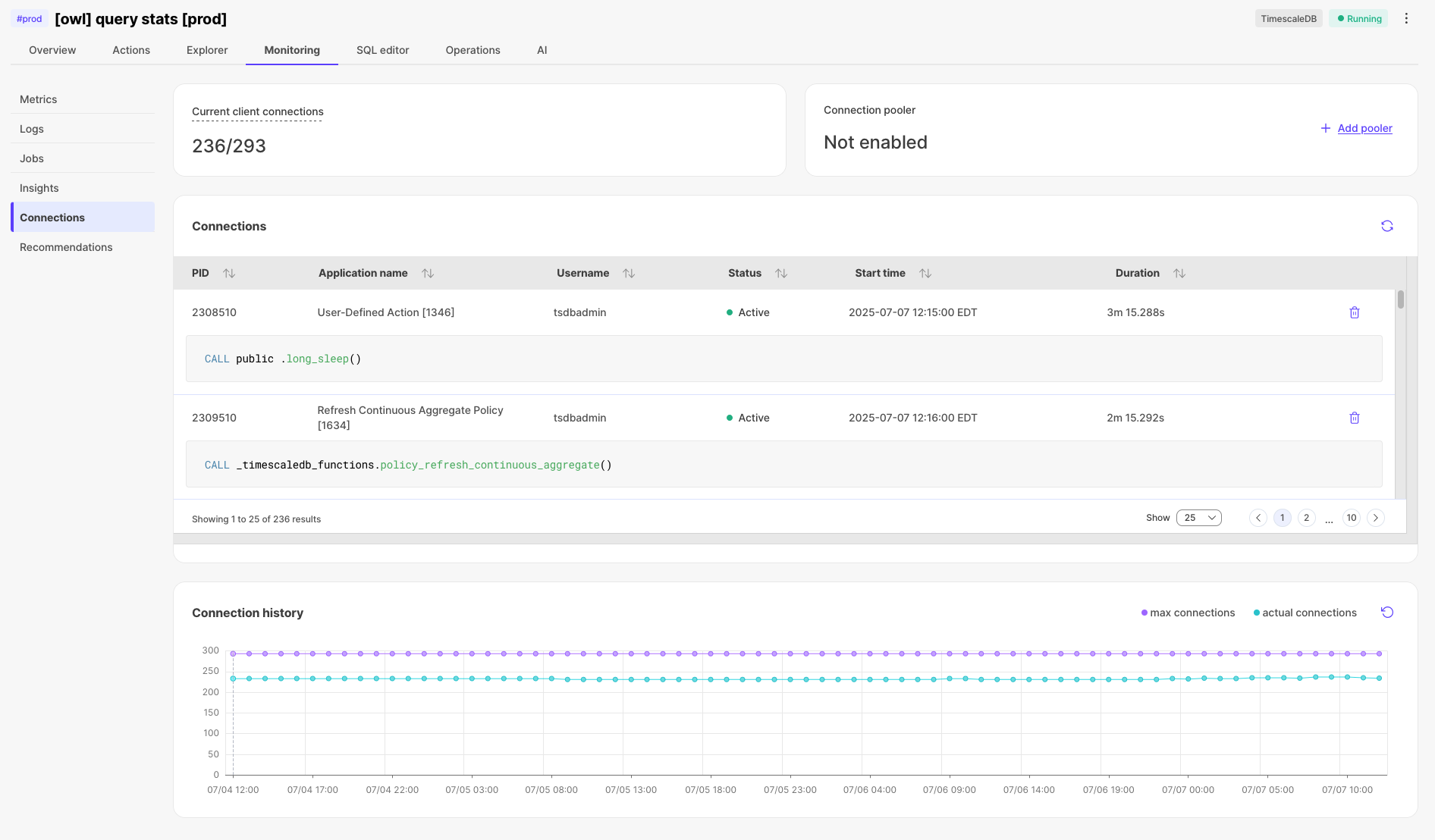

📊 Connection history

Now you can see your historical connection counts right in the Connections tab! This helps spot those pesky connection management bugs before they impact your app. We're logging max connections every hour (sampled every 5 mins) and might adjust based on your feedback. Just another way we're making the Console more powerful for troubleshooting.

🔐 New in Public Beta: Read-Only Access through RBAC

We’ve just launched Read/Viewer-only access for Tiger Cloud projects into public beta!

You can now invite users with view-only permissions — perfect for folks who need to see dashboards, metrics, and query results, without the ability to make changes.

This has been one of our most requested RBAC features, and it's a big step forward in making Tiger Cloud more secure and collaborative.

No write access. No config changes. Just visibility.

In Console, go to Project Settings > Users & Roles to try it out, and let us know what you think!

👀 Super useful doc updates

July 4, 2025

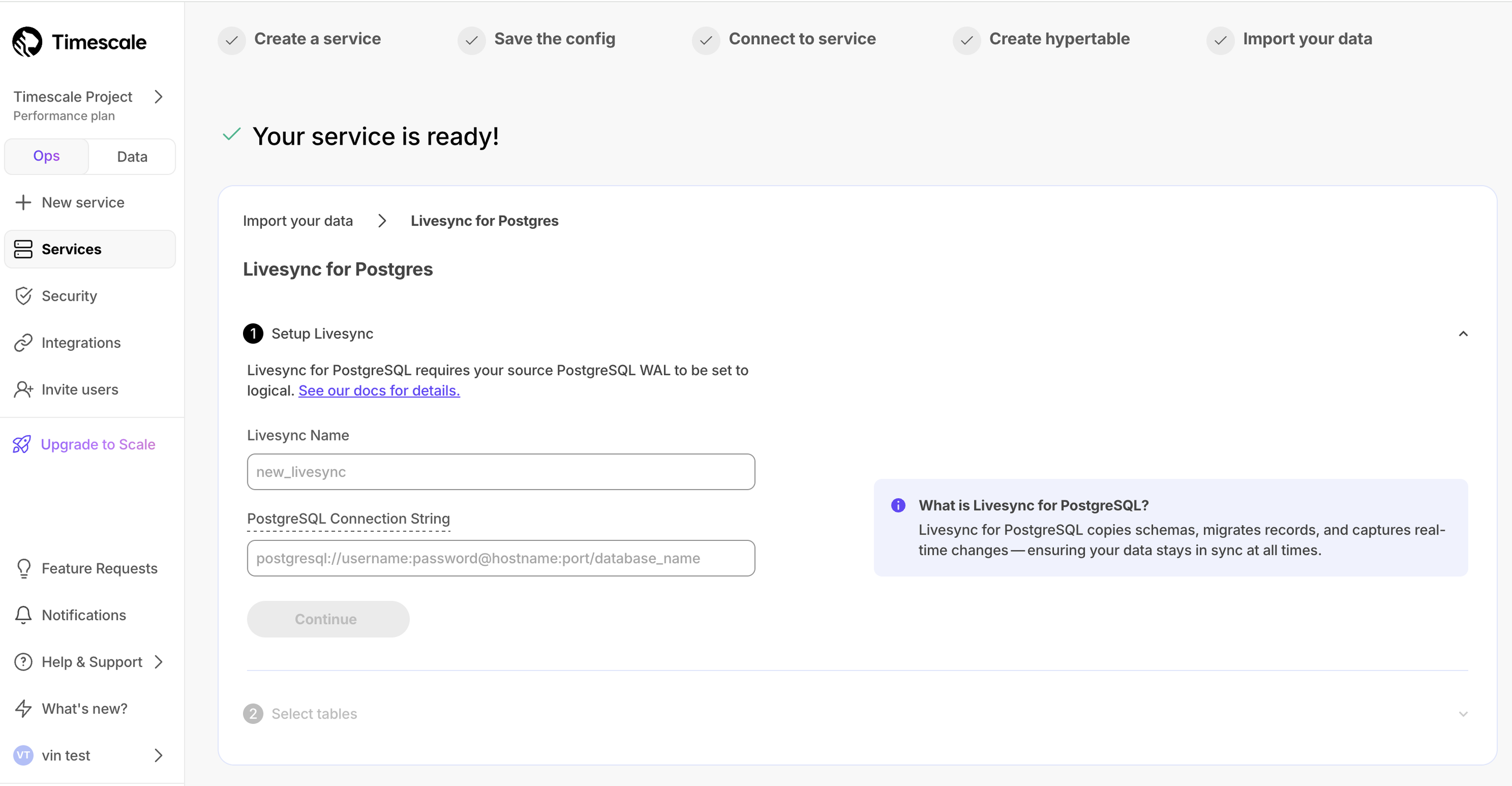

Updates to instructions for livesync

In the Console UI, we have clarified the step-by-step procedure for setting up your livesync from self-hosted installations by:

- Adding definitions for some flags when running your Docker container.

- Including more detailed examples of the output from the table synchronization list.

New optional argument for add_continuous_aggregate_policy API

Added the new refresh_newest_first optional argument that controls the order of incremental refreshes.

🚀 Multi-command queries in SQL editor, improved job page experience, multiple AWS Transit Gateways, and a new service creation flow

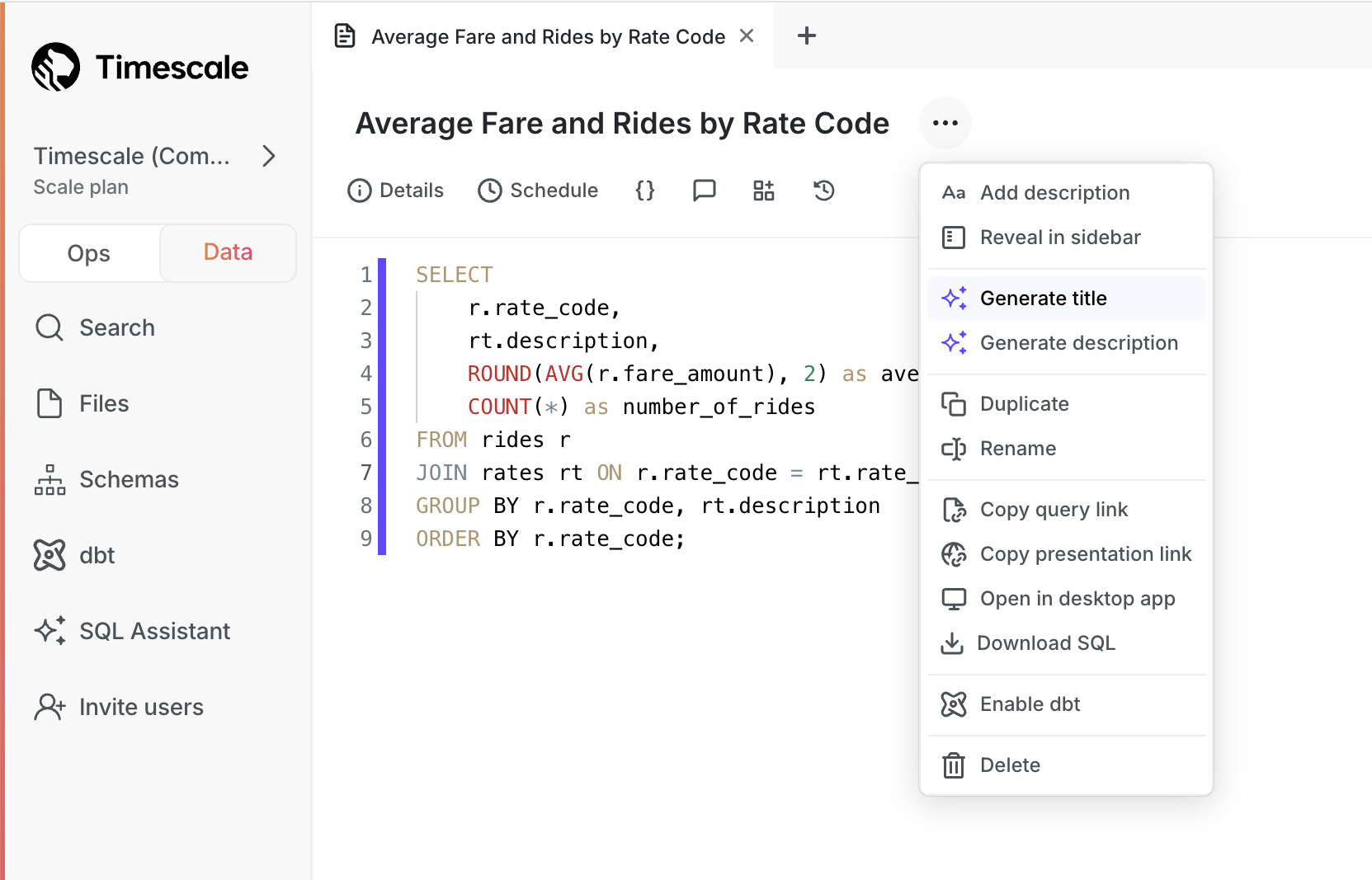

June 20, 2025

Run multiple statements in SQL editor

Execute complex queries with multiple commands in a single run—perfect for data transformations, table setup, and batch operations.

Branch conversations in SQL assistant

Start new discussion threads from any point in your SQL assistant chat to explore different approaches to your data questions more easily.

Smarter results table

- Expand JSON data instantly: turn complex JSON objects into readable columns with one click—no more digging through nested data structures.

- Filter with precision: use a new smart filter to pick exactly what you want from a dropdown of all available values.

Jobs page improvements

Individual job pages now display their corresponding configuration for TimescaleDB job types—for example, columnstore, retention, CAgg refreshes, tiering, and others.

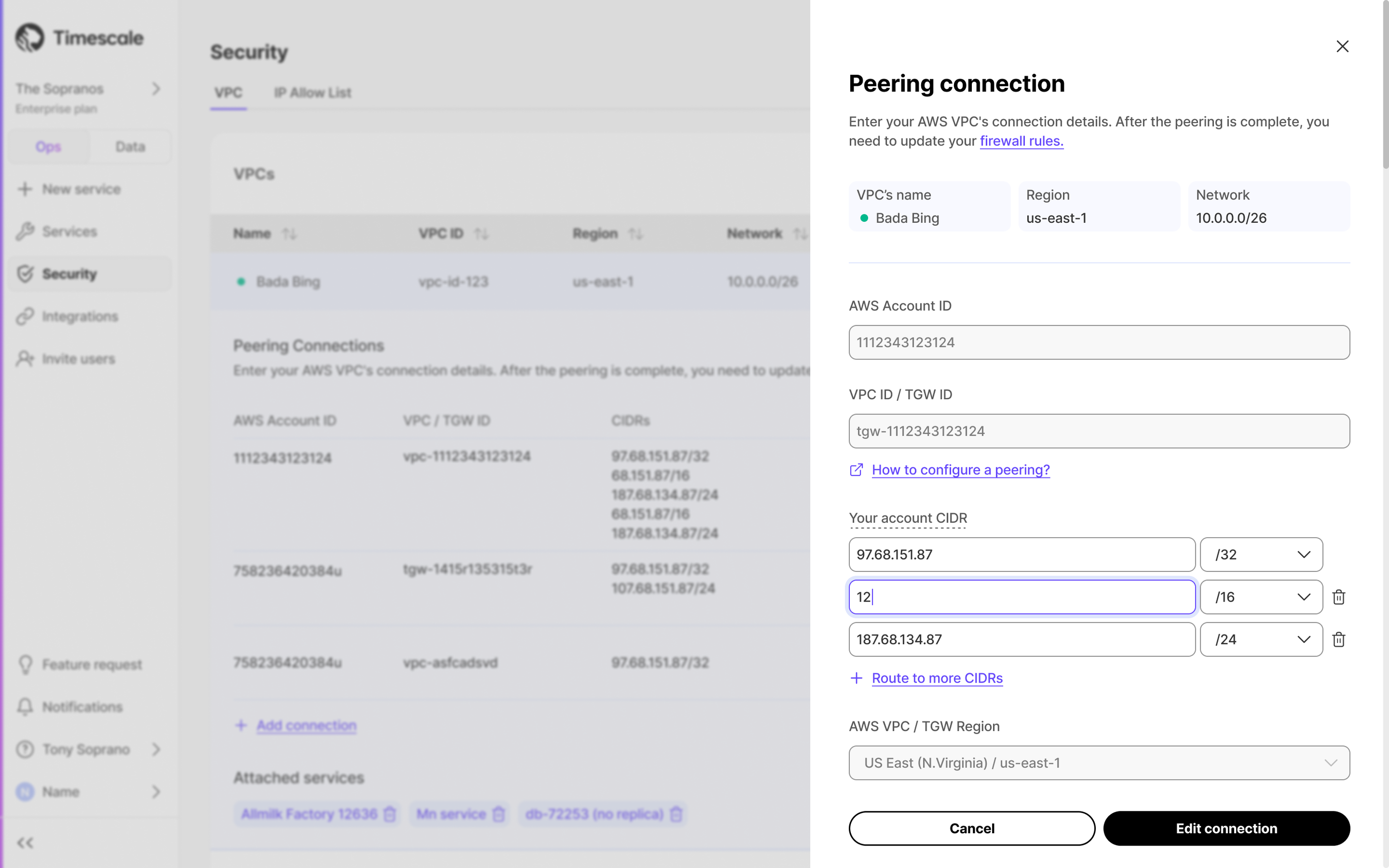

Multiple AWS Transit Gateways

You can now connect multiple AWS Transit Gateways, even when those gateways use overlapping CIDRs. Ideal for teams with zero-trust policies, this lets you keep each network path isolated.

How it works: when you create a new peering connection, Tiger Cloud reuses the existing Transit Gateway if you supply the same ID—otherwise it automatically creates a new, isolated Transit Gateway.

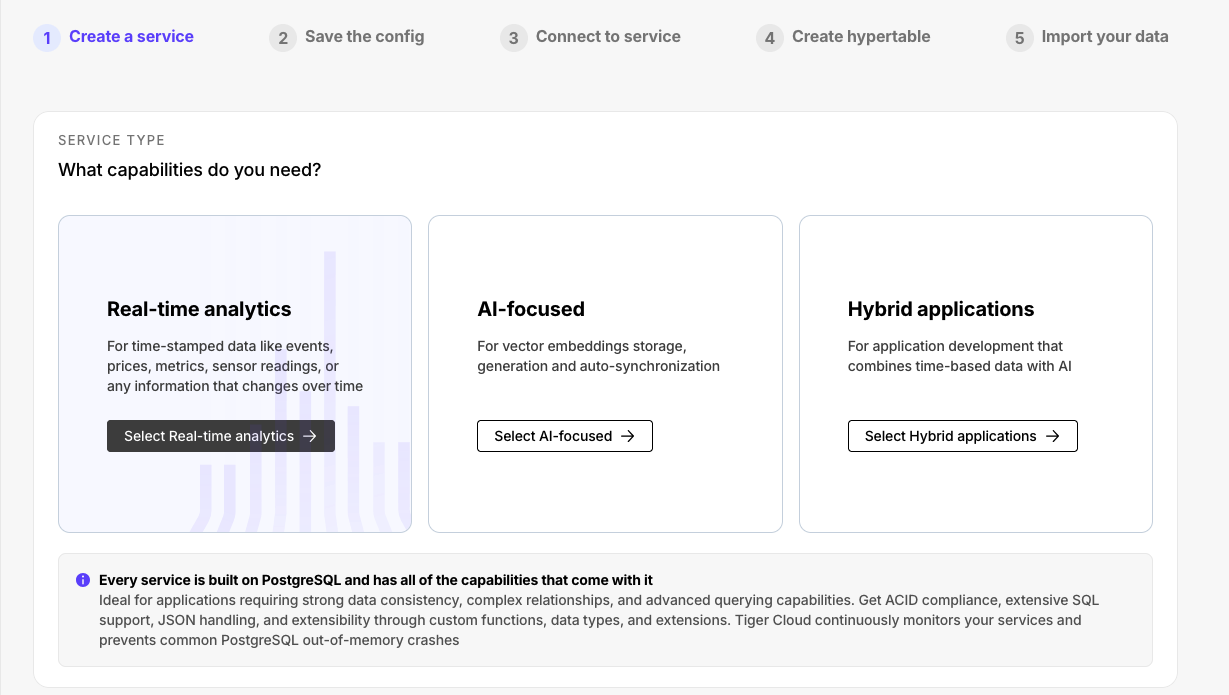

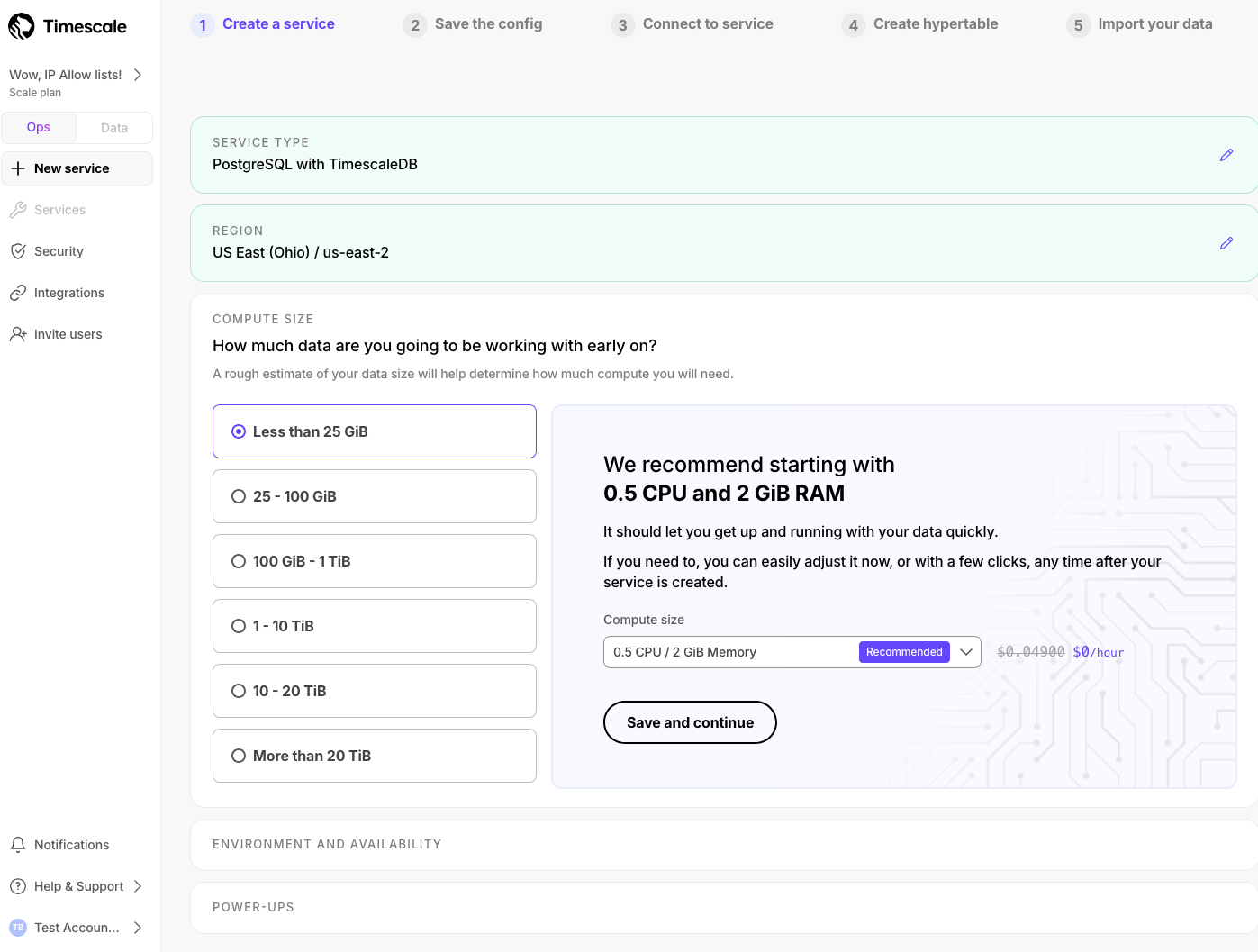

Updated service creation flow

The new service creation flow makes the choice of service type clearer. You can now create distinct types with Postgres extensions for real-time analytics (TimescaleDB), AI (pgvectorscale, pgai), and RTA/AI hybrid applications.

⚙️ Improved Terraform support and TimescaleDB v2.20.3

June 13, 2025

Terraform support for Exporters and AWS Transit Gateway

The latest version of the Timescale Terraform provider (2.3.0) adds support for:

- Creating and attaching observability exporters to your services.

- Securing the connections to your Timescale Cloud services with AWS Transit Gateway.

- Configuring CIDRs for VPC and AWS Transit Gateway connections.

Check the Timescale Terraform provider documentation for more details.

TimescaleDB v2.20.3

This patch release for TimescaleDB v2.20 includes several bug fixes and minor improvements. Notable bug fixes include:

- Adjustments to SkipScan costing for queries that require a full scan of indexed data.

- A fix for issues encountered during dump and restore operations when chunk skipping is enabled.

- Resolution of a bug related to dropped "quals" (qualifications/conditions) in SkipScan.

For a comprehensive list of changes, refer to the TimescaleDB 2.20.3 release notes.

🧘 Read replica sets, faster tables, new Anthropic models, and VPC support in data mode

June 6, 2025

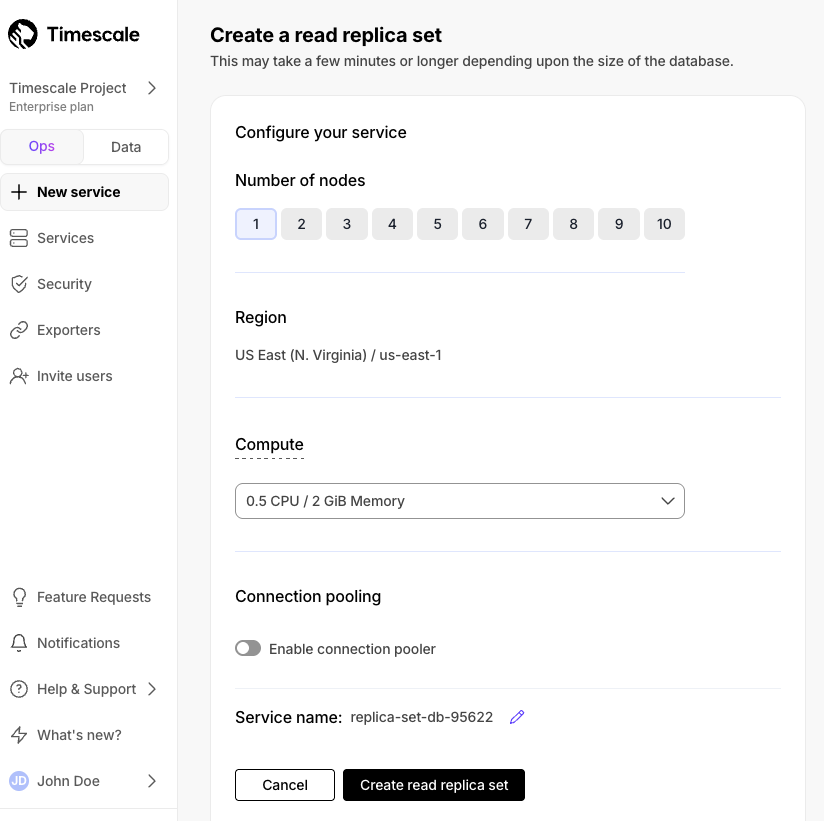

Horizontal read scaling with read replica sets

Read replica sets are an improved version of read replicas. They let you scale reads horizontally by creating up to 10 replica nodes behind a single read endpoint. Just point your read queries to the endpoint and configure the number of replicas you need without changing your application logic. You can increase or decrease the number of replicas in the set dynamically, with no impact on the endpoint.

Read replica sets are used to:

- Scale reads for read-heavy workloads and dashboards.

- Isolate internal analytics and reporting from customer-facing applications.

- Provide high availability and fault tolerance for read traffic.

All existing read replicas have been automatically upgraded to a replica set with one node—no action required. Billing remains the same.

Read replica sets are available for all Scale and Enterprise customers.

Faster, smarter results tables in data mode

We've completely rebuilt how query results are displayed in the data mode to give you a faster, more powerful way to work with your data. The new results table can handle millions of rows with smooth scrolling and instant responses when you sort, filter, or format your data. You'll find it today in notebooks and presentation pages, with more areas coming soon.

- Your settings stick around: when you customize how your table looks—applying filters, sorting columns, or formatting data—those settings are automatically saved. Switch to another tab and come back, and everything stays exactly how you left it.

- Better ways to find what you need: filter your results by any column value, with search terms highlighted so you can quickly spot what you're looking for. The search box is now available everywhere you work with data.

- Export exactly what you want: download your entire table or just select the specific rows and columns you need. Both CSV and Excel formats are supported.

- See patterns in your data: highlight cells based on their values to quickly spot trends, outliers, or important thresholds in your results.

- Smoother navigation: click any row number to see the full details in an expanded view. Columns automatically resize to show your data clearly, and web links in your results are now clickable.

As a result, working with large datasets is now faster and more intuitive. Whether you're exploring millions of rows or sharing results with your team, the new table keeps up with how you actually work with data.

Latest Anthropic models added to SQL assistant

Data mode's SQL assistant now supports Anthropic's latest models:

- Sonnet 4

- Sonnet 4 (extended thinking)

- Opus 4

- Opus 4 (extended thinking)

VPC support for passwordless data mode connections

We previously made it much easier to connect newly created services to Timescale’s data mode. We have now expanded this functionality to services using a VPC.

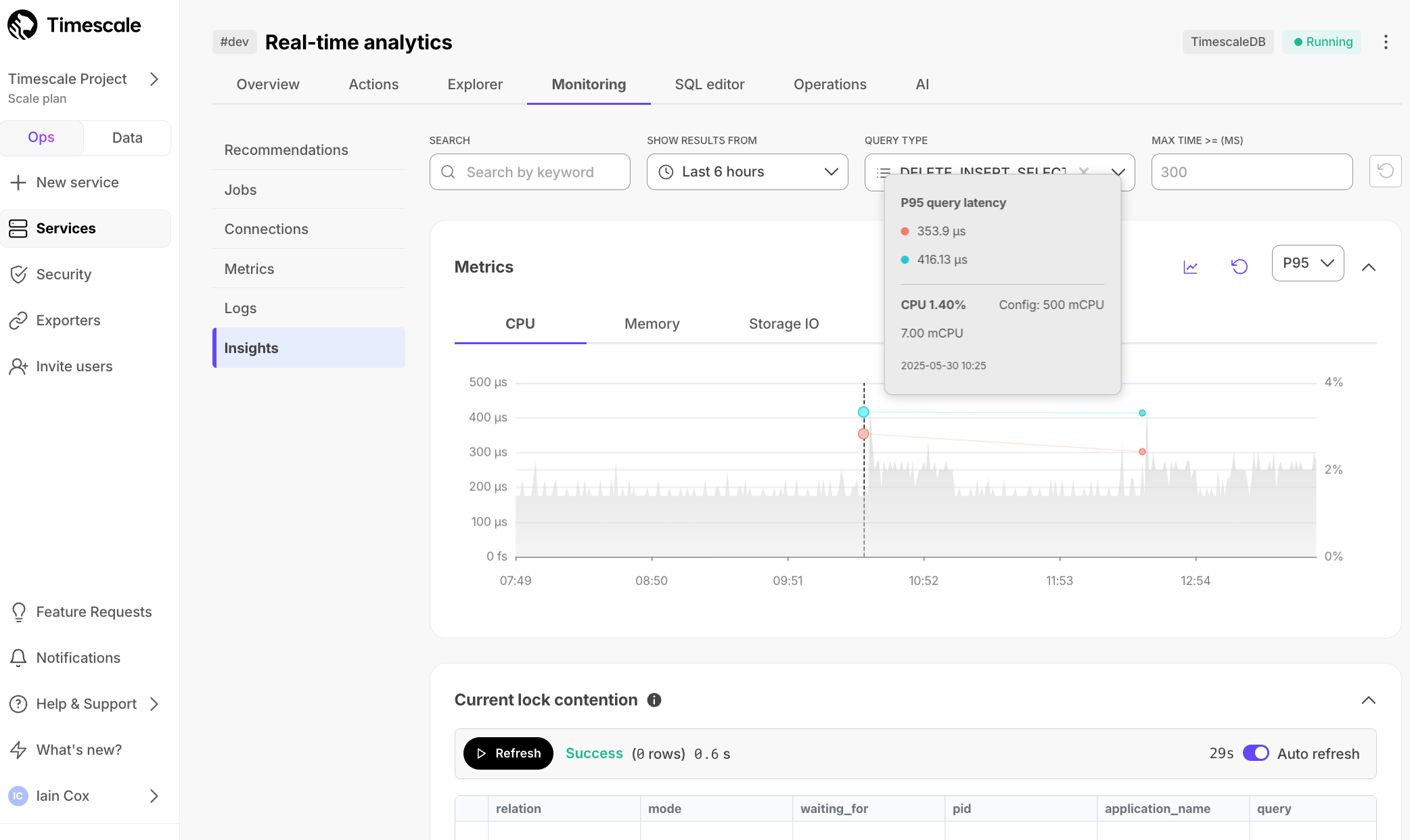

🕵🏻️ Enhanced service monitoring, TimescaleDB v2.20, and livesync for Postgres

May 30, 2025

Updated top-level navigation - Monitoring tab

In Timescale Console, we have consolidated multiple top-level service information tabs into the single Monitoring tab.

This tab houses information previously displayed in the Recommendations, Jobs, Connections, Metrics, Logs, and Insights tabs.

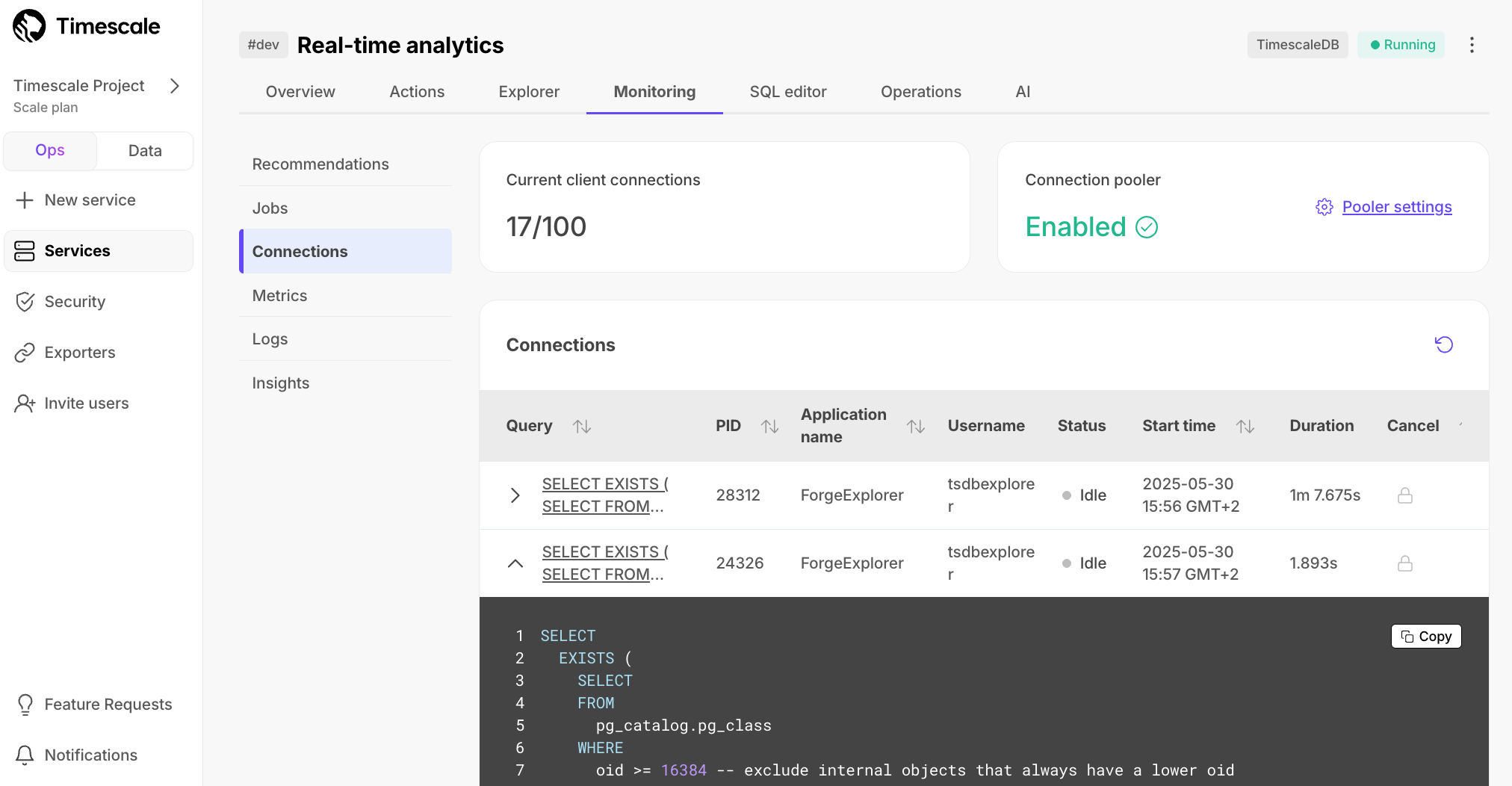

Monitor active connections

In the Connections section under Monitoring, you can now see information like the query being run, the application name, and duration for all current connections to a service.

The information in Connections enables you to debug misconfigured applications, or cancel problematic queries to free up other connections to your database.

TimescaleDB v2.20 - query performance and faster data updates

All new services created on Timescale Cloud are created using TimescaleDB v2.20. Existing services will be automatically upgraded during their maintenance window.

Highlighted features in TimescaleDB v2.20 include:

- Efficiently handle data updates and upserts (including backfills, which are now up to 10x faster).

- Up to 6x faster point queries on high-cardinality columns using new bloom filters.

- Up to 2500x faster DISTINCT operations with SkipScan, perfect for quickly getting a unique list or the latest reading from any device, event, or transaction.

- 8x more efficient Boolean column storage with vectorized processing, resulting in 30-45% faster queries.

- Enhanced developer flexibility with continuous aggregates now supporting window and mutable functions, plus customizable refresh orders.

Postgres 13 and 14 deprecated on Tiger Cloud

TimescaleDB version 2.20 is not compatible with Postgres versions v14 and below. TimescaleDB 2.19.3 is the last bug-fix release for Postgres 14. Future fixes are for Postgres 15+ only. To continue receiving critical fixes and security patches, and to take advantage of the latest TimescaleDB features, you must upgrade to Postgres 15 or newer. This deprecation affects all Tiger Cloud services currently running Postgres 13 or Postgres 14.

The timeline for the Postgres 13 and 14 deprecation is as follows:

- Deprecation notice period begins: starting in early June 2025, you will receive email communication.

- Customer self-service upgrade window: June 2025 through September 14, 2025. We strongly encourage you to manually upgrade Postgres during this period.

- Automatic upgrade deadline: your service will be automatically upgraded from September 15, 2025.

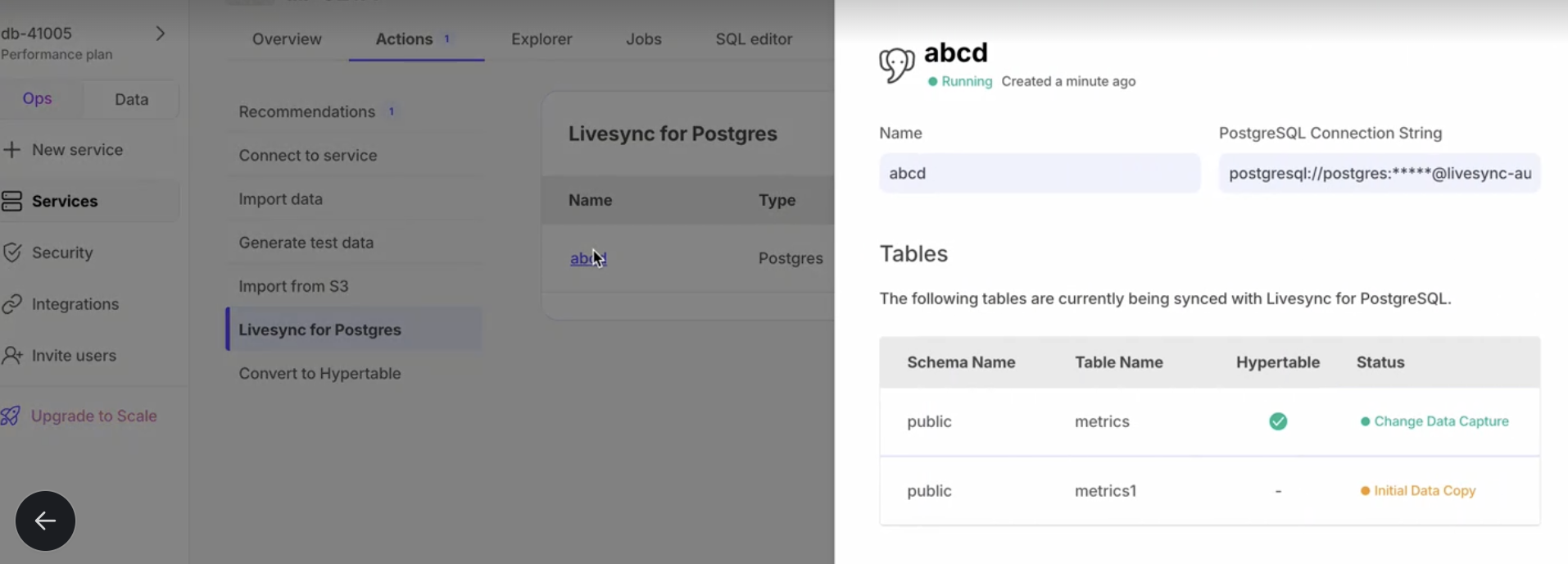

Enhancements to livesync for Postgres

You now can:

- Edit a running livesync to add and drop tables from an existing configuration:

  - For existing tables, Timescale Console stops the livesync while keeping the target table intact.

  - Newly added tables sync their existing data and transition into the Change Data Capture (CDC) state.

- Create multiple livesync instances for Postgres per service. This is an upgrade from our initial launch, which limited users to one livesync per service. This enables you to sync data from multiple Postgres source databases into a single Timescale Cloud service.

- No more hassle looking up schema and table names for livesync configuration from the source. Starting today, all schema and table names are available in a dropdown menu for seamless source table selection.

➕ More storage types and IOPS

May 22, 2025

🚀 Enhanced storage: scale to 64 TB and 32,000 IOPS

We're excited to introduce enhanced storage, a new storage type in Timescale Cloud that significantly boosts both capacity and performance. It is designed for customers with mission-critical workloads.

With enhanced storage, Timescale Cloud now supports:

- Up to 64 TB of storage per Timescale Cloud service (4x increase from the previous limit)

- Up to 32,000 IOPS, enabling high-throughput ingest and low-latency queries

Powered by AWS io2 volumes, enhanced storage gives your workloads the headroom they need—whether you're building financial data pipelines, developing IoT platforms, or processing billions of rows of telemetry. No more worrying about storage ceilings or IOPS bottlenecks.

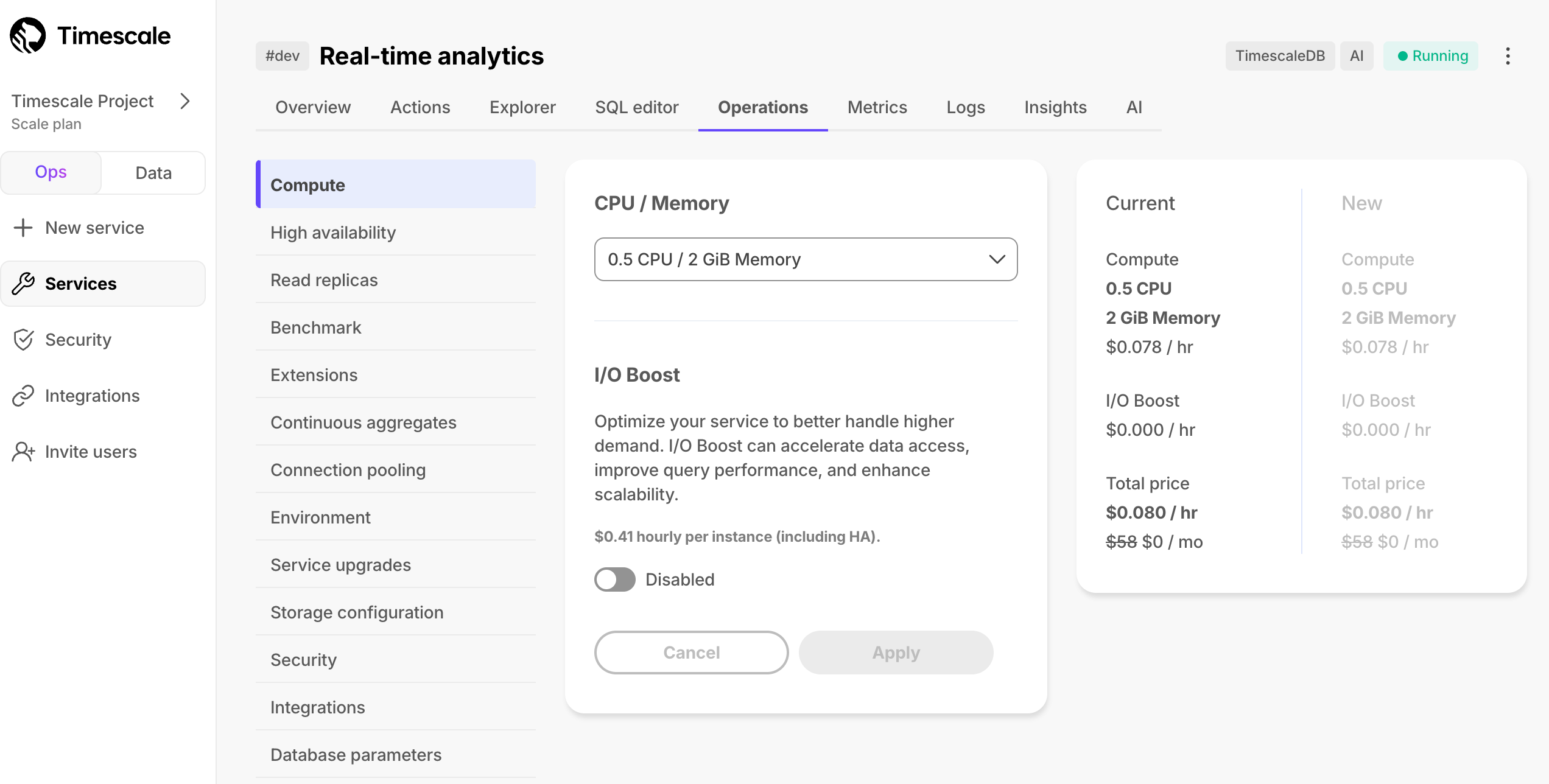

Enable enhanced storage in Timescale Console under Operations → Compute & Storage. Enhanced storage is currently available on the Enterprise pricing plan only. Learn more here.

↔️ New export and import options

May 15, 2025

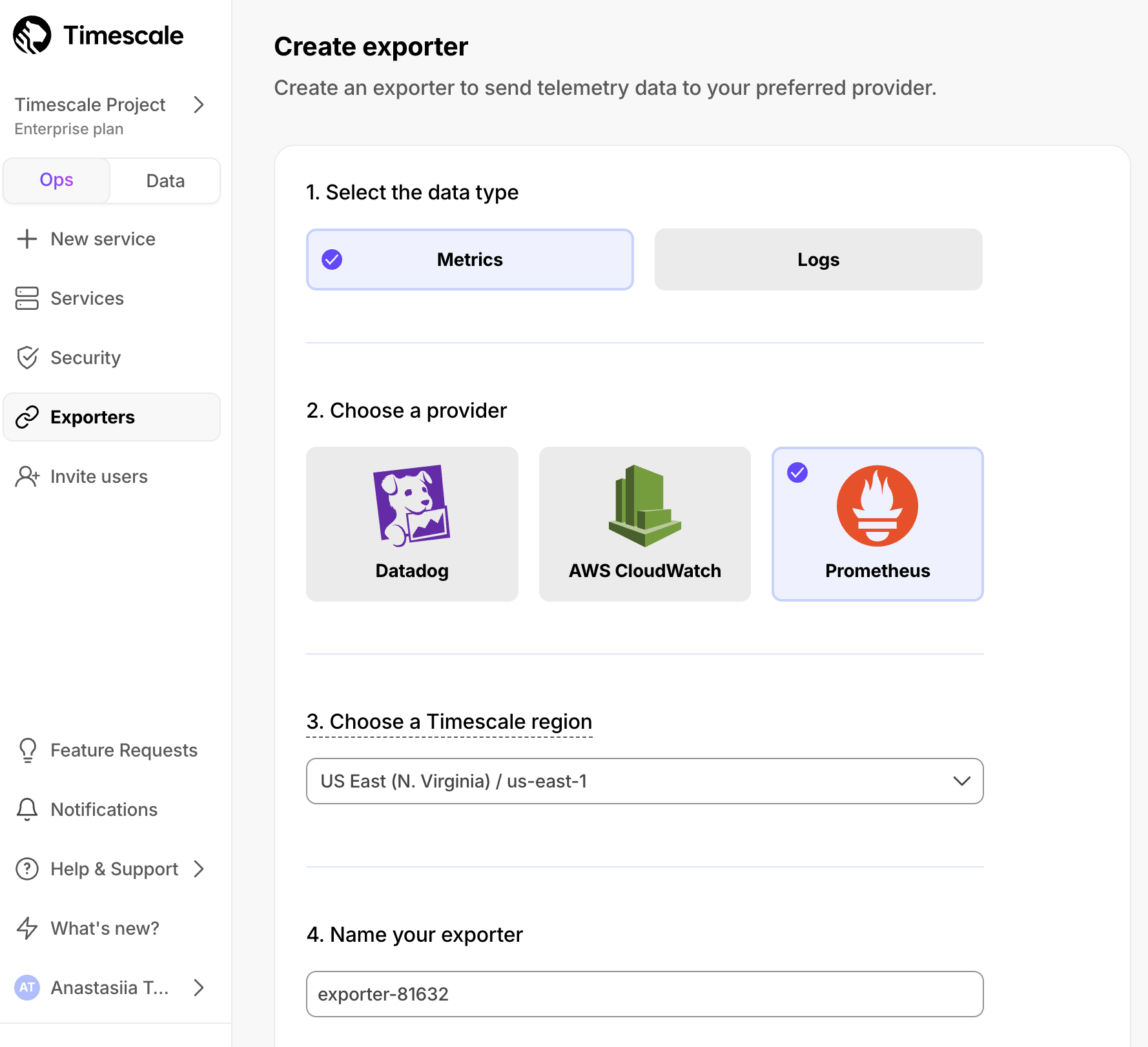

🔥 Ship TimescaleDB metrics to Prometheus

We’re excited to release the Prometheus Exporter for Timescale Cloud, making it easy to ship TimescaleDB metrics to your Prometheus instance. With the Prometheus Exporter, you can:

- Export TimescaleDB metrics like CPU, memory, and storage

- Visualize usage trends with your own Grafana dashboards

- Set alerts for high CPU load, low memory, or storage nearing capacity

To get started, create a Prometheus Exporter in the Timescale Console, attach it to your service, and configure Prometheus to scrape from the exposed URL. Metrics are secured with basic auth. Available on Scale and Enterprise plans. Learn more here.

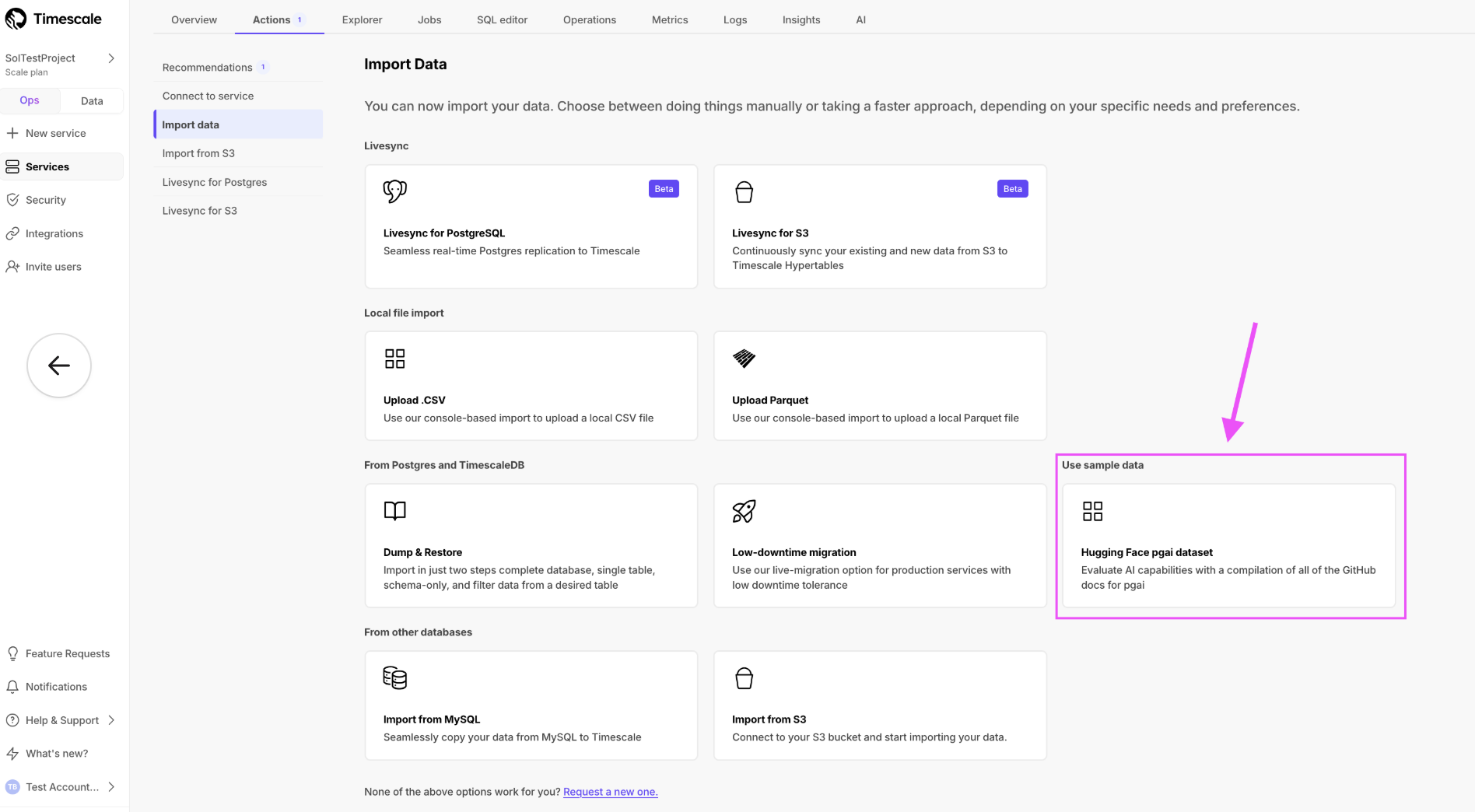

📥 Import text files into Postgres tables

Our import options in Timescale Console have expanded to include local text files. You can add the content of multiple text files (one file per row) into a Postgres table for use with Vectorizers while creating embeddings for evaluation and development. This new option is located in Service > Actions > Import Data.

🤖 Automatic document embeddings from S3 and a sample dataset for AI testing

May 09, 2025

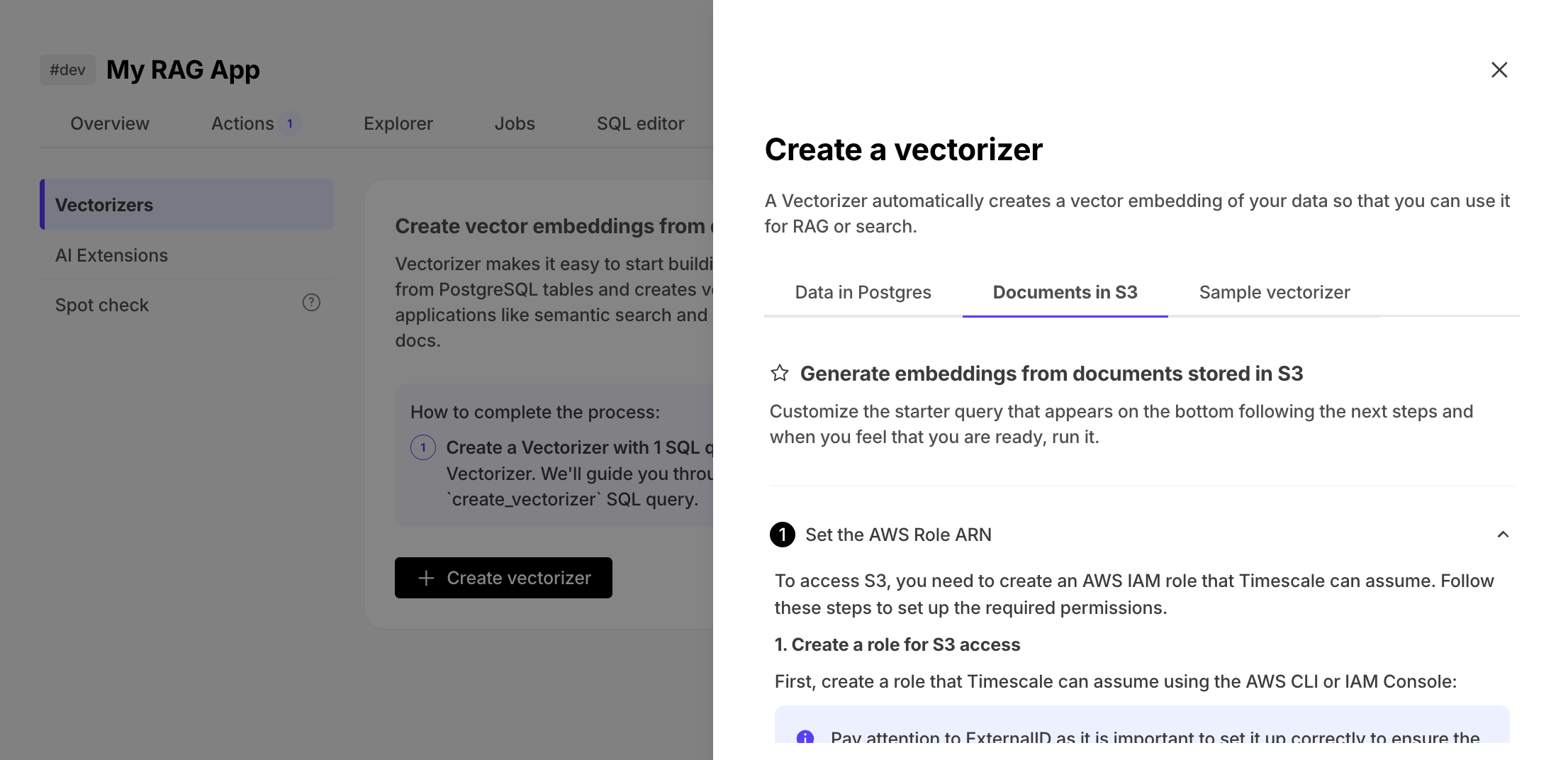

Automatic document embeddings from S3

pgai vectorizer now supports automatic document vectorization. This makes it dramatically easier to build RAG and semantic search applications on top of unstructured data stored in Amazon S3. With just a SQL command, developers can create, update, and synchronize vector embeddings from a wide range of document formats—including PDFs, DOCX, XLSX, HTML, and more—without building or maintaining complex ETL pipelines.

Instead of juggling multiple systems and syncing metadata, vectorizer handles the entire process: downloading documents from S3, parsing them, chunking text, and generating vector embeddings stored right in Postgres using pgvector. As documents change, embeddings stay up-to-date automatically—keeping your Postgres database the single source of truth for both structured and semantic data.

Sample dataset for AI testing

You can now import a dataset directly from Hugging Face using Timescale Console. This dataset is ideal for testing vectorizers; you can find it on the Import Data page under the Service > Actions tab.

🔁 Livesync for S3 and passwordless connections for data mode

April 25, 2025

Livesync for S3 (beta)

Livesync for S3 is our second livesync offering in Timescale Console, following livesync for Postgres. This feature helps users sync data in their S3 buckets to a Timescale Cloud service, and simplifies data importing. Livesync handles both existing and new data in real time, automatically syncing everything into a Timescale Cloud service. Users can integrate Timescale Cloud alongside S3, where S3 stores data in raw form as the source for multiple destinations.

With livesync, users can connect Timescale Cloud with S3 in minutes, rather than spending days setting up and maintaining an ingestion layer.

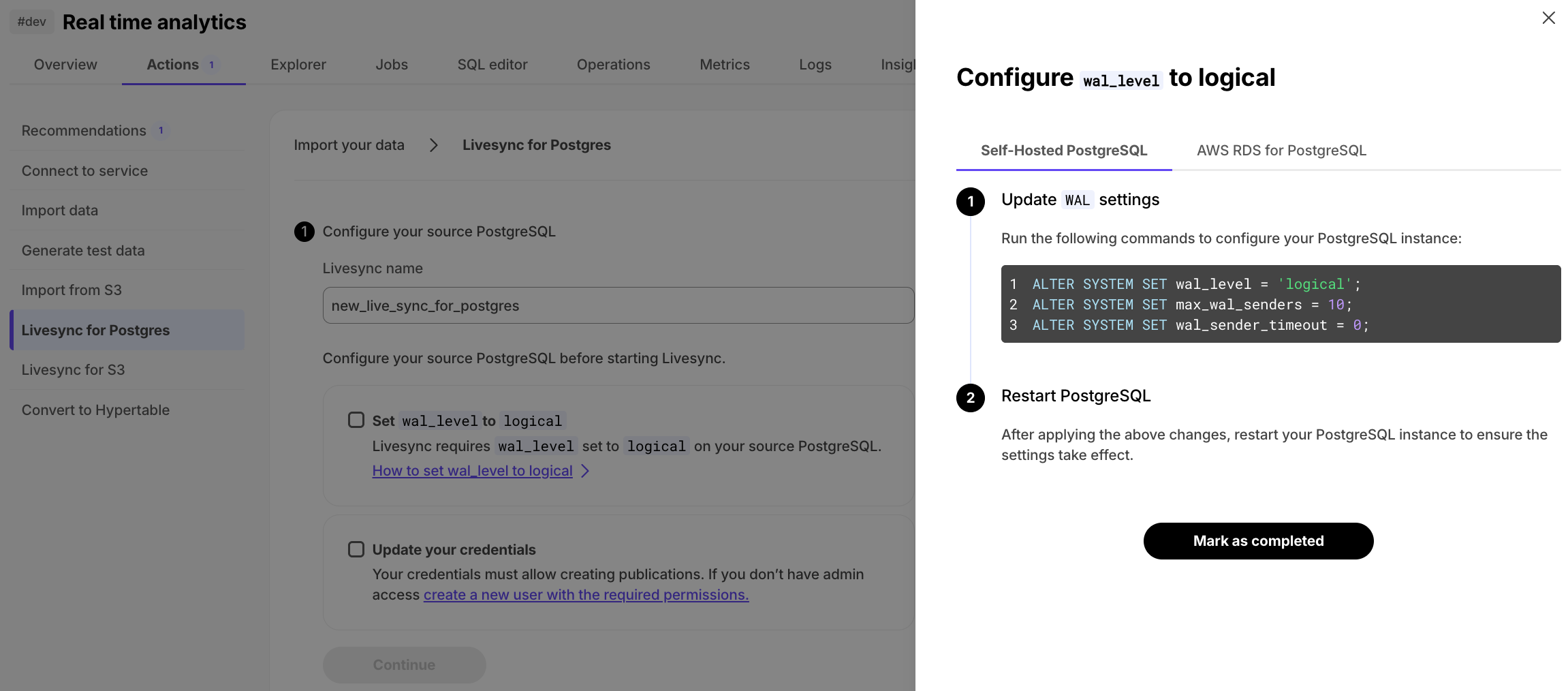

UX improvements to livesync for Postgres

In livesync for Postgres, getting started requires setting `wal_level` to `logical` and granting specific permissions to create a publication on the source database. To simplify this setup process, we have added a detailed two-step checklist with comprehensive configuration instructions to Timescale Console.

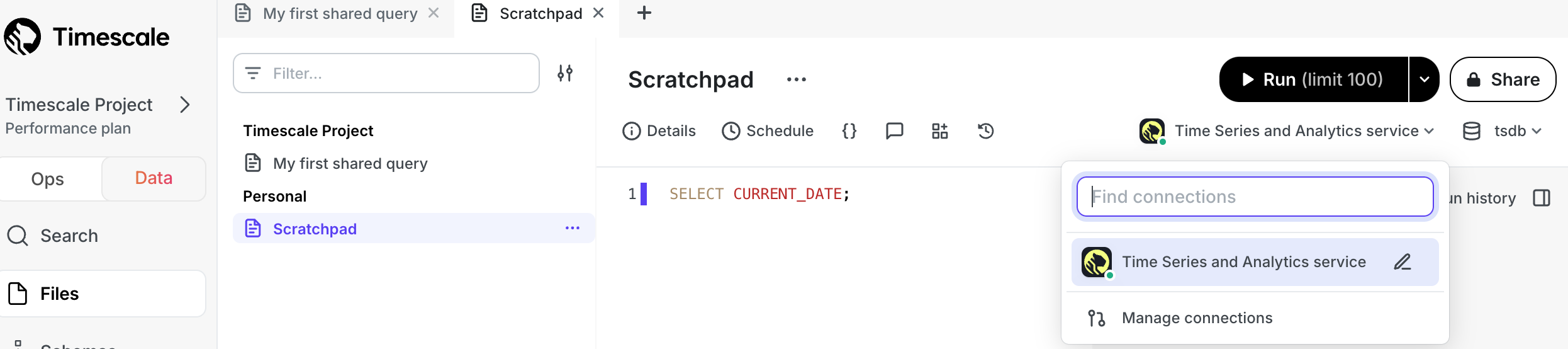

Passwordless data mode connections

We’ve made connecting to your Timescale Cloud services from data mode in Timescale Console even easier! All new services created in Timescale Cloud are now automatically accessible from data mode without requiring you to enter your service credentials. Just open data mode, select your service, and start querying.

We will be expanding this functionality to existing services in the coming weeks (including services using VPC peering), so stay tuned.

☑️ Embeddings spot checks, TimescaleDB v2.19.3, and new models in SQL Assistant

April 18, 2025

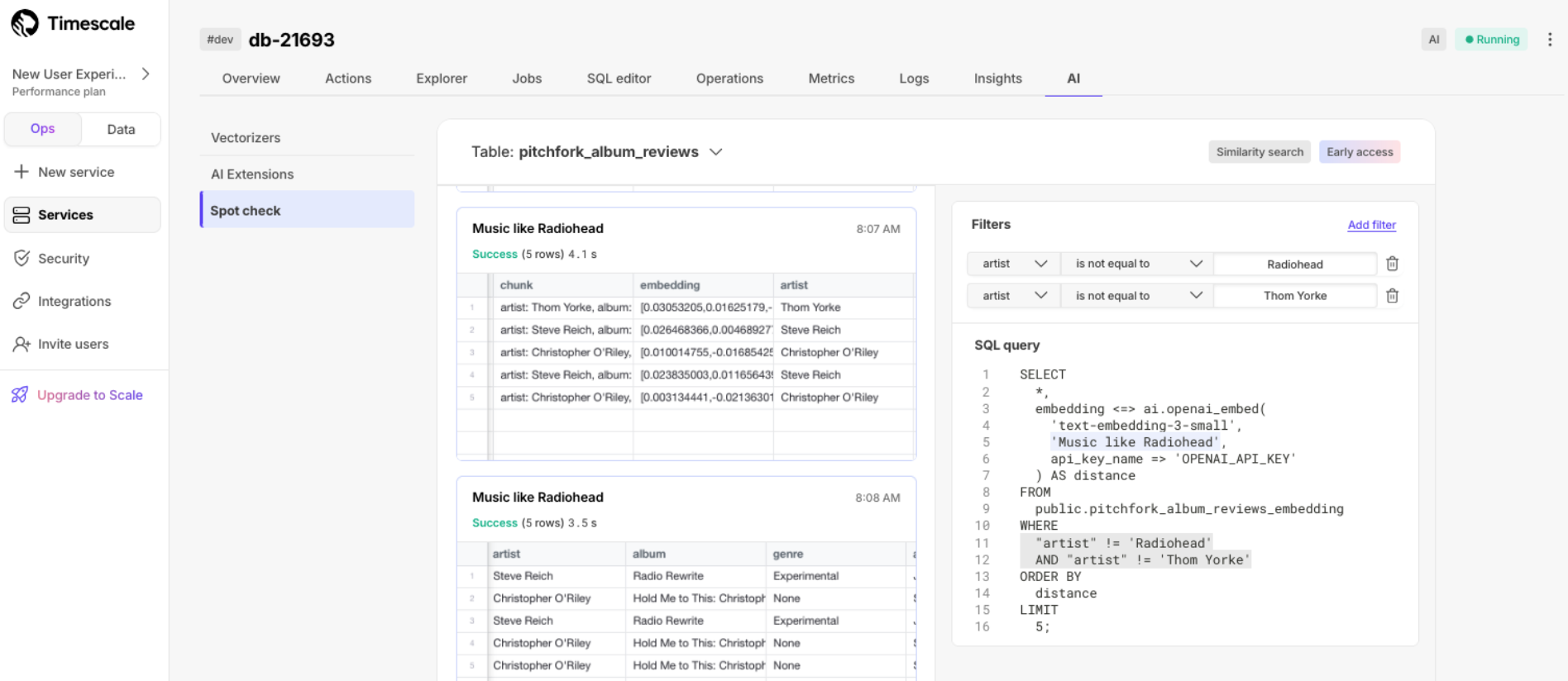

Embeddings spot checks

In Timescale Cloud, you can now quickly check the quality of the embeddings produced by your vectorizers. Construct a similarity search query with additional filters on source metadata using a simple UI. Run the query right away, or copy it to the SQL editor or data mode and customize it further. Run the check in Timescale Console > Services > AI.

TimescaleDB v2.19.3

New services created in Timescale Cloud now use TimescaleDB v2.19.3. Existing services are in the process of being automatically upgraded to this version.

This release adds a number of bug fixes including:

- Fix segfault when running a query against columnstore chunks that group by multiple columns, including UUID segmentby columns.

- Fix hypercore table access method segfault on DELETE operations using a segmentby column.

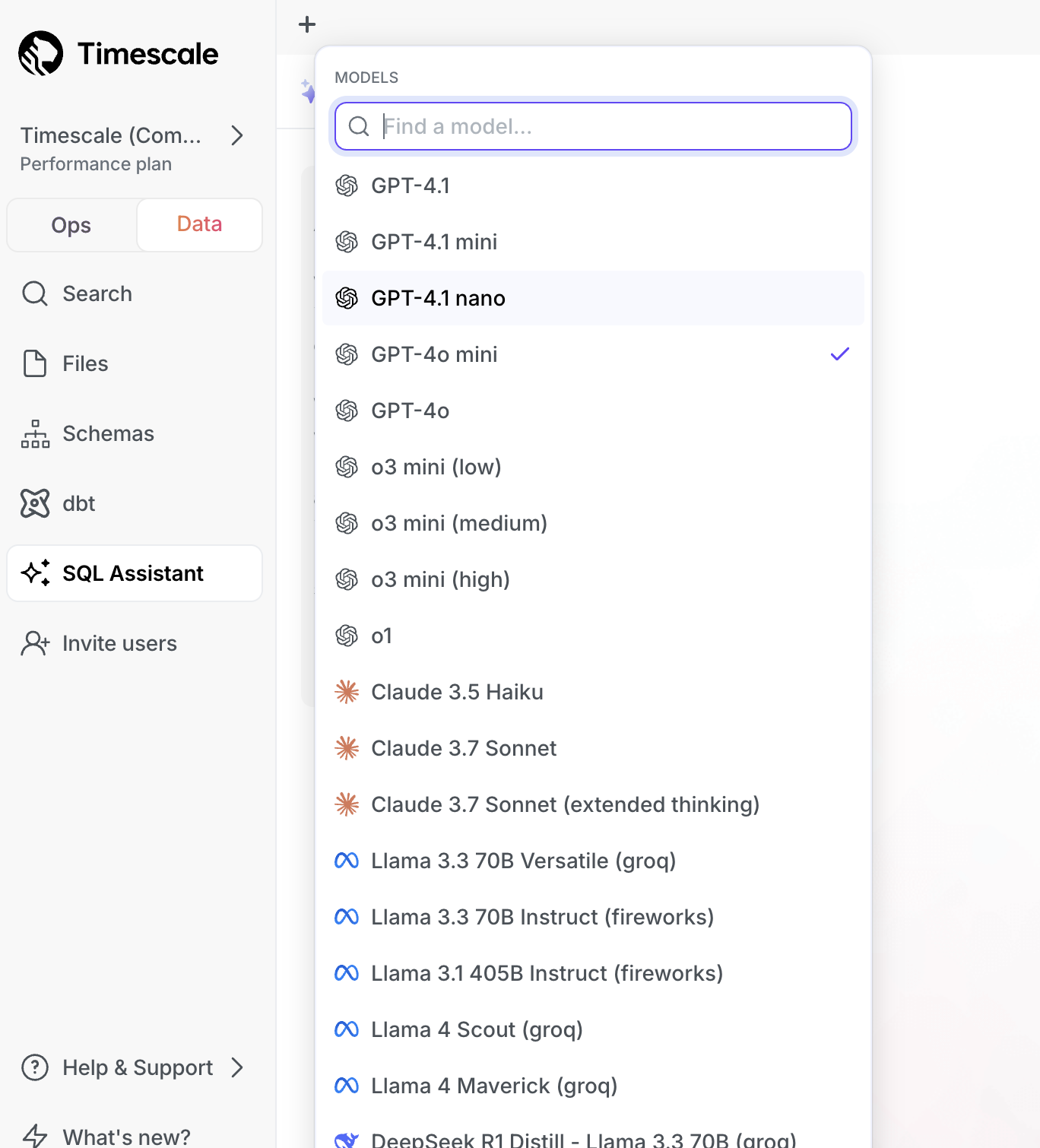

New OpenAI, Llama, and Gemini models in SQL Assistant

The data mode's SQL Assistant now includes support for the latest OpenAI and Llama models: GPT-4.1 (including mini and nano) and Llama 4 (Scout and Maverick). Additionally, we've added support for Gemini models, in particular Gemini 2.0 Nano and 2.5 Pro (experimental and preview). With these additions, SQL Assistant supports more than 20 language models, so you can select the one best suited to your needs.

🪵 TimescaleDB v2.19, new service overview page, and log improvements

April 11, 2025

TimescaleDB v2.19—query performance and concurrency improvements

Starting this week, all new services created on Timescale Cloud use TimescaleDB v2.19. Existing services will be upgraded gradually during their maintenance window.

Highlighted features in TimescaleDB v2.19 include:

- Improved concurrency of `INSERT`, `UPDATE`, and `DELETE` operations on the columnstore: DML statements are no longer blocked during the recompression of a chunk.

- Improved system performance during continuous aggregate refreshes by breaking them into smaller batches. This reduces system pressure and minimizes the risk of spilling to disk.

- Faster and more up-to-date results for queries against continuous aggregates by materializing the most recent data first, as opposed to old data first in prior versions.

- Faster analytical queries with SIMD vectorization of aggregations over text columns and `GROUP BY` over multiple columns.

- Chunk size optimization for better query performance in the columnstore, by merging chunks with `merge_chunk`.
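The batching and newest-first ordering of continuous aggregate refreshes can be illustrated with a minimal Python sketch. The function `refresh_batches` and the three-day batch width are hypothetical; the sketch models only the idea of splitting a refresh window into smaller pieces processed from newest to oldest, not TimescaleDB's internal refresh code or its configuration options.

```python
from datetime import datetime, timedelta


def refresh_batches(
    start: datetime, end: datetime, batch: timedelta
) -> list[tuple[datetime, datetime]]:
    """Split the refresh window [start, end) into windows of at most `batch`,
    ordered newest first (hypothetical helper, not a TimescaleDB API)."""
    if batch <= timedelta(0):
        raise ValueError("batch must be a positive interval")
    windows: list[tuple[datetime, datetime]] = []
    upper = end
    while upper > start:
        lower = max(start, upper - batch)  # clamp the oldest window to start
        windows.append((lower, upper))
        upper = lower
    return windows


if __name__ == "__main__":
    for lower, upper in refresh_batches(
        datetime(2025, 4, 1), datetime(2025, 4, 8), timedelta(days=3)
    ):
        print(f"refresh {lower:%Y-%m-%d} .. {upper:%Y-%m-%d}")
```

Processing the newest window first means the most recent aggregates become queryable before older history is revisited, and each small window bounds how much work a single step does.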

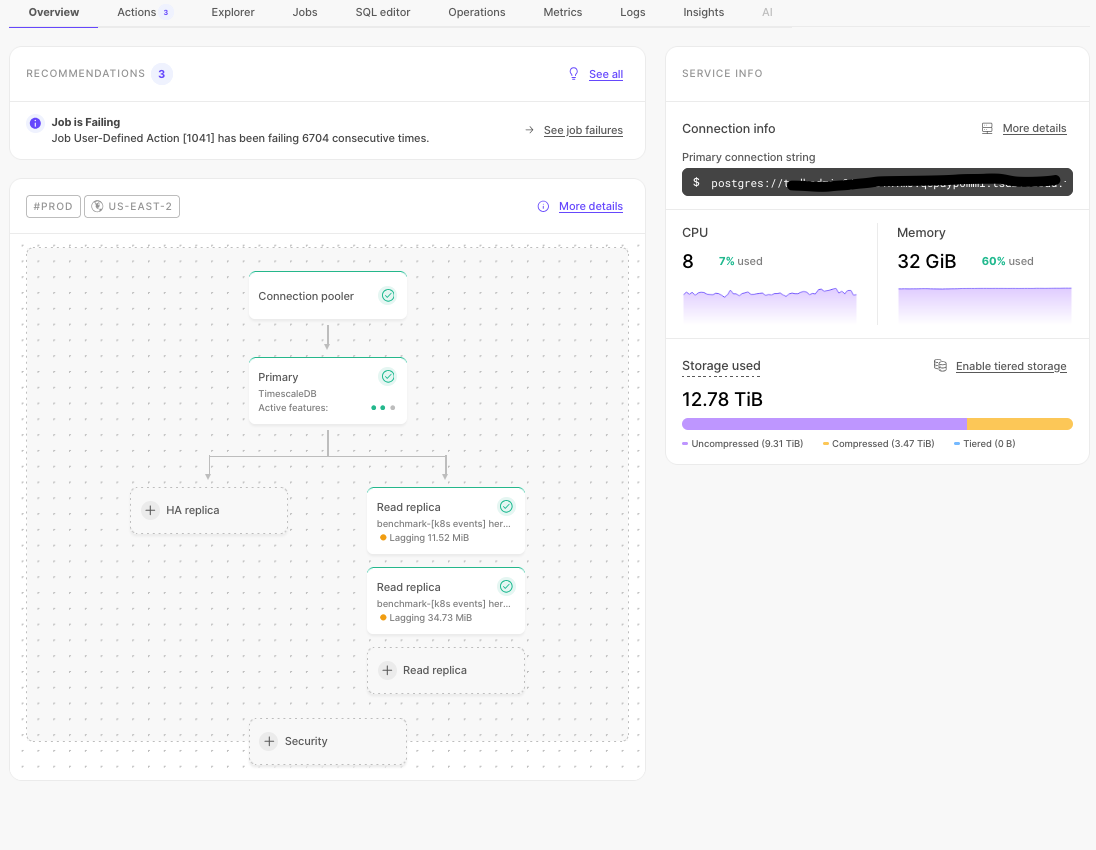

New service overview page

The service overview page in Timescale Console has been overhauled to make it simpler and easier to use. Navigate to the Overview tab for any of your services and you will find an architecture diagram and general information about the service. You may also see recommendations at the top on how to optimize your service.

To leave the product team your feedback, open Help & Support on the left and select Send feedback to the product team.

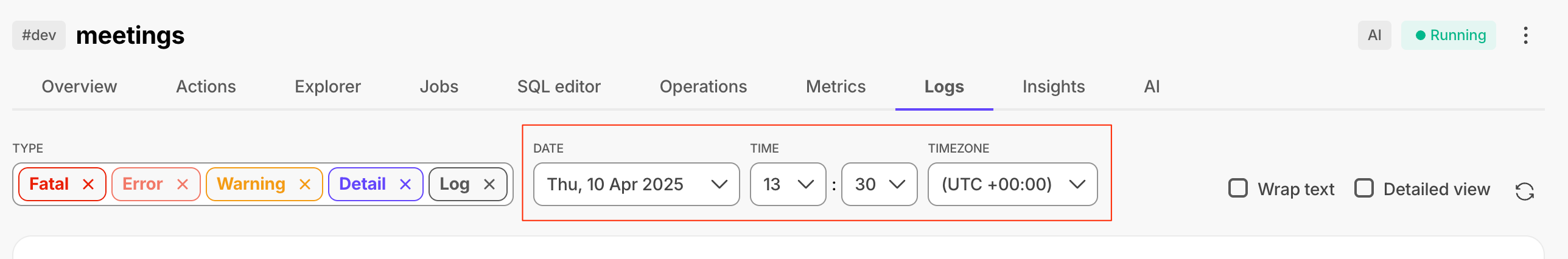

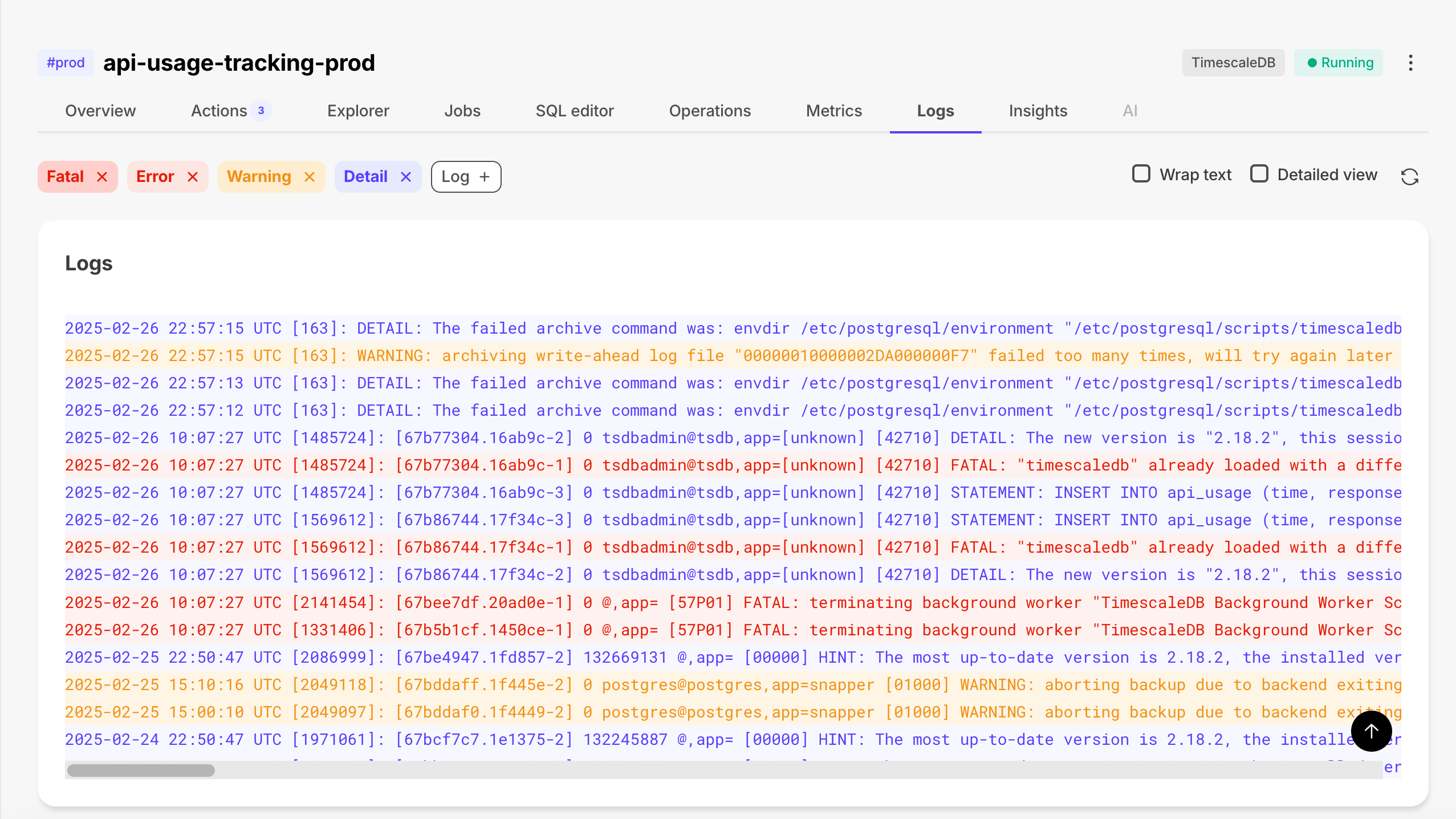

Finding logs just got easier! We've added a date, time, and timezone picker, so you can jump straight to the exact moment you're interested in—no more endless scrolling.

📒 Faster vector search and improved job information

April 4, 2025

pgvectorscale 0.7.0: faster filtered vector search with filtered indexes

This pgvectorscale release adds label-based filtered vector search to the StreamingDiskANN index. This enables you to return more precise and efficient results by combining vector similarity search with label filtering while still utilizing the ANN index. This is a common need for large-scale RAG and agentic applications that rely on vector searches with metadata filters to return relevant results. Filtered indexes add even more capabilities for filtered search at scale, complementing the high-accuracy streaming filtering already present in pgvectorscale. The implementation is inspired by Microsoft's Filtered DiskANN research. For more information, see the [pgvectorscale release notes][log-28032025-pgvectorscale-rn] and a [usage example][log-28032025-pgvectorscale-example].
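The idea of combining similarity ranking with a label filter can be shown with a brute-force Python sketch. It only illustrates the query semantics: it scans every vector instead of using a StreamingDiskANN index, and it assumes a row matches when it shares at least one label with the query. The names `filtered_search` and `docs` are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def filtered_search(
    query: list[float],
    items: list[tuple[str, list[float], set[int]]],
    required_labels: set[int],
    k: int,
) -> list[str]:
    """Brute-force top-k by cosine similarity, restricted to items that share
    at least one label with `required_labels` (assumed match rule)."""
    # Apply the label filter first, then rank only the surviving candidates.
    candidates = [
        (item_id, vec) for item_id, vec, labels in items if labels & required_labels
    ]
    candidates.sort(key=lambda c: cosine(query, c[1]), reverse=True)
    return [item_id for item_id, _ in candidates[:k]]


if __name__ == "__main__":
    docs = [
        ("a", [1.0, 0.0], {1}),
        ("b", [0.9, 0.1], {2}),
        ("c", [0.0, 1.0], {1}),
        ("d", [0.7, 0.7], {1, 3}),
    ]
    # "b" is very close to the query but is excluded because it lacks label 1.
    print(filtered_search([1.0, 0.0], docs, {1}, k=2))
```

An index-backed filtered search aims to return the same kind of answer without scoring every row, which is what makes it practical at scale.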

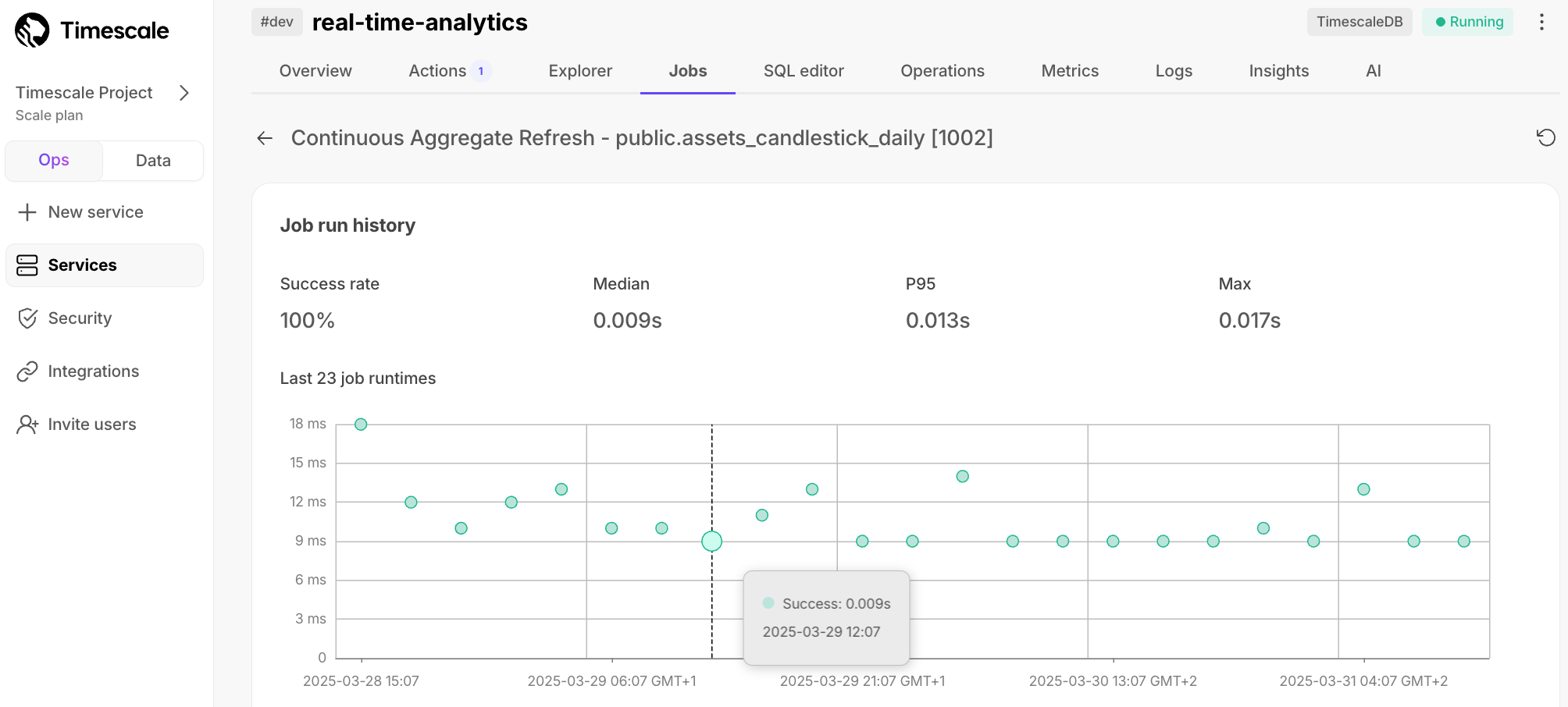

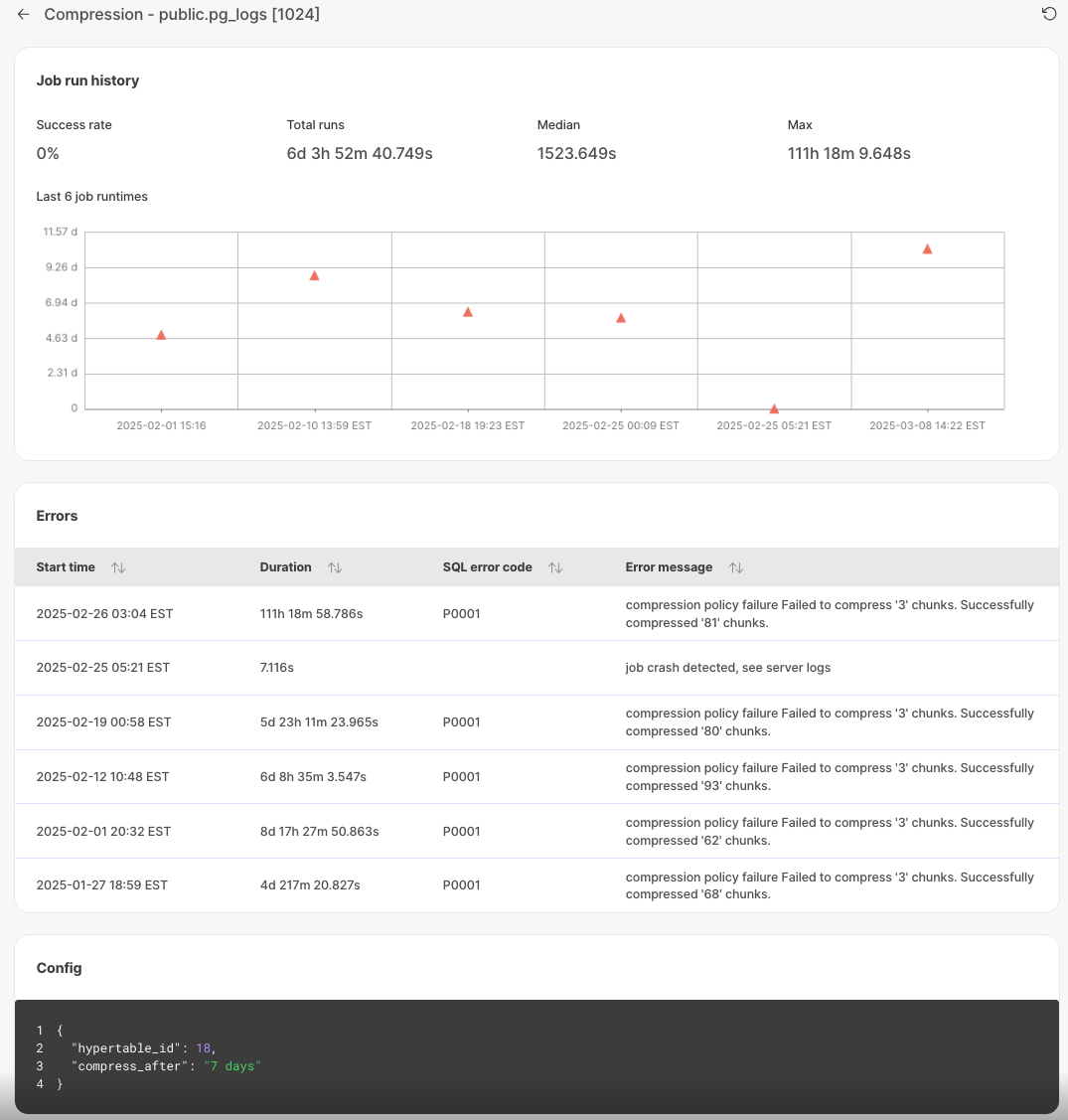

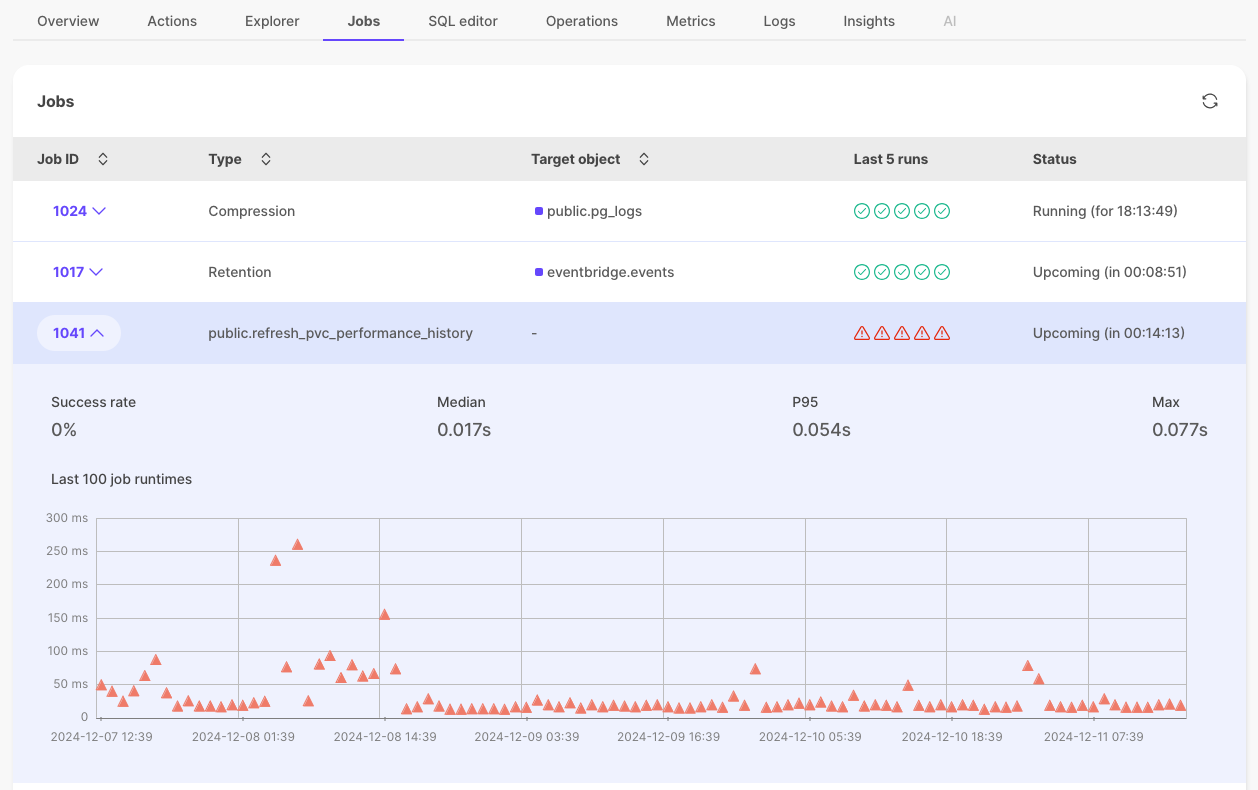

Job errors and individual job pages

Each job now has an individual page in Timescale Console, which displays additional details about job errors. Use this information to debug failing jobs.

To see the job information page, in [Timescale Console][console], select the service to check, then click Jobs > job ID to investigate.

- Unsuccessful jobs with errors:

🤩 In-Console Livesync for Postgres

March 21, 2025

You can now set up an active data ingestion pipeline with livesync for Postgres in Timescale Console. This tool enables you to replicate your source database tables into Timescale's hypertables indefinitely. Yes, you heard that right—keep livesync running for as long as you need, ensuring that your existing source Postgres tables stay in sync with Timescale Cloud. Read more about setting up and using Livesync for Postgres.

💾 16K dimensions on pgvectorscale plus new pgai Vectorizer support

March 14, 2025

pgvectorscale 0.6 — store up to 16K dimension embeddings

pgvectorscale 0.6.0 now supports storing vectors with up to 16,000 dimensions, removing the previous limitation of 2,000 from pgvector. This lets you use larger embedding models like OpenAI's text-embedding-3-large (3072 dim) with Postgres as your vector database. This release also includes key performance and capability enhancements, including NEON support for SIMD distance calculations on aarch64 processors, improved inner product distance metric implementation, and improved index statistics. See the release details here.

pgai Vectorizer supports models from AWS Bedrock, Azure AI, Google Vertex via LiteLLM

Access embedding models from popular cloud model hubs like AWS Bedrock, Azure AI Foundry, Google Vertex, as well as HuggingFace and Cohere as part of the LiteLLM integration with pgai Vectorizer. To use these models with pgai Vectorizer on Timescale Cloud, select Other when adding the API key in the credentials section of Timescale Console.

🤖 Agent Mode for PopSQL and more

March 7, 2025

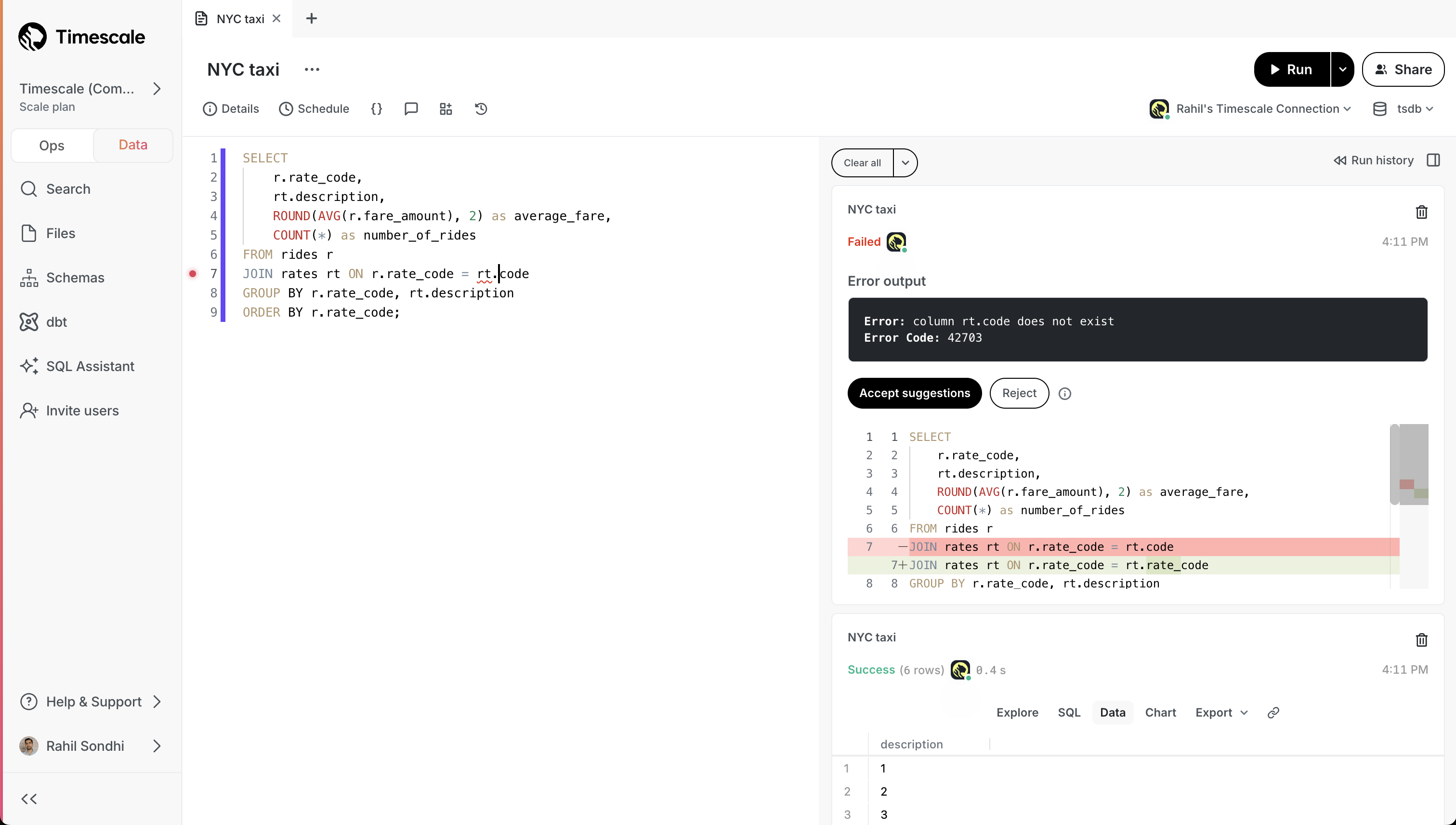

Agent Mode for PopSQL

Introducing Agent Mode, a new feature in Timescale Console SQL Assistant. SQL Assistant lets you query your database using natural language. However, when a query runs into errors, you have to approve each of the Assistant's suggested fixes yourself.

With Agent Mode on, SQL Assistant automatically adjusts and executes your query without intervention. It runs, diagnoses, and fixes any errors that it runs into until you get your desired results.

Below you can see SQL Assistant run into an error, identify the resolution, execute the fixed query, display results, and even change the title of the query:

To use Agent Mode, make sure you have SQL Assistant enabled, then click on the model selector dropdown, and tick the Agent Mode checkbox.

Improved AWS Marketplace integration for a smoother experience

We've enhanced the AWS Marketplace workflow to make your experience even better! Now, everything is fully automated, ensuring a seamless process from setup to billing. If you're using the AWS Marketplace integration, you'll notice a smoother transition and clearer billing visibility—your Timescale Cloud subscription will be reflected directly in AWS Marketplace!

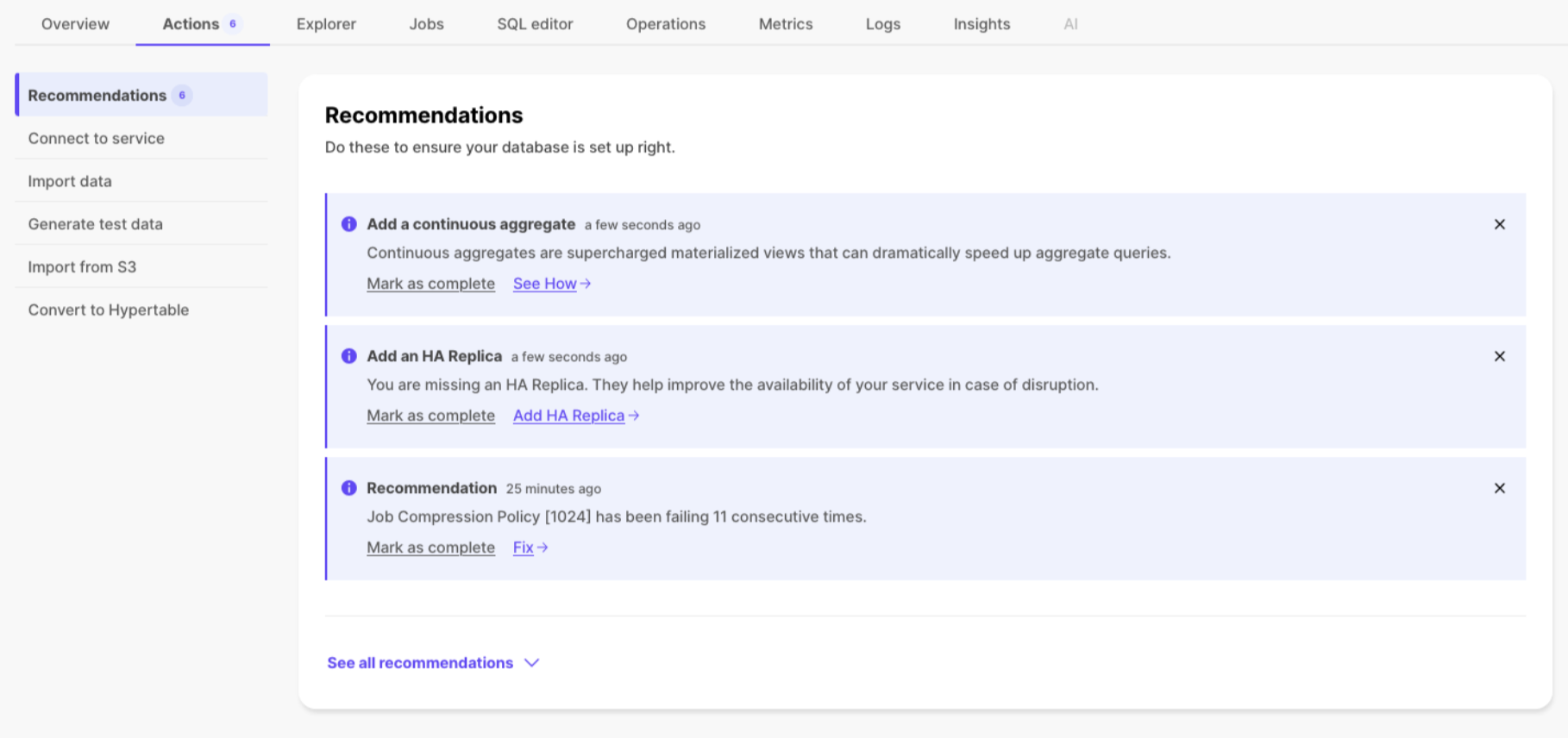

Timescale Console recommendations

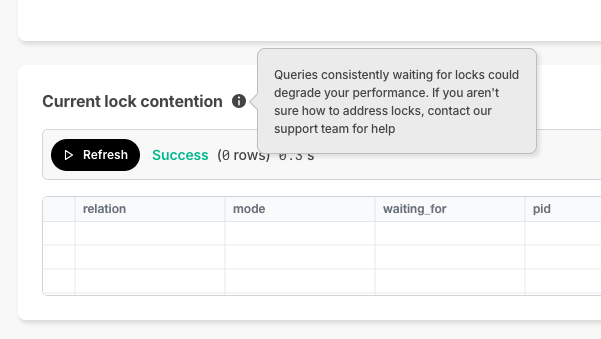

Sometimes it can be hard to know if you are getting the best use out of your service. To help with this, Timescale Cloud now provides recommendations based on your service's context, assisting with onboarding or notifying you of configuration concerns with your service, such as consistently failing jobs.

To start, recommendations are focused primarily on onboarding or service health, though we will regularly add new ones. You can see if you have any existing recommendations for your service by going to the Actions tab in Timescale Console.

🛣️ Configuration Options for Secure Connections and More

February 28, 2025

Edit VPC and AWS Transit Gateway CIDRs

You can now modify the CIDR blocks for your VPC or Transit Gateway directly from Timescale Console, giving you greater control over network access and security. This update makes it easier to adjust your private networking setup without needing to recreate your VPC or contact support.
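Before changing a CIDR block, it can help to confirm locally that the new range does not collide with ranges already in use, since peered networks generally must not use overlapping address ranges. The following is a minimal sketch using Python's standard `ipaddress` module. The function name and the CIDR values are made-up examples, and this local sanity check makes no assumption about what validation Timescale Console itself performs.

```python
import ipaddress


def overlapping_blocks(candidate: str, existing: list[str]) -> list[str]:
    """Return every CIDR in `existing` that overlaps the `candidate` block."""
    new_net = ipaddress.ip_network(candidate)  # raises ValueError on a bad CIDR
    return [
        cidr for cidr in existing if new_net.overlaps(ipaddress.ip_network(cidr))
    ]


if __name__ == "__main__":
    in_use = ["10.0.0.0/16", "172.31.0.0/16"]  # hypothetical ranges already in use
    for proposed in ["10.0.128.0/20", "192.168.0.0/24"]:
        clashes = overlapping_blocks(proposed, in_use)
        print(f"{proposed}: {clashes if clashes else 'no overlap'}")
```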

Improved log filtering

We’ve enhanced the Logs screen with the new Warning and Log filters to help you quickly find the logs you need. These additions complement the existing Fatal, Error, and Detail filters, making it easier to pinpoint specific events and troubleshoot issues efficiently.

TimescaleDB v2.18.2 on Timescale Cloud

New services created in Timescale Cloud now use TimescaleDB v2.18.2. Existing services are in the process of being automatically upgraded to this version.

This new release fixes a number of bugs including:

- Fix `ExplainHook` breaking the call chain.

- Respect `ExecutorStart` hooks of other extensions.

- Block dropping internal compressed chunks with `drop_chunk()`.

SQL Assistant improvements

- Support for Claude 3.7 Sonnet and extended thinking including reasoning tokens.

- Ability to abort SQL Assistant requests while the response is streaming.

🤖 SQL Assistant Improvements and Pgai Docs Reorganization

February 21, 2025

New models and improved UX for SQL Assistant

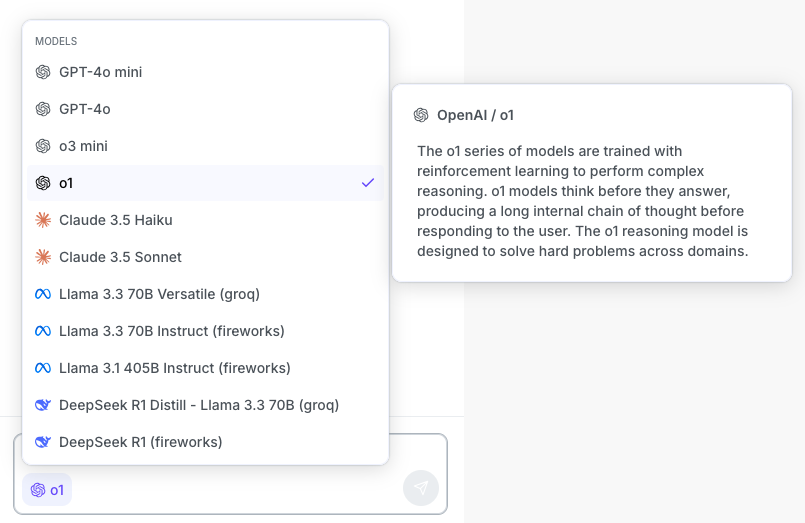

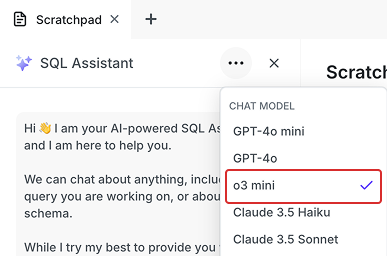

We have added fireworks.ai and Groq as service providers, and several new LLM options for SQL Assistant:

- OpenAI o1

- DeepSeek R1

- Llama 3.3 70B

- Llama 3.1 405B

- DeepSeek R1 Distill - Llama 3.3

We've also improved the model picker by adding descriptions for each model:

Updated and reorganized docs for pgai

We have improved the GitHub docs for pgai. Now relevant sections have been grouped into their own folders and we've created a comprehensive summary doc. Check it out here.

💘 TimescaleDB v2.18.1 and AWS Transit Gateway Support Generally Available

February 14, 2025

TimescaleDB v2.18.1

New services created in Timescale Cloud now use TimescaleDB v2.18.1. Existing services will be automatically upgraded in their next maintenance window starting next week.

This new release includes a number of bug fixes and small improvements including:

- Faster columnar scans when using the hypercore table access method

- Ensure all constraints are always applied when deleting data on the columnstore

- Push down all filters on scans for UPDATE/DELETE operations on the columnstore

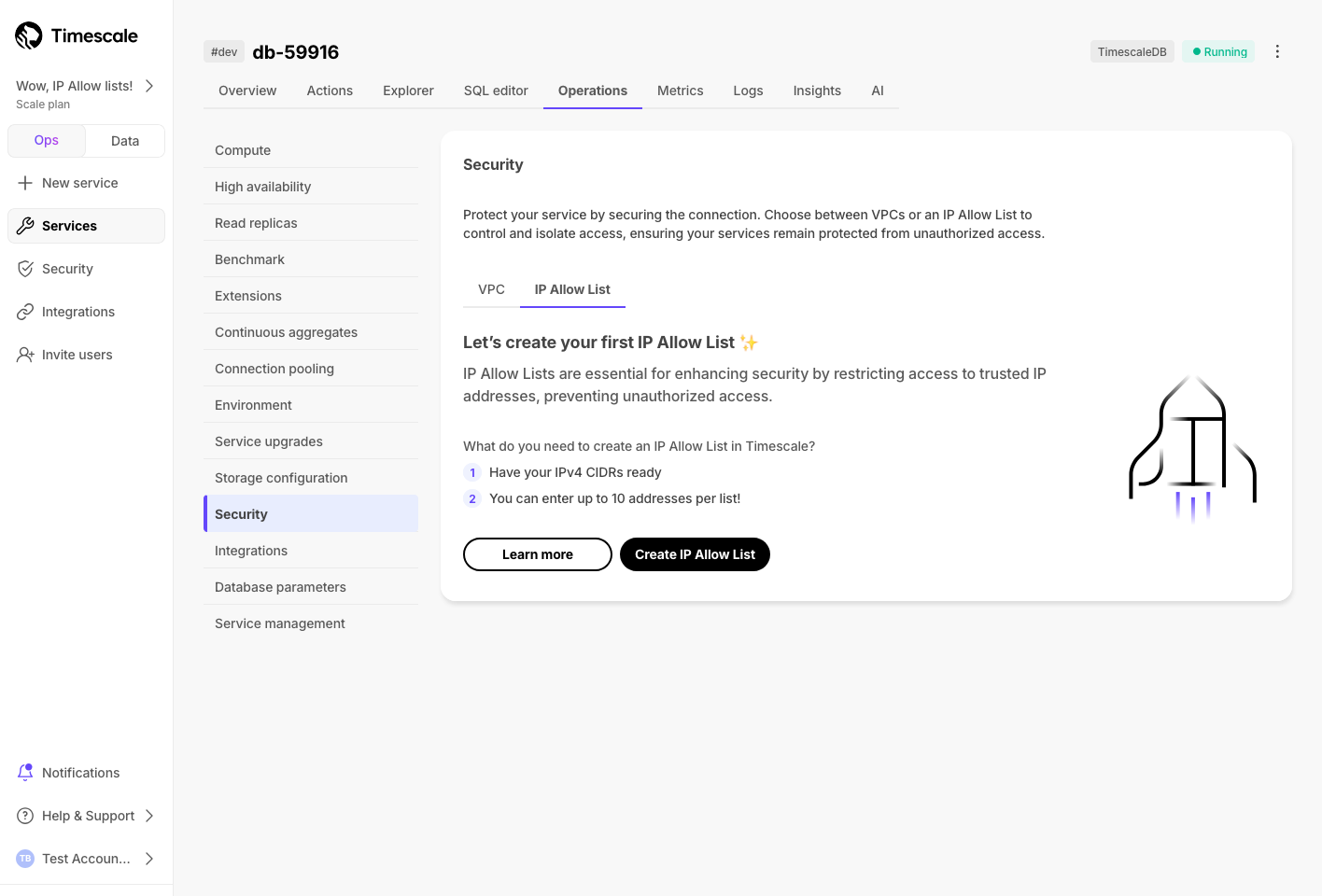

AWS Transit Gateway support is now generally available!

Timescale Cloud now fully supports AWS Transit Gateway, making it even easier to securely connect your database to multiple VPCs across different environments—including AWS, on-prem, and other cloud providers.

With this update, you can establish a peering connection between your Timescale Cloud services and an AWS Transit Gateway in your AWS account. This keeps your Timescale Cloud services safely behind a VPC while allowing seamless access across complex network setups.

🤖 TimescaleDB v2.18 and SQL Assistant Improvements in Data Mode and PopSQL

February 6, 2025

TimescaleDB v2.18 - dense indexes in the columnstore and query vectorization improvements

Starting this week, all new services created on Timescale Cloud use TimescaleDB v2.18. Existing services will be upgraded gradually during their maintenance window.

Highlighted features in TimescaleDB v2.18.0 include:

- The ability to add dense indexes (btree and hash) to the columnstore through the new hypercore table access method.

- Significant performance improvements through vectorization (SIMD) for aggregations that use a `GROUP BY` on one column and/or a `FILTER` clause when querying the columnstore.
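As a rough illustration of the query shape these vectorized aggregations target, the sketch below evaluates `sum(value) FILTER (WHERE ...)` with a single-column `GROUP BY`, one column at a time, over a batch of values. It is plain Python with no SIMD; the function name and sample data are hypothetical, and it mirrors only the SQL semantics (groups with no qualifying rows get NULL, shown as `None`), not TimescaleDB's executor.

```python
from collections.abc import Callable, Hashable
from typing import Optional


def grouped_filtered_sum(
    group_col: list[Hashable],
    value_col: list[float],
    predicate: Callable[[float], bool],
) -> dict[Hashable, Optional[float]]:
    """Column-at-a-time evaluation of
        SELECT g, sum(v) FILTER (WHERE predicate(v)) FROM batch GROUP BY g
    over non-NULL values. Groups with no qualifying rows map to None (SQL NULL)."""
    if len(group_col) != len(value_col):
        raise ValueError("columns must have the same length")
    # Pass 1: evaluate the filter over the whole value column into a mask.
    mask = [predicate(v) for v in value_col]
    # Pass 2: accumulate per group; every group appears, as with GROUP BY.
    sums: dict[Hashable, Optional[float]] = {}
    for g, v, keep in zip(group_col, value_col, mask):
        current = sums.setdefault(g, None)
        if keep:
            sums[g] = v if current is None else current + v
    return sums


if __name__ == "__main__":
    devices = ["a", "b", "a", "c"]
    readings = [1.0, -2.0, 3.0, -4.0]
    print(grouped_filtered_sum(devices, readings, lambda v: v > 0))
```

Separating the filter pass from the accumulation pass is the shape that lends itself to SIMD: the predicate runs over a contiguous column of values before any per-group bookkeeping happens.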